Winnie - AI WhatsApp Chatbot on Linode!

Amit Wani

Hello folks, today I am writing about my new creation, Winnie, an AI-powered WhatsApp chatbot deployed on Linode, a cloud provider, and much more.

I have already written a blog post about how you can configure and set up a WhatsApp chatbot with NodeJS and Express; you can find it here:

In this article, we will look at how I built this whole application end to end: the concept behind it, its components, the services it uses, its deployment, and the automation of the entire workflow.

🤔 Concept

I always wanted to create a chatbot that one can talk to, ask questions, and have funny conversations with. When I found out that WhatsApp had made its Cloud API publicly available to everyone, not only to businesses, I had the idea 💡 to build a chatbot on the most widely used messaging platform on this planet. I decided to use OpenAI's GPT-3, one of the most powerful language models, as the brain of this chatbot. That is how the concept of this application came about.

👁️‍🗨️ Demo

An example of a conversation on WhatsApp using this bot

⚙️ Tech Stack

I tried to use the technologies I thought would add value to this project. Below is each one, along with what it is used for:

- NodeJS & Express: The core backend stack used to create the servers

- Redis: The only database; a key-value store that holds the latest conversation between each user and the chatbot, giving the chatbot better context

- Docker: Used to ship the applications to production, with docker-compose orchestrating the multiple Docker services

- ElasticSearch & Kibana: Used for analytics; logs are written to ElasticSearch and visualised on a Kibana dashboard

- Nginx: Used as an API gateway and reverse proxy that routes requests to the different services; the SSL certificate, generated with Certbot, is also configured here

- Linode: The cloud provider on which all these components run
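The post does not show how the conversation is kept in Redis, so here is a minimal sketch under stated assumptions: each user's history is a capped list of recent turns, which in Redis maps naturally onto `RPUSH` followed by `LTRIM`. An in-memory `Map` stands in for Redis so the example runs on its own; the names `Turn`, `ConversationStore`, `MAX_TURNS`, and the key format `conversation:<phone>` are hypothetical, not taken from the Winnie code.

```typescript
// Hypothetical shape of one conversation turn.
interface Turn {
  role: "user" | "bot";
  text: string;
}

// Hypothetical cap on how many turns are kept per user.
const MAX_TURNS = 6;

// In-memory stand-in for Redis. With a real Redis client, append() would be
// RPUSH key value followed by LTRIM key -MAX_TURNS -1.
class ConversationStore {
  private lists = new Map<string, string[]>();

  // Hypothetical key scheme: one list per WhatsApp phone number.
  private key(phone: string): string {
    return `conversation:${phone}`;
  }

  append(phone: string, turn: Turn): void {
    const k = this.key(phone);
    const list = this.lists.get(k) ?? [];
    list.push(JSON.stringify(turn)); // RPUSH: newest turn goes at the tail
    // LTRIM -MAX_TURNS -1: keep only the newest MAX_TURNS entries
    this.lists.set(k, list.slice(-MAX_TURNS));
  }

  recent(phone: string): Turn[] {
    // LRANGE key 0 -1: all kept turns, oldest to newest
    return (this.lists.get(this.key(phone)) ?? []).map((s) => JSON.parse(s) as Turn);
  }
}

const store = new ConversationStore();
for (let i = 0; i < 8; i++) {
  store.append("15551234567", { role: "user", text: `msg ${i}` });
}
console.log(store.recent("15551234567").length); // prints 6
```

Capping the list keeps both the Redis memory footprint and the prompt size bounded, at the cost of the bot forgetting older parts of a long chat.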

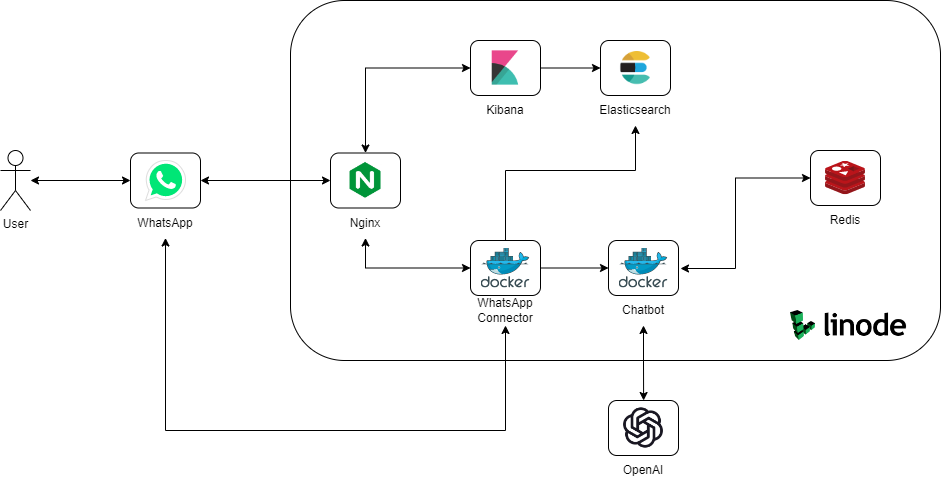

⚒️ Workflow

📈 Process flow

- User sends a message: The user sends a message to the bot's WhatsApp number

- WhatsApp sends the message to the webhook: WhatsApp sends an event to the webhook we have registered (i.e. our Nginx endpoint)

- Nginx routes to the WhatsApp Connector: Nginx routes the request to the WhatsApp Connector, an Express server running as a Docker container

- Server parses the request: The WhatsApp Connector extracts the user's message and pushes a log entry to ElasticSearch

- Forwards to the Chatbot: The WhatsApp Connector calls the Chatbot server, also running as a Docker container, to predict a response to the user's message

- Chatbot calls OpenAI: The Chatbot receives the request, fetches the latest conversation from Redis to give the AI model more context, passes it as a prompt to OpenAI, receives the predicted response, and sends it back to the WhatsApp Connector

- Response: The WhatsApp Connector receives the response, sends it to the user through the WhatsApp API, and pushes a log entry to ElasticSearch
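The parsing and reply steps of the WhatsApp Connector can be sketched as two small functions. This is an illustration, not the connector's actual code: it assumes the standard WhatsApp Cloud API webhook shape (`entry[].changes[].value.messages[]`) and the Cloud API's text-message request body. The names `parseIncomingText` and `buildTextReply` are hypothetical, and the Graph API version `v17.0` is an assumption; use whatever version your app is configured for.

```typescript
// Minimal types for the parts of the WhatsApp Cloud API webhook read here.
interface WebhookPayload {
  entry?: Array<{
    changes?: Array<{
      value?: {
        metadata?: { phone_number_id?: string };
        messages?: Array<{ from: string; type: string; text?: { body: string } }>;
      };
    }>;
  }>;
}

interface IncomingText {
  phoneNumberId: string; // the bot's business number ID, needed to reply
  from: string;          // the user's WhatsApp number
  text: string;
}

// Extract the first text message. Returns null for delivery/read status
// events, images, and anything else that carries no text.
function parseIncomingText(payload: WebhookPayload): IncomingText | null {
  const value = payload.entry?.[0]?.changes?.[0]?.value;
  const msg = value?.messages?.[0];
  const phoneNumberId = value?.metadata?.phone_number_id;
  if (!msg || msg.type !== "text" || !msg.text || !phoneNumberId) return null;
  return { phoneNumberId, from: msg.from, text: msg.text.body };
}

// Build the URL and JSON body for a text reply (API version is an assumption).
function buildTextReply(incoming: IncomingText, body: string, apiVersion = "v17.0") {
  return {
    url: `https://graph.facebook.com/${apiVersion}/${incoming.phoneNumberId}/messages`,
    json: {
      messaging_product: "whatsapp",
      to: incoming.from,
      type: "text",
      text: { body },
    },
  };
}
```

The connector would then POST `json` to `url` with an `Authorization: Bearer <access token>` header. Status updates arrive on the same webhook as messages, which is why the parser returns `null` instead of throwing when no text message is present.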

🧑‍💻 Code

All of this code is available on GitHub.
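The repository holds the real implementation. As a rough illustration of how the Chatbot service might turn the stored conversation into a GPT-3 prompt, here is a sketch assuming a plain completion-style prompt with "Human:" and "Winnie:" speaker labels. The persona line, the labels, and the `buildPrompt` function are hypothetical; the prompt Winnie actually uses is not shown in this post.

```typescript
interface Turn {
  role: "user" | "bot";
  text: string;
}

// Hypothetical persona line placed at the top of every prompt.
const PERSONA =
  "The following is a friendly conversation between a human and Winnie, an AI assistant.";

// Combine the recent history from Redis with the new message into one prompt.
// The trailing "Winnie:" cue asks the model to continue as the bot.
function buildPrompt(history: Turn[], userMessage: string): string {
  const lines = history.map((t) => `${t.role === "user" ? "Human" : "Winnie"}: ${t.text}`);
  lines.push(`Human: ${userMessage}`);
  return [PERSONA, "", ...lines, "Winnie:"].join("\n");
}

console.log(buildPrompt([{ role: "user", text: "Hi" }, { role: "bot", text: "Hello!" }], "How are you?"));
```

When such a prompt is sent to a completion endpoint, passing a stop sequence such as `"Human:"` keeps the model from also writing the user's next line.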

⚓ Deployment

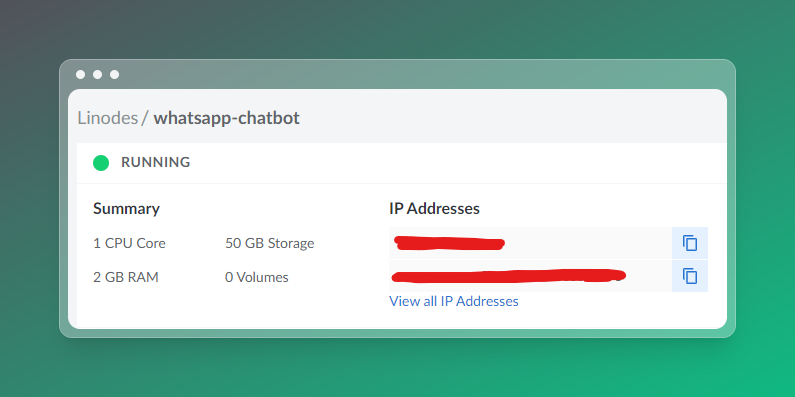

I used Linode to host this entire project.

I created a Linode with 1 CPU, 2 GB of RAM, and 50 GB of storage, as shown below:

Connect to Server

I connected to the server over SSH, using the SSH keys generated when the Linode was created.

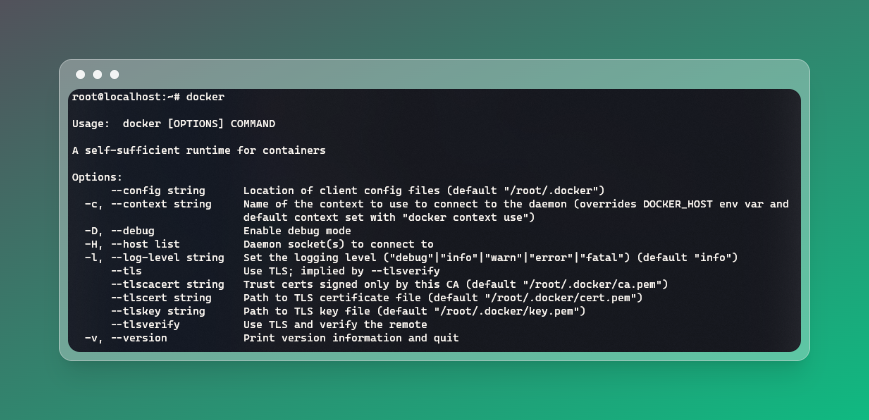

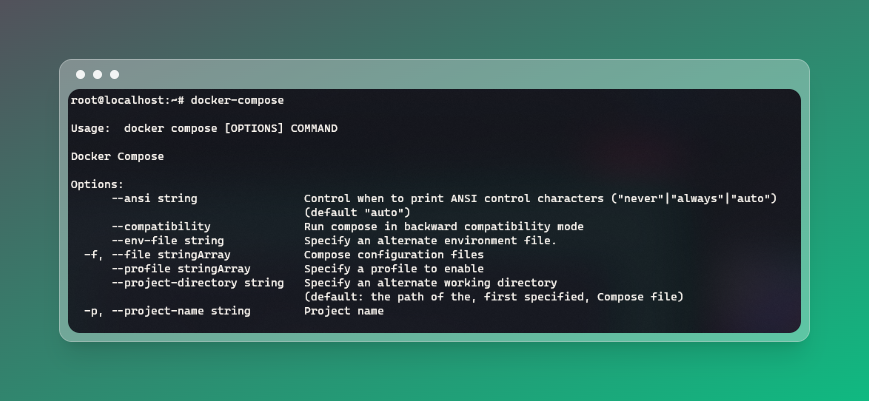

Installing Docker

The first step was installing Docker and docker-compose on the server. Since I chose a Debian machine, I followed the standard installation steps for Debian.

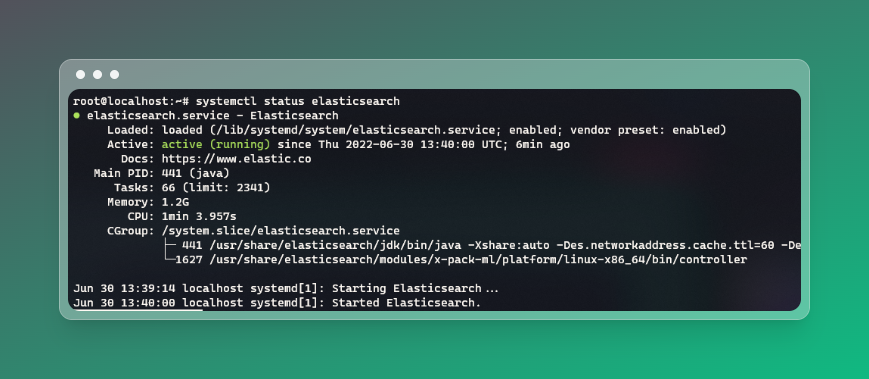

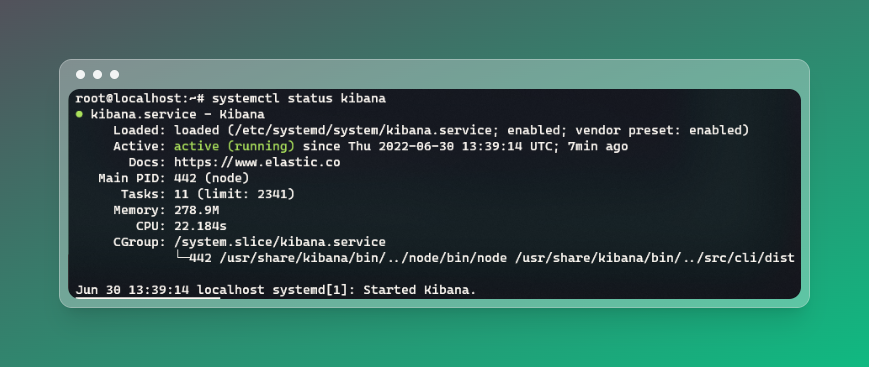

Installing ElasticSearch & Kibana

I installed ElasticSearch and Kibana as services and enabled them to start whenever the server boots. I also had to configure the elasticsearch.yml and kibana.yml files so that the two could talk to each other.

Push Docker Images

Push the latest Docker images of whatsapp-connector and chatbot to a Docker repository.

Configure docker-compose.yml file

Create a docker-compose.yml file and configure it with all the required environment variables, Docker image tags, networks, exposed ports, service dependencies, etc.

Nginx & Certbot

Run the Nginx and Certbot containers using a script to generate SSL certificates for the registered domain.

Start Containers

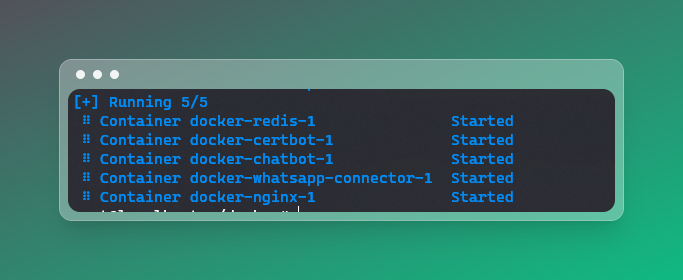

Start all the Docker containers (whatsapp-connector, chatbot, redis, nginx, and certbot) with `docker-compose up -d --force-recreate`.

🚗 CI/CD Pipeline

To automate the whole deployment process every time I make a change, I used GitHub Actions as a CI/CD workflow that deploys my code to the Linode server using Docker.

On every commit to the `main` branch, the workflow does the following:

- Build the Docker images of both microservices

- Push the Docker images of both microservices to the Docker repository

- Update `docker-compose.yml` by replacing environment variables with values from GitHub secrets

- Copy `docker-compose.yml` and `nginx.conf` to the server

- Run the `docker-compose` command to recreate the containers

- Deployment is done
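The "replace environment variables from GitHub secrets" step can be pictured as template substitution. The post does not say which tool the workflow uses, so this is only a sketch of the idea: `${NAME}` placeholders in the compose file are filled from a map of values (in CI, the secrets exposed as environment variables), and a missing value fails the run. The `fillTemplate` function and the `OPENAI_API_KEY` variable name are hypothetical.

```typescript
// Replace ${NAME} placeholders with values from a map. Throws on any
// placeholder without a value, so a missing secret fails the build instead
// of shipping a compose file with an empty variable.
function fillTemplate(template: string, values: Record<string, string | undefined>): string {
  return template.replace(/\$\{([A-Z0-9_]+)\}/g, (_match, name: string) => {
    const value = values[name];
    if (value === undefined) {
      throw new Error(`Missing value for ${name}`);
    }
    return value;
  });
}

// Example compose fragment; the variable name is hypothetical.
const template = [
  "services:",
  "  chatbot:",
  "    environment:",
  "      - OPENAI_API_KEY=${OPENAI_API_KEY}",
].join("\n");

// In a workflow step the values could come from process.env.
console.log(fillTemplate(template, { OPENAI_API_KEY: "sk-test" }));
```

Docker Compose can also interpolate `${VAR}` from the shell environment or a `.env` file when the containers start, so rewriting the file in CI is one option among several.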

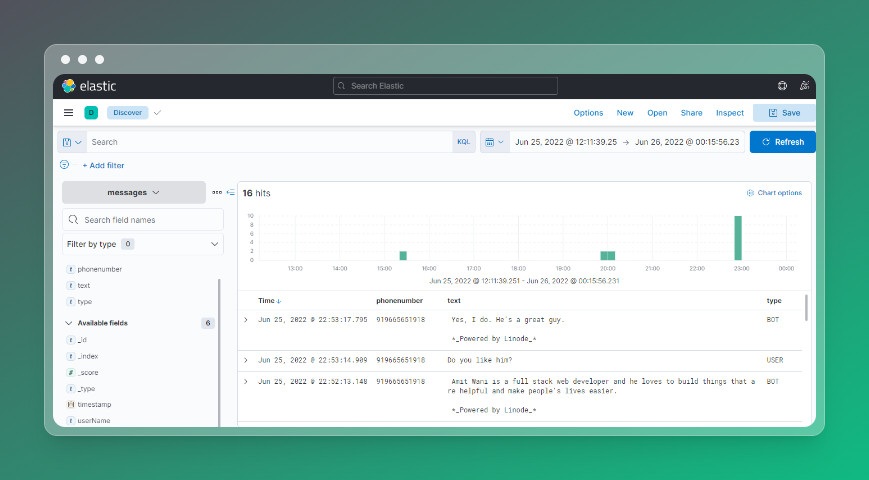

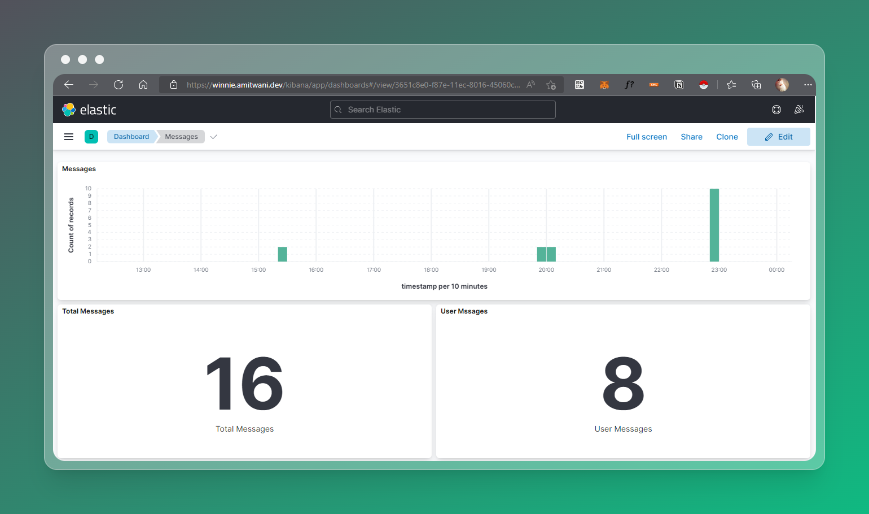

📊 Analytics

Since the logs are pushed into an ElasticSearch index, we can visualize this data in Kibana.

The Kibana UI is exposed through Nginx, with HTTP authentication in front of it.

In Kibana, we can see logs of all the messages exchanged between users and the bot.

I also created a dashboard to visualize some statistics.
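A dashboard like this is built on ElasticSearch aggregations over the logged messages. The sketch below assumes a hypothetical log document (`MessageLog`, with `timestamp`, `direction`, `phone`, and `text` fields; the real index layout is not shown in the post) and builds a search body that counts messages per day, split by direction, using the standard `date_histogram` and `terms` aggregations. The `.keyword` suffix assumes ElasticSearch's default dynamic mapping, which indexes strings as `text` with a `keyword` sub-field.

```typescript
// Hypothetical shape of one log document indexed per message.
interface MessageLog {
  timestamp: string; // ISO 8601, detected as a date by default dynamic mapping
  direction: "incoming" | "outgoing";
  phone: string;
  text: string;
}

function makeLog(
  direction: MessageLog["direction"],
  phone: string,
  text: string,
  at: Date = new Date(),
): MessageLog {
  return { timestamp: at.toISOString(), direction, phone, text };
}

// Search request body: messages per day, split by direction.
// size: 0 skips returning individual hits; only the buckets come back.
function messagesPerDayQuery() {
  return {
    size: 0,
    aggs: {
      per_day: {
        date_histogram: { field: "timestamp", calendar_interval: "day" },
        aggs: { by_direction: { terms: { field: "direction.keyword" } } },
      },
    },
  };
}

console.log(JSON.stringify(makeLog("incoming", "15551234567", "Hi")));
console.log(JSON.stringify(messagesPerDayQuery()));
```

Kibana visualizations issue the same kind of aggregation behind the scenes, so a query like this is mainly useful for checking the numbers a dashboard panel shows.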

🔮 Future Scope

Future features may add more conversational abilities to this bot.

The bot could also be integrated with other systems, for example to serve your own business use case.

A much better landing page than the current one (https://winnie.amitwani.dev).

🙏 Conclusion

I really enjoyed making this project. It is probably one of my bigger projects, and it let me work with a lot of technologies in one place. Special thanks to Linode and Hashnode for organizing this hackathon.

This project was created as a submission for the Linode x Hashnode hackathon.


Written by

Amit Wani

𝗪𝗔𝗥𝗥𝗜𝗢𝗥! Full Stack Developer | SDE Reliance Jio 🖥️ Tech Enthusiastic 💻 Man Utd 🔴 Sachin & Dravid fan! ⚾ Photography 📸 Get Hands Dirty on Keyboard!