A template to set up a multi-tenant Kubernetes cluster

Ravi Sharma

About Multi-Tenancy

If you have used a Kubernetes cluster, your application has probably shared it with applications that belong to other groups in your org or that serve entirely different purposes. When different types of users access the same cluster resources, it becomes very important to draw boundaries between applications and to define how teams deploy to and access these multi-tenant clusters.

Thankfully, the Kubernetes multi-tenancy group recently came out with guidelines on how best to organize a multi-tenant cluster. Using the principles defined by that group, I am attempting to provide a template that can be used when a cluster is created. This approach can help with onboarding teams and their applications, and it can be standardized so that cluster resources are used efficiently.

Disclaimer: This is by no means an exhaustive template. It can serve as a starting point, and you can modify and extend it based on your requirements. I would definitely appreciate it if you shared those enhancements with me!

A template for a multi-tenant cluster

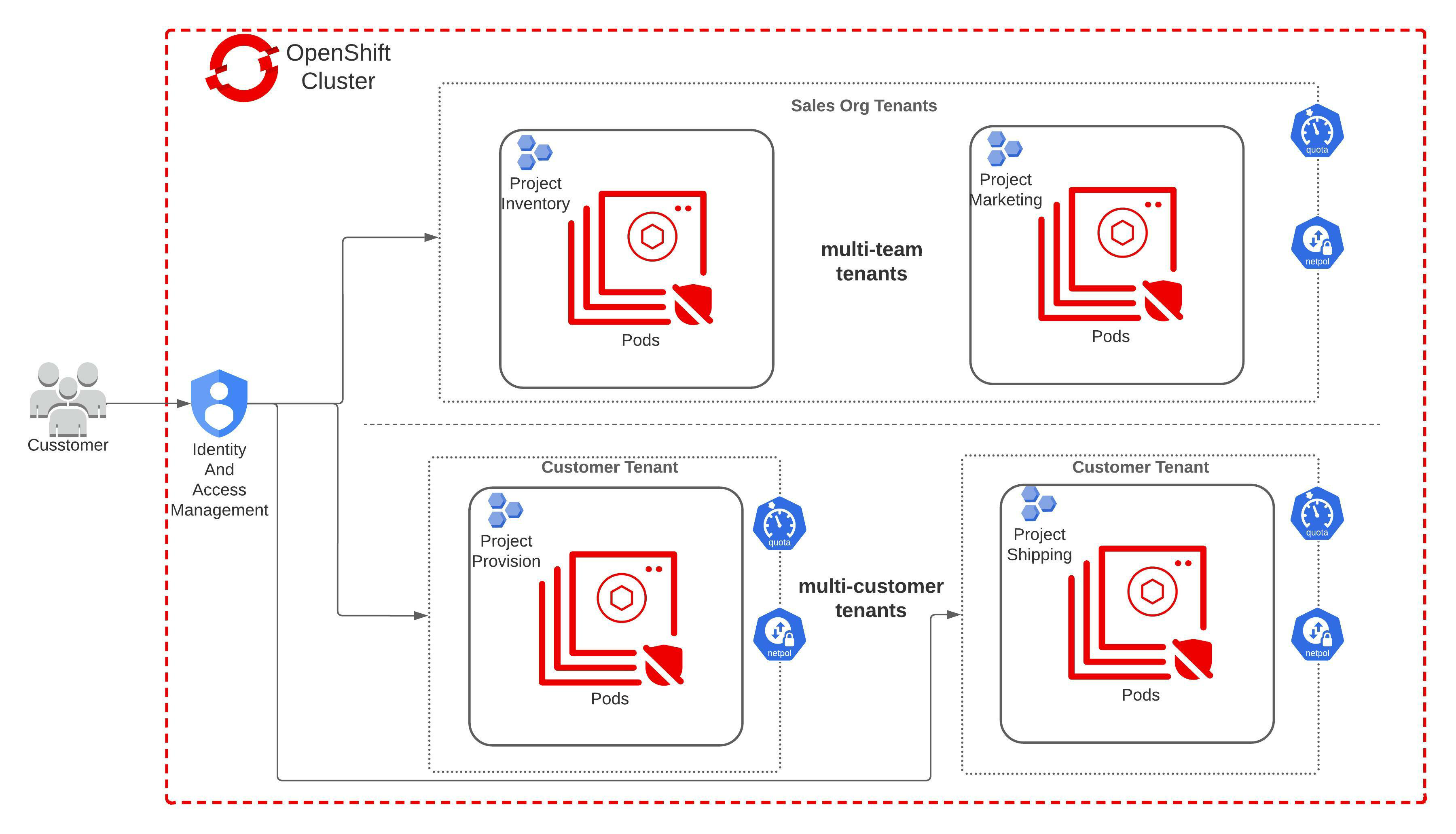

Imagine we have a cluster that needs to host multi-team and/or multi-customer tenants. A sample architecture might look like the image shown here, in which I have tried to list the objects a cluster needs in order to be multi-tenant.

Now, let's start creating our cluster with these hypothetical tenants. We will be using a Red Hat OpenShift cluster, which is free to use.

Let's begin...

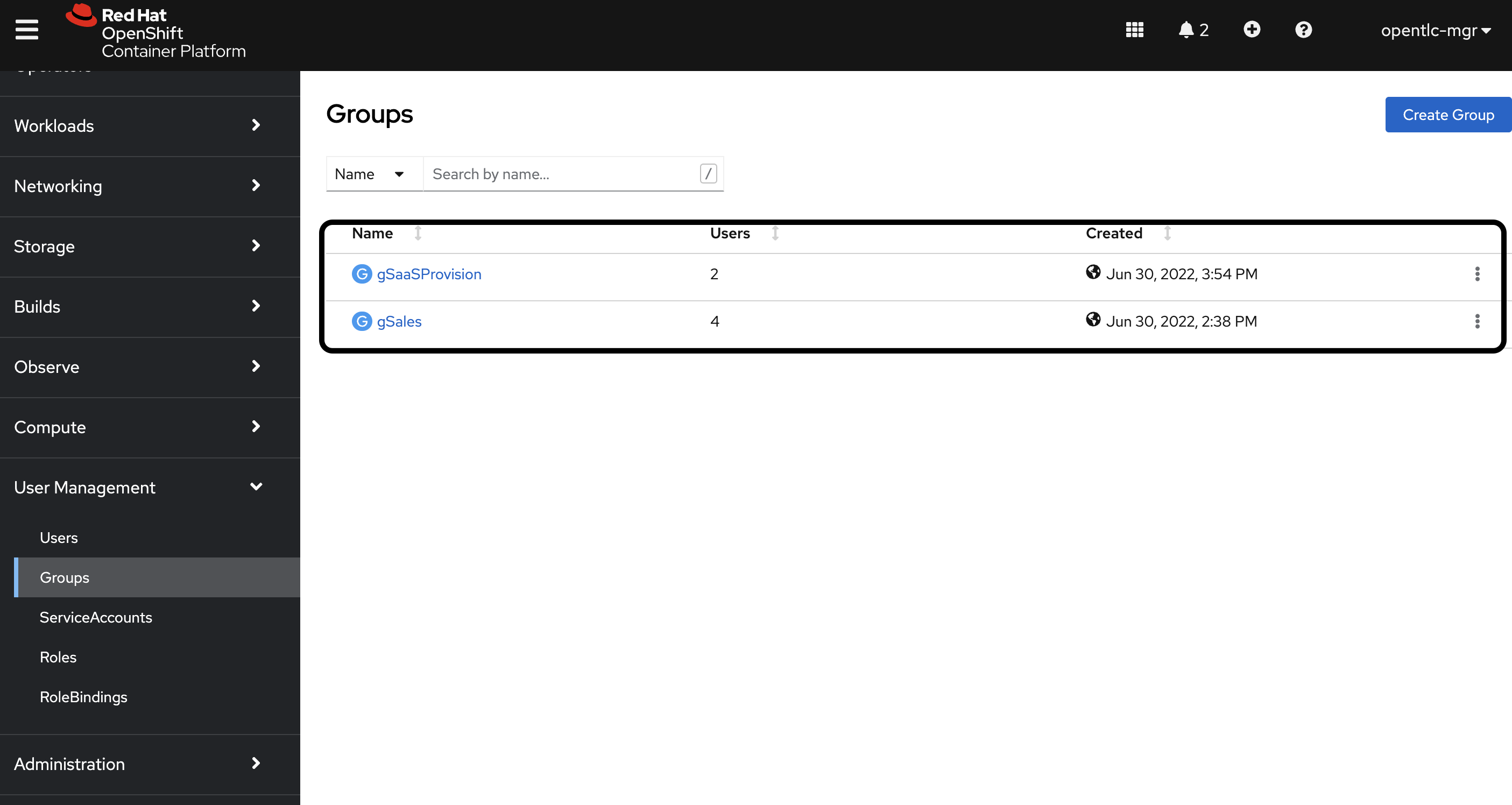

Create a group for the Sales org tenant and another for a SaaS customer tenant, each with some dummy users, via the console. To do this, log in as an admin at the console URL provided after you create your cluster. In the Administrator perspective, you will find User Management in the left-hand pane.
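The same groups can also be described declaratively instead of being created by hand in the console. The following is a minimal sketch, not the exact setup used here: the group names `sales-team` and `saas-customer-a` and all user names are hypothetical placeholders.

```yaml
# Hypothetical group for the Sales org tenant
apiVersion: user.openshift.io/v1
kind: Group
metadata:
  name: sales-team          # assumed name, pick your own
users:                      # dummy users for illustration only
  - sales-user1
  - sales-user2
---
# Hypothetical group for a SaaS customer tenant
apiVersion: user.openshift.io/v1
kind: Group
metadata:
  name: saas-customer-a     # assumed name, pick your own
users:
  - customer-a-user1
```

Saved to a file (say `groups.yaml`, also a made-up name), a cluster admin could create both groups with `oc apply -f groups.yaml`.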

Next, use the project template below to create multi-team or multi-customer tenants. I have set some values in this template as a reference; you will most likely need to modify them based on your requirements for a tenant.

```yaml
apiVersion: template.openshift.io/v1
kind: Template
metadata:
  creationTimestamp: null
  name: multitenant-project-request
objects:
  # The project (namespace) that holds the tenant's workloads
  - apiVersion: project.openshift.io/v1
    kind: Project
    metadata:
      annotations:
        openshift.io/description: ${PROJECT_DESCRIPTION}
        openshift.io/display-name: ${PROJECT_DISPLAYNAME}
        openshift.io/requester: ${PROJECT_REQUESTING_USER}
      creationTimestamp: null
      name: ${PROJECT_NAME}
    spec: {}
    status: {}
  # Give the tenant's group the edit role inside its own project
  - apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      creationTimestamp: null
      name: edit-binding
      namespace: ${PROJECT_NAME}
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: edit
    subjects:
      - apiGroup: rbac.authorization.k8s.io
        kind: Group
        name: ${PROJECT_GROUP}
  # Cap the total resources the tenant can consume
  - apiVersion: v1
    kind: ResourceQuota
    metadata:
      name: resource-quota
      namespace: ${PROJECT_NAME}
    spec:
      hard:
        pods: "10"
        requests.cpu: "4"
        requests.memory: 8Gi
        limits.cpu: "6"
        limits.memory: 12Gi
        requests.storage: 100Gi
        persistentvolumeclaims: "100"
        # Per-StorageClass quotas use the <class>.storageclass.storage.k8s.io/ prefix
        gold.storageclass.storage.k8s.io/requests.storage: 3Gi
        gold.storageclass.storage.k8s.io/persistentvolumeclaims: "5"
        silver.storageclass.storage.k8s.io/requests.storage: 2Gi
        silver.storageclass.storage.k8s.io/persistentvolumeclaims: "3"
        bronze.storageclass.storage.k8s.io/requests.storage: 1Gi
        bronze.storageclass.storage.k8s.io/persistentvolumeclaims: "1"
  # Defaults for containers that do not declare their own requests and limits
  - apiVersion: v1
    kind: LimitRange
    metadata:
      name: mem-limit-range
      namespace: ${PROJECT_NAME}
    spec:
      limits:
        - default:
            memory: 2Gi
            cpu: "2"
          defaultRequest:
            memory: 1Gi
            cpu: "1"
          type: Container
  # Allow traffic from the OpenShift ingress controller
  - apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: allow-from-openshift-ingress
      namespace: ${PROJECT_NAME}
    spec:
      ingress:
        - from:
            - namespaceSelector:
                matchLabels:
                  policy-group.network.openshift.io/ingress: ""
      podSelector: {}
      policyTypes:
        - Ingress
  # Allow traffic from OpenShift monitoring
  - apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: allow-from-openshift-monitoring
      namespace: ${PROJECT_NAME}
    spec:
      ingress:
        - from:
            - namespaceSelector:
                matchLabels:
                  network.openshift.io/policy-group: monitoring
      podSelector: {}
      policyTypes:
        - Ingress
  # Allow pods in the same project to talk to each other
  - apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: allow-same-namespace
      namespace: ${PROJECT_NAME}
    spec:
      podSelector: {}
      ingress:
        - from:
            - podSelector: {}
parameters:
  - name: PROJECT_NAME
  - name: PROJECT_DISPLAYNAME
  - name: PROJECT_DESCRIPTION
  - name: PROJECT_GROUP
  - name: PROJECT_REQUESTING_USER
```

Apply the above template to the `openshift-config` namespace.

```
oc create -f multiTenantTemplate.yaml -n openshift-config
```

This includes all the aspects required for a cluster to host multi-tenant projects. In addition to ResourceQuota, LimitRange, and NetworkPolicy, OpenShift also provides Security Context Constraints (SCCs), which by default get applied to Pods before their creation. You can look at default policies applied here.
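To see how the LimitRange and ResourceQuota in the template interact, consider a pod that declares no resources of its own. This is only an illustrative sketch: the pod name, the `sales-app` project, and the image are assumptions, not part of the template.

```yaml
# Hypothetical pod that sets no resource requests or limits of its own
apiVersion: v1
kind: Pod
metadata:
  name: defaults-demo        # assumed name
  namespace: sales-app       # assumed tenant project
spec:
  containers:
    - name: app
      image: registry.access.redhat.com/ubi9/ubi-minimal   # any small image would do
      command: ["sleep", "3600"]
      # No resources block here. At admission, the LimitRange fills in:
      #   requests: cpu 1, memory 1Gi   (from defaultRequest)
      #   limits:   cpu 2, memory 2Gi   (from default)
```

With these reference values, each such container counts 2 CPUs against `limits.cpu: "6"`, so the quota would reject a fourth pod of this kind long before the `pods: "10"` cap is reached. That is worth keeping in mind when you tune the defaults and the quota together.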

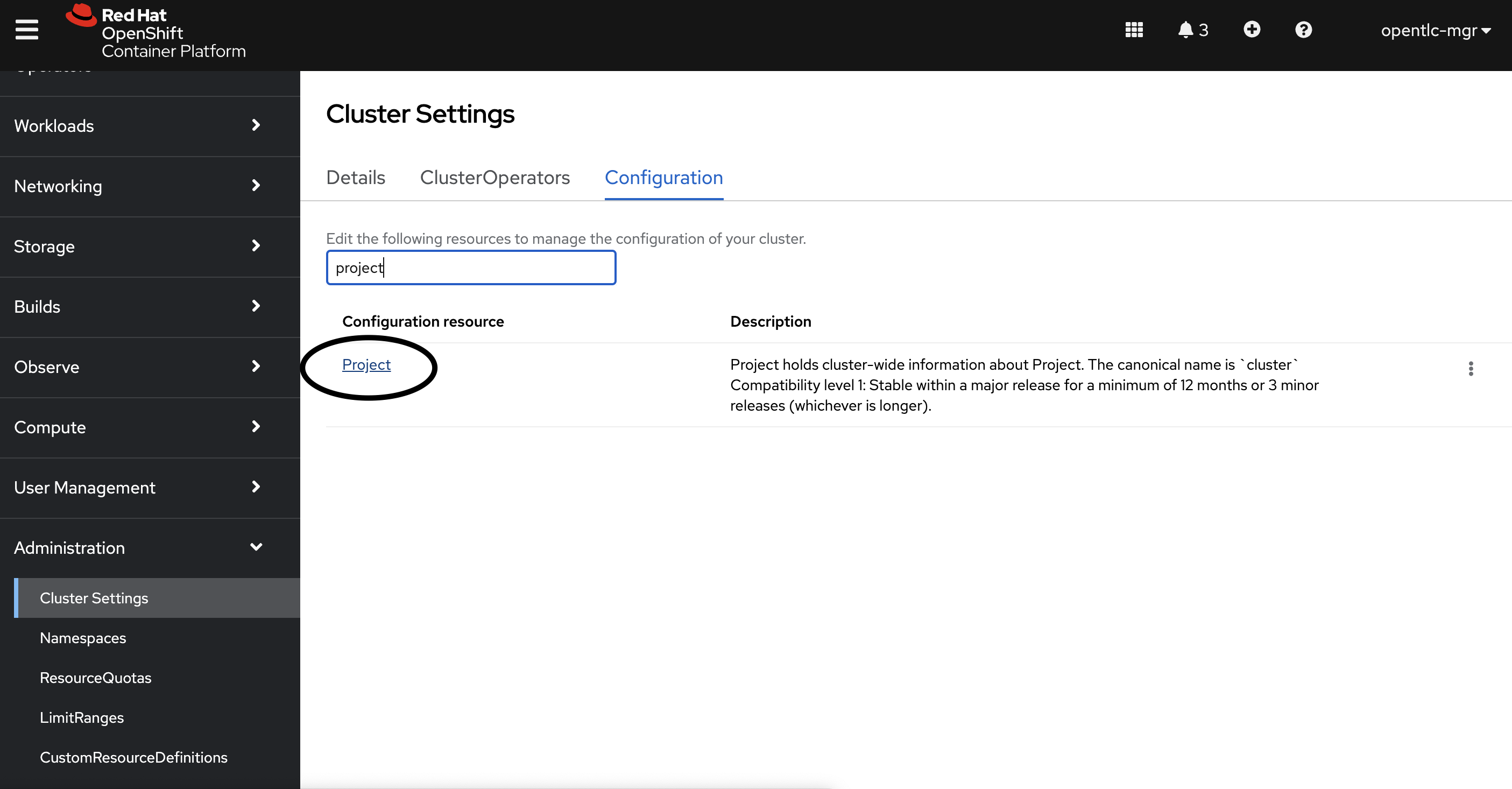

Once you have the template created, locate the `Project` configuration under Administration, Cluster Settings, as shown in the screenshot below.

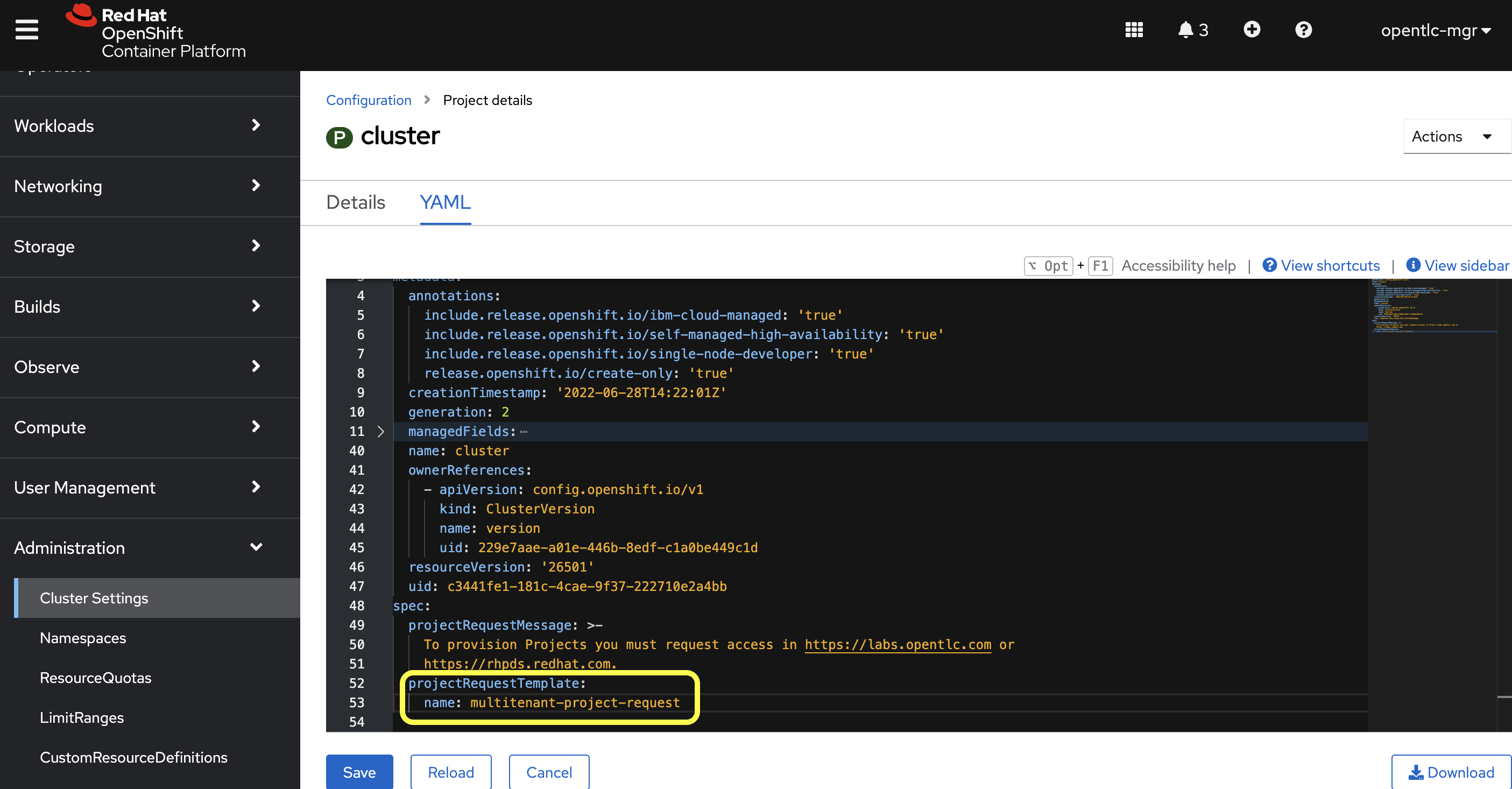

Update the `spec` section to include the `projectRequestTemplate` and `name` parameters, and set the name to `multitenant-project-request`, which is the project template created in step 2. The default name is `project-request`.
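For reference, after this change the cluster-wide project configuration should look roughly like the following. This is a sketch of the resulting object and omits any other fields your cluster may already have set.

```yaml
apiVersion: config.openshift.io/v1
kind: Project
metadata:
  name: cluster                            # the single cluster-wide Project config
spec:
  projectRequestTemplate:
    name: multitenant-project-request      # template created in openshift-config
```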

With our project template we also created a RoleBinding, and in it we assigned only the `edit` ClusterRole to the Groups. This allows users to modify resources in their own project only and does not give them the power to view or modify roles or bindings. You can read more about different roles available here.
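To make the parameter substitution concrete, this is roughly what the RoleBinding from the template looks like once it is rendered. The values `sales-app` for `PROJECT_NAME` and `sales-team` for `PROJECT_GROUP` are hypothetical and stand in for whatever your tenant uses.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: edit-binding
  namespace: sales-app        # assumed value of ${PROJECT_NAME}
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: edit                  # built-in role: can modify most objects, not roles or bindings
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: Group
    name: sales-team          # assumed value of ${PROJECT_GROUP}
```

Because the binding is namespaced, members of the group get `edit` rights in that one project only, even though `edit` itself is defined as a ClusterRole.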

Once you have set up the above structure and configurations, it becomes very easy for anyone to onboard new projects in a cluster. In certain cases, you can also configure an identity provider for authentication and authorization while creating groups. Perhaps in my next post, I can show how we can use identity providers and sync groups with users in our cluster.

If you have any other suggestions or comments feel free to leave them below!

Written by

Ravi Sharma

Senior Solutions Architect with RedHat, helping teams move to Cloud, adopt the best practices, and keep experimenting and learning.