Implementing rate limiting in Node.js using the token bucket algorithm

Davis Omokaro

I recently interviewed for a position and was given a system design question that tested my knowledge of preventing network congestion in an application. Part of the challenge was to propose a high-level architecture that prevents it, and part of my solution was geared towards throttling. At the center of it all was one key element: rate limiting, which is what this short article is about.

Prerequisites

- Some knowledge of throttling in an application

- Knowledge of Node.js and its runtime environment

What is a rate limiter

So as not to reinvent the wheel, I'll quote directly from Cloudflare:

Rate limiting is a strategy for limiting network traffic. It puts a cap on how often someone can repeat an action within a certain timeframe – for instance, trying to log in to an account.

This leads to the question: why should we implement rate limiting in our application, and what do we gain from it?

Why do we need a rate limiter

Different teams and organisations have different reasons for adding rate limiting to the product they're shipping. The most common one is to keep a particular endpoint from being bombarded with too many requests at the same time. Other reasons include:

Keeping costs under control, especially when using cloud providers

Businesses that rely primarily on cloud providers use rate limiting to control their OpEx (operating expenditure), because cloud services are billed on a pay-as-you-go model. With a cap on the request rate, they can estimate the cost associated with requests to their applications.

Helping to prevent DDoS attacks

Rate limiting can serve as a defence against DDoS attacks, whether they are intentional or not. It isn't sufficient on its own, but it can help prevent resource starvation.

Achieving rate limiting

There are different ways to achieve rate limiting in your application. Some of them are:

- Sliding window

- Leaky bucket algorithm

- Token bucket algorithm

We're going to be focusing on the token bucket algorithm.

What is a token bucket algorithm

Let's picture an imaginary bucket that starts out empty and holds the tokens that requests spend. The bucket has a maximum capacity: the number of tokens it can hold at any one time. Aside from the maximum capacity, it also has a refill duration, the interval at which it accepts new tokens as requests spend the ones already in it. You choose the refill configuration to suit your own parameters. For example, with a maximum bucket capacity of 8 tokens and a request that costs 2 tokens, you might set the refill duration to 2/8 = 0.25 seconds, so a token is put into the bucket every 0.25 seconds. What happens when the client spends tokens faster than the bucket gets refilled? The request is rejected with a 429 HTTP status code, whose standard message is "Too Many Requests".
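To make the description above concrete, here is a minimal sketch of a token bucket in plain JavaScript. The `TokenBucket` class, its method names and the injectable clock are my own illustrative choices, not part of any library, and the numbers are the ones from the example (capacity 8, one token every 0.25 seconds, 2 tokens per request).

```javascript
// Minimal token bucket sketch (hypothetical class, not part of any library).
class TokenBucket {
  // capacity: maximum tokens the bucket can hold
  // refillIntervalMs: time it takes to add one token
  // now: clock returning milliseconds (injectable so the demo is deterministic)
  constructor(capacity, refillIntervalMs, now = Date.now) {
    this.capacity = capacity;
    this.refillIntervalMs = refillIntervalMs;
    this.now = now;
    this.tokens = 0; // starts out empty, as in the description above
    this.lastRefill = now();
  }

  // Add the tokens earned since the last refill, never exceeding capacity.
  refill() {
    const elapsed = this.now() - this.lastRefill;
    const earned = Math.floor(elapsed / this.refillIntervalMs);
    if (earned > 0) {
      this.tokens = Math.min(this.capacity, this.tokens + earned);
      // Advance by whole intervals only, so partial progress is not lost.
      this.lastRefill += earned * this.refillIntervalMs;
    }
  }

  // Spend `cost` tokens if available; returns whether the request may proceed.
  tryConsume(cost = 1) {
    this.refill();
    if (this.tokens < cost) return false; // the caller would answer with HTTP 429
    this.tokens -= cost;
    return true;
  }
}

// Walk-through with a fake clock: capacity 8, one token per 250 ms, cost 2.
let fakeTime = 0;
const bucket = new TokenBucket(8, 250, () => fakeTime);

console.log(bucket.tryConsume(2)); // false: the bucket is still empty
fakeTime = 500;
console.log(bucket.tryConsume(2)); // true: two tokens were added in 500 ms
console.log(bucket.tryConsume(2)); // false: the bucket is empty again
```

Refilling lazily, only when a request arrives, avoids running a timer per client; the bucket simply works out how many tokens it would have received since it was last touched.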

Implementing token bucket algorithm in NodeJs

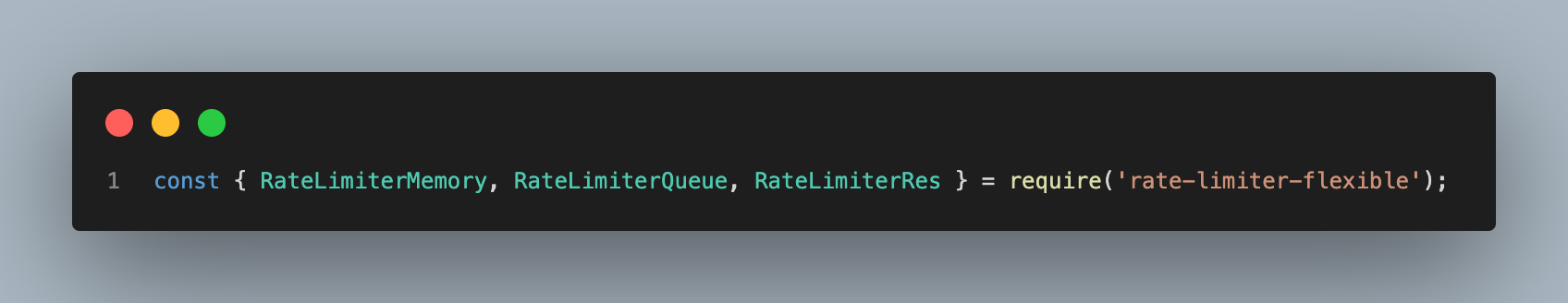

To implement this, I used a simple "Hello world" server built with Express, a Node.js web framework, and an npm library called 'rate-limiter-flexible'.

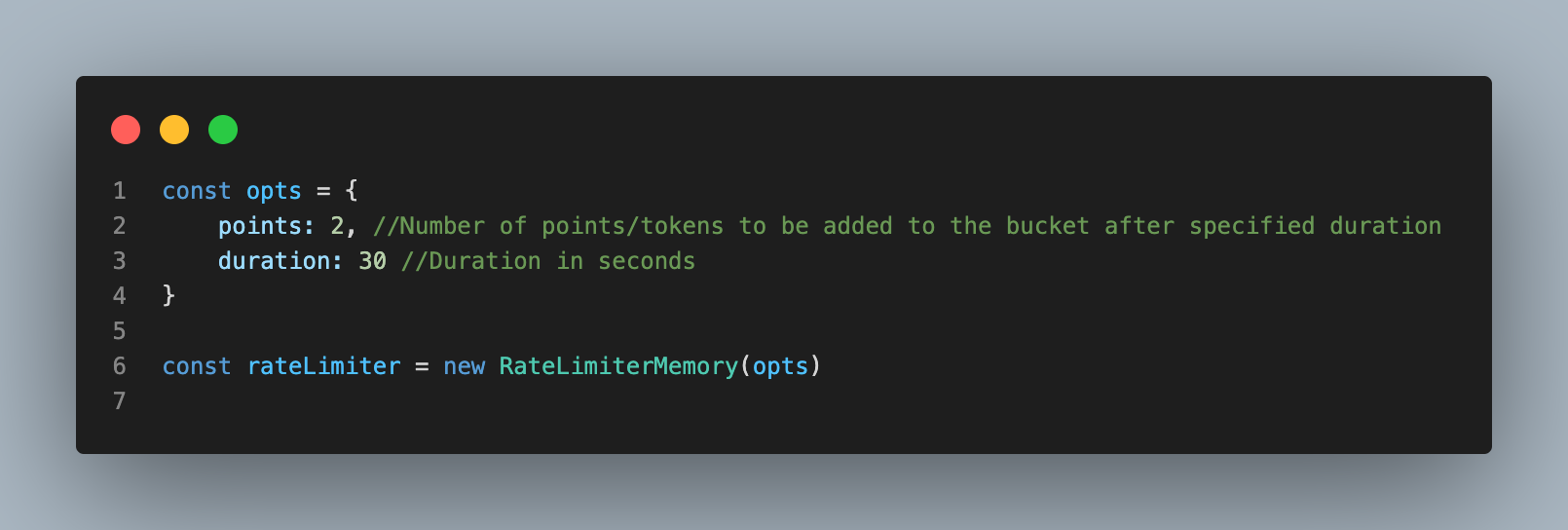

You then need to initialize it and pass in the following options:

Points: The number of tokens/points that can be consumed during the specified interval.

Duration: The length of that interval, in seconds, after which the tokens/points are replenished.

Next, we set it up in front of the route we want to rate-limit. Add the following code to that route:

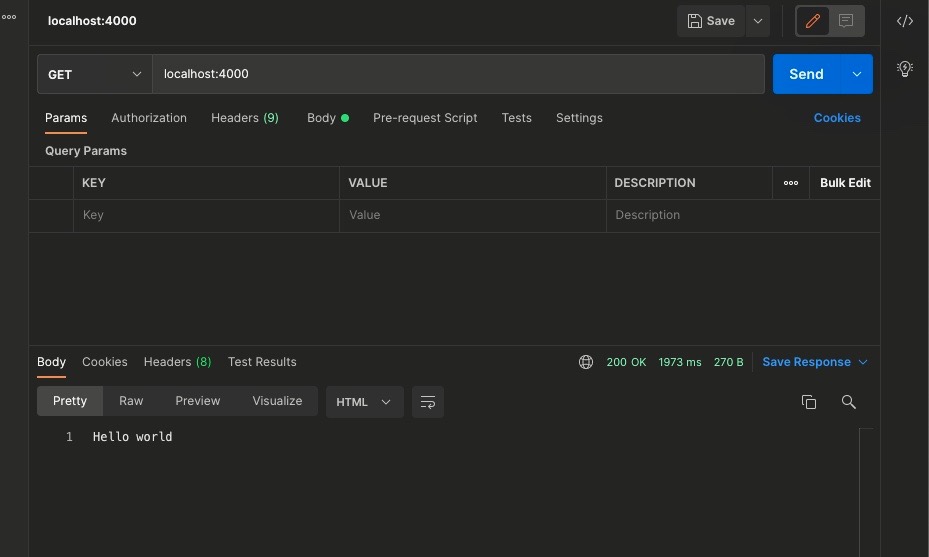

In this instance we're rate-limiting the homepage route (this works for any route you intend to limit). On line 2 we call the consume method (a promise-based method), which takes two parameters: key and points. Here the key is the client's IP address, and points is the number of tokens/points to consume per request. If the promise resolves, we can write logic to allow the request through; in our example we respond with "Hello world". You can also use the resolved value (rateLimiterRes) in whatever logic you want to implement.

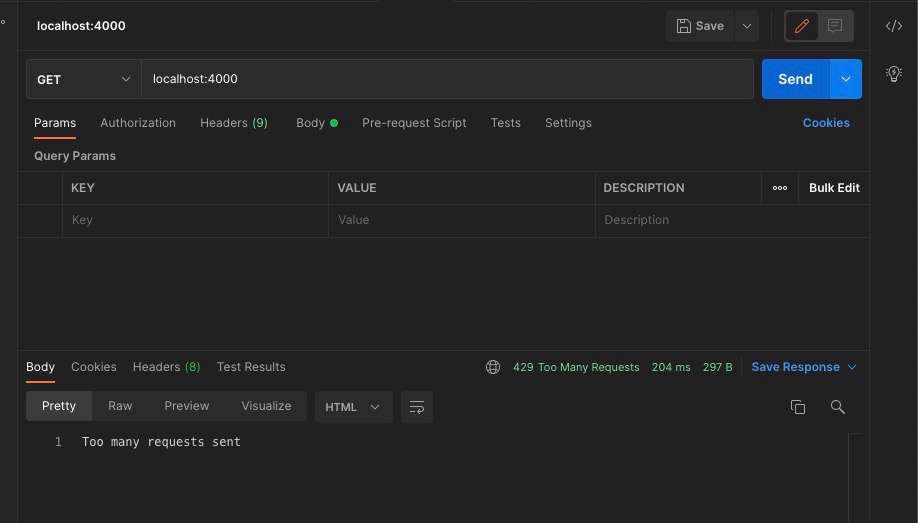

On the other hand, if the promise is rejected, the client is served the 429 HTTP error code. Here is what both outcomes look like:

We get a 200 OK response when the request completes successfully.

When the request gets rate-limited, we get a 429 error.
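The consume-then-respond flow described above can be sketched as a small middleware factory. This is an illustration under stated assumptions, not the exact code from the screenshots: `createRateLimitMiddleware` is a hypothetical helper, and it accepts any limiter object that exposes a promise-based `consume(key, points)`, which is how rate-limiter-flexible's limiters behave. The `Retry-After` header is an optional extra that the article's example does not use.

```javascript
// Hypothetical helper: builds an Express-style middleware around any limiter
// that exposes a promise-based consume(key, points) method.
function createRateLimitMiddleware(limiter, pointsPerRequest = 1) {
  return function rateLimit(req, res, next) {
    return limiter
      .consume(req.ip, pointsPerRequest) // key = the client's IP address
      .then(
        () => next(), // enough points left: let the request through
        (rejection) => {
          // A real Error means the limiter itself failed, not that the
          // client was limited, so hand it to the error handler.
          if (rejection instanceof Error) return next(rejection);
          // When the limit is hit, rate-limiter-flexible rejects with an
          // object carrying msBeforeNext; fall back to 1 second otherwise.
          const ms = rejection && rejection.msBeforeNext;
          const retryAfter = Number.isFinite(ms) ? Math.max(1, Math.ceil(ms / 1000)) : 1;
          res.set('Retry-After', String(retryAfter));
          res.status(429).send('Too Many Requests');
        }
      );
  };
}

// Wiring it up (assumes `express` and `rate-limiter-flexible` are installed;
// the option values 5 and 1 are illustrative, not taken from the article):
//
// const express = require('express');
// const { RateLimiterMemory } = require('rate-limiter-flexible');
// const app = express();
// const limiter = new RateLimiterMemory({ points: 5, duration: 1 });
// app.get('/', createRateLimitMiddleware(limiter), (req, res) => {
//   res.send('Hello world');
// });
// app.listen(3000);
```

Passing the limiter in as an argument keeps the route code the same whether the limiter is in-memory or backed by a shared store.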

In conclusion, one of the main reasons this library is a favorite of mine is that it lets you share state with other servers and processes, and it also works in a distributed environment.
