System Design

Quang Huong Nguyen


Benefits of using an API rate limiter:

- Prevent resource starvation caused by Denial of Service (DoS) attacks.

- Reduce cost. Limiting excess requests means fewer servers are needed and more resources can be allocated to high-priority traffic.

- Prevent the server from being overloaded.

Process:

Step 1 - Establish the design scope: server-side rate limiter or client-side rate limiter.

Step 2 - Propose a high-level design and get buy-in:

- Gateway

- Algorithms for rate limiting

Token bucket (parameters: bucket size, refill rate)

Pros:

- The algorithm is easy to implement.
- Memory efficient.
- Token bucket allows a burst of traffic for short periods. A request can go through as long as there are tokens left.

Cons:

- The two parameters, bucket size and token refill rate, can be challenging to tune properly.

Leaking bucket (FIFO queue; parameters: bucket size, outflow rate)

Pros:

- Memory efficient given the limited queue size.
- Requests are processed at a fixed rate, so it suits use cases that need a stable outflow rate.

Cons:

- A burst of traffic fills up the queue with old requests, and if they are not processed in time, recent requests will be rate limited.
- There are two parameters in the algorithm, and it might not be easy to tune them properly.

Other algorithms:

- Fixed window counter
- Sliding window log
- Sliding window counter
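The token bucket idea can be made concrete with a short sketch. This is only an illustration under assumptions, not a production implementation: the class name `TokenBucket`, the method `allow`, and the injectable `clock` parameter are hypothetical names chosen here, and the refill is computed lazily from elapsed time rather than by a background timer.

```python
import time


class TokenBucket:
    """Minimal token bucket with the two parameters from the notes:
    bucket size and refill rate (tokens per second)."""

    def __init__(self, bucket_size, refill_rate, clock=time.monotonic):
        self.bucket_size = bucket_size    # maximum number of tokens held
        self.refill_rate = refill_rate    # tokens added per second
        self.clock = clock                # assumption: injectable so tests can fake time
        self.tokens = float(bucket_size)  # the bucket starts full
        self.last_refill = clock()

    def _refill(self):
        # Add the tokens earned since the last call, capped at the bucket size.
        now = self.clock()
        elapsed = now - self.last_refill
        self.tokens = min(self.bucket_size, self.tokens + elapsed * self.refill_rate)
        self.last_refill = now

    def allow(self):
        """Consume one token if one is left; otherwise reject the request."""
        self._refill()
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

With `bucket_size=3` and `refill_rate=1`, a burst of three requests passes immediately and a fourth is rejected until about one second later, which is the short-burst behaviour listed under the pros. Choosing those two numbers for real traffic is the tuning difficulty listed under the cons.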

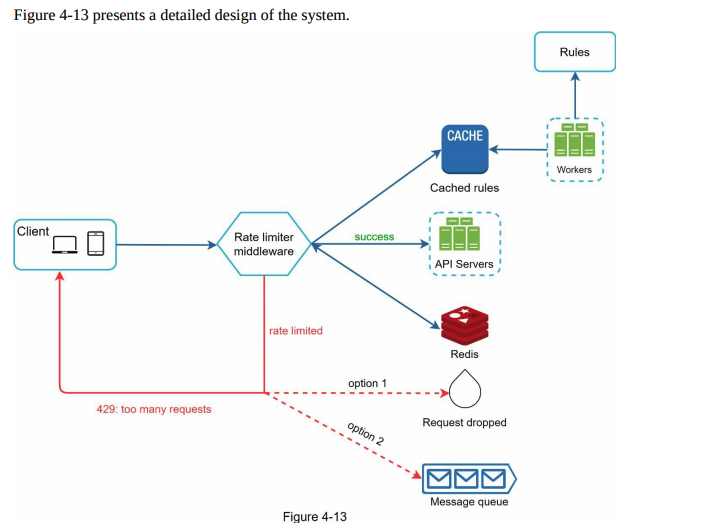

High-level architecture

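A counter keeps track of how many requests are sent from the same user, IP address, etc. The counters are stored in a Redis cache and updated with two commands: INCR increases the stored counter by 1, and EXPIRE sets a timeout after which the counter is deleted automatically.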
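The INCR and EXPIRE pattern can be sketched as a fixed window counter. To keep the example runnable without a Redis server, it uses a small in-memory stand-in: `InMemoryStore`, `allow_request`, and the `rate:` key prefix are hypothetical names introduced here, and the stand-in mimics only the two commands the notes mention.

```python
import time


class InMemoryStore:
    """Hypothetical stand-in for a Redis cache; mimics only INCR and EXPIRE."""

    def __init__(self, clock=time.monotonic):
        self.clock = clock
        self.values = {}     # key -> counter value
        self.deadlines = {}  # key -> time at which the key expires

    def _drop_if_expired(self, key):
        deadline = self.deadlines.get(key)
        if deadline is not None and self.clock() >= deadline:
            self.values.pop(key, None)
            self.deadlines.pop(key, None)

    def incr(self, key):
        # Like INCR: a missing (or expired) key counts as 0 before incrementing.
        self._drop_if_expired(key)
        self.values[key] = self.values.get(key, 0) + 1
        return self.values[key]

    def expire(self, key, seconds):
        # Like EXPIRE: set a timeout on an existing key.
        if key in self.values:
            self.deadlines[key] = self.clock() + seconds


def allow_request(store, client_id, limit, window_seconds):
    """Allow at most `limit` requests per client in each window."""
    key = f"rate:{client_id}"  # assumption: one counter per user or IP address
    count = store.incr(key)
    if count == 1:
        # First request of a new window: start the timeout.
        store.expire(key, window_seconds)
    return count <= limit
```

Against a real Redis server the INCR and EXPIRE calls are two separate round trips, so they are not atomic. A client that crashes between them could leave a counter without a timeout, and a real deployment would need to address that.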
