3. The Rendering Pipeline

Kemal Enver

The GPU that's part of the graphics card in your computer is a piece of hardware that can process large amounts of data in parallel, very quickly. The kinds of processing it performs are highly specialised, which is what lets it carry out all of the calculations needed to render 3D graphics. Data that comes into the GPU passes through many stages; together, these stages are referred to as a pipeline.

In the first part of this series I discussed some of the history of 3D graphics APIs. A characteristic of these early APIs was that they were not programmable by developers: the rendering pipeline was fixed. Modern APIs have lifted this limitation, and parts of the pipeline are now programmable. This has made the APIs more flexible, but it has also introduced some complexity.

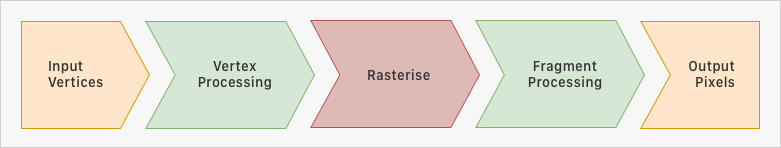

In the diagram above you can see that there are some inputs and outputs to the entire pipeline, and three main stages of processing. For simplicity only the really important stages of the pipeline are shown, but you should be aware that there are more.

Each stage of the pipeline expects an input and produces an output. For example, the output of the vertex processing stage becomes the input to the rasterisation stage, and the output of the rasterisation stage becomes the input to the fragment processing stage.

Input Vertices (Data)

The inputs to the pipeline take the form of data. This data can be anything at all: it's up to the developer to decide what to send to the pipeline and how that data is structured. In these early examples we'll send through the vertices and colours for a single triangle.
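To make "vertices and colours for a single triangle" concrete, here is a minimal sketch of what that input might look like. It is written in plain C++ rather than MSL so it can be compiled on its own, and everything in it is an assumption for illustration: the `Vertex` layout, the `makeTriangle` and `inVisibleRange` names, and the choice to keep x and y within -1 to 1.

```cpp
#include <array>
#include <cassert>

// Hypothetical layout: nothing in the pipeline mandates these names or fields.
struct Vertex {
    float position[4]; // x, y, z, w
    float colour[4];   // red, green, blue, alpha
};

// True when a coordinate lies in the -1..1 range this sketch assumes is visible.
bool inVisibleRange(float v) { return v >= -1.0f && v <= 1.0f; }

// One triangle with a different colour at each corner.
std::array<Vertex, 3> makeTriangle() {
    std::array<Vertex, 3> triangle = {{
        {{ 0.0f,  0.5f, 0.0f, 1.0f}, {1.0f, 0.0f, 0.0f, 1.0f}}, // top, red
        {{-0.5f, -0.5f, 0.0f, 1.0f}, {0.0f, 1.0f, 0.0f, 1.0f}}, // bottom left, green
        {{ 0.5f, -0.5f, 0.0f, 1.0f}, {0.0f, 0.0f, 1.0f, 1.0f}}, // bottom right, blue
    }};
    // Sanity check: every corner should sit inside the assumed visible range.
    for (const Vertex& v : triangle) {
        assert(inVisibleRange(v.position[0]) && inVisibleRange(v.position[1]));
    }
    return triangle;
}
```

Keeping position and colour side by side in one struct is only one possible arrangement; two separate arrays would carry the same information.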

Vertex Processing

The next stage of the pipeline is user programmable and performs some processing on the vertices that were passed in. The program that we'll write to perform this processing is called a vertex shader. Our first vertex shader will do very little, but in the future this is where we will do a lot of the heavy lifting to make things look 3D. In short, this stage determines where things appear on the screen.
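As a rough illustration of a vertex shader that "does very little", the sketch below emulates one on the CPU in ordinary C++. It is not MSL and not the shader this series will end up with. The `VertexIn` and `VertexOut` structs, the `dx` and `dy` offsets, and the `processVertices` helper are all hypothetical names chosen for the example.

```cpp
#include <cassert>
#include <vector>

struct VertexIn  { float position[4]; float colour[4]; };
struct VertexOut { float position[4]; float colour[4]; };

// Runs once per vertex and sees only that vertex.
// dx and dy are hypothetical parameters; with both at 0 this is a pass-through.
VertexOut vertexShader(const VertexIn& in, float dx, float dy) {
    VertexOut out{};
    for (int i = 0; i < 4; ++i) {
        out.position[i] = in.position[i];
        out.colour[i] = in.colour[i];
    }
    out.position[0] += dx; // changing x or y changes where the vertex lands
    out.position[1] += dy;
    return out;
}

// Stand-in for the vertex processing stage: one output per input vertex.
std::vector<VertexOut> processVertices(const std::vector<VertexIn>& vertices,
                                       float dx, float dy) {
    std::vector<VertexOut> result;
    result.reserve(vertices.size());
    for (const VertexIn& v : vertices) {
        result.push_back(vertexShader(v, dx, dy));
    }
    assert(result.size() == vertices.size());
    return result;
}
```

With `dx` and `dy` both at zero the vertices come out exactly as they went in. Any other value shifts the whole shape, which is the sense in which this stage decides where things appear.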

Rasterisation

The next stage of the pipeline is a fixed-function step, meaning it can't be programmed. Rasterisation takes our geometry (the 3D scene objects) and maps it to pixels on the screen. There are a few algorithms for doing this, and modern hardware is highly optimised to do it quickly. This stage is fixed because there is little to be gained by letting a developer do something custom: the hardware already does this job very efficiently.
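Although we never write this stage ourselves, it can help to see one textbook way of deciding which pixels a triangle covers. The C++ sketch below tests each pixel centre against the triangle's three edges. It is an illustrative assumption, not a description of what GPU hardware actually does, and the `Point`, `Fragment`, `edge` and `rasterise` names are hypothetical.

```cpp
#include <cassert>
#include <vector>

struct Point    { float x, y; }; // a triangle corner, in pixel units
struct Fragment { int x, y; };   // one covered pixel

// Positive on one side of the line a->b, negative on the other, zero on the line.
float edge(Point a, Point b, Point p) {
    return (b.x - a.x) * (p.y - a.y) - (b.y - a.y) * (p.x - a.x);
}

// Returns a fragment for every pixel whose centre lies inside or on the triangle.
std::vector<Fragment> rasterise(Point a, Point b, Point c, int width, int height) {
    assert(width > 0 && height > 0);
    std::vector<Fragment> fragments;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            Point centre{x + 0.5f, y + 0.5f};
            float e0 = edge(a, b, centre);
            float e1 = edge(b, c, centre);
            float e2 = edge(c, a, centre);
            // Accept either winding order: all three tests must agree in sign.
            bool allNonNegative = e0 >= 0 && e1 >= 0 && e2 >= 0;
            bool allNonPositive = e0 <= 0 && e1 <= 0 && e2 <= 0;
            if (allNonNegative || allNonPositive) {
                fragments.push_back({x, y});
            }
        }
    }
    return fragments;
}
```

This version visits every pixel and counts pixels that lie exactly on an edge as covered. A real rasteriser is far more selective about both, which is part of why the work is left to dedicated hardware.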

Fragment Processing

The outputs of the rasterisation stage are fragments. The fragment processing stage is programmable by writing a fragment shader, which lets us add colour to the fragments. This can be as simple as returning the same colour for every fragment (resulting in a shape with a single flat colour), or it can take into account the position of lights, and textures, to create more realistic effects. In short, this stage determines what colour things appear on the screen.
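The two ends of that range can be sketched as a pair of functions. This is a CPU-side C++ approximation rather than a real MSL fragment shader. The `FragmentIn` fields are assumptions, and the lighting uses a basic diffuse term driven by a direction towards the light, picked here only because it is short.

```cpp
#include <algorithm>
#include <cassert>

struct Colour { float r, g, b, a; };

// Hypothetical per-fragment inputs; a real pipeline passes along whatever
// the developer chose to output from the earlier stages.
struct FragmentIn {
    float normal[3]; // direction the surface faces, assumed unit length
    Colour base;     // unlit surface colour
};

// Simplest case: every fragment gets the same colour.
Colour solidFragment(const FragmentIn&) {
    return {1.0f, 0.0f, 0.0f, 1.0f};
}

// Scales the base colour by how directly the surface faces the light.
// lightDir points from the surface towards the light, assumed unit length.
Colour litFragment(const FragmentIn& in, const float* lightDir) {
    assert(lightDir != nullptr);
    float facing = in.normal[0] * lightDir[0]
                 + in.normal[1] * lightDir[1]
                 + in.normal[2] * lightDir[2];
    float k = std::max(0.0f, facing); // surfaces facing away receive no light
    return {in.base.r * k, in.base.g * k, in.base.b * k, in.base.a};
}
```

A surface facing the light head on keeps its full base colour, and one facing away comes out black. Everything in between is a darker shade of the same colour.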

What are Shader Programs?

Shader programs are small pieces of code that run once for every vertex or pixel. In Metal these programs are written in MSL (Metal Shading Language), which resembles C++. They run per vertex or per pixel in order to take advantage of the many processing cores on the GPU. For example, if we could only loop through every pixel ourselves, processing would be limited by the throughput of a single core. By writing programs that act on one item at a time, we let the GPU spread the work across its cores and process many items at once.
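The per-item idea can be mimicked in ordinary C++. In the sketch below, `brighten` plays the role of a shader that only ever sees one pixel, and `runOnCore` is a hypothetical stand-in for a single GPU core working through its share of the items. Nothing here runs in parallel; it only shows why the work is safe to split.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// The per-item program: it knows about one pixel value and nothing else.
float brighten(float value) {
    float doubled = value * 2.0f;
    return doubled > 1.0f ? 1.0f : doubled; // clamp to the maximum brightness
}

// Hypothetical stand-in for one core out of coreCount.
// It handles items core, core + coreCount, core + 2 * coreCount, and so on.
void runOnCore(std::size_t core, std::size_t coreCount, std::vector<float>& pixels) {
    assert(coreCount > 0 && core < coreCount);
    for (std::size_t i = core; i < pixels.size(); i += coreCount) {
        pixels[i] = brighten(pixels[i]);
    }
}
```

Because each call to `brighten` touches only its own pixel, it makes no difference which "core" runs first or whether they run at the same time. Calling `runOnCore(1, 2, pixels)` and then `runOnCore(0, 2, pixels)` gives the same result as the reverse order.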

Note: For the purpose of this series we are focussing on simple, entry-level 3D graphics. For future reference, you should know that there are other types of shader, such as compute shaders (used for general-purpose GPU work, including machine learning) and, more recently in Metal 3, two geometry-processing shader types (object and mesh), which can take the place of the vertex shader stage.

What's next?

Armed with our knowledge of what a rendering pipeline is, we're ready to start making use of one. In the next instalment we'll implement a rendering pipeline and get the GPU to draw a triangle to the screen.
