AI Creates STUNNING Images from text (Dall-e 2)🤯

chirag sharma

Meet DALL·E

DALL·E is a new AI system from OpenAI that can create realistic art and images from a description in natural language.

This means that none of the pictures above were made by an artist: they are all images generated by DALL·E.

It is basically a giant neural network, trained on a huge collection of images and captions gathered from the internet, that can create unique imagery within 20 seconds.

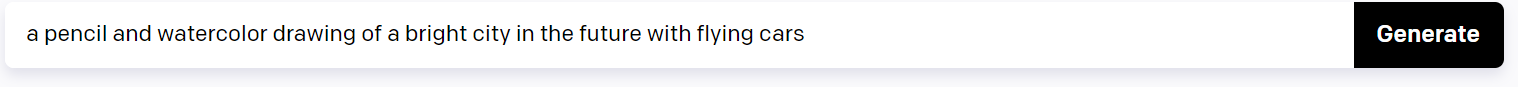

The concept is very simple. You just type a description into the text box and click Generate.

The system then generates several image variations based on your input text.

How does DALL·E work?

There are two main AI technologies used in DALL·E:

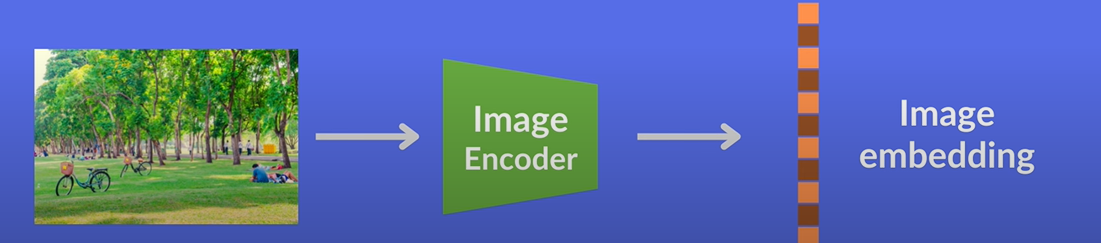

CLIP: CLIP (Contrastive Language-Image Pre-training) is a neural network model that, given an image, can pick out the caption that best describes it from a set of candidate captions. It is trained to match images with their text, and through that matching it learns the concepts that appear in images. DALL·E relies on this understanding to generate new images of the same concepts. When you search for "Teddy bear wearing a hat", DALL·E knows what a "teddy bear" is, what a "hat" is and what "wearing" means, so it can create a completely new image from them. To do this matching, CLIP uses two encoders:

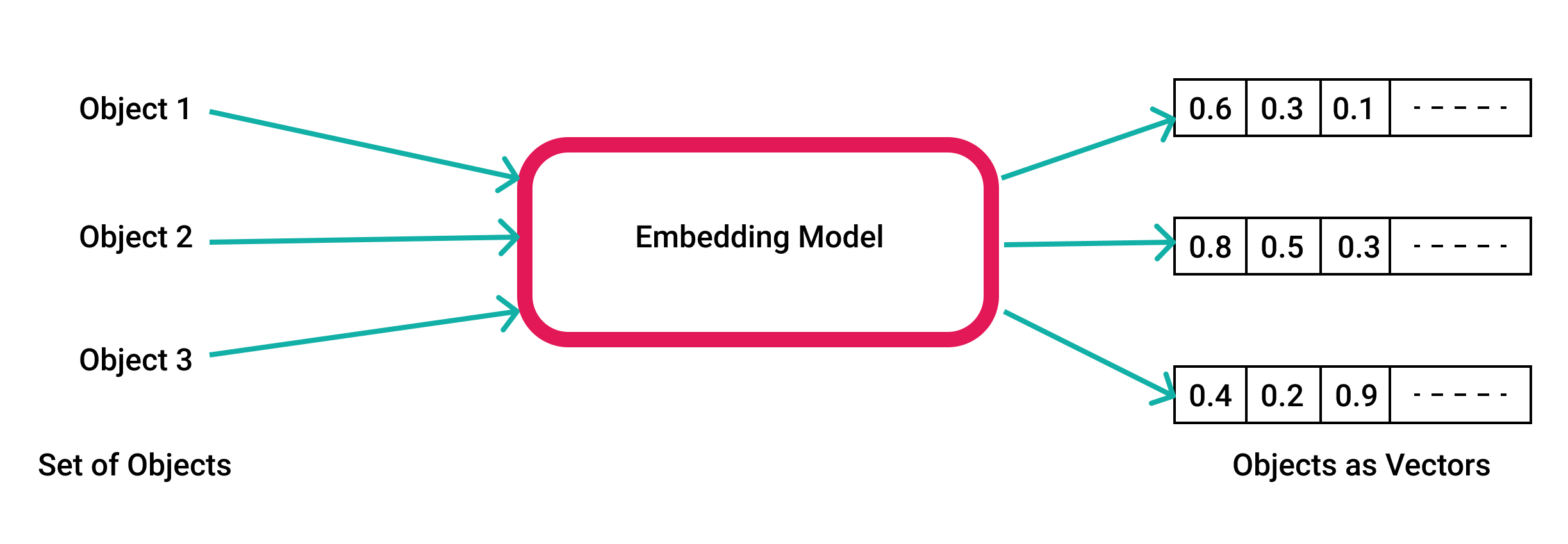

Image encoder: It turns images into image embeddings

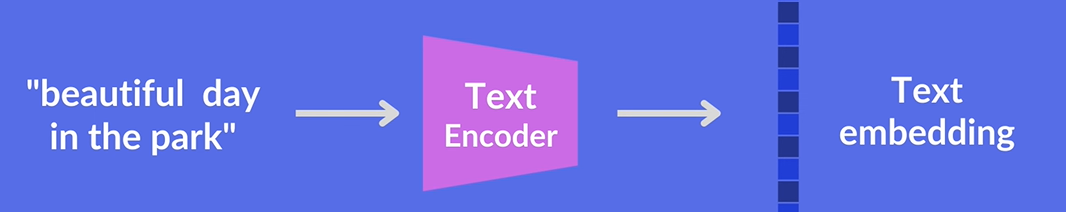

Text encoder: It turns text (such as a caption) into text embeddings

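What are embeddings? An embedding is a mathematical way of representing information as a list of numbers (a vector). CLIP's two encoders produce vectors in the same space, so an image and a caption that describe the same thing end up with similar embeddings.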
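To make the matching idea concrete, here is a minimal sketch of how CLIP-style embeddings can be compared once the encoders have produced them. The three-number embeddings and the captions are invented for illustration (real CLIP embeddings have hundreds of dimensions), and cosine similarity is used as the matching score.

```python
import math

# Invented 3-number "embeddings" for illustration only. Real CLIP embeddings
# come from trained encoders and have hundreds of dimensions.
image_embedding = [0.9, 0.1, 0.3]  # pretend: a photo of a teddy bear in a hat

caption_embeddings = {
    "a teddy bear wearing a hat": [0.8, 0.2, 0.4],
    "a bowl of soup": [0.1, 0.9, 0.0],
    "a red sports car": [0.0, 0.3, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_caption(image_vec, captions):
    """Return the caption whose embedding points most nearly the same way."""
    return max(captions, key=lambda c: cosine_similarity(image_vec, captions[c]))


print(best_caption(image_embedding, caption_embeddings))
# prints: a teddy bear wearing a hat
```

Flipping the roles, comparing one caption embedding against many image embeddings, is exactly the same computation.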

CLIP cannot create images by itself. Its embeddings capture only the gist of an image, not the detail needed to draw a high-resolution picture.

- DIFFUSION: Diffusion models are generative models. During training, they take a piece of data, for example a photo, and gradually add noise to it until it is no longer recognizable. Each noising step erases some of the information in the image. The model then learns to reverse the process, regenerating at each step what might have been erased. To create a new image, it starts from pure noise and removes the noise step by step, making the image more and more realistic until it yields a noiseless image.

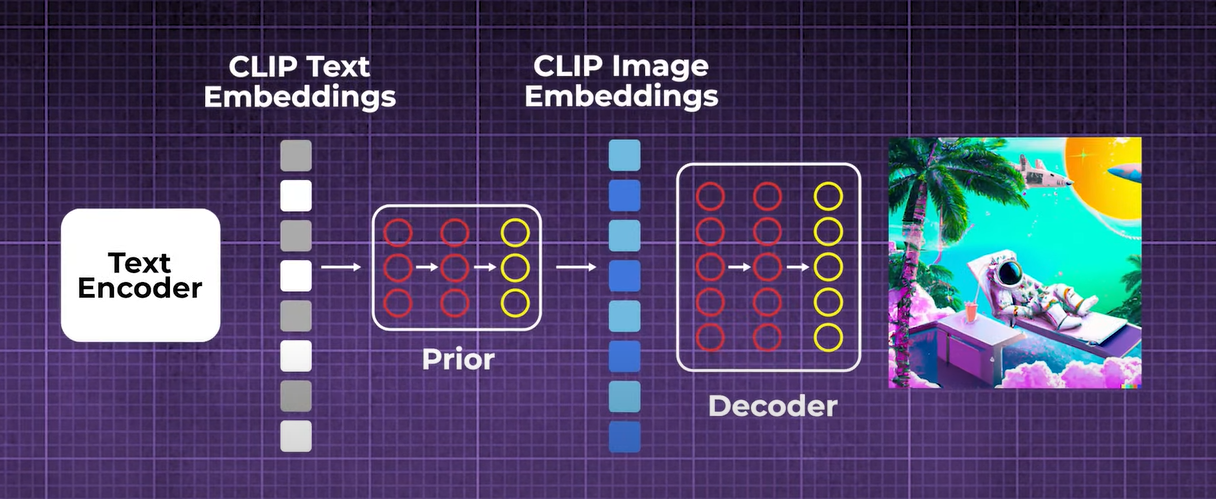
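For readers who want to see the noising step in numbers, here is a minimal sketch of the forward process used by DDPM-style diffusion models, where the noisy version at step t can be computed directly as x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 - ᾱ_t)·ε. The schedule (1,000 steps, β rising linearly from 0.0001 to 0.02) is an assumption taken from the original DDPM setup, not DALL·E 2's configuration, and the four-number "image" is made up. The `recover_x0` helper shows why learning to predict the noise is enough to estimate the clean image.

```python
import math
import random

# Assumed DDPM-style linear schedule, not DALL-E 2's actual configuration.
T = 1000
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]

# alpha_bar[t] = product of (1 - beta) up to step t: the share of signal left.
alpha_bar = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alpha_bar.append(running)


def add_noise(x0, t, rng):
    """Jump straight to step t: x_t = sqrt(a_bar)*x0 + sqrt(1 - a_bar)*noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    a = alpha_bar[t]
    xt = [math.sqrt(a) * p + math.sqrt(1.0 - a) * n for p, n in zip(x0, noise)]
    return xt, noise


def recover_x0(xt, noise, t):
    """With the exact noise, x0 can be recovered. A diffusion model is trained
    to *predict* this noise from x_t, which gives an estimate of x0."""
    a = alpha_bar[t]
    return [(v - math.sqrt(1.0 - a) * n) / math.sqrt(a) for v, n in zip(xt, noise)]


rng = random.Random(0)
pixels = [0.2, -0.5, 0.9, 0.0]  # a tiny made-up stand-in for an image

for t in (0, 250, 500, 999):
    print(f"step {t:4d}: signal kept = {math.sqrt(alpha_bar[t]):.3f}")

xt, noise = add_noise(pixels, 500, rng)
print([round(v, 3) for v in recover_x0(xt, noise, 500)])
```

The printed "signal kept" shrinks from almost 1 toward 0, which is the "until it is not recognizable anymore" part of the description.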

OK!! Now that you have learned what CLIP and DIFFUSION basically are, let's use this information to dig deeper into DALL·E. DALL·E 2 is built from two main parts, a prior and a decoder:

The prior takes the CLIP text embedding, which is easily generated from the caption by the CLIP text encoder, and creates a CLIP image embedding from it.

The decoder is also a diffusion model, slightly adjusted so that it is guided by a CLIP image embedding. (The paper behind DALL·E 2 calls the complete prior-plus-decoder system unCLIP.) During training, it receives both a corrupted version of the image it is learning to reconstruct and the CLIP image embedding of the clean image.

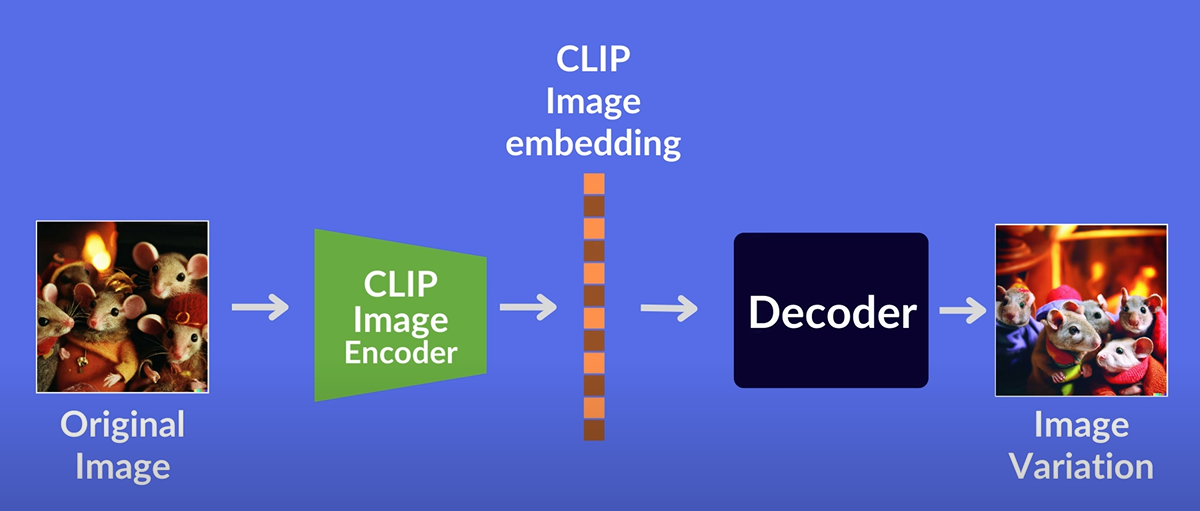
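Putting the pieces together, the generation flow is caption → CLIP text embedding → prior → CLIP image embedding → decoder → image. The sketch below shows only that data flow. Every component is a hypothetical stub (`clip_text_encoder`, `prior`, `decoder` and `generate` are illustrative names, not a real API), and the "denoising" loop is a toy that blends random noise toward the embedding instead of running a trained network.

```python
import random
from typing import List

Vector = List[float]

# Hypothetical stubs: in DALL·E 2 each stage is a large trained network.
# Only the order in which data flows between them is meant to be accurate.


def clip_text_encoder(caption: str) -> Vector:
    """Stub text encoder: the same caption always maps to the same vector."""
    rng = random.Random(caption)
    return [rng.uniform(-1.0, 1.0) for _ in range(8)]


def prior(text_embedding: Vector) -> Vector:
    """Stub prior: turn a text embedding into an 'image embedding'."""
    return [0.5 * v for v in text_embedding]


def decoder(image_embedding: Vector, seed: int, steps: int = 10) -> Vector:
    """Toy diffusion-style decoder: start from pure noise and repeatedly
    nudge it toward the content described by the image embedding."""
    rng = random.Random(seed)
    image = [rng.gauss(0.0, 1.0) for _ in image_embedding]
    for _ in range(steps):
        # A real decoder predicts and removes noise with a neural network;
        # here each step simply blends the current image toward the target.
        image = [0.7 * px + 0.3 * target for px, target in zip(image, image_embedding)]
    return image


def generate(caption: str, seed: int = 0) -> Vector:
    text_embedding = clip_text_encoder(caption)  # caption -> CLIP text embedding
    image_embedding = prior(text_embedding)      # text embedding -> image embedding
    return decoder(image_embedding, seed)        # image embedding -> "image"


print([round(px, 2) for px in generate("a teddy bear wearing a hat")])
```

Keeping the prior as a separate stage is the key design choice here: the decoder never sees the caption directly, only the image embedding the prior produces from it.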

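How variations are created:

Making a variation of an image means keeping the main style and elements of the image while changing trivial details. In DALL·E, the original image is passed through the CLIP image encoder, and the resulting image embedding is run through the decoder. Because the decoder starts from fresh random noise each time, every run keeps the same overall content but produces different details.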
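Here is a toy sketch of the same idea: encode an image once, then decode that embedding with different random seeds. `clip_image_encoder`, `decoder` and `make_variations` are hypothetical stand-ins (the "encoder" just averages blocks of pixels) chosen only so the example runs. The point is that the embedding fixes the content while the seed changes the details.

```python
import random


def clip_image_encoder(pixels):
    """Hypothetical stub: summarize a 16-pixel 'image' as a 4-number
    embedding (the average of each block of 4 pixels)."""
    n = len(pixels) // 4
    return [sum(pixels[i * n:(i + 1) * n]) / n for i in range(4)]


def decoder(image_embedding, seed, steps=10):
    """Toy diffusion-style decoder: start from seed-dependent noise and
    nudge it toward the embedding; leftover noise becomes the 'details'."""
    rng = random.Random(seed)
    image = [rng.gauss(0.0, 1.0) for _ in range(16)]
    for _ in range(steps):
        image = [0.7 * px + 0.3 * image_embedding[i // 4] for i, px in enumerate(image)]
    return image


def make_variations(pixels, count=3):
    embedding = clip_image_encoder(pixels)  # keep the main content and style
    return [decoder(embedding, seed) for seed in range(count)]  # new noise per variation


original = [0.1] * 4 + [0.5] * 4 + [0.9] * 4 + [0.3] * 4
for variation in make_variations(original):
    print([round(px, 2) for px in variation])
```

Re-encoding any of the variations gives an embedding close to the original's, even though the individual pixel values differ from run to run.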

The Bigger Picture

A threat to designer jobs and stock images 🥲??? This is the first thought that comes to mind while using this platform. It can generate high-quality, realistic images within 20 seconds, far faster than any human designer. Some experts disagree and emphasize that these tools will give designers better references, so they can create better designs. Images generated by DALL·E and its alternatives are expected to become substitutes for expensive stock images, but stock image platforms could also use them to expand their service offerings and their image repositories. Overall, we can say this is a revolutionary tool that will keep expanding and be used for many purposes, and humans will adapt to this new technology very rapidly. Let's look at some amazing use cases of DALL·E 2:

Film & television cameos

Want your favorite character to make a guest appearance in another movie? Scroll through this great thread from @HvnsLstAngel on Twitter.

Sneaker design

Imagine how much easier a designer's work becomes with DALL·E-generated images. Go through this amazing thread of sneaker designs generated with DALL·E.

Furniture design

The fruity furniture theme has become a familiar idea within the AI-generated design space. Imagine a house completely designed by DALL·E 😎

Digital artwork

Many people say digital artists' jobs are in danger, but I think DALL·E has made digital artists' lives easier, as they can use it for design references.

Posters

Seeing AI attempt to render typography can often shatter the illusion of intelligence, as it struggles with typesetting and generating believable words. That said, where past models failed, DALL·E 2 is beginning to succeed, as these examples from @jmhessle illustrate:

Fashion

There’s a whole host of AI companies that have sprung up over the last 5 years to help fashion retailers digitally replace outfits on stock models, or even on computer-generated supermodels.

As phenomenal as DALL·E is, it still has some limitations. First, it is not good at generating images with coherent text. For instance, when asked to generate images for the prompt "A sign that says deep learning", it produces the images below:

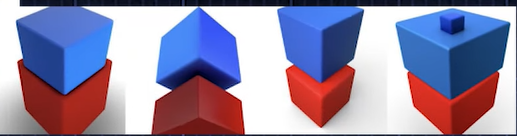

Second, it is not good at associating attributes with the right objects. For a prompt like "A red cube on top of a blue cube", it can mix up which cube gets which color.

There are many more instances where DALL·E fails to generate the desired results. Like everything, it has its pros and cons. At present, we do not see DALL·E used commercially, partly because of the bias its outputs sometimes show. But I am sure it is a revolutionary tool that will expand rapidly in the future.

Conclusion

DALL·E 2 is a revolutionary tool. Open up your imagination and create beautiful artwork with it.

Go to the OpenAI platform and follow the instructions.

⚠️ Warning: DALL·E 2 is addictive.

jk do whatever you want to 🙂
