Text Summarization using Facebook BART Large CNN

Charmy Dafda

Charmy Dafda

Introduction

Text Summarization using Facebook BART Large CNN text summarization is a natural language processing (NLP) technique that enables users to quickly and accurately summarize vast amounts of text without losing the crux of the topic.

We've all read articles and other lengthy writings that completely divert our attention from the topic at hand because of a tonne of extraneous information. This can become frustrating, especially when conducting research and gathering reliable data for any purpose. How will we overcome this?

The only answer is text summarization. In light of this, let's examine the two unique approaches to text summarizing.

Two Unique Approaches To Text Summarization

1. Extractive Text Summarization

The process of extractive text summarization looks for important sentences and adds them to the summary, which includes exact sentences from the source text.

2. Abstractive Text Summarization

In this method, it makes an effort to recognize key passages, evaluate the context, and deftly provide a summary.

Now let’s see how we could achieve this using Python. There are varieties of approaches in python using different libraries such as Gensim, Textrank, Sumy, etc. But today, we’re going to execute it using one of the most popularly used and most downloaded models from hugging face which is facebook/bart-large-cnn model.

Text Summarization using Facebook BART Large CNN

BART is a transformer encoder-encoder (seq2seq) model that combines an autoregressive (GPT-like) decoder with a bidirectional (BERT-like) encoder. In order to pre-train BART, it first corrupts text using a random noise function and then learns a model to restore the original text. BART performs well for comprehension tasks and is especially successful when tailored for text generation, such as summary and translation, e.g. text classification and question answering. This specific checkpoint has been refined using a big database of text-summary pairings called CNN Daily Mail.

Okay now, let’s get into the business.

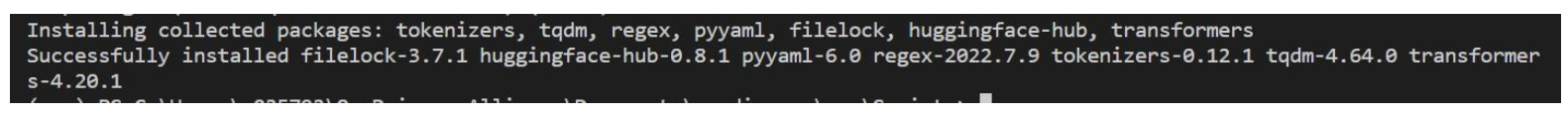

Step 1. Install Transformers

As a first step, we need to install transformers which is a thing in the NLP domain and one that facilitates achieving numerous complicated tasks in NLP.

pip install transformers

Step 2. Install Packages

Next step we’ll install packages that could extract text from both DOCX and PDF formats, the most popularly used formats in documents. For PDF, we’ll use pdfminer and docx2txt is used for extracting DOCX files. You can even summarize by giving input to a variable defined in the same program.

pip install pdfminer.six docx2txt

Step 3. Import Packages

Now we will import our packages

From transformers import pipeline

From pdfminer.high_level import extract_text Import docx2txt

Step 4. Load Model Pipeline

The model pipeline should first be loaded from transformers. Mention the task name and model name when defining the pipeline module. We use summarization as the task name argument and facebook/bart-large-xsum as our model.

summarizer = pipeline("summarization", model="facebook/bart-large-cnn")

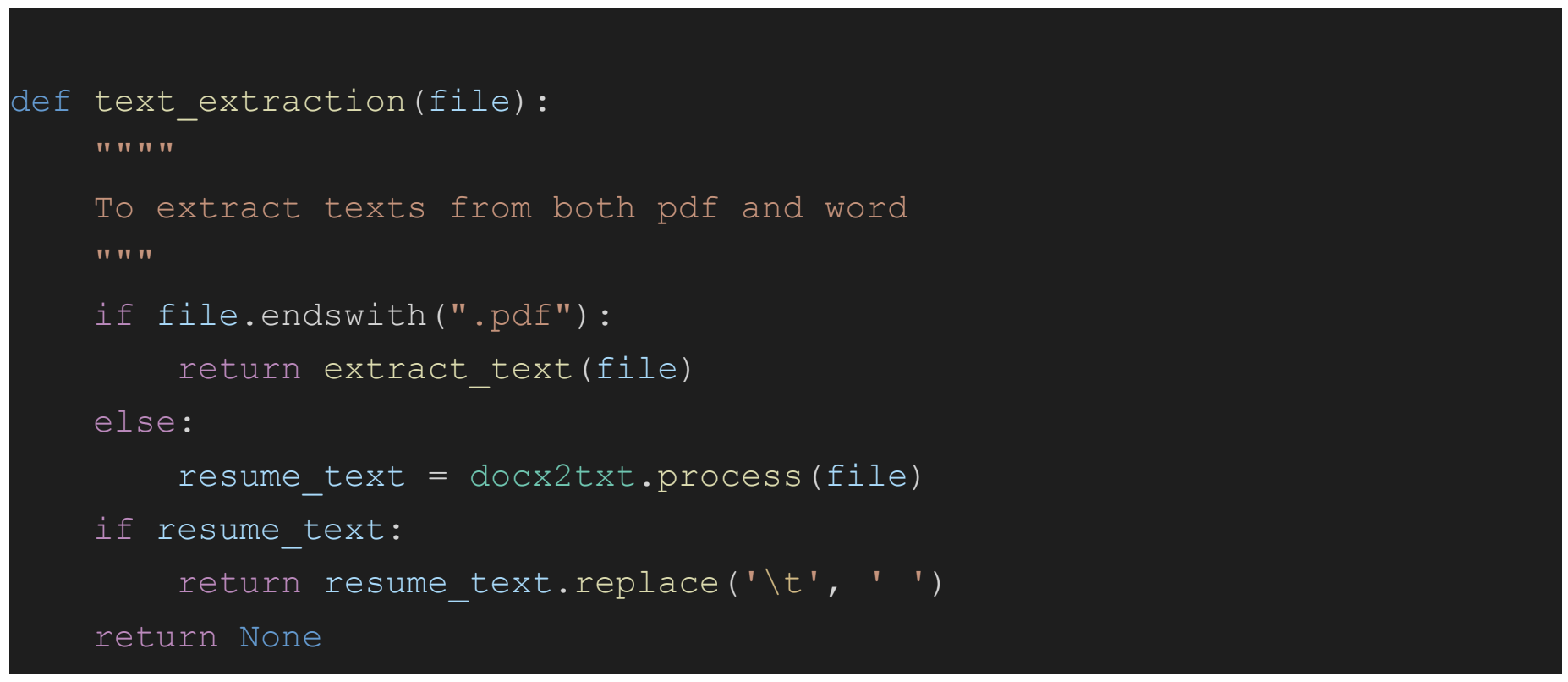

Step 5. Function to Read and Extract Text

Now, let’s write a function to read and extract text from both DOCX and pdf.

Step 6. Testing

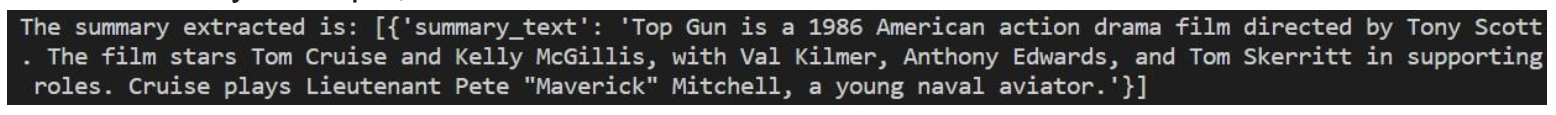

For testing, we’ll use the Wikipedia content of Tom Cruise’s famous flick Top Gun(1986) from which I have copy-pasted 290 words of content into a DOCX file.

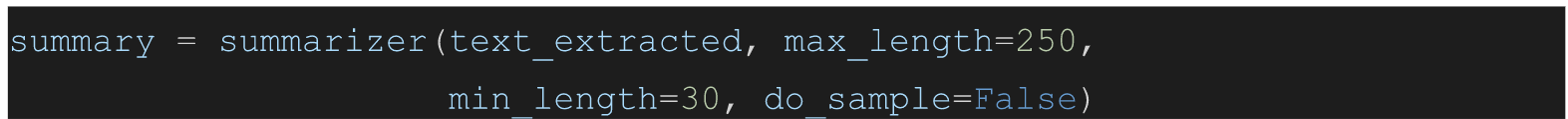

Step 7. Summarize Text

Now the extracted text is stored in the variable text_extracted.Next, we will summarize the extracted text using:

Here, the summarizer function is used and the first argument is the input text which we have stored in our variable text_extracted. The second argument is the maximum length of the summary. The third argument is the minimum length of the summary. We can adjust the maximum and minimum length in accordance with our requirements here we’re using max_length = 250 and min_length = 30. In the end, we’ll execute a print function to see our summary:

Here, the summarizer function is used and the first argument is the input text which we have stored in our variable text_extracted. The second argument is the maximum length of the summary. The third argument is the minimum length of the summary. We can adjust the maximum and minimum length in accordance with our requirements here we’re using max_length = 250 and min_length = 30. In the end, we’ll execute a print function to see our summary:

print("The summary extracted is:", summary)

Output

Voilà.!!! Here’s your output, and it’s returned in a list.

Now test it with your own sample documents and analyze the results. Happy coding!!!

Now test it with your own sample documents and analyze the results. Happy coding!!!

Conclusion

Out of many methods of text summarization we have used the most updated and advanced model in this tutorial. If you visit hugging face you could see a lot of other models to test out. You can further extend this solution into a web application. This solution can be used in multiple applications such as Median monitoring, Newsletter optimization, Media, Meetings, etc.

Subscribe to my newsletter

Read articles from Charmy Dafda directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by