Why I Created Thousands of Google Meet Links and Rate Limited My Account

Shaheer Sarfaraz

The Idea

It's simple really. What if you could have a Google Meet meeting with a code of your choosing?

As you might already know, creating a new meeting with Google Meet creates a new link with a pattern of random unique letters at the end. The pattern looks like this: xxx-xxxx-xxx.

My idea was to create thousands of these URLs in the hope that one would pop up containing a phrase I liked: maybe my name, maybe my initials, it could be anything.

All in all, it's a project purely for fun. It's fun to just mess around every once in a while!

What I Already Knew

- A new link can be generated by visiting a specific URL

- Links are always unique and random

- A Google Account which creates a link is always its host

- A meeting link is re-usable after leaving

How To Approach This Problem

There were a few ideas on how to generate these meetings and get their links.

Python + Selenium

Selenium is a web browser automation tool, and there are bindings for the Selenium API in Python.

The Good

- An advantage of using Selenium would be the ability to hide a browser window completely whilst it generated links using Headless Mode. This would allow the program to run in the background even when you were using your device

The Bad

- Accessing a Google account in a Selenium driven window requires you to jump through quite a few hoops because of the possible breach in security this could lead to. This project needed to be built quickly, the quality of the code didn't matter too much because at the end of the day, this project won't ever serve a real purpose

- Before this, I'd only ever heard of Selenium and had a glance at the documentation, and never actually used it in a project, so there'd be a learning curve to overcome during this project too

Python + pyautogui

The second idea, and the one I ended up going with, was using Python and pyautogui (a package that lets a Python script control the mouse and keyboard).

The Good

- There would be no browser compatibility issue, since I would be using my normal browser with its default Google Account.

- And there'd be an easy and quick way to click on elements on screen. Simply take a screenshot of the element you want to click and reference it in your code. This completely avoids going through the pain of finding out which selector is precise enough in Selenium.

- Another positive for me was the fact that I had been using pyautogui for a while before this for AutoClass, so using it was almost second nature to me

The Bad

- It's slower than Selenium for sure, but absolute speed just wasn't the main goal here.

A Laundry List of Problems

How To Find Out When The URL Has Changed

The way I first imagined the program working was by looking at the URL bar of the browser. With Edge, whenever I joined a meeting, it would show a camera icon, indicating that the camera was being used. This of course would only happen after the page loaded up completely (and hence the link in the URL bar had changed from https://meet.google.com/new to a new URL with the pattern), and since I could easily take a screenshot of anything on the screen and detect its presence and location, I felt this was a good place to start.

Coding this up wasn't an issue, especially with helper functions like findImageTimeout that I had made previously, which add some functionality that is actually on the roadmap of the pyautogui package. As the name suggests, findImageTimeout attempts to find an image on screen using pyautogui, and keeps attempting to find it until the specified timeout is up.

How To Get The URL From The URL Bar

Now I knew when the URL had updated to the new one, but I still hadn't gotten around to actually getting the URL into a variable. Checking whether the camera icon was visible helped here too, because the new URL sits just slightly left of where the camera icon appears. So we know how to tell the program where to find the URL, but how do we get it into a variable?

Purely by chance, I had stumbled across this exact problem in an earlier project (more on that in another post), and I knew how to solve it now. Using pyautogui, you can send hotkeys like ctrl + c to copy, and in Python, you can access the contents of the clipboard in a few different ways.

- The one I used was pyperclip because of its simplicity and reliability.

- The second option was using win32, which had extremely verbose syntax, and had to be executed with quite a few pauses with time.sleep() to get it to work reliably, so it was far slower and clunkier to work with.

The Complete Roadmap

So to get the URL from the browser, I can have the program click the URL bar slightly left of the coordinates of the camera icon, which automatically selects all the text in the field. Then I can send a ctrl + c using pyautogui to copy the created URL into the clipboard. I can then read the clipboard using pyperclip, and store it in a file.
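The roadmap above can be sketched roughly as follows. This is not the code from the repo: find_image_timeout is my own hypothetical stand-in for the findImageTimeout helper, and camera_icon.png, the 200 pixel offset and links.txt are all made-up values. It also assumes the browser is already showing a freshly created meeting. The helpers take plain callables, so they can be tried out without a display.

```python
import time
from typing import Callable, Optional, Tuple

Box = Tuple[int, int, int, int]  # (left, top, width, height) in screen pixels


def find_image_timeout(
    locate: Callable[[], Optional[Box]],
    timeout: float = 10.0,
    interval: float = 0.25,
) -> Optional[Box]:
    """Call locate() repeatedly until it returns a box or the timeout is up."""
    deadline = time.monotonic() + timeout
    while True:
        box = locate()
        if box is not None:
            return box
        if time.monotonic() >= deadline:
            return None
        time.sleep(interval)


def grab_url(
    icon_box: Box,
    click: Callable[[int, int], None],
    copy_hotkey: Callable[[], None],
    read_clipboard: Callable[[], str],
    offset: int = 200,  # hypothetical distance from the icon to the URL text
) -> str:
    """Click `offset` pixels left of the icon, copy the selection, return it."""
    left, top, _width, height = icon_box
    click(left - offset, top + height // 2)  # clicking the URL bar selects its text
    copy_hotkey()
    return read_clipboard().strip()


def run_once(out_path: str = "links.txt") -> bool:
    """Wire the helpers to pyautogui and pyperclip for one link."""
    import pyautogui  # imported here so the helpers above work without a display
    import pyperclip

    def locate_camera() -> Optional[Box]:
        try:
            return pyautogui.locateOnScreen("camera_icon.png")
        except pyautogui.ImageNotFoundException:
            return None  # some pyautogui versions raise instead of returning None

    icon = find_image_timeout(locate_camera, timeout=20)
    if icon is None:
        return False  # the page never finished loading, so skip this attempt
    url = grab_url(icon, pyautogui.click,
                   lambda: pyautogui.hotkey("ctrl", "c"), pyperclip.paste)
    with open(out_path, "a", encoding="utf-8") as f:
        f.write(url + "\n")
    return True
```

Returning False when the icon never shows up is one way to deal with a link that fails to load, instead of letting the whole script fall over.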

v1.0

After implementing the very initial concept of the project laid out above, here are some gripes I still had with the program.

Clunky Codebase

The codebase at this time was very bodged together, almost feeling like it was different pieces of code just duct taped together without thought. The program wasn't too reliable either, e.g. if a link failed to load, the program would be unable to handle it.

Speed

I measured the performance of the script in terms of seconds per link. In its current state, the script was taking around 13-15 seconds per link. This was directly and severely impacted by my (at the time) subpar internet performance, and other factors like when Edge decided to show the camera icon.

v2.0

Here comes the single biggest improvement to the speed of the program.

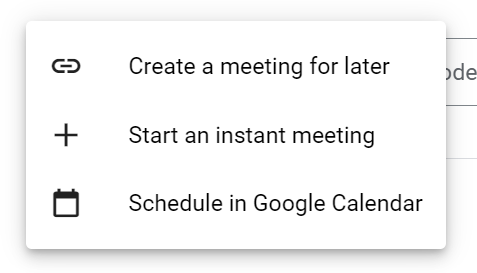

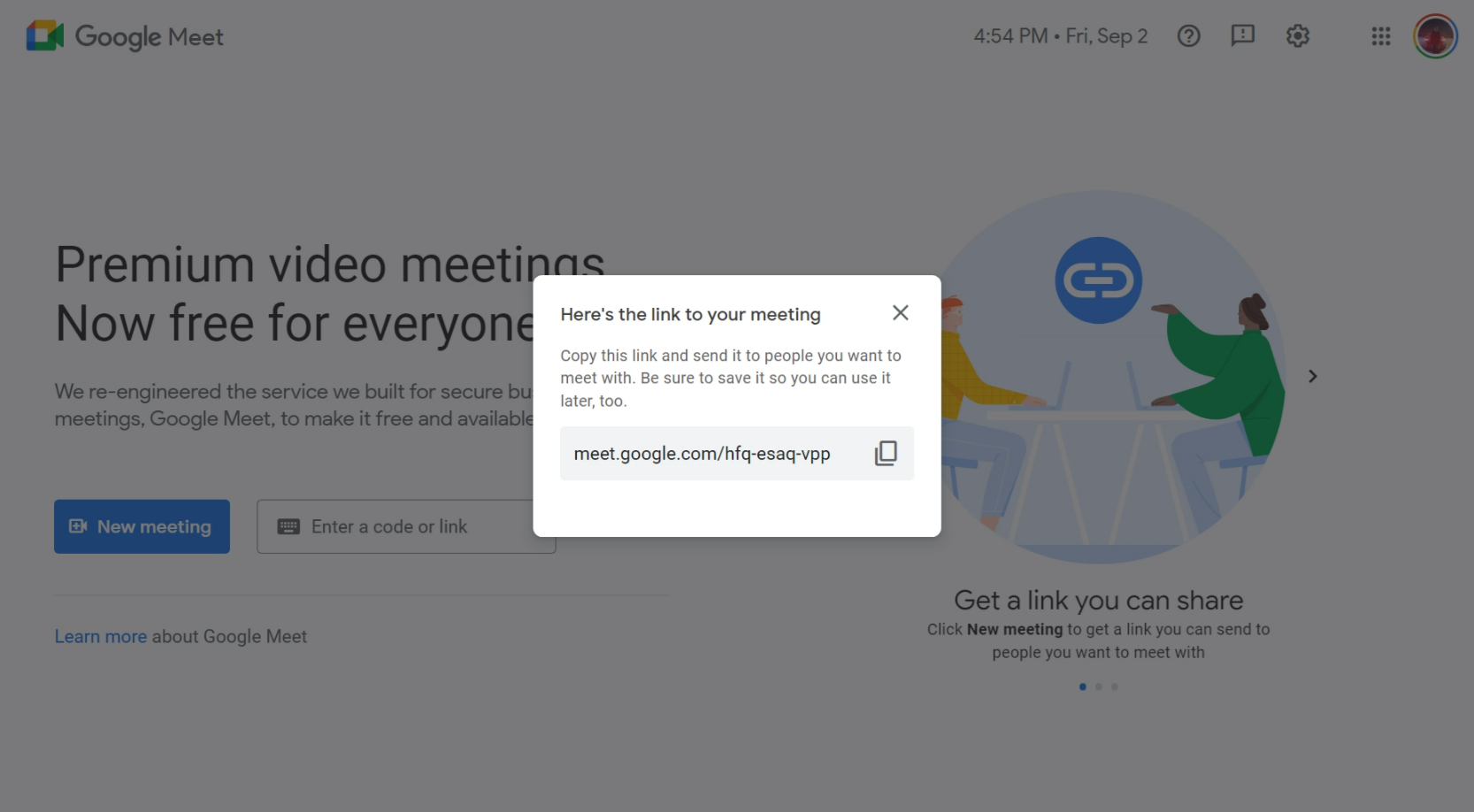

The Google Meet Homepage has an option to create the link for a later time.

Crucially for us, to create a new link, this option doesn't refresh the whole page. It loads a modal on the page, with just the link and a copy button.

Conveniently, it also has a close button on the modal, which resets the state of the webpage. This means this loop can be repeated extremely quickly.

With this method, I brought the program down to about 2 seconds per link. Compared to our starting point of 15 seconds per link, that's roughly 7.5 times faster (15 / 2 = 7.5).

v2.1

Previously, optimizing processes that took fractions of a second wouldn't have improved performance significantly, since one link was taking 15 seconds. However now, with our time down to 2 seconds per link, I had to optimize these processes to improve speed further.

Factors Affecting Performance

One of the main areas where I could still improve was finding elements on the screen. Now seems like a good time to mention that just taking a screenshot of a 1080p display takes ~100ms, according to the pyautogui docs. Finding an image within this screenshot would take far more time, and so this was a main point of concern for performance. This can be improved significantly and we'll see how later.

Fractional Improvement

- To prevent pyautogui from attempting to find static objects (e.g. the "close" button and other similar non-moving objects) on the entire screen, I hard coded the exact pixel coordinates of these locations, and clicked on these pre-defined points as opposed to taking time to find them on screen. There was at least one element I couldn't hard code however, and that was the copy button in the modal. This is because there's no set time for it to appear. It could appear in 300ms, or it could take up to 1000ms, all subject to my internet connection.

I could optimize this further however. Considering we already know that the copy button will appear only in a specific area, we can search for the copy button in that (far smaller) area instead of the entire screen. This makes the search process as efficient as it can be.
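Here's a minimal sketch of that idea, assuming pyautogui's region argument to locateOnScreen. The coordinates, the region and copy_button.png are placeholders I made up for illustration; the real values depend on your screen and browser layout. The search function is passed in as a callable so the logic can be checked without a display.

```python
from typing import Callable, Optional, Tuple

Box = Tuple[int, int, int, int]  # (left, top, width, height)

# Hypothetical values: static elements get fixed points, the copy button gets
# a small search area instead of the whole 1080p screen.
CLOSE_BUTTON_XY = (1180, 420)
COPY_BUTTON_REGION: Box = (700, 450, 500, 120)


def box_center(box: Box) -> Tuple[int, int]:
    """Return the pixel at the middle of a (left, top, width, height) box."""
    left, top, width, height = box
    return (left + width // 2, top + height // 2)


def locate_copy_button(
    locate_in_region: Callable[[str, Box], Optional[Box]],
) -> Optional[Tuple[int, int]]:
    """Look for the copy button only inside COPY_BUTTON_REGION."""
    box = locate_in_region("copy_button.png", COPY_BUTTON_REGION)
    return None if box is None else box_center(box)


def pyautogui_locate(image: str, region: Box) -> Optional[Box]:
    """Adapter around pyautogui.locateOnScreen restricted to a region."""
    import pyautogui  # imported lazily so the helpers above need no display

    try:
        return pyautogui.locateOnScreen(image, region=region)
    except pyautogui.ImageNotFoundException:
        return None  # some pyautogui versions raise instead of returning None
```

In the real loop you'd call locate_copy_button(pyautogui_locate) until it returns a point, click it, and then click CLOSE_BUTTON_XY directly without searching for it.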

All of this improvement resulted in sub-second times, generally around 0.75 seconds per link. The largest performance bottleneck now was my internet connection, so I considered this a good enough place to stop working on it. It also helped that I got the link I wanted to find, a link with dak in its pattern.

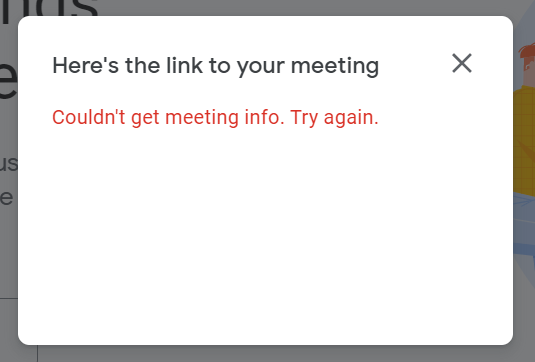
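Going through thousands of saved links by hand isn't much fun, so a small filter helps. This is just a sketch: the function names are mine, and it assumes a phrase counts as a match even when it runs across one of the hyphens, which may or may not be what you want.

```python
import re
from typing import List, Optional

# A Meet code is three groups of lowercase letters: xxx-xxxx-xxx
CODE_RE = re.compile(r"\b[a-z]{3}-[a-z]{4}-[a-z]{3}\b")


def meeting_code(url: str) -> Optional[str]:
    """Pull the xxx-xxxx-xxx code out of a link, or None if there isn't one."""
    match = CODE_RE.search(url)
    return match.group(0) if match else None


def matching_phrases(url: str, wanted: List[str]) -> List[str]:
    """Return the wanted phrases that appear in the link's code.

    Assumption: hyphens are ignored, so "dak" matches "abd-akxy-zqw" too.
    """
    code = meeting_code(url)
    if code is None:
        return []
    letters = code.replace("-", "")
    return [phrase for phrase in wanted if phrase.lower() in letters]
```

For example, matching_phrases("https://meet.google.com/abc-dakx-yzq", ["dak", "ss"]) returns ["dak"].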

Being Rate Limited by Google

Since this program was creating links automatically (and in sub-second time), of course I left it running overnight. After a few nights of creating links, I ran into a limitation set in place by Google. If I generated more than 5000 links in a span of one hour, I got rate limited.

This was evident because the modal, instead of showing the copy button with a new link, showed an error message. I found out this limitation was account-specific, and switching to another account meant you could go all the way again. I felt quite good about myself since this was one of my first web scraping / web automation projects, and getting rate limited by Google seemed like quite an achievement.

Some Interesting Observations

- Google doesn't use the letter L in any of its link patterns

- Google avoids common words in its links
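If you have your own pile of generated codes, an observation like the first one is easy to check. The sketch below simply counts letters across a list of codes; the function names are my own, and it assumes the codes are already loaded into a list. Keep in mind that with only a handful of codes, plenty of letters will be missing purely by chance, so this only means something over thousands of links.

```python
import string
from collections import Counter
from typing import Iterable, List


def letter_counts(codes: Iterable[str]) -> Counter:
    """Count how often each letter a-z appears across all codes."""
    counts: Counter = Counter()
    for code in codes:
        counts.update(ch for ch in code.lower() if ch in string.ascii_lowercase)
    return counts


def unused_letters(codes: Iterable[str]) -> List[str]:
    """Return the letters a-z that never appear in any of the codes."""
    counts = letter_counts(codes)
    return [ch for ch in string.ascii_lowercase if counts[ch] == 0]
```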

GitHub

The GitHub repo for the completed project is available here. Run laterMethod.py to use the Homepage method.

Get In Touch

👋 Hi, I’m @DaKheera47, you may know me as Shaheer Sarfaraz

👀 I’m interested in making web apps in React and Node, and you can view more of my projects here

💻 I'm also passionate about automating everything to improve my day-to-day life

🌱 Currently, I’m working as a Full Stack Web Developer at Project Mirage, a Design And Development Consultancy

📫 I'm free to talk any time on my email address, shaheer30sarfaraz@gmail.com

Written by

Shaheer Sarfaraz

I'm a Pakistani teenager with a passion for web development and automation