2. Cryptography - Concept of Information, Entropy and more on Hashing

Harsh Sharma


Information

The word "information" in communication theory relates not so much to what you do say as to what you could say.

- The more choices we can make, the greater the amount of information.

- The more predictable a message is, the less information it conveys.

Example:

1. Bob is going.

2. Bob is going to the market.

Here, sentence 2 has already revealed the destination, so less remains uncertain about what Bob is doing. Sentence 1 is more generic: it leaves more possible cases open than sentence 2.
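The link between "number of choices" and "amount of information" can be sketched in a few lines. This is a minimal illustration, assuming all choices are equally likely; the function name `bits_for_choices` and the example counts are hypothetical, not from the original post.

```python
import math


def bits_for_choices(num_choices: int) -> float:
    """Bits needed to single out one of `num_choices` equally likely options."""
    if num_choices < 1:
        raise ValueError("num_choices must be at least 1")
    return math.log2(num_choices)


# Hypothetical counts: if 8 equally likely cases remain open, naming one
# of them conveys 3 bits. If only 1 case is possible, nothing is left to
# learn, so 0 bits are conveyed.
print(bits_for_choices(8))  # 3.0
print(bits_for_choices(1))  # 0.0
```

Doubling the number of open choices adds exactly one bit, which is why information is measured on a logarithmic scale.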

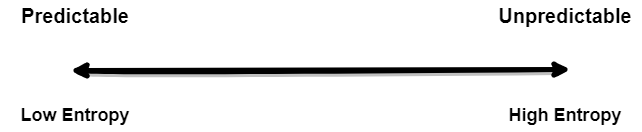

Entropy

Entropy: the amount of information conveyed by a choice, measured in bits.

For outcomes with probabilities $p_i$, the standard (Shannon) form is:

$$H = -\sum_{i} p_i \log_2(p_i)$$

Here, the quantity inside $\log()$ is the probability of each outcome.
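Entropy in bits is commonly computed with Shannon's formula, H = -Σ p · log2(p), summed over the probability p of each outcome. The sketch below assumes that formula; the function name `shannon_entropy` and the example distributions are illustrative choices.

```python
import math


def shannon_entropy(probabilities):
    """Shannon entropy in bits of a discrete probability distribution."""
    if not math.isclose(sum(probabilities), 1.0):
        raise ValueError("probabilities must sum to 1")
    # Outcomes with probability 0 contribute nothing, so they are skipped.
    return sum(-p * math.log2(p) for p in probabilities if p > 0)


fair_coin = shannon_entropy([0.5, 0.5])    # 1.0 bit: maximally unpredictable
biased_coin = shannon_entropy([0.9, 0.1])  # roughly 0.469 bits: more predictable
print(fair_coin, biased_coin)
```

The biased coin has lower entropy than the fair one, which matches the idea that a more predictable source conveys less information.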

Relation between Hashing and Entropy

To satisfy the conditions of an ideal hash function, we want its output to be as unpredictable as possible!

Now, how can something be more unpredictable? How is a person unpredictable? Simply put: the more possible (and equally likely) outcomes there are, the more unpredictable it is!

Collision resistance: the property that it is hard to find two different inputs that produce the same hash.

A hash function should be as collision-resistant as possible!

Therefore, connecting entropy and hashing: a hash function's output should have high entropy, i.e. as many possible, equally likely hashes as it can, to minimize the probability of a hash collision.
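The effect of the output space on collisions can be seen with a small experiment. This is a sketch under stated assumptions: it truncates SHA-256 (from Python's standard `hashlib`) to a chosen number of bits to simulate hash functions with fewer possible outputs; the helper names `truncated_hash` and `count_collisions` are hypothetical.

```python
import hashlib


def truncated_hash(data: bytes, bits: int) -> int:
    """Keep only the top `bits` bits (1 to 256) of the SHA-256 digest."""
    digest = hashlib.sha256(data).digest()
    return int.from_bytes(digest, "big") >> (256 - bits)


def count_collisions(num_inputs: int, bits: int) -> int:
    """Hash the strings "0", "1", ... and count repeated hash values."""
    seen = set()
    collisions = 0
    for i in range(num_inputs):
        h = truncated_hash(str(i).encode(), bits)
        if h in seen:
            collisions += 1
        else:
            seen.add(h)
    return collisions


# With 8 bits there are only 256 possible hashes, so 1000 distinct inputs
# must collide many times. With 64 bits the output space is so large that
# a collision among 1000 inputs is extremely unlikely.
print(count_collisions(1000, 8))
print(count_collisions(1000, 64))
```

With only 256 possible outputs, at least 744 of the 1000 inputs have to land on an already used hash, whatever the hash function is. Enlarging the output space is what makes collisions rare.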

