Learning about time complexity the hard way

Yash Thakar

Yash Thakar

Introducing the Plot

I am nothing but a beginner in programming with some knowledge of C and Python under my belt, and some DSA lessons that I have been studying, as odd of a choice as it might be to go start solving problems on the project euler website, I did it, with utterly unjustified confidence, while I did get through the first two problems, problem 3 brought me down an internet rabbit hole and some lessons on what time complexity is, The goal of this article is to serve as an interesting read and tool to ignite curiosity.

Here is the aforementioned problem

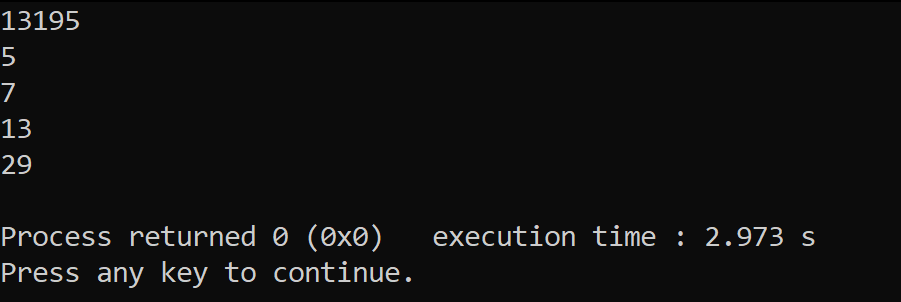

The prime factors of 13195 are 5, 7, 13 and 29. What is the largest prime factor of the number 600851475143 ?

and I did write code for the problem as shown below, but

Algorithm:

First, take the number n as input.

Then use a for loop to iterate the numbers from 1 to n

Then check for each number to be a prime number. If it is a prime number, divide n by it

If the remainder is zero print it.

You will get a ascending list of all prime factors the last one on the list is simply the largest.

Code in C:

#include<stdio.h>

int main()

{

long long int n , i, j, count;

scanf("%d", &n);

for(i=1; i<=n; i++)

{

count = 0;

for(j=2; j<=i-1; j++)

{

if(i % j == 0)

count++;

}

if (count == 0)

{

if(n % i == 0)

printf("%lld\n",i);

}

}

return 0;

}

I/O:

upon entering the example of n = 13195 the code works but you quickly run in to trouble trying to find the answer for n = 600851475143, see what happens for yourself.

The code goes for an infinite loop? No, it cant be I specifically used long long int as the data type to keep that massive number within range and it did work for n=13195. so what went wrong? the code just took too long to run, yes that bit of code in c, which is quite a fast programming language, simply has too many operations for the code to run in a reasonable amount of time; now I am sure the there are quite a lot of solutions to this problem of project Euler online but the part I got most interested in, trying to optimize the code by myself, is the part I want to talk about (see code below)

//just a snippet from the code in snippet 1

//not supposed to be complete

for(i=1; i<=n; i++)

{

count = 0;

for(j=2; j<=i-1; j++)

{

if(i % j == 0)

count++;

}

.

.

.

.

This nested for loop goes to check if the number is prime or not if it is prime and if so it increases the value of the variable count by one. Since the code runs by simple brute force, a logic so simple yet involving massive amount of calculations; for it to check if the number in this case the current value of variable 'i' is a prime number or not it simply divides the number by all the numbers from 2 to 'i-1', for the number to be prime, the number cannot be divisible by anything but 1 and itself and since those number are excluded from the for-loop, a prime number will not increment the variable count and composite number will do so.

Now since we are just trying to optimize the part of code that checks whether the number to be divided with the given number is prime or not, and does so for all numbers from '1 to n', lets look at a more specific code, below, one that prints all primes in a range from '1 to n'.

#include<stdio.h>

void main()

{

int i, j, n, count;

printf("Enter the range: \n");

scanf("%d", &n);

printf("The prime numbers in between the range 1 to %d:\n",n);

for(i = 1; i<=n; i++)

{

count = 0;

for(j=2; j<i; j++)

{

if(i%j==0)

//since prime numbers are only divisible by one and themselves

//the count will only increase if the number is not a prime

count++;

}

if(count==0 && i!= 1)

printf("%d\n",i);

}

}

here if n=100, 98 divisions will be performed for the if statement inside the nested for loop for n=1000 that's 998 calculations for a million that's two less than a million calculations JUST WHEN CHECKING IF THE LAST NUMBER IN THE RANGE IS PRIME OR NOT and given these calculations are performed in a sequential manner the code for anything larger than 5-6 digit numbers takes more than a reasonable amount of time to run.

Time Complexity

But first lets browse the web to try and understand time complexity,

The time complexity of an algorithm quantifies the amount of time taken by an algorithm to run as a function of the length of the input. Note that the time to run is a function of the length of the input and not the actual execution time of the machine on which the algorithm is running on.

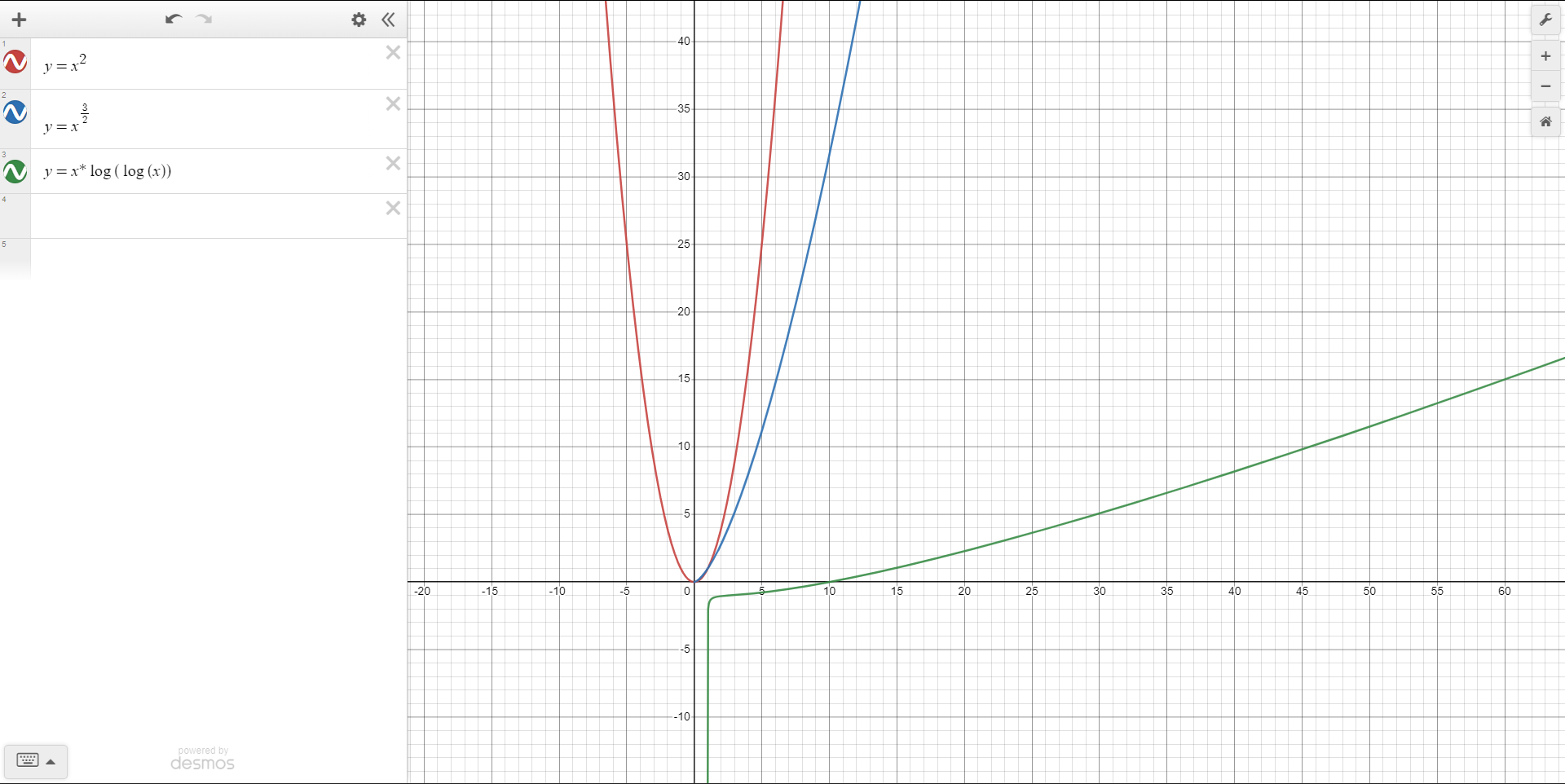

Now this is no tutorial on understanding time complexity and neither do I understand the technical depths of it. And regardless of how much you understand Time Complexity here's what you need to know, Time complexity will be evaluated for the algorithm, denoted by O(x) based on the length of the input the graph below shows some time complexity graphs the faster and the higher the CPU operations increase with respect to the length of the input the worse the algorithm is.

Our original algorithm obtained by brute force will have a time complexity of O(N^2). Now there are multiple approaches to optimize the algorithm to print prime numbers in a given range a lot of them

Since the number ‘n’ cannot be divided by any number greater than ‘n/2’. So we only need to iterate up to n/2.[Time Complexity: O(N^2)]

If n is not divided by any number less than or equals to the square root of n then, it will not be divided by any number greater than the square root of n. So, we only need to check up to the square root of n.[[Time Complexity: O(N^3/2)]]

refer here to get in depth codes and explanation

Sieve of Eratosthenes

But the most interesting of all and the method with the most understated logic of all simply skip the the multiples of each prime, starting with the first prime number, 2. The multiples of a given prime are generated as a sequence of numbers starting from that prime. This is the sieve's key distinction from using trial division to sequentially test each candidate number for divisibility by each prime.

You can learn more about it here and here

Here is a gif thoroughly demonstrating this method

This simple trick reduces the Time complexity to O(n*log(log(n))).

Here a look at the difference in Time Complexity Charts between all three approaches

In Desmos:

Just goes to show how seemingly random and little things in mathematics can speed up processes and help improve algorithms and how a little curiosity to explore out of the box leads to internet rabbit holes with a lot to learn. The first code I wrote to solve problem 3 on the project euler website lead me to learning on all this. Hope this helps you too.

And yes speaking of the code that started it all as I was writing this blogpost with the initial code running in the background. I got one more prime factor, yeah that code is really really that slow.

Subscribe to my newsletter

Read articles from Yash Thakar directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by