Database Buffer Pool - Part 2

Samuel Sorial

Samuel Sorial

Introduction

As explained in Database Buffer Pool - Part 1, a buffer pool is a limited chunk of memory, which means that whenever we bring something from the disk, we need to evict something from the buffer pool and replace it. Buffer replacement is one of the most important topics for memory management in DBMS.

Operating-System Page Cache

Before digging into buffer pool replacement policies, we should know that there's another layer of caching, which is the OS page cache. When using OS API to do disk operations, the OS maintains its own filesystem cache, however, this cache can be bypassed. In our case, it's more common to bypass this cache, in order to avoid having a memory full of the same pages cached in different layers. Also, it allows the DBMS to manage its eviction policy, and improve its durability and recovery policy.

It is worth knowing that in case of using a DBMS that's not using OS cache, it requires giving it more memory to have sufficient cache, however, if DBMS is using OS cache, it requires less memory, therefore OS can have memory to manage his cache (like PostgreSQL).

Buffer Replacement Policies

Replacement policy is an algorithm that's used to make the decision about which page to evict. It should be correct, accurate, speedy, and doesn't consume too much memory on meta-data.

1- Least Recently Used (LRU)

LRU stores a timestamp of the last access of each page. When eviction is required, it selects the page with the oldest timestamp and evicts it. This meta-data can be stored in another data structure to allow sorting & improve efficiency.

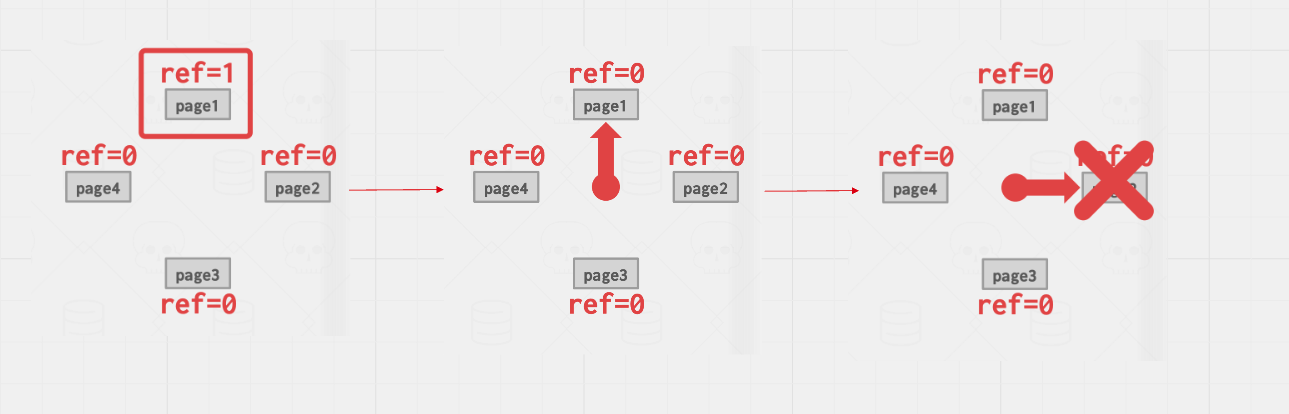

2- Clock

It's an approximation of LRU, but in this case, we don't store a timestamp, we store a reference bit. When a page is accessed, this bit is set to 1. It organizes the pages in a circular buffer with a clock hand, in case we need to evict, start sweeping, if the page bit is set to 1, then set it to 0, if it's 0, we can evict it!

Problems with LRU and Clock

Those 2 algorithms are prone to sequential flooding. Since sequential scans require all pages to be processed, it will flood the buffer pool with all pages, and in this case, timestamp or reference bit is not a great indicator of future need for it. As they were just used and will not be used in near future.

3- LRU-K

Instead of tracking only 1 timestamp, we keep track of the last K page references, whenever it needs to evict a page, it computes the interval between subsequent accesses, then it's used to predict future accesses. It prevents sequential flooding because in this case, the interval between accessing the same page is bigger than other pages used frequently, so it's evicted.

Optimizations

DBMS knows about the context of each page accessed during query execution, which enables it to provide hints for the buffer pool about which pages are important. Keep in mind that those hints might be respected from the buffer pool side or in some cases, it might be evicted depending on the situation.

References

- CMU15-445/645 Database Systems lecture notes. Retrieved from: 15445.courses.cs.cmu.edu/fall2022

Subscribe to my newsletter

Read articles from Samuel Sorial directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Samuel Sorial

Samuel Sorial

A software engineer who is enthusiast about back-end engineering and system design with a flexible mindset. I love playing with frameworks and moving between the languages just like an artist playing