Syncing development configs across repositories

Dirk de Visser

At Lightbase we maintain quite a number of platforms that largely use the same stack. As a result, they share a lot of developer configuration files, such as ESLint configurations and GitHub Actions workflow files. This post shows how we keep those configuration files in sync, and how you can do that too.

Before we had the sync, updating versions in our GitHub Actions workflows or adding an ESLint rule to all our repositories was quite the task. Bumping actions/setup-node in all our workflows looks simple enough:

- uses: actions/setup-node@v3.5.0
+ uses: actions/setup-node@v3.5.1

However, repeating this change across a bunch of projects, each with a few workflow files and their own edge cases, is quite error prone. And as someone who loves writing custom tooling, I knew we could do better!

It all starts with aligning the projects on the same development tooling. All backend projects use compas lint to run ESLint and Prettier, and all frontend projects use yarn format. We also have the same general deployment steps for each project. This gives the same developer experience on each project and simplifies maintaining the necessary configuration files.

Now we have a single source of truth: the sync. Whether we update GitHub Actions workflow files or add ESLint rules, the change is proposed as a pull request on all our target repositories via a single command:

compas sync

Here is how we do it.

Sync configuration

First, we selected the repositories that should be under sync. At the moment we only include the platforms that have both a backend and a frontend part, which excludes frontend-only and app-only projects. For the platform projects we define the following config:

export const repositories = [
  {
    name: "org-name/acme-backend",
    type: "backend",
    features: { /* ... */ },
  },
  {
    name: "org-name/acme-frontend",
    type: "frontend",
    features: { /* ... */ },
  },
  {
    name: "org-name/acme-app",
    type: "app",
    features: { /* ... */ },
  },
];

All code blocks combined should result in a mostly complete script. It uses ES Modules (set "type": "module" in your package.json) and requires @compas/stdlib and @octokit/rest to be installed.

For each repository under sync we specify the name, which consists of the GitHub organisation name and the repository name. We also note what type of repository it is: frontend, backend or app. Lastly, we have a features object; we will get back to that one.
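To make the shape of an entry explicit, here is a minimal sketch of a JSDoc type for it, plus a hypothetical assertValidRepository helper (not part of the sync described in this post) that catches typos in the config before anything is cloned. The owner/repo split is the same one the pull request step performs later on.

```javascript
/**
 * Shape of a single entry in `repositories` (a sketch; the sync itself does
 * not prescribe a type definition).
 *
 * @typedef {object} SyncRepository
 * @property {string} name Full name as "org-name/repo-name"
 * @property {"frontend" | "backend" | "app"} type
 * @property {Record<string, unknown>} features Per-repository feature settings
 */

const REPOSITORY_TYPES = new Set(["frontend", "backend", "app"]);

/**
 * Hypothetical helper: fail fast on config mistakes and return the
 * owner/repo parts that the GitHub API calls need.
 *
 * @param {SyncRepository} repository
 * @returns {{ owner: string, repo: string }}
 */
function assertValidRepository(repository) {
  const parts = repository.name.split("/");
  if (parts.length !== 2 || parts.some((part) => part.length === 0)) {
    throw new Error(`Expected "owner/repo", got "${repository.name}"`);
  }
  if (!REPOSITORY_TYPES.has(repository.type)) {
    throw new Error(`Unknown type "${repository.type}" for ${repository.name}`);
  }
  if (typeof repository.features !== "object" || repository.features === null) {
    throw new Error(`Missing features object for ${repository.name}`);
  }

  const [owner, repo] = parts;
  return { owner, repo };
}
```

Calling repositories.forEach(assertValidRepository) at the start of the script would stop the run before any Git command is executed.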

Cloning the repositories

We opted to run the sync on local clones of the repositories. The alternative was the GitHub Git trees API. Working with the Git trees API should result in a faster sync, but it is yet another API for contributors to the sync to learn. Cloning the repositories does involve some extra state on disk, like ensuring that the checkout is on the correct branch. Another reason is that we wanted to integrate yarn upgrade-interactive, which requires that all dependencies are already resolved and installed. We win back some of the lost speed by caching the checkouts and updating the caches with a simple git fetch.

Note that the code blocks assume basic knowledge of Git commands. If you are unsure what a command or option does, look it up on explainshell.com.

Cloning the repositories then looks like this:

import { mkdir } from "node:fs/promises";
import { existsSync } from "node:fs";
import { pathJoin, exec, environment } from "@compas/stdlib";

// `async` is required, since this function awaits the Git commands.
export async function syncCloneRepositories() {
  const baseCheckoutPath = `.cache/checkout`;
  await mkdir(baseCheckoutPath, { recursive: true });

  for (const repo of repositories) {
    const repoPath = pathJoin(baseCheckoutPath, repo.name);

    if (existsSync(repoPath)) {
      // We already have the repo cloned.
      // Reset it to the latest main, discarding any changes; a previous sync run may have left a dirty state.
      // We can run git commands in the checkout by providing `cwd`.
      await exec(`git fetch`, { cwd: repoPath });
      await exec(`git checkout --force main`, { cwd: repoPath });
      await exec(`git reset --hard origin/main`, { cwd: repoPath });
      await exec(`git clean -f -d`, { cwd: repoPath });
    } else {
      // Clone the repository to `.cache/checkout/$org/$reponame`
      await exec(
        `git clone https://${environment.GITHUB_TOKEN}@github.com/${repo.name}.git ${repo.name}`,
        { cwd: baseCheckoutPath },
      );
    }
  }
}

Defining suites

At Lightbase we sync quite a few things, so we have separate sync suites, each creating its own pull request on the repository under sync. A suite consists of sync features that are each responsible for syncing a single item. Features can be configured via the features: { /* ... */ } object in the repositories array defined above.

export const suites = [
  {
    branch: "sync/configuration",
    features: [
      featConfigurationNodeVersionManager,
      /* ... */
    ],
  },
  {
    branch: "sync/workflows",
    features: [
      featWorkflowPullChecks,
      /* ... */
    ],
  },
];

Each suite is executed for each repository. The features themselves check whether they need to do any work for a specific repository; below we use this in the implementation of featWorkflowPullChecks, which skips repositories of type app. Each suite has its own static branch name. This allows us to create a separate pull request per suite, and to overwrite the open pull request for that branch when a sync source is updated.
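Because every feature repeats the same kind of early return, one option is to move that check into a wrapper. The onlyForTypes helper below is a hypothetical sketch, not something the sync in this post uses; it wraps any feature with the (suite, repo, repoPath) signature and skips repositories whose type is not listed.

```javascript
/**
 * Hypothetical helper (not part of the sync described in this post):
 * wraps a feature so it only runs for the listed repository types.
 *
 * @param {Array<"frontend" | "backend" | "app">} types
 * @param {(suite: object, repo: object, repoPath: string) => Promise<void>} feature
 * @returns {(suite: object, repo: object, repoPath: string) => Promise<void>}
 */
function onlyForTypes(types, feature) {
  const allowed = new Set(types);

  return async function filteredFeature(suite, repo, repoPath) {
    if (!allowed.has(repo.type)) {
      // The same early return a feature would do itself, just centralised.
      return;
    }
    await feature(suite, repo, repoPath);
  };
}
```

A suite could then list onlyForTypes(["backend", "frontend"], featWorkflowPullChecks), which makes the type check inside the feature redundant. The trade-off is that the skip logic no longer lives next to the file the feature writes.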

Implementing a feature

A sync feature updates a specific configuration file to the expected state. The simplest variant is, for example, writing a specific version to the .nvmrc file. We can just write the changes to disk and let the suite runner, which we will get to later, figure out whether something has changed.

import { pathJoin } from "@compas/stdlib";
import { writeFile } from "node:fs/promises";

async function featConfigurationNodeVersionManager(suite, repo, repoPath) {
  // Align `.nvmrc` files to Node.js 18 (it is LTS now :))
  await writeFile(pathJoin(repoPath, ".nvmrc"), "18\n");
}
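Because a feature only writes files below repoPath, it can be exercised without any Git checkout. A minimal sketch, assuming Node.js built-ins only: it swaps pathJoin for join from node:path, and runFeatureInTempDir is a hypothetical test helper, not part of the sync.

```javascript
import { mkdtemp, readFile, rm, writeFile } from "node:fs/promises";
import { tmpdir } from "node:os";
import { join } from "node:path";

// Same feature as above, using node:path `join` instead of `pathJoin`
// so this sketch runs without @compas/stdlib.
async function featConfigurationNodeVersionManager(suite, repo, repoPath) {
  await writeFile(join(repoPath, ".nvmrc"), "18\n");
}

// Hypothetical helper: run a feature against an empty temporary directory
// and return the contents of one file it is expected to write.
async function runFeatureInTempDir(feature, repo, relativePath) {
  const dir = await mkdtemp(join(tmpdir(), "sync-feature-"));
  try {
    await feature({ branch: "sync/test" }, repo, dir);
    return await readFile(join(dir, relativePath), "utf8");
  } finally {
    // Always clean up, also when the feature throws.
    await rm(dir, { recursive: true, force: true });
  }
}
```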

A more complex feature

Not all features are as straightforward as the one above. Projects may have extra requirements, use additional tooling, or for some reason not upgrade to the same Node.js version as other projects. For this example we sync (part of) a GitHub Actions workflow file. We first need to specify how this repository differs from the others. We do that via the features object in our repositories config:

export const repositories = [
  {
    name: "lightbasenl/acme-backend",
    type: "backend",
    features: {
      "nodeVersion": "16",
    },
  },
  {
    name: "lightbasenl/acme-frontend",
    type: "frontend",
    features: {
      "nodeVersion": "18",
    },
  },
];
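With this setup, any feature that interpolates repo.features.nodeVersion into a template would write the literal text undefined for a repository that leaves the setting out. One way to avoid that, sketched here as an assumption rather than how the sync in this post works, is to merge each repository's features over a shared set of defaults before running the suites. defaultFeatures and withDefaultFeatures are hypothetical names.

```javascript
// Hypothetical defaults: values a repository gets unless it overrides them
// in its own `features` object.
const defaultFeatures = {
  nodeVersion: "18",
};

/**
 * Return a copy of `repo` whose `features` contains every default, with
 * repository-specific values taking precedence. Spreading `undefined` is a
 * no-op, so repositories without a features object still get the defaults.
 */
function withDefaultFeatures(repo) {
  return {
    ...repo,
    features: { ...defaultFeatures, ...repo.features },
  };
}
```

The suite runner could then iterate over repositories.map(withDefaultFeatures), so features never have to check for missing settings themselves.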

We can use this project-specific configuration in our features like so:

// `async` is required, since this feature awaits the file system calls.
export async function featWorkflowPullChecks(suite, repo, repoPath) {
  if (repo.type === "app") {
    // Since we don't support `app` projects here, we skip syncing this feature
    return;
  }

  // Use a different lint command based on the repository type
  const lintCommand = repo.type === "frontend" ? "yarn format" : "yarn compas lint";

  // YAML is indentation sensitive, so the template keeps its indentation.
  const workflowSource = `name: "Pull request lint"
on: [pull_request]
jobs:
  lint:
    timeout-minutes: 15
    runs-on: ubuntu-latest
    permissions:
      contents: read
    steps:
      - uses: actions/checkout@v3
      - name: Node.js ${repo.features.nodeVersion}
        uses: actions/setup-node@v3.5.1
        with:
          node-version: ${repo.features.nodeVersion}
          cache: "yarn"
      - name: Run lint
        run: |
          yarn install
          ${lintCommand}
`;

  // Write inside the checkout (not relative to where the sync runs),
  // creating the workflows directory if the repository has none yet.
  await mkdir(pathJoin(repoPath, ".github/workflows"), { recursive: true });
  await writeFile(pathJoin(repoPath, ".github/workflows/pr-lint.yml"), workflowSource);
}

We have two feature configurations in the above example:

- We use a different lint command based on repo.type.
- We use a different Node.js version via repo.features.nodeVersion.
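Both configuration points can be pulled out of the template into a small pure function, which makes the per-type and per-repository decisions testable without writing a file. pullCheckSettings is a hypothetical refactor, not code from the sync itself; it returns undefined for app repositories to mirror the early return in the feature.

```javascript
/**
 * Hypothetical helper collecting the two per-repository decisions made in
 * featWorkflowPullChecks. Returns undefined for repository types the
 * feature does not support.
 *
 * @param {{ type: string, features: { nodeVersion?: string } }} repo
 * @returns {{ lintCommand: string, nodeVersion: string | undefined } | undefined}
 */
function pullCheckSettings(repo) {
  if (repo.type === "app") {
    return undefined;
  }

  return {
    // Configuration point 1: the lint command depends on the repository type.
    lintCommand: repo.type === "frontend" ? "yarn format" : "yarn compas lint",
    // Configuration point 2: the Node.js version comes from the features object.
    nodeVersion: repo.features.nodeVersion,
  };
}
```

The feature would then only be responsible for rendering these values into the YAML template and writing the file.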

Running the suites

Now that we have the features implemented, we need to call them from our suites. For each repository, a suite first checks out a new branch, then runs all its features, and finally commits and pushes the result. Let's start with how these steps are reflected in our code:

export async function syncRunSuites() {
  // The same as in `syncCloneRepositories`
  const baseCheckoutPath = `.cache/checkout`;

  for (const suite of suites) {
    for (const repo of repositories) {
      // The local checkout path of our repository
      const repoPath = pathJoin(baseCheckoutPath, repo.name);

      // Await each step: they all run in the same checkout and must not overlap.
      await syncSetupSpecificSuite(suite, repo, repoPath);
      await syncRunSpecificSuite(suite, repo, repoPath);
      await syncCreatePullForSuite(suite, repo, repoPath);
    }
  }
}
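The nesting matters: every repository is visited once per suite, so each checkout moves through several sync branches in turn. A hypothetical dry-run helper that lists those visits in the same order, without running any Git commands, could look like this. It is a sketch that uses join from node:path instead of pathJoin, and planSuiteRuns is not part of the sync.

```javascript
import { join } from "node:path";

/**
 * Hypothetical helper: list the (suite, repository) pairs in the order
 * syncRunSuites visits them. All repositories for the first suite,
 * then all repositories for the next suite, and so on.
 */
function planSuiteRuns(suites, repositories, baseCheckoutPath = ".cache/checkout") {
  const runs = [];
  for (const suite of suites) {
    for (const repo of repositories) {
      runs.push({
        branch: suite.branch,
        repository: repo.name,
        repoPath: join(baseCheckoutPath, repo.name),
      });
    }
  }
  return runs;
}
```

Printing console.table(planSuiteRuns(suites, repositories)) before a real run shows which branches will be touched in which checkouts.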

Starting a new suite doesn't have to do much. We only need to make sure that we are on a clean branch started from main.

async function syncSetupSpecificSuite(suite, repository, repoPath) {
  // Note the `-B`: it makes sure that our branch starts from `origin/main`, even if the branch already exists because of our cache.
  await exec(`git checkout -B ${suite.branch} origin/main`, { cwd: repoPath });
}

Running through all features of a specific suite can be done with a short loop. The features are responsible for checking whether they need to do anything, and should otherwise return early.

async function syncRunSpecificSuite(suite, repository, repoPath) {
  for (const feature of suite.features) {
    // Call each feature with the same (suite, repo, repoPath) arguments it is declared with;
    // the feature itself should detect whether it needs to do something
    await feature(suite, repository, repoPath);
  }
}

Finalising the suite is somewhat more work. First we check whether any files have changed in our local checkout. When git status --porcelain returns an empty result, the repository is already up to date and we are done with this suite. When the repository has changes, we commit them, push the commit and create our pull request.

import { Octokit } from "@octokit/rest";
import { environment, exec } from "@compas/stdlib";

async function syncCreatePullForSuite(suite, repository, repoPath) {
  const hasChangesResult = await exec(`git status --porcelain`, { cwd: repoPath });

  if (hasChangesResult.stdout.trim().length === 0) {
    // No changes detected.
    return;
  }

  // We have changes, so commit and push
  await exec(`git add --all`, { cwd: repoPath });
  await exec(
    `git commit -m "chore(sync): update configuration files for ${suite.branch}"`,
    { cwd: repoPath },
  );
  // The `+` in the refspec force pushes, replacing the result of a previous sync run.
  await exec(`git push -u origin +${suite.branch}`, { cwd: repoPath });

  // Initialise an Octokit client (GitHub)
  const githubApiClient = new Octokit({ auth: environment.GITHUB_TOKEN });
  const [owner, repo] = repository.name.split("/");

  // Get a list of open pull requests for this specific suite
  const { data: openPulls } = await githubApiClient.pulls.list({
    base: "main",
    owner,
    repo,
    head: `${owner}:${suite.branch}`,
    state: "open",
  });

  if (openPulls.length > 0) {
    // We have an open PR already.
    // The branch is force pushed, so we are done with this suite.
    return;
  }

  // No open pull request yet, so create one
  await githubApiClient.pulls.create({
    owner,
    repo,
    head: suite.branch,
    title: `chore(sync): update configuration files for ${suite.branch}`,
    body: "_This PR is created by sync. It will be force pushed by later runs!_",
    base: "main",
  });
}
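The check above only asks whether git status --porcelain printed anything. The same output also lists which files changed, which can be useful for logging or for the pull request description. A minimal sketch of a hypothetical parsePorcelain helper for the porcelain v1 format: each line is two status characters, a space and the path, and renames are printed as "old -> new". It returns quoted paths (Git quotes paths with special characters) as-is. Note that by default a new untracked directory is collapsed into a single entry such as ?? .github/; passing --untracked-files=all to git status lists every file instead.

```javascript
/**
 * Hypothetical helper: turn `git status --porcelain` (v1) output into a list
 * of changed paths. For renames, the new path is returned.
 *
 * @param {string} stdout
 * @returns {string[]}
 */
function parsePorcelain(stdout) {
  return stdout
    .split("\n")
    // Skip empty lines, such as the one after the trailing newline.
    .filter((line) => line.length > 3)
    .map((line) => {
      // Drop the two status characters and the separating space.
      const path = line.slice(3);
      const arrow = path.indexOf(" -> ");
      return arrow === -1 ? path : path.slice(arrow + " -> ".length);
    });
}
```

With this, the early return becomes parsePorcelain(hasChangesResult.stdout).length === 0. Do not trim the whole output before parsing, since a leading space is part of the status code of the first line.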

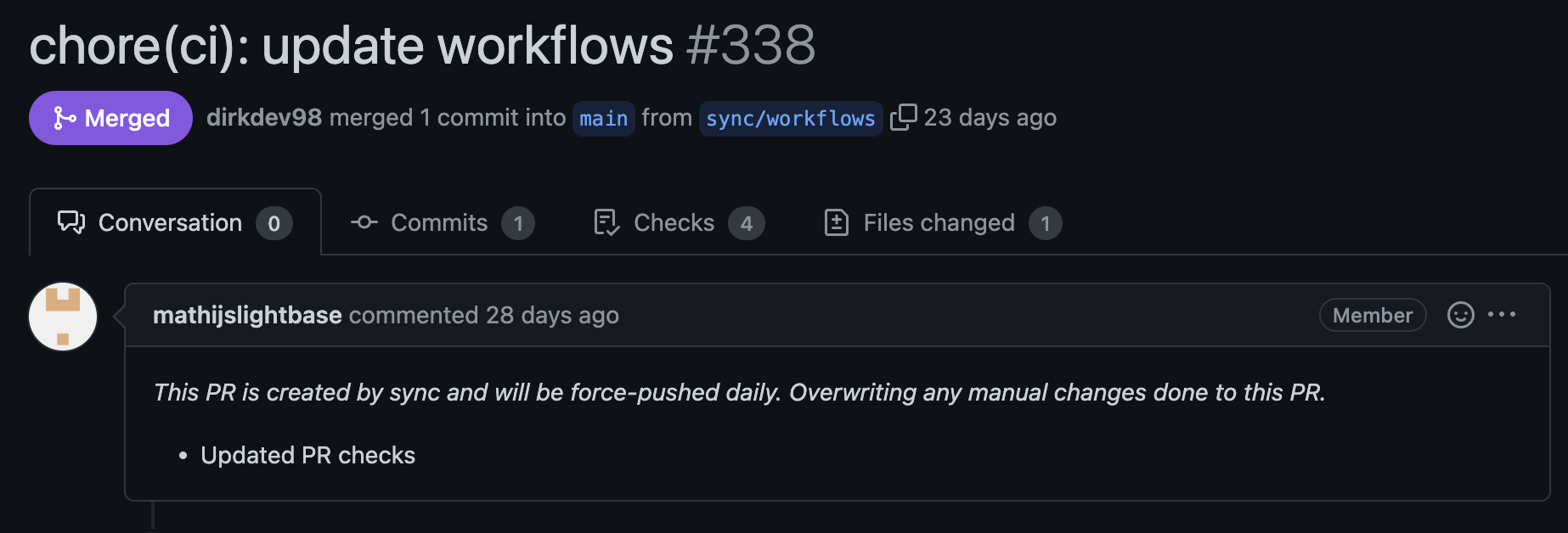

That was quite the experience, resulting in a pull request that could look like this one.

Final result

With all this in place we can create the final script that is executed and run it with node sync.js.

// sync.js
import { mainFn } from "@compas/stdlib";

mainFn(import.meta, main);

async function main() {
  await syncCloneRepositories();
  await syncRunSuites();
}

And we are ready to sync! Updating the Node.js version is now a one-line change, followed by running the sync and merging a few pull requests. Updating the version of actions/setup-node only needs to happen in a single place; run the sync and off you go. This saves a lot of manual cloning and searching for what needs to change and where, and it avoids the mistakes that are so easy to make when doing all of that by hand.

Further improvements

There are various ways to improve this sync. First of all, we can run this script on GitHub Actions as well, for example daily or weekly:

name: Sync

on:
  schedule:
    # Run the sync daily on workdays at 4 AM (UTC)
    - cron: "0 4 * * 1-5"
    # Or weekly on Sunday at 4 AM (UTC)
    # - cron: "0 4 * * 0"

We can also improve local debugging of the sync by creating Git diffs instead of opening pull requests when the script is used locally. This allows for local experimentation and validation of new or updated sync features.
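A minimal sketch of such a local mode, using only Node.js built-ins. The CI check relies on GitHub Actions setting CI=true; outputModeFor, diffPathFor, syncWriteDiffForSuite and the .cache/diffs location are hypothetical names chosen for illustration. The write step stages everything first, so newly created files also show up in git diff --cached, and then resets the checkout so the next suite starts clean.

```javascript
import { execFile } from "node:child_process";
import { mkdir, writeFile } from "node:fs/promises";
import { dirname, join } from "node:path";
import { promisify } from "node:util";

const execFileAsync = promisify(execFile);

// Assumption: GitHub Actions sets CI=true, so any other value means a local run.
function outputModeFor(env) {
  return env.CI === "true" ? "pull-request" : "diff";
}

// Hypothetical location: .cache/diffs/<org>/<repo>/<branch with "/" replaced by "-">.patch
function diffPathFor(baseDiffPath, repoName, branch) {
  return join(baseDiffPath, repoName, `${branch.replace(/\//g, "-")}.patch`);
}

/**
 * Hypothetical local counterpart of syncCreatePullForSuite: instead of
 * committing, pushing and opening a pull request, write the changes to a
 * patch file. Returns the patch path, or undefined when nothing changed.
 */
async function syncWriteDiffForSuite(suite, repository, repoPath, baseDiffPath = ".cache/diffs") {
  // Stage everything so new (untracked) files are part of the diff as well.
  await execFileAsync("git", ["add", "--all"], { cwd: repoPath });
  const { stdout } = await execFileAsync("git", ["diff", "--cached"], {
    cwd: repoPath,
    maxBuffer: 64 * 1024 * 1024,
  });

  // Discard the staged changes again so the next suite starts from a clean checkout.
  await execFileAsync("git", ["reset", "--hard"], { cwd: repoPath });

  if (stdout.length === 0) {
    return undefined;
  }

  const target = diffPathFor(baseDiffPath, repository.name, suite.branch);
  await mkdir(dirname(target), { recursive: true });
  await writeFile(target, stdout);
  return target;
}
```

The runner could pick syncWriteDiffForSuite or syncCreatePullForSuite based on outputModeFor(process.env). A resulting patch can be reviewed as-is, or applied to a fresh checkout with git apply.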

When you have quite a few projects under sync, it takes time to run through them all. At that point you may want to filter which repositories the sync runs on. You can do this easily with, for example, the @compas/cli package.

/**
 * Sync command definition
 *
 * @type {CliCommandDefinitionInput}
 */
export const cliDefinition = {
  name: "sync",
  shortDescription: "Run the sync",
  flags: [
    {
      name: "repositories",
      rawName: "--repository",
      description: "Optional repository selection",
      modifiers: {
        isRepeatable: true,
      },
      value: {
        specification: "string",
      },
    },
  ],
};
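Whatever the CLI package does with the flag, the selection itself is a small filter. This is a sketch assuming the repeated --repository values arrive as an array of full names such as org-name/acme-backend; how @compas/cli hands parsed flags to the command is not covered here, and selectRepositories is a hypothetical name.

```javascript
/**
 * Hypothetical helper: narrow `repositories` down to the names passed via
 * repeated `--repository` flags. No selection means "sync everything";
 * unknown names throw, so a typo does not silently skip a repository.
 *
 * @param {Array<{ name: string }>} repositories
 * @param {string[] | undefined} selected
 */
function selectRepositories(repositories, selected) {
  if (!selected || selected.length === 0) {
    return repositories;
  }

  const known = new Set(repositories.map((repo) => repo.name));
  const unknown = selected.filter((name) => !known.has(name));
  if (unknown.length > 0) {
    throw new Error(`Unknown repositories: ${unknown.join(", ")}`);
  }

  const wanted = new Set(selected);
  // Keep the configured order, regardless of the order of the flags.
  return repositories.filter((repo) => wanted.has(repo.name));
}
```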

Or maybe you want to add details of what is synced to the pull request, or even replace all those Dependabot pull requests with a single pull request via the sync, like we do! There is a lot of potential to simplify the maintenance of projects. At Lightbase we have been using this setup for a few months now and it has saved us quite a lot of manual work, but we are always looking for more things to add to the sync. Feel free to share your ideas below!
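For the first idea, a hypothetical buildPullBody helper could keep the existing force-push warning and append the list of changed files, for example the paths reported by git status --porcelain. For a pull request that is already open, Octokit's pulls.update accepts a body as well, so the description could be refreshed on every run. This is a sketch, not part of the sync described above.

```javascript
/**
 * Hypothetical helper: build a pull request body that keeps the
 * force-push warning and lists the files the sync touched.
 *
 * @param {string[]} changedFiles
 * @returns {string}
 */
function buildPullBody(changedFiles) {
  const lines = ["_This PR is created by sync. It will be force pushed by later runs!_"];

  if (changedFiles.length > 0) {
    // A blank line, a short heading and one Markdown list item per file.
    lines.push("", "Changed files:", ...changedFiles.map((file) => `- \`${file}\``));
  }

  return lines.join("\n");
}
```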
