Exporting data from BigTable and Loading it into your Data Warehouse

Fritz Larco

Fritz LarcoTable of contents

BigTable

You may not have heard about Google’s BigTable but it is a thriving NoSQL database offering from the juggernaut, allowing exceptional read/write performance when properly tuned. According to Google Cloud’s own blog post, BigTable manages over 10 Exabytes of data and serves more than 5 billion requests per second. It also offers various features such as auto-scaling with the goal of optimizing costs and improved manageability.

Extract-Load Process

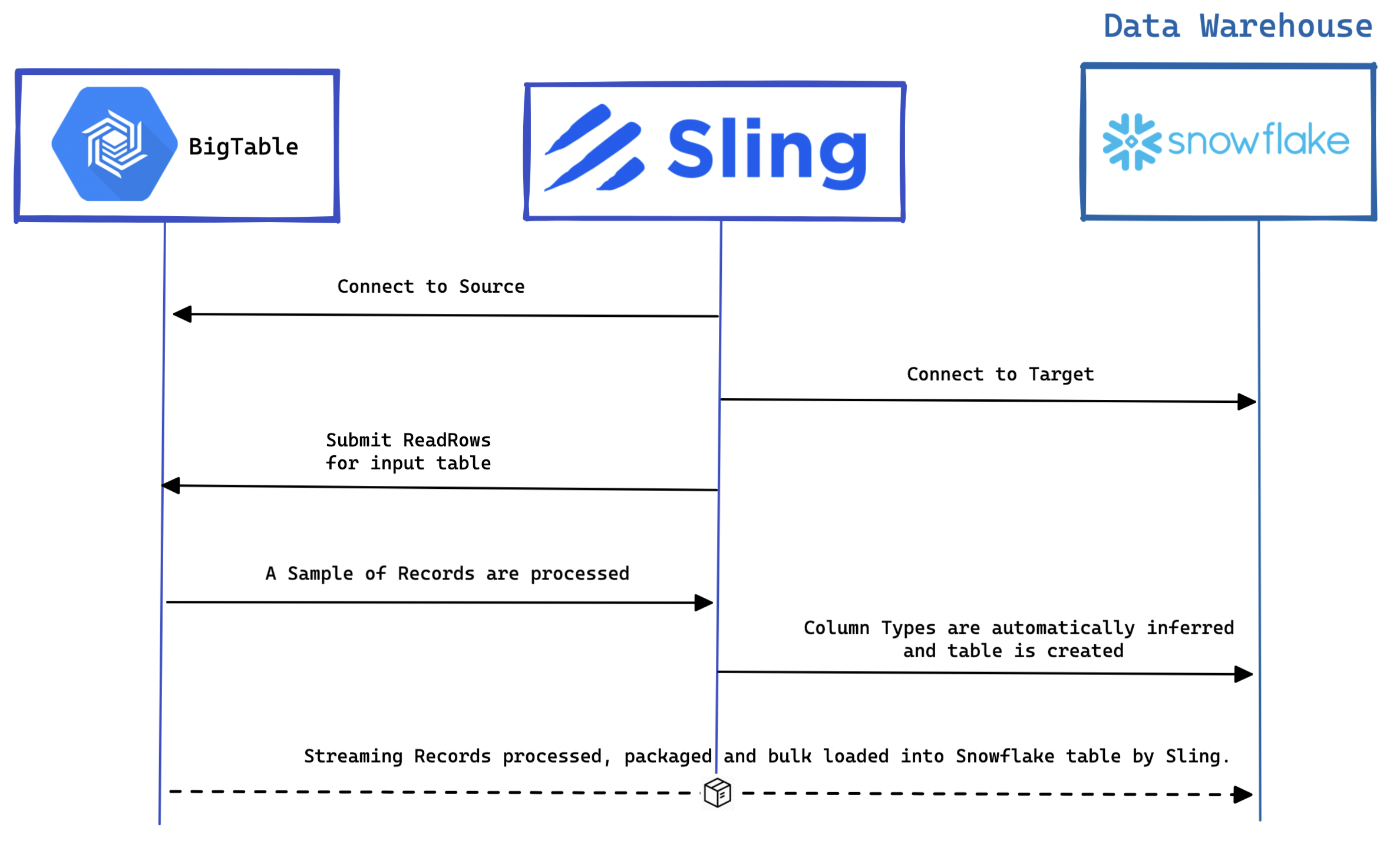

So you have a situation where you need to copy or move data out of BigTable and import it into your Data Warehouse. How to proceed? Through various Google search, it is apparent that there is a lack of tooling that properly handle BigTable as a source. Fortunately, this is not the case for Sling. The go-powered Extract-Load (EL) tool, allows you to natively connect to BigTable and export the unstructured data sets in a tabular format (CSV, TSV) or into the most popular RDBMS databases such as PostgreSQL, BigQuery, Redshift, SQL Server and Snowflake. See below for an illustration of how Sling does this when importing into Snowflake.

Using Sling CLI

Sling CLI is a command line tool (as the name suggests), which allows you great flexibility for custom automation, at the expense of doing things a little bit more manually. The first step is to install sling. Since it is built in Go, it is offered as a binary for whatever system you are using.

# On Mac

brew install slingdata-io/sling/sling

# On Windows Powershell

scoop bucket add org https://github.com/slingdata-io/scoop-sling.git

scoop install sling

# Using Python Wrapper via pip

pip install sling

If you have a Linux system or desire to download the binary manually , please head over here. Once you have it downloaded, the next step is to set your credentials. Sling primarily uses a env.yaml file located in the ~/.sling/ folder. Here is an example:

~/.sling/env.yaml

connections:

MY_BIGTABLE:

type: bigtable

project: sling-project-123

location: US

instance: big-table-instance

gc_key_file: /Users/me/.sling/sling-project-123-ce219ceaef9512.json

MY_SNOWFLAKE:

type: snowflake

username: fritz

password: my_pass23

account: abc123456.us-east-1

database: sling

schema: publicOnce the credentials are set, we can test connectivity with the sling conns test command:

$ sling conns list

+---------------+----------------+----------------+

| CONN NAME | CONN TYPE | SOURCE |

+---------------+----------------+----------------+

| MY_BIGTABLE | DB - BigTable | sling env yaml |

| MY_SNOWFLAKE | DB - Snowflake | sling env yaml |

+---------------+----------------+----------------+

$ sling conns test MY_BIGTABLE

5:13PM INF success!

$ sling conns test MY_SNOWFLAKE

5:14PM INF success!

Great, now we are ready to run our Extract-Load (EL) task.

$ sling run --src-conn MY_BIGTABLE --src-stream test_table3 --tgt-conn MY_SNOWFLAKE --tgt-object public.test_table3 --mode full-refresh

0:08AM INF connecting to source database (bigtable)

10:08AM INF connecting to target database (snowflake)

10:08AM INF reading from source database

10:08AM INF writing to target database [mode: full-refresh]

10:09AM INF streaming data

1s 10,000 33798 r/s

10:09AM INF dropped table public.test_table3

10:09AM INF created table public.test_table3

10:09AM INF inserted 10000 rows in 11 secs [901 r/s]

10:09AM INF execution succeeded

To learn more about Sling CLI, please see here.

Conclusion

We have demonstrated an easy way to move your data from BigTable to Snowflake. Similar steps can be used for any other destination databases supported by Sling. To see a list of compatible databases, please visit this page.

Subscribe to my newsletter

Read articles from Fritz Larco directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Fritz Larco

Fritz Larco

A technical data engineer / passionate technologist who loves tackling interesting problems with code, exploration & persistence.