Containerizing the Consumer

Mladen Drmac


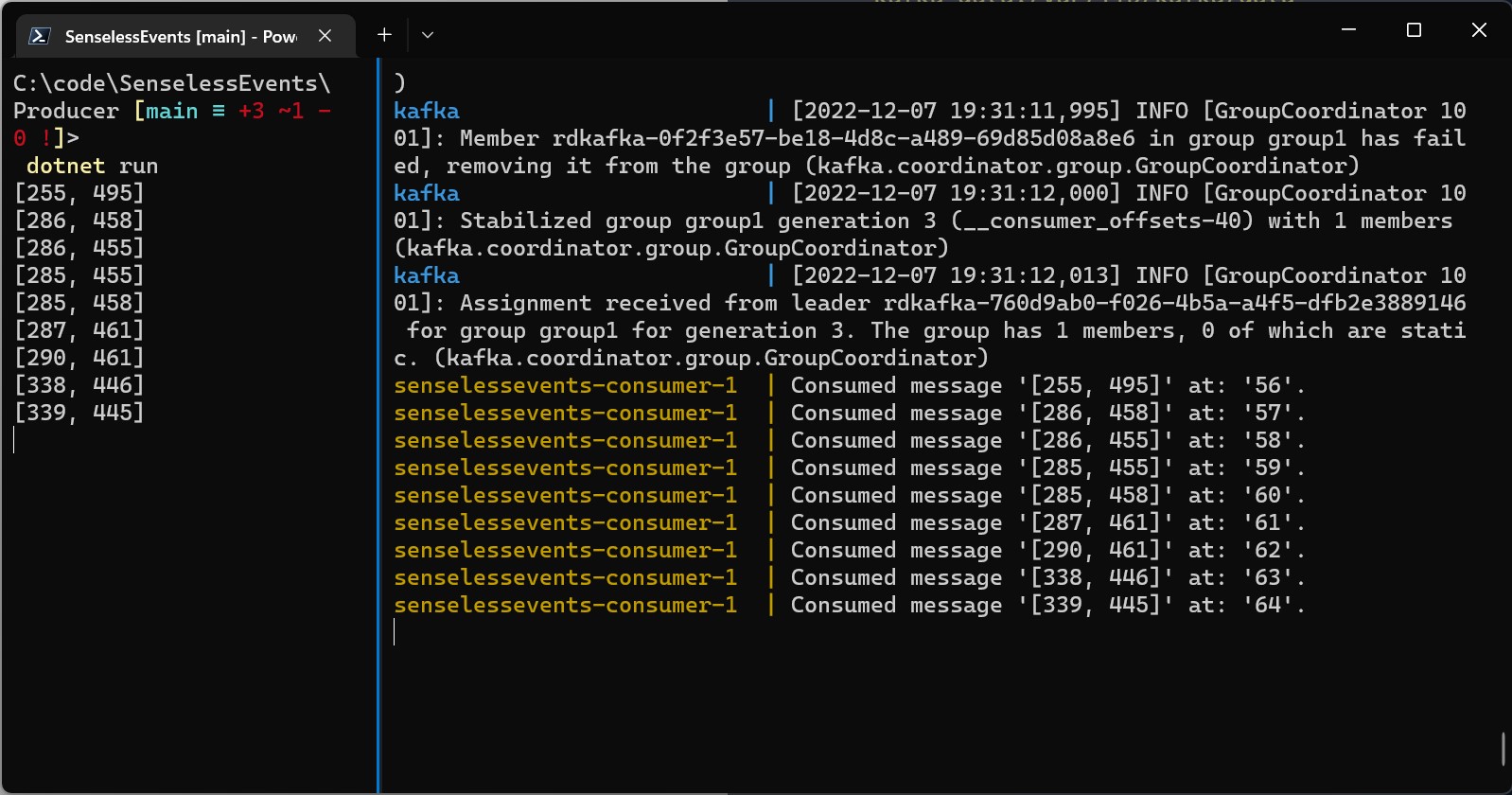

Our system currently consists of two .NET console applications that communicate through Kafka and ZooKeeper, both of which run inside Docker containers. Not bad for a start, but we can do better.

We want to have a fully scalable, easily deployable distributed system, and that's not possible if it only works on my machine.

The producer is currently bound to Windows because it uses P/Invoke to read the mouse position, but the consumer can be turned into a nice little Docker container, so let's do that.

By the way, containerization is another topic that I plan to write a deep dive series about, but let's focus on practical matters for now.

To create a Docker image for the app, we need a Dockerfile. It's also useful to add a .dockerignore file that lists the files and folders to exclude from the build context when building the image.

```dockerfile
FROM mcr.microsoft.com/dotnet/sdk:7.0 AS build-env
WORKDIR /App
COPY . .
RUN dotnet publish -c Release --os linux -o out

FROM mcr.microsoft.com/dotnet/runtime:7.0 AS run-env
WORKDIR /App
COPY --from=build-env /App/out .
ENTRYPOINT ["dotnet", "Consumer.dll"]
```

This is a multi-stage Dockerfile. The full .NET SDK base image compiles and publishes the code (in the Release configuration, targeting Linux), and only the much smaller .NET runtime base image is used to run it, so the final image doesn't carry the SDK.

We can test this Dockerfile by building the image:

```shell
docker build -t consumer .
```

Now we have an image and need to create a container from it. Rather than writing yet another docker run command, we can go a step further and create a docker-compose file. It lists all the services in our system, and Docker Compose takes care of starting them all up.

```yaml
version: '3.8'

services:
  zookeeper:
    image: confluentinc/cp-zookeeper
    container_name: zookeeper
    volumes:
      - zookeeper-data:/var/lib/zookeeper/data
      - zookeeper-log:/var/lib/zookeeper/log
      - zookeeper-secrets:/etc/zookeeper/secrets
    ports:
      - 2181:2181
    environment:
      - ZOOKEEPER_CLIENT_PORT=2181
      - ZOOKEEPER_TICK_TIME=2000

  kafka:
    image: confluentinc/cp-kafka
    container_name: kafka
    volumes:
      - kafka-data:/var/lib/kafka/data
      - kafka-secrets:/etc/kafka/secrets
    ports:
      - 9092:9092
    environment:
      - KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR=1
      - KAFKA_ZOOKEEPER_CONNECT=zookeeper:2181
      - KAFKA_LISTENERS=PRODUCERS://kafka:9092,CONSUMERS://kafka:29092
      - KAFKA_ADVERTISED_LISTENERS=PRODUCERS://localhost:9092,CONSUMERS://kafka:29092
      - KAFKA_LISTENER_SECURITY_PROTOCOL_MAP=PRODUCERS:PLAINTEXT,CONSUMERS:PLAINTEXT
      - KAFKA_INTER_BROKER_LISTENER_NAME=CONSUMERS
    depends_on:
      - zookeeper

  consumer:
    build:
      context: .
      dockerfile: Consumer/Dockerfile
    depends_on:
      - zookeeper
      - kafka

volumes:
  zookeeper-data:
  zookeeper-log:
  zookeeper-secrets:
  kafka-data:
  kafka-secrets:
```

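For this to work, I had to experiment with Kafka's settings quite a lot. Because Kafka is distributed, connecting to the broker is not as simple as specifying an address and port. A client uses the bootstrap address only for its first connection and then talks to whatever addresses the broker advertises, so those addresses must be reachable from wherever the client runs. I will explain the details in one of the Kafka deep dives, I promise.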
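To make the two listeners a bit more concrete, here is a small hypothetical helper (the BrokerAddress class and its members are my own names, not part of the project) that encodes which address a client should bootstrap from, based on the KAFKA_ADVERTISED_LISTENERS line in the compose file. It's a sketch of the idea, not code the consumer actually contains.

```csharp
// Hypothetical helper, not part of the original project: it only encodes which
// listener from docker-compose.yml a client should bootstrap from.
public static class BrokerAddress
{
    // PRODUCERS listener: published on host port 9092 and advertised as localhost:9092,
    // so it works for clients running directly on the host, like the Windows-bound producer.
    public const string FromHost = "localhost:9092";

    // CONSUMERS listener: advertised as kafka:29092. The service name "kafka" only
    // resolves inside the Compose network, so this works for clients in containers.
    public const string FromComposeNetwork = "kafka:29092";

    // Picks the listener address that matches where the client is running.
    public static string For(bool runningInContainer) =>
        runningInContainer ? FromComposeNetwork : FromHost;
}
```

Whichever listener a client connects to, the broker answers with that listener's advertised address. That is why the containerized consumer can't reuse localhost:9092: inside its container, localhost is the container itself, not the Kafka broker.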

The Consumer.cs file had to change as well, so that the consumer connects to Kafka through the internal address (the CONSUMERS listener, kafka:29092).

```csharp
// Consumer.cs
...
var kafkaConfig = new ConsumerConfig
{
    ...
    BootstrapServers = "kafka:29092",
    ...
};
...
```
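Hardcoding kafka:29092 ties Consumer.cs to the Compose setup. One option is to read the address from an environment variable and fall back to the internal address. This is a sketch under my own assumptions: the KafkaSettings class and the KAFKA_BOOTSTRAP_SERVERS variable name are hypothetical, not something the project or Confluent.Kafka defines, and the group id is simply passed in by the caller.

```csharp
using System;
using Confluent.Kafka;

// Hypothetical helper: neither this class nor the KAFKA_BOOTSTRAP_SERVERS
// variable exists in the original project; both are assumptions for this sketch.
public static class KafkaSettings
{
    // Internal CONSUMERS listener from docker-compose.yml, used when nothing is configured.
    public const string DefaultBootstrapServers = "kafka:29092";

    // Returns the configured value, or the default when it is missing or blank.
    public static string ResolveBootstrapServers(string? configured) =>
        string.IsNullOrWhiteSpace(configured) ? DefaultBootstrapServers : configured;

    // Builds the consumer config, reading the broker address from the environment.
    public static ConsumerConfig CreateConsumerConfig(string groupId) => new ConsumerConfig
    {
        BootstrapServers = ResolveBootstrapServers(
            Environment.GetEnvironmentVariable("KAFKA_BOOTSTRAP_SERVERS")),
        GroupId = groupId,
        // The remaining settings elided in Consumer.cs would go here.
    };
}
```

The consumer service in docker-compose.yml could then set KAFKA_BOOTSTRAP_SERVERS in its own environment section, while a local run outside Docker could point it at localhost:9092 instead.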

There was also a bit of a struggle with the volumes: I didn't realize at first that each of the five directories needed its own volume. That's another thing to look at in more detail.

During the trial-and-error process, I was often confused by an old version of my code still running in the container, so I kept having to stop and remove the consumer's containers and images. After a while I learned about docker compose up --build, a neat command that rebuilds the consumer image every time before starting the containers.

Another important thing to mention: the producer creates the Kafka topic if it doesn't exist, but the consumer fails when it tries to subscribe to a topic that doesn't exist yet. Make sure to run the producer first, so the topic gets created.
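If you'd rather not depend on start-up order, the consumer could create the topic itself before subscribing. Below is a sketch using the admin client from Confluent.Kafka. The TopicSetup class, the single partition, and the replication factor of 1 (matching our single broker) are my assumptions, and the topic name should be whatever the producer already uses.

```csharp
using System.Threading.Tasks;
using Confluent.Kafka;
using Confluent.Kafka.Admin;

// Hypothetical helper (not in the original project): creates the topic if it is
// missing and does nothing if another client already created it.
public static class TopicSetup
{
    public static async Task EnsureTopicExistsAsync(string bootstrapServers, string topicName)
    {
        using var admin = new AdminClientBuilder(new AdminClientConfig
        {
            BootstrapServers = bootstrapServers
        }).Build();

        try
        {
            await admin.CreateTopicsAsync(new[]
            {
                new TopicSpecification
                {
                    Name = topicName,
                    NumPartitions = 1,     // assumption: a single partition is enough here
                    ReplicationFactor = 1  // matches the single-broker Compose setup
                }
            });
        }
        catch (CreateTopicsException e)
            when (e.Results.TrueForAll(r => r.Error.Code == ErrorCode.TopicAlreadyExists))
        {
            // The producer (or an earlier run) already created the topic; nothing to do.
        }
    }
}
```

It would be awaited once at start-up, before the consumer calls Subscribe, with the same bootstrap address and topic name the consumer already uses. Ignoring only the TopicAlreadyExists error keeps the call safe when the producer got there first, while any other failure still surfaces.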


Written by

Mladen Drmac


I am a software developer with experience in .NET, SQL, APIs, and to a lesser extent JavaScript and TypeScript. Recently I've started studying concepts and technologies related to distributed systems, containerization, event processing, event streaming, and NoSQL, and decided to document what I learn in this form.