Testing Bloom, an open-source large language model on OCI

Walid Hajeri (WalidHaj)

A couple of days ago, I stumbled upon a remarkable blog post by my colleague Carlos Giraldo about Generative AI on OCI (https://medium.com/oracledevs/generative-ai-in-oci-c0467e1a68f7).

I simply wanted to go through all of its steps and give it a try and, as you'll see, I wasn't disappointed.

Before sharing my first experience, I'll explain what Generative AI and Large Language Models are, and give you an overview of Bloom, the model I was able to deploy and test on Oracle Cloud Infrastructure.

What are Generative AI and Large Language Models?

Generative AI is a type of artificial intelligence that is capable of generating new content or data that is similar to a given input or training data. It is a subfield of machine learning that focuses on using statistical models or algorithms to generate synthetic data that is similar to real-world data.

Generative AI can be used for a wide range of applications, such as generating text, images, music, or other types of data. It can be used to generate realistic images or videos, synthesize music or other audio, or generate natural language text that is difficult to distinguish from text written by a person.

There are various techniques and approaches that can be used for generative AI, such as generative adversarial networks (GANs), autoencoders, and variational autoencoders (VAEs). These techniques use machine learning algorithms to learn the patterns and features of a given training dataset, and then use that knowledge to generate new, synthetic data that is similar to the training data.

Generative AI has the potential to revolutionize many industries, including creative arts, media, and entertainment, as well as scientific research and engineering. It can be used to create new content, design new products, or even discover new scientific insights.

Large language models are artificial intelligence (AI) systems that have been trained to process and generate human-like text. They are called "large" because they are typically trained on very large datasets, often consisting of billions of words or more.

This allows them to learn the patterns and structure of language at a very fine-grained level, and to generate text that is highly coherent and natural-sounding.

Large language models can be used for a variety of tasks, such as language translation, text generation, and question-answering. They are an active area of research in the field of natural language processing (NLP), and have achieved impressive results on a wide range of NLP benchmarks.

Is it a bit clearer to you? I hope so, because this whole section was generated by ChatGPT (https://chat.openai.com/chat), another very popular large-language-model chatbot, released by OpenAI.
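To make "learning the patterns of language" a little more concrete, here is a deliberately tiny sketch. It is not from the original post and it is nothing like how Bloom or ChatGPT are actually built: it simply counts which word follows which in a made-up toy corpus, then generates text by repeatedly picking the most frequent next word. The corpus and the function names `train_bigrams` and `generate` are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def generate(followers, start, max_words=8):
    """Greedily extend `start` with the most frequent follower of the last word."""
    output = [start]
    for _ in range(max_words - 1):
        candidates = followers.get(output[-1])
        if not candidates:
            break  # the last word was never followed by anything in training
        # Highest count wins; ties are broken alphabetically to stay deterministic.
        best = min(candidates, key=lambda w: (-candidates[w], w))
        output.append(best)
    return " ".join(output)


# Toy corpus, made up for this example.
corpus = "holidays are meant for rest and holidays are meant for family"
model = train_bigrams(corpus)
print(generate(model, "holidays", max_words=5))
```

A real large language model replaces these word counts with a neural network and a far larger training set, but the generation loop (look at the text so far, pick a plausible continuation, repeat) follows the same idea.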

Bloom

BLOOM stands for BigScience Large Open-science Open-access Multilingual Language Model.

It is a generative language model developed by over 1000 researchers from 70+ countries and 250+ institutions, including Hugging Face and CNRS in France. It was recently trained using a partition of more than 400 NVIDIA A100 80GB GPUs of the Jean Zay HPC supercomputer, located in the Paris-Saclay area and one of Europe's most powerful supercomputers. Side note: in early December 2022, Oracle announced the availability of those NVIDIA GPU shapes on Oracle Cloud Infrastructure (see announcement here).

Architecturally, BLOOM is close to the GPT (Generative Pre-trained Transformer) family of language models: it is a decoder-only Transformer, with 176 billion parameters in its largest version.

The main differences between BLOOM and GPT-3 lie less in the training objective than in how the model was built and released. Like GPT, BLOOM is trained with a standard next-token prediction objective: given the text so far, it learns to predict what comes next. What sets it apart is that it was developed in the open by the BigScience collaboration, that it is multilingual by design, and that its weights are publicly available.

BLOOM was trained on a large dataset of human-written text such as books and articles (1.61 terabytes of text spanning 46 natural languages), and is able to generate text that is coherent, structured, and reads as if it were written by a human. It can be used for a variety of natural language processing tasks, including translation, summarization, and text generation.

Disclaimer: parts of this section were also generated by ChatGPT.

Steps I had to go through to deploy on Oracle Cloud:

Install the OCI CLI on Windows. This is the local CLI that allowed me to authenticate and communicate with my OCI tenancy and ultimately provision resources there (it uses a Python SDK and the OCI API under the hood).

To install it, you can also follow Carlos' post: https://medium.com/@carlgira/install-oci-cli-and-configure-a-default-profile-802cc61abd4f

The one variation is that I'm on Windows, so I used https://chocolatey.org/ as a package manager.

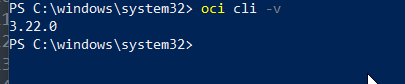

Once it was installed, I checked that my version was OK.
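If you would rather script that check than type it by hand, here is a minimal sketch. It assumes the CLI executable is named `oci` and is on your PATH, and it relies on the CLI's `--version` flag; the helper name `cli_version` is mine, not part of any Oracle tooling.

```python
import shutil
import subprocess


def cli_version(executable="oci"):
    """Return the output of `<executable> --version`, or None if unavailable."""
    path = shutil.which(executable)
    if path is None:
        return None  # not installed, or not on PATH
    result = subprocess.run([path, "--version"], capture_output=True, text=True)
    if result.returncode != 0:
        return None
    # Some tools print their version on stderr, so fall back to it.
    return (result.stdout or result.stderr).strip()


version = cli_version("oci")
print(version if version else "OCI CLI not found on PATH")
```

If this prints "OCI CLI not found on PATH", reopening the terminal after the installation is usually worth trying first, since PATH changes only apply to new sessions.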

From there, I ran the Terraform scripts that provision the resources on OCI. The target VM comes with Bloom pre-installed, along with other text-to-image deep learning models, which I personally couldn't provision because of a limitation on GPU shapes that is specific to my tenancy. Of course, you shouldn't have that limitation on your own tenancy.

Basically, I had to change terraform.tfvars to use a non-GPU, non-Flex shape:

instance_shape = "VM.Standard1.16"
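I made that change by hand in a text editor, but if you need to repeat it, the edit can be sketched in a few lines of Python. Everything here except the `instance_shape` variable and the `VM.Standard1.16` value is an assumption: `set_tfvar` is a hypothetical helper, and the sample file content is invented rather than copied from Carlos' repository.

```python
import re


def set_tfvar(tfvars_text, name, value):
    """Return tfvars_text with `name = "value"` set, appending it if absent."""
    pattern = re.compile(rf"^\s*{re.escape(name)}\s*=.*$", re.MULTILINE)
    new_line = f'{name} = "{value}"'
    if pattern.search(tfvars_text):
        # A function replacement avoids any backslash handling in `value`.
        return pattern.sub(lambda match: new_line, tfvars_text, count=1)
    separator = "" if tfvars_text.endswith("\n") or not tfvars_text else "\n"
    return f"{tfvars_text}{separator}{new_line}\n"


# Invented sample content; the real terraform.tfvars has other variables.
original = 'some_other_variable = "unchanged"\ninstance_shape = "<gpu-shape>"\n'
updated = set_tfvar(original, "instance_shape", "VM.Standard1.16")
print(updated)
```

This only handles simple one-line string assignments, which is all that is needed for this particular change.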

I applied the Terraform script, waited, and checked in my console that my generative-ai compute instance was starting and then running. I noted down the IP address that showed up in the console when applying the Terraform script.

I created an SSH tunnel between my local laptop and the OCI instance with PuTTY (don't forget to convert the key format with PuTTYgen, otherwise you won't be able to connect). And voilà, Bloom was accessible from my local browser at http://localhost:5000
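I used PuTTY, but the same tunnel can be opened with the OpenSSH client that ships with recent versions of Windows, macOS, and Linux. The sketch below only builds and prints the command. Port 5000 comes from the setup above; the user name `opc` (the usual default on Oracle Linux images), the IP address, and the key path are placeholders to replace with your own values.

```python
import shlex


def tunnel_command(host, key_path, user="opc", local_port=5000, remote_port=5000):
    """Build an OpenSSH command forwarding localhost:local_port to the instance."""
    return [
        "ssh",
        "-i", key_path,  # private key in OpenSSH format
        "-N",            # no remote shell, just keep the tunnel open
        "-L", f"{local_port}:localhost:{remote_port}",
        f"{user}@{host}",
    ]


# 203.0.113.10 is a documentation-only address; use the IP Terraform printed.
cmd = tunnel_command("203.0.113.10", "path/to/private_key")
print(shlex.join(cmd))
```

Running the printed command in a terminal and leaving it open should give the same result as the PuTTY tunnel: the Bloom UI reachable at http://localhost:5000.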

Now the holiday fun begins:

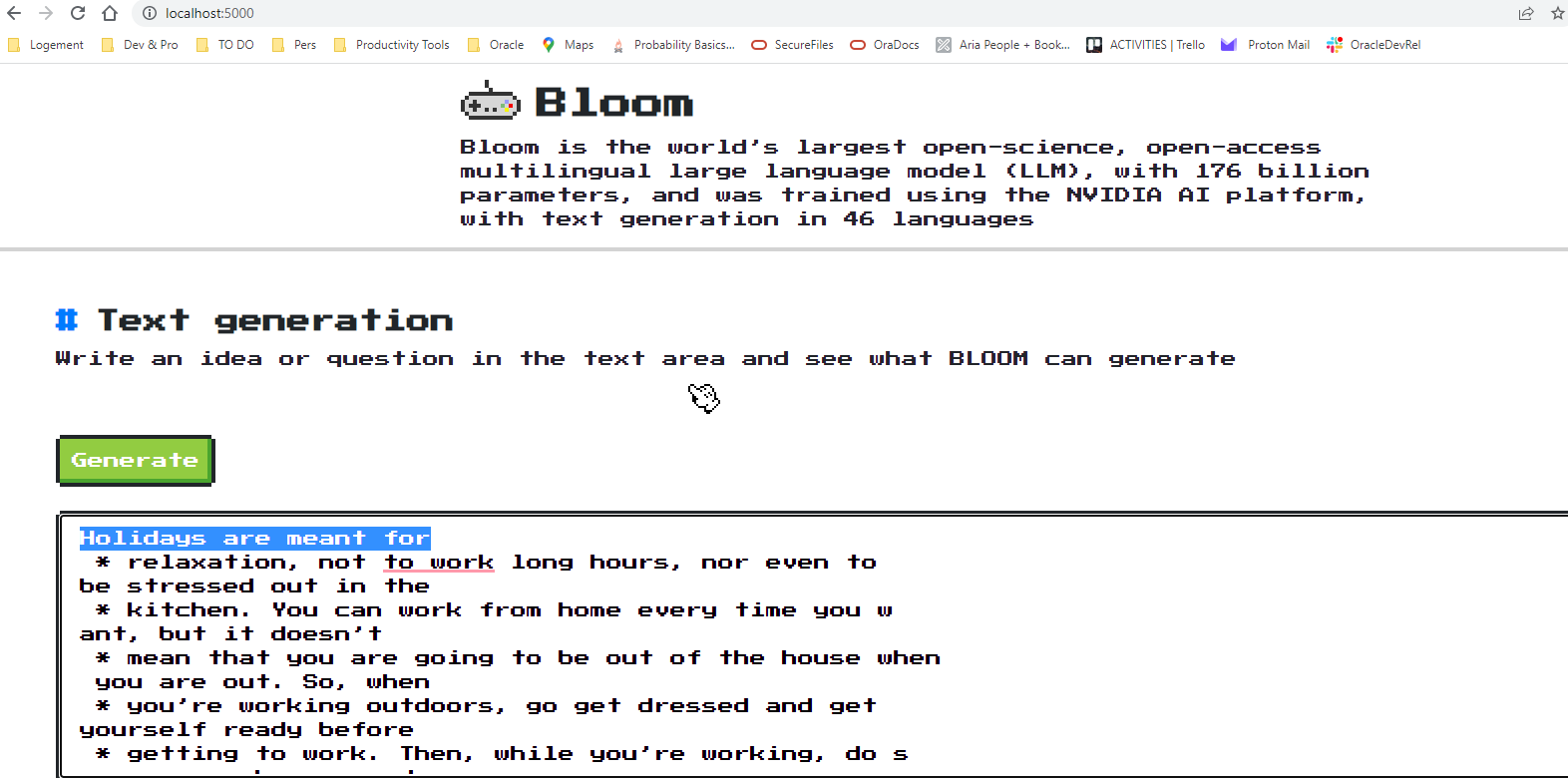

Bloom is capable of both answering questions and generating a short text from the start of a sentence (the prompt), such as "Holidays are meant for":

Sure, the user interface is super basic, but the generated content was really fun.

I also tried in French by typing "La période de Noël est une période propice à" ("The Christmas season is a good time for"):

What I found quite funny is Bloom's attempt to answer one of my questions with credible-sounding mathematical reasoning. The starting assumption was completely wrong, however (2000 x 4000 m^2??), and even the result was strange. But I would be stupid to consider it stupid.

Take this other example:

How many Christmas Trees could we store in a football stadium?

The answer was:

We assume that a football stadium is a single piece of space with a total area of 2000 x 4000 m^2.

We also assume that we want the number of trees to be distributed among all available places in such a way that we have a reasonable probability to find a tree somewhere.

There can be a lot of trees in a football stadium, so we assume that we will store a maximum of 200 trees and that they have a certain number of available places. If more trees are stored, they will not have enough available places to store them all. You can think of a football stadium as a lot of
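To see just how far off that starting assumption is, here is a quick back-of-the-envelope check. The 2000 x 4000 m figure comes from Bloom's answer; the 105 x 68 m pitch is the standard size for international matches and covers the playing field only, not the stands. The 1 m^2 footprint per tree is a purely hypothetical value of mine, as is the helper `trees_that_fit`.

```python
# Area Bloom assumed for the stadium, taken from its answer: 2000 m x 4000 m.
bloom_area_m2 = 2000 * 4000

# A standard international pitch is about 105 m x 68 m (playing field only).
pitch_area_m2 = 105 * 68

# Hypothetical footprint per stored tree; change it to taste.
tree_footprint_m2 = 1.0


def trees_that_fit(area_m2, footprint_m2=tree_footprint_m2):
    """Number of trees that fit on a flat area in a single layer."""
    return int(area_m2 // footprint_m2)


ratio = bloom_area_m2 / pitch_area_m2
print(f"Bloom's stadium: {bloom_area_m2:,} m^2")
print(f"Standard pitch:  {pitch_area_m2:,} m^2")
print(f"Bloom's area is about {ratio:.0f} times larger than a pitch")
print(f"Trees on the pitch, one layer: {trees_that_fit(pitch_area_m2):,}")
```

So Bloom's stadium is more than a thousand times larger than a real pitch, and yet its answer caps the number of trees at 200, far fewer than even this crude single-layer estimate for a real pitch.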

I made many attempts with slight variations. The results are sometimes ridiculous and sometimes genuinely fascinating, for example when it said things like "it depends on the relationship between the number of trees and the number of people in the stadium", or even proposed an Excel formula to solve the problem.

After a few tests, I started adapting my questions so that it could understand them better. I've even heard that communicating with generative AI models is becoming a skill that a few people have started monetizing... (see https://promptbase.com/)

When I asked ChatGPT the same question, I got a completely different type of answer (more disappointing this time):

It is not possible to store Christmas trees in a football stadium, as a football stadium is designed for sporting events and does not have the necessary infrastructure or space to store large numbers of Christmas trees. Christmas trees are typically stored in a warehouse or other storage facility that is specifically designed for that purpose.

I hope you enjoyed reading this post, and that you'll follow Bloom's advice to relax and have fun!

Walid Hajeri

Disclaimer: Views are my own. None of the ideas expressed in this post are shared, supported, or endorsed in any manner by my current employer.

Written by

Walid Hajeri (WalidHaj)

Customer Engineer with a passion for well-designed tech products. Tech side - Interest in Cloud-native App Dev & AI Other side - University of Paris 1 Sorbonne alumnus, grew up in a creative family, passionate about all things related to visual arts & design in general.