Comparison of Two ML Models Using Transfer Learning for Image Classification

Alain Victor Ramirez Martinez


In this article, I would like to explore with you a very useful technique for training deep learning models called "Transfer Learning". The idea of sharing knowledge is not new to any of us: for as long as we can remember, human beings have passed knowledge down from one generation to the next.

What is Transfer Learning?

Transfer learning is a machine learning technique that allows us to leverage the knowledge learned by a pre-trained model on a large dataset to solve a different task with a smaller dataset.

This technique is especially helpful for image classification problems, where collecting a large amount of labeled data is often difficult.

When to use Transfer Learning

Before we go further into this comparison, it is crucial to understand when transfer learning is advisable. The simple rule of thumb is to use TL when you have a small dataset that is similar to the dataset the model was originally trained on.

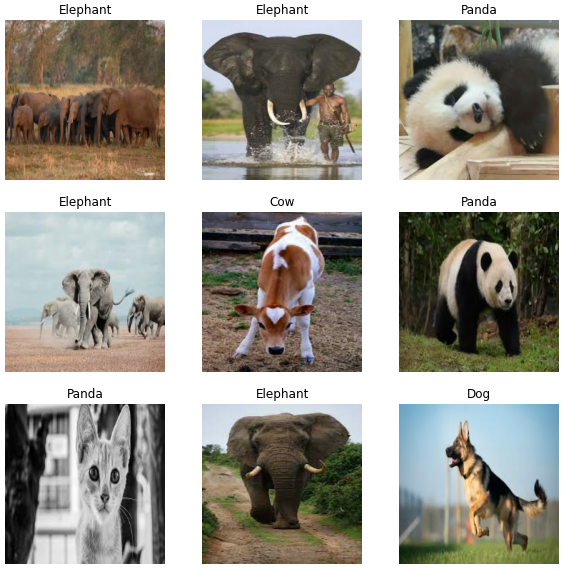

The Dataset

We are going to use a Kaggle dataset with 7,000 images in 5 classes. The original notebook uses the ResNet50V2 model and achieves a test accuracy of 97.17%.

Source: https://www.kaggle.com/code/utkarshsaxenadn/animal-classification-resnet50v2-acc-99/notebook

The Models to be compared

We are going to compare the performance of two pre-trained models: MobileNetV2 and EfficientNetV2.

MobileNetV2 is a lightweight convolutional neural network (CNN) designed for mobile and embedded devices. It is built from inverted residual blocks with linear bottlenecks, which keep the parameter count small (about 3.5M) while maintaining good accuracy.
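Inside each inverted residual block, MobileNetV2 applies a 1×1 expansion convolution, a 3×3 depthwise convolution, and a 1×1 linear projection. The depthwise-plus-pointwise pair (a depthwise separable convolution) is where most of the savings come from. The rough parameter count below ignores biases and batch normalization and uses a hypothetical 256-channel layer chosen only for illustration:

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a regular k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a k x k depthwise conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in  # one k x k filter per input channel
    pointwise = c_in * c_out  # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    # Hypothetical layer size, not taken from MobileNetV2's actual configuration.
    k, c_in, c_out = 3, 256, 256
    std = standard_conv_params(k, c_in, c_out)
    sep = depthwise_separable_params(k, c_in, c_out)
    print(f"standard 3x3 conv:   {std:,} weights")
    print(f"depthwise separable: {sep:,} weights")
    print(f"reduction factor:    {std / sep:.1f}x")
```

For 256 input and output channels this gives 589,824 versus 67,840 weights, roughly an 8.7x reduction for the same receptive field.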

EfficientNetV2 is a family of CNNs designed for both better parameter efficiency and faster training than earlier models. Its base architecture was found with training-aware neural architecture search and then scaled up in a structured way, increasing network depth, width, and input resolution together instead of tuning each dimension by hand.
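For reference, the compound scaling rule introduced with the original EfficientNet, on which EfficientNetV2 builds (V2 adds its own adjustments, such as capping the maximum image size), ties depth d, width w, and input resolution r to a single user-chosen coefficient φ:

```latex
\begin{aligned}
d &= \alpha^{\phi}, \qquad w = \beta^{\phi}, \qquad r = \gamma^{\phi} \\
&\text{subject to } \alpha \cdot \beta^{2} \cdot \gamma^{2} \approx 2, \qquad \alpha \ge 1,\ \beta \ge 1,\ \gamma \ge 1
\end{aligned}
```

Because the FLOPs of a convolutional network grow roughly in proportion to d · w² · r², the constraint means that each increment of φ approximately doubles the compute. The original EfficientNet paper found α = 1.2, β = 1.1, γ = 1.15 for its baseline network with a small grid search.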

Fine-Tuning and Training Steps

To compare the performance of these two models, we first need to fine-tune them for our specific dataset. Fine-tuning a pre-trained model involves unfreezing some of the layers and training them with the new dataset. This allows the model to learn new features that are specific to the task at hand, while still leveraging the knowledge learned from the large dataset on which it was pre-trained.
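In Keras, freezing and unfreezing is done by toggling each layer's `trainable` attribute. The framework-free sketch below mimics that idea with a hypothetical `Layer` dataclass: it starts from a fully frozen base and then unfreezes only the last `n` layers, which is the usual shape of a fine-tuning stage.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    """Hypothetical stand-in for a framework layer with a `trainable` flag."""
    name: str
    trainable: bool = True


def freeze_all(layers: list[Layer]) -> None:
    """Feature extraction: no pre-trained weight will be updated."""
    for layer in layers:
        layer.trainable = False


def unfreeze_top(layers: list[Layer], n: int) -> None:
    """Fine-tuning: keep early layers frozen and train only the last `n`."""
    for i, layer in enumerate(layers):
        layer.trainable = i >= len(layers) - n


if __name__ == "__main__":
    base = [Layer(f"block_{i}") for i in range(10)]  # hypothetical 10-layer base
    freeze_all(base)
    unfreeze_top(base, 3)
    print([layer.name for layer in base if layer.trainable])
    # ['block_7', 'block_8', 'block_9']
```

Early layers tend to capture generic features such as edges and textures, which is why they are usually the ones left frozen.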

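To adapt the models to our dataset, we can use the following steps (as written, they keep the entire pre-trained base frozen and train only the new top layer):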

Load the pre-trained MobileNetV2/EfficientNetV2 model without its original classification head and freeze all of its layers (set them as non-trainable).

Add a fully connected (dense) layer on top of the base model with as many units as there are classes in our dataset (5 here).

Compile the model with a suitable loss function and optimizer (for example, categorical cross-entropy and Adam).

Fit the model on the training data, using a suitable batch size and number of epochs.
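The four steps above can be mimicked end to end without a deep-learning framework. This is a toy sketch, not the article's Keras code: a hypothetical `frozen_base` function stands in for the frozen MobileNetV2/EfficientNetV2 base, and only a new dense softmax head is trained with cross-entropy and plain SGD on three synthetic 2-D clusters (all data and hyperparameters are made up for illustration).

```python
import math
import random


def frozen_base(x: tuple[float, float]) -> list[float]:
    """Hypothetical stand-in for a frozen pre-trained base.

    It has no trainable parameters: it only maps a raw input to a fixed
    feature vector (the trailing 1.0 acts as a bias input for the head).
    """
    return [x[0], x[1], 1.0]


def softmax(logits: list[float]) -> list[float]:
    """Convert logits to probabilities (max subtracted for stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def head_logits(weights: list[list[float]], feats: list[float]) -> list[float]:
    """Dense layer without activation: one dot product per class."""
    return [sum(w * f for w, f in zip(row, feats)) for row in weights]


def train_head(data, num_classes: int, epochs: int = 50, lr: float = 0.1, seed: int = 0):
    """Train only the new dense softmax head with cross-entropy and plain SGD."""
    rng = random.Random(seed)
    data = list(data)  # copy so shuffling does not reorder the caller's list
    num_features = len(frozen_base(data[0][0]))
    weights = [[0.0] * num_features for _ in range(num_classes)]
    for _ in range(epochs):
        rng.shuffle(data)
        for x, label in data:
            feats = frozen_base(x)  # the base is only called, never updated
            probs = softmax(head_logits(weights, feats))
            for c in range(num_classes):
                # d(cross-entropy)/d(logit_c) = p_c - y_c for one-hot labels
                grad = probs[c] - (1.0 if c == label else 0.0)
                for j in range(num_features):
                    weights[c][j] -= lr * grad * feats[j]
    return weights


def predict(weights: list[list[float]], x: tuple[float, float]) -> int:
    """Return the class with the highest logit."""
    logits = head_logits(weights, frozen_base(x))
    return max(range(len(logits)), key=logits.__getitem__)


if __name__ == "__main__":
    rng = random.Random(42)
    centers = [(-2.0, 0.0), (2.0, 0.0), (0.0, 3.0)]  # 3 synthetic classes
    data = [((cx + rng.gauss(0, 0.5), cy + rng.gauss(0, 0.5)), label)
            for label, (cx, cy) in enumerate(centers) for _ in range(50)]
    weights = train_head(data, num_classes=len(centers))
    acc = sum(predict(weights, x) == y for x, y in data) / len(data)
    print(f"training accuracy: {acc:.2f}")
```

In Keras, a common way to express the same split is a base model with `trainable = False` followed by a `Dense(num_classes, activation="softmax")` layer, so that only the Dense layer's weights receive gradient updates.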

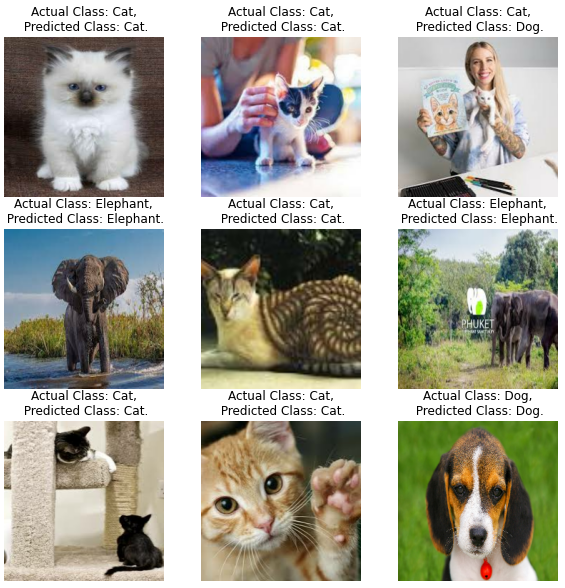

The results

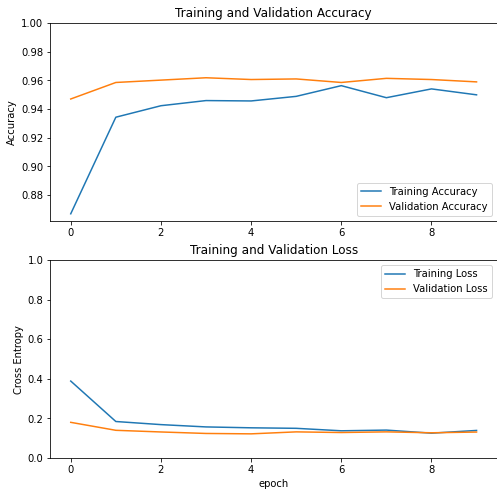

MobileNetV2

38/38 [==============================] - 128s 3s/step

loss: 0.1215 - accuracy: 0.9624

Test accuracy : 0.9624373912811279

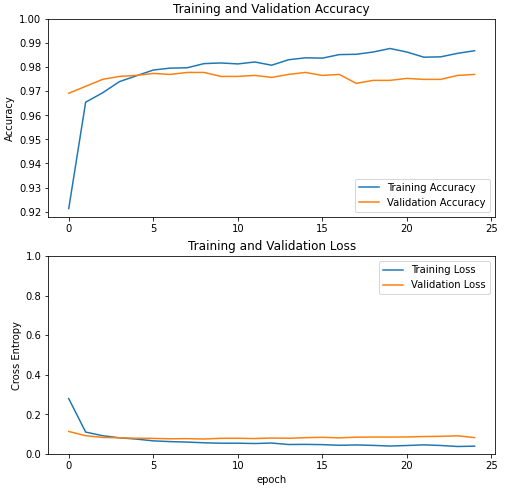

EfficientNetV2

38/38 [==============================] - 134s 3s/step

loss: 0.0742 - accuracy: 0.9766

Test accuracy : 0.9766277074813843

The results show that EfficientNetV2 outperformed MobileNetV2 in test accuracy, reaching 97.66% after 25 epochs, compared with 96.24% for MobileNetV2, which stopped after 10 epochs because of early stopping. This suggests that EfficientNetV2 is the better fit for this particular dataset, although the two runs did not use the same number of epochs.
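MobileNetV2's run ended early because of early stopping. In Keras this is handled by the `EarlyStopping` callback (with parameters such as `monitor`, `patience`, and `restore_best_weights`). The framework-free sketch below reproduces only the basic patience rule on a made-up list of validation losses, so its numbers have nothing to do with the article's runs.

```python
def early_stopping_epoch(val_losses: list[float], patience: int, min_delta: float = 0.0):
    """Return (stop_epoch, best_epoch), both 1-based, for a sequence of validation losses.

    Training stops once `patience` consecutive epochs fail to improve on the
    best loss seen so far by more than `min_delta`.
    """
    best = float("inf")
    best_epoch = 0
    wait = 0  # epochs since the last improvement
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return len(val_losses), best_epoch  # never triggered: ran the full budget


if __name__ == "__main__":
    # Made-up validation losses for illustration only.
    losses = [0.60, 0.35, 0.25, 0.20, 0.18, 0.17, 0.175, 0.19, 0.18, 0.21]
    stop, best = early_stopping_epoch(losses, patience=3)
    print(f"stopped after epoch {stop}; best weights from epoch {best}")
    # stopped after epoch 9; best weights from epoch 6
```

With `patience=3`, training halts at epoch 9, three epochs after the best validation loss at epoch 6. Going back to the epoch-6 weights is what Keras's `restore_best_weights=True` option is for.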

Conclusion

In conclusion, transfer learning is a powerful technique for image classification tasks, allowing us to leverage the knowledge learned by pre-trained models to solve new tasks with a small dataset. By fine-tuning MobileNetV2 and EfficientNetV2 on our specific dataset, we were able to compare their performance and choose the more suitable model for our task.

Additionally, EfficientNetV2 is a fairly modest model in terms of parameters (7.2M), yet it still beat the test accuracy reported in the original Kaggle notebook, which used ResNet50V2 (25.6M parameters): 97.66% versus 97.17%.
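Percentage-point gaps between accuracies in the high 90s can look small, so it can help to compare error rates instead. The sketch below uses the accuracy and parameter figures quoted in this article; the MobileNetV2 parameter count (3.5M) is an assumption taken from the Keras Applications table, not from the article's own runs.

```python
# Accuracy (%) and parameters (millions) quoted in this article. The
# MobileNetV2 parameter count comes from the Keras Applications table
# (an assumption, not measured in the article).
MODELS = {
    "ResNet50V2 (Kaggle notebook)": (97.17, 25.6),
    "EfficientNetV2": (97.66, 7.2),
    "MobileNetV2": (96.24, 3.5),
}


def error_rate(accuracy_pct: float) -> float:
    """Test error in percent."""
    return 100.0 - accuracy_pct


def relative_error_reduction(baseline_acc: float, new_acc: float) -> float:
    """Fraction of the baseline's test errors removed by the new model."""
    return (error_rate(baseline_acc) - error_rate(new_acc)) / error_rate(baseline_acc)


if __name__ == "__main__":
    for name, (acc, params) in MODELS.items():
        print(f"{name:30s} acc={acc:.2f}%  error={error_rate(acc):.2f}%  params={params}M")
    eff_acc, eff_params = MODELS["EfficientNetV2"]
    res_acc, res_params = MODELS["ResNet50V2 (Kaggle notebook)"]
    mob_acc, _ = MODELS["MobileNetV2"]
    print(f"EfficientNetV2 vs ResNet50V2: {relative_error_reduction(res_acc, eff_acc):.0%} fewer errors, "
          f"{res_params / eff_params:.1f}x fewer parameters")
    print(f"EfficientNetV2 vs MobileNetV2: {relative_error_reduction(mob_acc, eff_acc):.0%} fewer errors")
```

Read this way, EfficientNetV2 removes roughly 17% of ResNet50V2's test errors with about 3.6x fewer parameters, and roughly 38% of MobileNetV2's.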

If you want to try other models, see Keras Applications: https://keras.io/api/applications/


Written by

Alain Victor Ramirez Martinez


I am a telco engineer with self-taught studies in Data Science and ML. I am looking to start a new phase in my career, moving from project, product, and commercial management to development.