Skipgram Architecture

Yuvraj Singh

The previous article in this series explained how Word2Vec's CBOW architecture creates embeddings for textual data. In this piece, we'll explain how the skip-gram architecture builds embeddings for words, how it differs from CBOW, and which one you should choose. So without further ado, let's get going.

Intuition behind skip-gram

The fundamental idea behind skip-gram is that it does not assign a numerical representation to a word directly. Instead, it sets up a dummy problem, which is to predict the context words from the target word, and the numerical representation of the word falls out as a byproduct of solving that dummy problem.

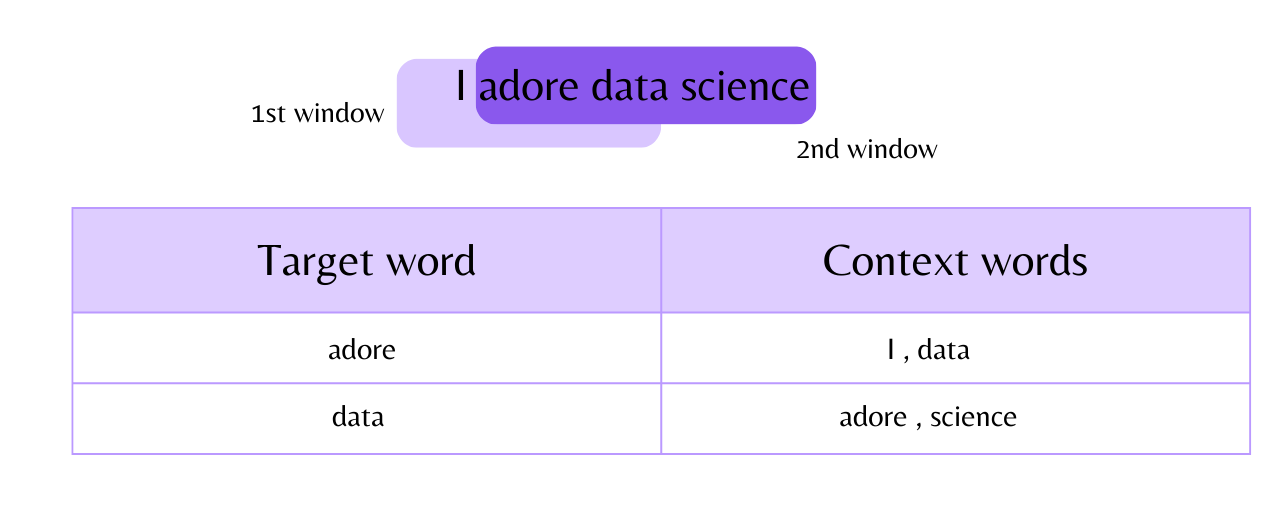

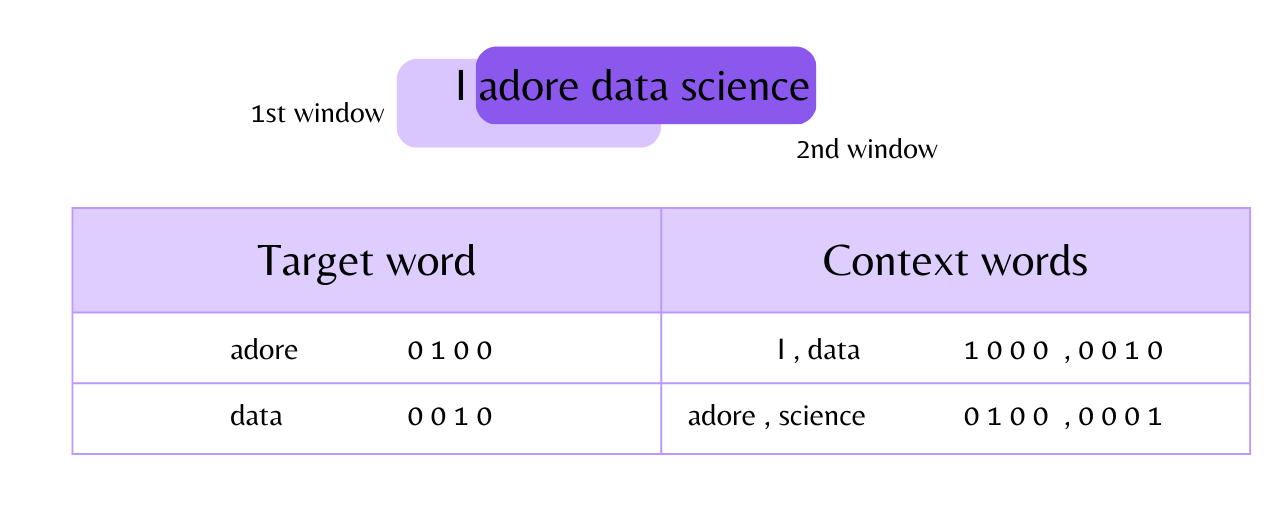

Let's suppose for the moment that we wish to generate word embeddings for the phrase "I adore data science" using the skip-gram architecture of word2vec. Before doing anything else, we must decide how many values each word will have in its vector form (in short, how many features will be used to represent each vocabulary word numerically). In our case, we want every vector to have 3 values.

As we now know, word2vec's skip-gram architecture first tries to solve a dummy problem: finding the context words given the target word. So let's look at what the data frame for this problem might look like.
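To make the dummy problem concrete, here is a minimal sketch of how the (target, context) rows could be generated for the example phrase. The function name `skipgram_pairs` and the window size of 1 (one word on each side of the target) are assumptions made for illustration, not something fixed by the article.

```python
def skipgram_pairs(tokens, window=1):
    """Return (target, context) pairs for every word within `window` positions."""
    pairs = []
    for i, target in enumerate(tokens):
        start = max(0, i - window)
        stop = min(len(tokens), i + window + 1)
        for j in range(start, stop):
            if j != i:  # a word is not its own context
                pairs.append((target, tokens[j]))
    return pairs


tokens = "I adore data science".split()
pairs = skipgram_pairs(tokens, window=1)

for target, context in pairs:
    print(f"{target:>8} -> {context}")
```

Each printed row is one training example: the word on the left is the input, and the word on the right is what the network should predict.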

Once the data frame has been created, the words are encoded using one-hot encoding (OHE).
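One-hot encoding can be sketched in a few lines of plain Python. The helper names `build_vocab` and `one_hot` are hypothetical, and indexing words in order of first appearance is just one possible convention.

```python
def build_vocab(tokens):
    """Map each distinct word to an index, in order of first appearance."""
    vocab = {}
    for word in tokens:
        if word not in vocab:
            vocab[word] = len(vocab)
    return vocab


def one_hot(word, vocab):
    """Return a list of zeros with a single 1 at the word's index."""
    vec = [0] * len(vocab)
    vec[vocab[word]] = 1
    return vec


tokens = "I adore data science".split()
vocab = build_vocab(tokens)

print(vocab)                    # {'I': 0, 'adore': 1, 'data': 2, 'science': 3}
print(one_hot("adore", vocab))  # [0, 1, 0, 0]
```

The length of every one-hot vector equals the vocabulary size, which is what determines the width of the network's input layer.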

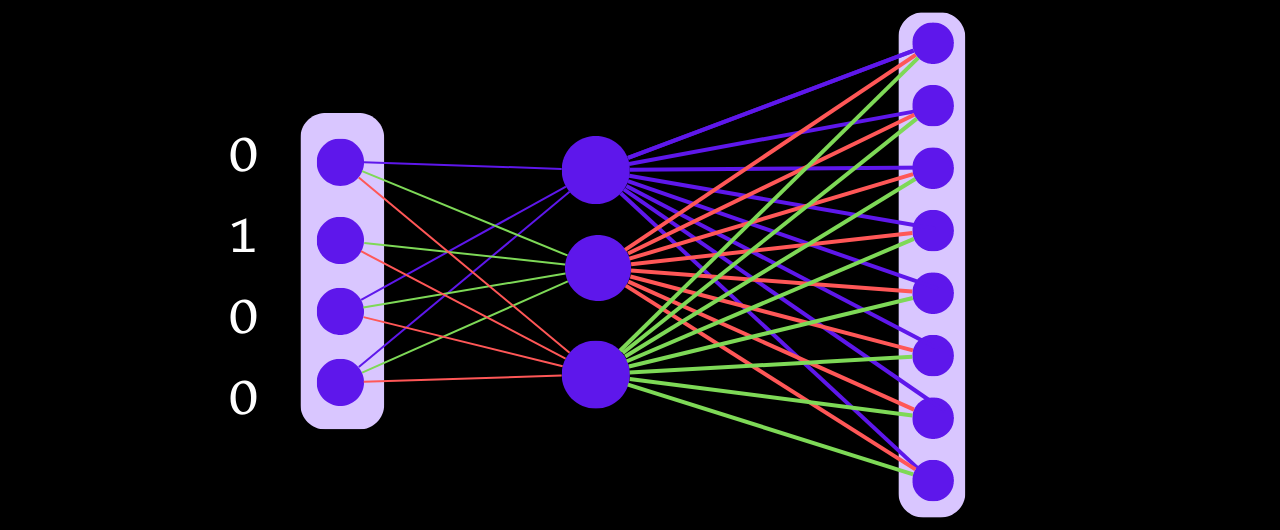

After one-hot encoding all the target and context words, the skip-gram neural network looks as follows: the input layer has 5 nodes, the hidden layer has 3 nodes (since we decided that each vector should have only three dimensions), and the output layer has 10 nodes.

In the first training step, the input layer neurons receive the one-hot encoded vector of the word "adore", and the weights attached to each input layer neuron are initialized, typically with small random values.
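The sketch below shows what initializing the weights and feeding in "adore" might look like. The sizes are assumptions derived from the four distinct words of the phrase itself, so they will not match node counts quoted for a differently sized vocabulary. The names `W_in`, `W_out`, and `random_matrix` are hypothetical.

```python
import random

random.seed(0)  # fixed seed so the sketch is reproducible

vocab_size = 4     # assumption: distinct words in "I adore data science"
embedding_dim = 3  # values per word vector, as chosen in the text


def random_matrix(rows, cols, scale=0.1):
    """Create a rows x cols matrix of small random weights."""
    return [[random.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


W_in = random_matrix(vocab_size, embedding_dim)   # input layer -> hidden layer
W_out = random_matrix(embedding_dim, vocab_size)  # hidden layer -> output layer

# One-hot input for "adore" (index 1 under first-appearance ordering).
x = [0, 1, 0, 0]

# Multiplying a one-hot vector by W_in simply selects one row of W_in.
hidden = [sum(x[i] * W_in[i][k] for i in range(vocab_size)) for k in range(embedding_dim)]

print(hidden)
print(W_in[1])  # same values: the row belonging to "adore"
```

Because the input is one-hot, the hidden layer values are just the row of `W_in` that belongs to the input word, which is why that row ends up serving as the word's embedding.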

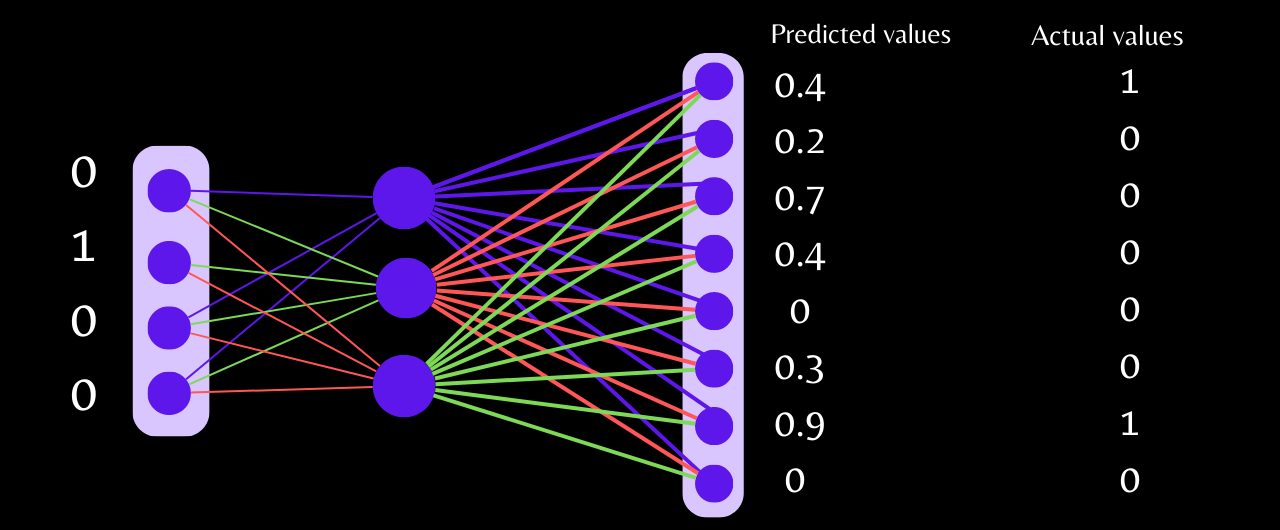

When the data passes from the input layer to the hidden layer, each hidden neuron computes a linear function: the weighted sum of its inputs (plus a bias, if one is used). The hidden layer's output is then passed to the output layer, where the softmax activation function turns the scores into probability values, one for each candidate context word. Once forward propagation is complete, the loss is calculated, and its value is then minimized through backpropagation.
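A forward pass and loss calculation can be sketched as follows. This is a simplified version under stated assumptions: no bias terms, a single softmax over the vocabulary per (target, context) pair, and cross-entropy as the loss. The weight values are made up purely so the example runs, and the function names are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def forward(target_idx, W_in, W_out):
    """Return the hidden layer values and the predicted context probabilities."""
    hidden = W_in[target_idx]  # a one-hot input selects a single row
    vocab_size = len(W_out[0])
    scores = [
        sum(hidden[k] * W_out[k][j] for k in range(len(hidden)))
        for j in range(vocab_size)
    ]
    return hidden, softmax(scores)


def cross_entropy(probs, context_idx):
    """Loss is low when the true context word gets a high probability."""
    return -math.log(probs[context_idx])


# Made-up weights: 4 vocabulary words, 3 hidden nodes.
W_in = [[0.1, -0.2, 0.3], [0.0, 0.2, -0.1], [0.3, 0.1, 0.0], [-0.2, 0.1, 0.2]]
W_out = [[0.2, -0.1, 0.0, 0.1], [0.0, 0.3, -0.2, 0.1], [-0.1, 0.0, 0.2, 0.3]]

# Target "adore" (index 1), true context word "data" (index 2).
hidden, probs = forward(1, W_in, W_out)
loss = cross_entropy(probs, 2)

print("probabilities:", probs)
print("loss:", loss)
```

Backpropagation then nudges both weight matrices so that the probability assigned to the true context word goes up and the loss goes down.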

Once training is complete, the weights connecting a word's input layer neuron to the hidden layer neurons form the vector representation of that word.
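Putting the pieces together, here is a small end-to-end sketch that trains on the example phrase and then reads the embeddings off the input-to-hidden weights. It assumes plain stochastic gradient descent, a full softmax, no bias terms, and a window of 1; the learning rate, epoch count, and the name `train_skipgram` are arbitrary choices for illustration and not the reference word2vec implementation.

```python
import math
import random


def softmax(scores):
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train_skipgram(pairs, vocab_size, dim=3, lr=0.1, epochs=200, seed=0):
    """Train on (target_idx, context_idx) pairs; return W_in and per-epoch loss."""
    rng = random.Random(seed)
    W_in = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(vocab_size)]
    W_out = [[rng.uniform(-0.5, 0.5) for _ in range(vocab_size)] for _ in range(dim)]
    losses = []
    for _ in range(epochs):
        total = 0.0
        for t, c in pairs:
            h = W_in[t]  # hidden layer = row of the target word
            scores = [sum(h[k] * W_out[k][j] for k in range(dim)) for j in range(vocab_size)]
            probs = softmax(scores)
            total += -math.log(probs[c])
            # Gradient of the loss w.r.t. the scores: predicted minus true one-hot.
            err = [p - (1.0 if j == c else 0.0) for j, p in enumerate(probs)]
            # Compute the hidden-layer gradient before W_out is changed.
            grad_h = [sum(W_out[k][j] * err[j] for j in range(vocab_size)) for k in range(dim)]
            for k in range(dim):
                for j in range(vocab_size):
                    W_out[k][j] -= lr * h[k] * err[j]
            for k in range(dim):
                h[k] -= lr * grad_h[k]  # h is W_in[t], so this updates the embedding
        losses.append(total / len(pairs))
    return W_in, losses


tokens = "I adore data science".split()
vocab = {word: idx for idx, word in enumerate(dict.fromkeys(tokens))}
pairs = [
    (vocab[tokens[i]], vocab[tokens[j]])
    for i in range(len(tokens))
    for j in (i - 1, i + 1)  # window of 1 on each side
    if 0 <= j < len(tokens)
]

embeddings, losses = train_skipgram(pairs, vocab_size=len(vocab))

print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
for word, idx in vocab.items():
    print(word, [round(v, 3) for v in embeddings[idx]])
```

Each printed row is the 3-value vector for one word. With a corpus this tiny the vectors carry little meaning; the point is only to show where they come from.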

Which architecture, out of CBOW and skip-gram, should we use?

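A commonly cited rule of thumb from the word2vec authors is that skip-gram works well with a small amount of training data and represents rare words well, whereas CBOW is several times faster to train and is slightly more accurate for frequent words. So if your data is small or rare words matter, skip-gram is a good choice, whereas for large data where training speed matters, CBOW is worth considering.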
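One concrete difference between the two architectures is how they turn the same text into training examples. The sketch below assumes a window of 1 and uses hypothetical helper names: CBOW builds one example per position (all context words predict the target), while skip-gram builds one example per (target, context word) pair.

```python
def cbow_examples(tokens, window=1):
    """One example per position: (list of context words) -> target."""
    examples = []
    for i, target in enumerate(tokens):
        start = max(0, i - window)
        stop = min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(start, stop) if j != i]
        examples.append((context, target))
    return examples


def skipgram_examples(tokens, window=1):
    """One example per (target, context word) pair."""
    return [
        (target, word)
        for context, target in cbow_examples(tokens, window)
        for word in context
    ]


tokens = "I adore data science".split()
cbow = cbow_examples(tokens)
skipgram = skipgram_examples(tokens)

print(len(cbow), "CBOW examples:", cbow)
print(len(skipgram), "skip-gram examples:", skipgram)
```

On this phrase, CBOW yields 4 examples and skip-gram yields 6, so skip-gram gets more training updates out of the same text at the cost of more computation per sentence.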

That's all for now, and I hope this blog provided some useful information for you. Additionally, don't forget to check out my 👉 TWITTER handle if you want to receive daily content relating to data science, mathematics for machine learning, Python, and SQL in the form of threads.

