CloudFormation: CodePipeline and ECS Blue/Green Deployments (Part I)

Samuel Monroe

Foreword

The context in which I set up this infrastructure for one of my projects is the following:

- I have a GitHub repository whose CI is handled by GitHub Actions, so CodePipeline will not be in charge of running the tests.
- The application is a full-stack Phoenix app, which simply means this guide focuses on a single "instance" to deploy. The templates should be easy to adapt to your needs anyway.
- I don't want to cut corners on security: IAM roles and policies should be fine-tuned and properly assigned.

With that said, let's look at the desired infrastructure and how its pieces work together to make the magic happen.

I will try to keep my snippets as concise as possible, leaving out tags and other things that are not directly needed in the templates. However, don't hesitate to document your templates as thoroughly as you can and to tag your resources accordingly.

I initially wanted to avoid splitting this blog post into several parts; however, I decided to do so once it started turning into a booklet.

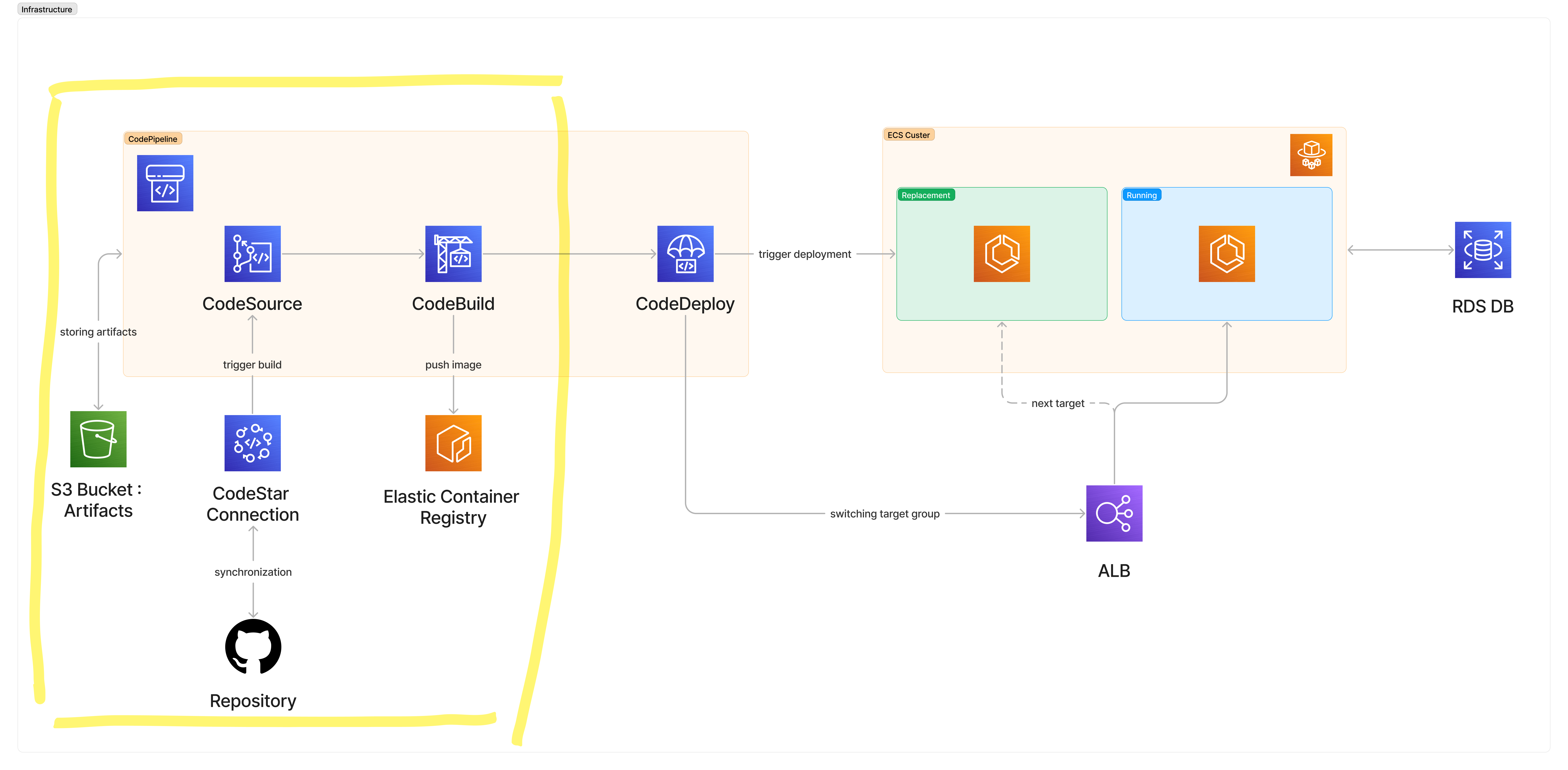

The Big Picture

Here is the big picture of the infrastructure we will set up; the highlighted portion is what this first part of the tutorial tackles.

Although incomplete, the setup built in this first part is already fully functional and useful: you will get fresh Docker images of your app!

Prerequisites

CodeStar Connection

Our first step is to set up a CodeStar connection to our GitHub repository.

The idea of this CodeStar connection is to let AWS monitor one or more repositories and branches, so that our pipeline can act when changes are made.

Since we want to trigger a build whenever the master branch of a single GitHub repository is updated, this is the way to go.

Once deployed, the template creates a new connection, which you can find listed at https://console.aws.amazon.com/codesuite/settings/connections.

The connection starts in a pending state and must be completed manually: authorize AWS on your GitHub account and select the repository (or repositories) it may access. The branch itself is chosen later, in the pipeline's source action. You can find more info about updating a pending connection at https://docs.aws.amazon.com/dtconsole/latest/userguide/connections-update.html.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Resources:
  MyAppGithubConnection:
    Type: AWS::CodeStarConnections::Connection
    Properties:
      ConnectionName: my-app-github-connection
      ProviderType: GitHub
Outputs:
  Arn:
    Value: !GetAtt MyAppGithubConnection.ConnectionArn
```
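The stack's `Arn` output is what the pipeline template later receives as its `CodestarConnectionARN` parameter. If you copy it around by hand, a small pre-flight check can catch a wrong ARN before a stack update fails. This is a minimal Python sketch, not an AWS tool: the regex assumes the usual `arn:partition:service:region:account:connection/<uuid>` layout, also accepts the newer `codeconnections` service prefix, and the account ID and UUID in the example are placeholders.

```python
import re

# Assumed shape of the connection ARN exported by the stack above, e.g.
# arn:aws:codestar-connections:eu-central-1:123456789012:connection/<uuid>
CONNECTION_ARN = re.compile(
    r"^arn:(?P<partition>aws[a-z-]*):"
    r"(?P<service>codestar-connections|codeconnections):"
    r"(?P<region>[a-z0-9-]+):(?P<account>\d{12}):"
    r"connection/(?P<connection_id>[0-9a-f-]{36})$"
)


def parse_connection_arn(arn: str) -> dict:
    """Split a connection ARN into its parts, or raise ValueError."""
    match = CONNECTION_ARN.match(arn)
    if match is None:
        raise ValueError(f"not a CodeStar connection ARN: {arn!r}")
    return match.groupdict()


if __name__ == "__main__":
    # Hypothetical account ID and connection UUID, for illustration only.
    example = ("arn:aws:codestar-connections:eu-central-1:123456789012:"
               "connection/0a1b2c3d-4e5f-6a7b-8c9d-0e1f2a3b4c5d")
    print(parse_connection_arn(example)["region"])  # eu-central-1
```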

Security Groups

My application will need at least three security groups:

- One for the load balancer, which is public-facing and receives HTTP(S) traffic.
- One for the application itself, which only the ALB can contact.
- One for the database, which only the application can reach.

```yaml
AWSTemplateFormatVersion: 2010-09-09
Parameters:
  VpcId:
    Type: String
Resources:
  ALBSG:
    Type: AWS::EC2::SecurityGroup
    Properties:
      GroupDescription: "ALB SG"
      GroupName: alb-sg
      SecurityGroupIngress:
        - Description: "Allows HTTP connections from the outside."
          FromPort: 80
          ToPort: 80
          IpProtocol: tcp
          CidrIp: 0.0.0.0/0
        - Description: "Allows HTTPS connections from the outside."
          FromPort: 443
          ToPort: 443
          IpProtocol: tcp
          CidrIp: 0.0.0.0/0
      VpcId: !Ref VpcId
  ApplicationSG:
    Type: AWS::EC2::SecurityGroup
    Properties:
      GroupDescription: "Application SG"
      GroupName: app-sg
      SecurityGroupIngress:
        - Description: "Allows HTTP connections from production ALB."
          FromPort: 4000 # Because my app is running on 4000
          IpProtocol: tcp
          SourceSecurityGroupId: !GetAtt ALBSG.GroupId
          ToPort: 4000
      VpcId: !Ref VpcId
  DatabaseSG:
    Type: AWS::EC2::SecurityGroup
    Properties:
      GroupDescription: "Database SG"
      GroupName: database-sg
      SecurityGroupIngress:
        - Description: "Allows Postgres connections from the production application."
          IpProtocol: tcp
          FromPort: 5432
          ToPort: 5432 # Postgres
          SourceSecurityGroupId: !GetAtt ApplicationSG.GroupId
      VpcId: !Ref VpcId
Outputs:
  ALBSecurityGroupId:
    Value: !GetAtt ALBSG.GroupId
  ApplicationSecurityGroupId:
    Value: !GetAtt ApplicationSG.GroupId
  DBSecurityGroupId:
    Value: !GetAtt DatabaseSG.GroupId
```
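To make the chain of trust between the three groups explicit, here is a toy Python model of their ingress rules. It is only an illustration: security groups become plain sets of (source, port) pairs, egress is ignored (groups created this way keep the default allow-all outbound rule), the protocol is assumed to be TCP, and `"0.0.0.0/0"` stands in for the internet.

```python
# Ingress rules of the template above, as (source, port) pairs per group.
# "0.0.0.0/0" stands for the internet; other sources are group names.
INGRESS = {
    "alb-sg": {("0.0.0.0/0", 80), ("0.0.0.0/0", 443)},
    "app-sg": {("alb-sg", 4000)},
    "database-sg": {("app-sg", 5432)},
}


def can_reach(source: str, target: str, port: int) -> bool:
    """True if `target`'s ingress rules let `source` in on TCP `port`."""
    return (source, port) in INGRESS.get(target, set())


if __name__ == "__main__":
    checks = [
        ("0.0.0.0/0", "alb-sg", 443),     # public HTTPS to the ALB
        ("0.0.0.0/0", "app-sg", 4000),    # internet straight to the app
        ("alb-sg", "app-sg", 4000),       # ALB forwarding to the app
        ("alb-sg", "database-sg", 5432),  # ALB trying the database
        ("app-sg", "database-sg", 5432),  # app talking to Postgres
    ]
    for source, target, port in checks:
        print(f"{source:>10} -> {target}:{port}: {can_reach(source, target, port)}")
```

Only the hop-by-hop paths are open: the internet reaches the ALB, the ALB reaches the app, and the app reaches the database.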

Database

This template creates a simple database in the selected Availability Zone; nothing crazy here. Pass it the security group ID and the database will sit there, ready to receive connections from your application.

You may need to perform a first manual operation on your DB server, such as creating the database itself. In that case, you have two options:

- Set `PubliclyAccessible` to `true` and open the right ports in the `SecurityGroup`. Don't forget to restrict them again afterward.
- Set up a bastion EC2 instance to operate on your database, and turn it on only when needed (the preferred solution here).

```yaml
AWSTemplateFormatVersion: 2010-09-09
Parameters:
  MasterUsername:
    Type: String
  MasterUserPassword:
    Type: String
    NoEcho: true # Mask the password in stack descriptions and the console
  VPCSecurityGroupId:
    Type: String
Resources:
  Database:
    Type: AWS::RDS::DBInstance
    Properties:
      AllocatedStorage: 30
      AutoMinorVersionUpgrade: true
      AvailabilityZone: eu-central-1a
      CopyTagsToSnapshot: true
      DBInstanceClass: db.t3.small
      DBInstanceIdentifier: myapp-production
      Engine: postgres
      MasterUsername: !Ref MasterUsername
      MasterUserPassword: !Ref MasterUserPassword
      PubliclyAccessible: false
      VPCSecurityGroups:
        - !Ref VPCSecurityGroupId
    DeletionPolicy: Snapshot
```
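Phoenix projects generated with `mix phx.new` typically read the database location from a `DATABASE_URL` environment variable in `config/runtime.exs`, in the form `ecto://USER:PASS@HOST/DATABASE`. Assuming your app follows that convention, the sketch below shows how the pieces of this template fit into that URL: the host would be the instance's endpoint (`!GetAtt Database.Endpoint.Address`) and the port defaults to 5432. Credentials are percent-encoded so characters such as `:` or `%` in the password don't break the URL; check that your Ecto version decodes them. The `database_url` helper, host, and database name are hypothetical.

```python
from urllib.parse import quote


def database_url(user: str, password: str, host: str, database: str,
                 port: int = 5432) -> str:
    """Build an ecto:// URL with percent-encoded credentials and database name."""
    return (f"ecto://{quote(user, safe='')}:{quote(password, safe='')}"
            f"@{host}:{port}/{quote(database, safe='')}")


if __name__ == "__main__":
    # Hypothetical values; in practice the host comes from the RDS endpoint.
    print(database_url("myapp", "pa:ss%word", "db.example.internal", "myapp_prod"))
```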

CodePipeline: Source and Build

Now the real work can start. I will explain, logically and step by step, the parts and resources needed to build the whole pipeline and get continuous deployment triggered on every update to the master branch, with zero downtime thanks to ECS Blue/Green deployments.

These explanations follow the sub-goals for setting up the pipeline:

- Set up an ECR repository.
- Make a first version of the pipeline that can:
  - Pull your source
  - Build the application image
  - Push it to ECR
- Deploy the first version of your app on an ECS cluster.
- Associate your ECS deployment with an ALB.
- Complete the CodePipeline so that CodeDeploy can trigger the ECS Blue/Green deployment by:
  - Spinning up a new instance of the app and pairing it with a listener
  - Changing the target of the ALB to point to your new instance
  - Terminating the old instance once the checks are green
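To fix ideas before Part II, the last sub-goal can be modelled as a tiny state machine. The Python sketch below is a toy, not CodeDeploy's API: the `Listener` and `Service` classes and the `tg-blue`/`tg-green` target-group names are hypothetical, and it skips what the real service handles (traffic-shifting options, a wait time before old tasks are terminated, rollbacks after the switch). It only shows the order of operations: start green next to blue, switch the listener once green is healthy, then retire blue.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Listener:
    """Stand-in for the ALB listener: it forwards to a single target group."""
    target_group: str


@dataclass
class Service:
    listener: Listener
    running: Dict[str, str] = field(default_factory=dict)  # target group -> version

    def blue_green(self, new_version: str, healthy: Callable[[str], bool]) -> str:
        """Deploy `new_version` and return the target group left serving traffic."""
        blue = self.listener.target_group
        green = "tg-green" if blue == "tg-blue" else "tg-blue"
        self.running[green] = new_version   # spin up the replacement tasks
        if not healthy(green):              # checks failed: drop green, keep blue
            del self.running[green]
            return blue
        self.listener.target_group = green  # reroute production traffic
        del self.running[blue]              # terminate the old tasks
        return green


if __name__ == "__main__":
    service = Service(Listener("tg-blue"), {"tg-blue": "v1"})
    print(service.blue_green("v2", healthy=lambda tg: True), service.running)
```

Traffic never points at a target group that hasn't passed its checks, which is where the zero-downtime property comes from.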

For clarity, I will keep a single snippet and progressively enhance it throughout the section. Untouched resources stay "collapsed" behind `#...`, as they would look when folded in a code editor.

ECR Repository

Nothing crazy here: you now have an image repository for myapp.

```yaml
AWSTemplateFormatVersion: 2010-09-09
Resources:
  ElasticContainerRegistry:
    Type: AWS::ECR::Repository
    Properties:
      EncryptionConfiguration:
        EncryptionType: KMS
      RepositoryName: myapp
```
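The templates in this post refer to this repository in two ways: `!Ref ElasticContainerRegistry` (passed to CodeBuild as `IMAGE_REPO_NAME`) returns the repository name, while `!GetAtt ElasticContainerRegistry.Arn` (used in the CodeBuild role) returns its ARN. The small Python helper below just spells out those two values; it is illustrative, assumes the standard `aws` partition, and the account ID in the example is a placeholder.

```python
def ecr_values(repository_name: str, region: str, account_id: str) -> dict:
    """Values the intrinsic functions resolve to for an AWS::ECR::Repository."""
    return {
        # !Ref ElasticContainerRegistry -> repository name
        "ref": repository_name,
        # !GetAtt ElasticContainerRegistry.Arn -> repository ARN (aws partition)
        "arn": f"arn:aws:ecr:{region}:{account_id}:repository/{repository_name}",
    }


if __name__ == "__main__":
    print(ecr_values("myapp", "eu-central-1", "123456789012"))
```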

Building Pipeline

Now let's tackle the first heavy part: a CodePipeline that builds our app from source and pushes the image to the repository.

To build this, we need to:

- Add an S3 bucket to store the artifacts created by the pipeline along the way.
- Create the roles that CodePipeline and CodeBuild need to interact with other resources.
- Create the CodeBuild project, which expects a `buildspec.yml` file in the source (you can specify its path, as I did by placing it in a `.aws` directory in my project folder).
- Create the base pipeline with Source and Build stages.

ArtifactStore bucket

Our first step is to set up an S3 bucket where the CodePipeline will store its artifacts; nothing crazy at this stage.

```yaml
AWSTemplateFormatVersion: 2010-09-09
Parameters:
  CodestarConnectionARN:
    Type: String
  VpcId:
    Type: String
Resources:
  ElasticContainerRegistry: #...
  ArtifactStoreBucket:
    Type: AWS::S3::Bucket
    Properties:
      BucketName: myapp-pipeline-artifacts
      VersioningConfiguration:
        Status: Enabled
```
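The roles that follow grant access to this bucket with `!Sub arn:aws:s3:::${ArtifactStoreBucket}/*`. Inside `!Sub`, `${ArtifactStoreBucket}` resolves like `!Ref`, which for an S3 bucket is the bucket name, so the resource becomes every object in `myapp-pipeline-artifacts`. The Python function below is a rough emulation of that substitution for illustration only: it is not how CloudFormation implements `Fn::Sub`, it resolves names from a plain dict, and it only handles the `${Name}` and `${!Literal}` forms.

```python
import re

# ${Name}, ${Resource.Attribute} or ${AWS::Pseudo}; the lookahead skips the
# ${!Literal} escape, which Fn::Sub renders as a literal ${Literal}.
PLACEHOLDER = re.compile(r"\$\{(?!!)([A-Za-z0-9_.:]+)\}")


def sub(template: str, values: dict) -> str:
    """Substitute each placeholder from `values`, failing on unknown names."""
    def replace(match):
        name = match.group(1)
        if name not in values:
            raise KeyError(f"unresolved placeholder: {name}")
        return values[name]

    return PLACEHOLDER.sub(replace, template).replace("${!", "${")


if __name__ == "__main__":
    print(sub("arn:aws:s3:::${ArtifactStoreBucket}/*",
              {"ArtifactStoreBucket": "myapp-pipeline-artifacts"}))
```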

CodePipeline Role

For CodePipeline to operate properly, we need to set up a dedicated role with permissions to:

- Use the `ArtifactStoreBucket` we created previously
- Use the CodeStar connection to fetch our source code
- Trigger the CodeBuild project we are about to define

```yaml
AWSTemplateFormatVersion: 2010-09-09
Parameters:
  CodestarConnectionARN: #...
  VpcId: #...
Resources:
  ElasticContainerRegistry: #...
  ArtifactStoreBucket: #...
  PipelineRole:
    Type: AWS::IAM::Role
    Properties:
      RoleName: codepipeline-role
      AssumeRolePolicyDocument:
        Version: 2012-10-17
        Statement:
          - Action: sts:AssumeRole
            Effect: Allow
            Principal:
              Service: codepipeline.amazonaws.com
      Policies:
        - PolicyName: artifact-storage-bucket-access
          PolicyDocument:
            Version: 2012-10-17
            Statement:
              - Effect: Allow
                Action:
                  - s3:GetObject
                  - s3:PutObject
                  - s3:GetObjectVersion
                Resource: !Sub arn:aws:s3:::${ArtifactStoreBucket}/*
        - PolicyName: codepipeline-access
          PolicyDocument:
            Version: 2012-10-17
            Statement:
              - Effect: Allow
                Action:
                  - codestar-connections:UseConnection
                Resource: !Ref CodestarConnectionARN
              - Effect: Allow
                Action:
                  - codebuild:StartBuild
                  - codebuild:BatchGetBuilds
                Resource: !GetAtt CodeBuildProject.Arn
```
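To reason about what this role can and cannot do, it helps to evaluate a few requests against its statements. The Python sketch below is a deliberately simplified model of IAM evaluation (Allow statements only; no Deny, conditions, or organization policies), with the `!Sub`, `!Ref` and `!GetAtt` values replaced by hypothetical resolved ARNs. Anything not matched falls through to the implicit deny, which is why, for example, the role cannot delete objects from the artifacts bucket.

```python
from fnmatch import fnmatchcase

# PipelineRole statements with intrinsic functions replaced by hypothetical values.
PIPELINE_ROLE_STATEMENTS = [
    {"Effect": "Allow",
     "Action": ["s3:GetObject", "s3:PutObject", "s3:GetObjectVersion"],
     "Resource": "arn:aws:s3:::myapp-pipeline-artifacts/*"},
    {"Effect": "Allow",
     "Action": ["codestar-connections:UseConnection"],
     "Resource": "arn:aws:codestar-connections:eu-central-1:123456789012:connection/example"},
    {"Effect": "Allow",
     "Action": ["codebuild:StartBuild", "codebuild:BatchGetBuilds"],
     "Resource": "arn:aws:codebuild:eu-central-1:123456789012:project/application-build"},
]


def _as_list(value):
    return [value] if isinstance(value, str) else list(value)


def is_allowed(statements, action: str, resource: str) -> bool:
    """Simplified check: any matching Allow grants access, otherwise implicit deny.

    Actions match case-insensitively; both fields use * and ? wildcards via
    fnmatch (which also treats [...] as a character set, unlike IAM).
    """
    for statement in statements:
        if statement["Effect"] != "Allow":
            continue
        action_ok = any(fnmatchcase(action.lower(), pattern.lower())
                        for pattern in _as_list(statement["Action"]))
        resource_ok = any(fnmatchcase(resource, pattern)
                          for pattern in _as_list(statement["Resource"]))
        if action_ok and resource_ok:
            return True
    return False


if __name__ == "__main__":
    key = "arn:aws:s3:::myapp-pipeline-artifacts/some/object"
    print(is_allowed(PIPELINE_ROLE_STATEMENTS, "s3:GetObject", key))
    print(is_allowed(PIPELINE_ROLE_STATEMENTS, "s3:DeleteObject", key))
```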

CodeBuild Role

The CodeBuild project we will define next also needs some permissions:

- As we want to build Docker images, it must log in to ECR and push to our repository
- It must write logs for the build process
- It must access the artifacts bucket

```yaml
AWSTemplateFormatVersion: 2010-09-09
Parameters:
  CodestarConnectionARN: #...
  VpcId: #...
Resources:
  ElasticContainerRegistry: #...
  ArtifactStoreBucket: #...
  PipelineRole: #...
  CodeBuildRole:
    Type: AWS::IAM::Role
    Properties:
      RoleName: codebuild-role
      AssumeRolePolicyDocument:
        Version: 2012-10-17
        Statement:
          - Effect: Allow
            Principal:
              Service: codebuild.amazonaws.com
            Action: sts:AssumeRole
      Policies:
        - PolicyName: push-to-ecr
          PolicyDocument:
            Version: 2012-10-17
            Statement:
              - Effect: Allow
                Action:
                  - ecr:CompleteLayerUpload
                  - ecr:UploadLayerPart
                  - ecr:InitiateLayerUpload
                  - ecr:BatchCheckLayerAvailability
                  - ecr:PutImage
                Resource: !GetAtt ElasticContainerRegistry.Arn
              - Effect: Allow
                Action:
                  - ecr:GetAuthorizationToken # No resource-level permissions: needs '*'
                Resource: '*'
        - PolicyName: log-authorizations
          PolicyDocument:
            Version: 2012-10-17
            Statement:
              - Effect: Allow
                Action:
                  - logs:CreateLogGroup
                  - logs:CreateLogStream
                  - logs:PutLogEvents
                Resource: '*' # Log group and per-build streams are created by CodeBuild at run time
        - PolicyName: artifact-storage-bucket-access
          PolicyDocument:
            Version: 2012-10-17
            Statement:
              - Effect: Allow
                Action:
                  - s3:GetObject
                  - s3:PutObject
                  - s3:GetObjectVersion
                Resource: !Sub arn:aws:s3:::${ArtifactStoreBucket}/*
```

CodeBuild Project

Now we can define the CodeBuild project that will take place in the CodePipeline.

As you can see, we use the role defined above and pass some environment variables to the build process. The build steps themselves are defined in a `buildspec.yml` file, which in my case lives in a `.aws` directory, but which CodeBuild expects at the root of your source code by default.

```yaml
AWSTemplateFormatVersion: 2010-09-09
Parameters:
  CodestarConnectionARN: #...
  VpcId: #...
Resources:
  ElasticContainerRegistry: #...
  ArtifactStoreBucket: #...
  PipelineRole: #...
  CodeBuildRole: #...
  CodeBuildProject:
    Type: AWS::CodeBuild::Project
    Properties:
      Name: application-build
      Artifacts:
        Type: CODEPIPELINE
      Source:
        Type: CODEPIPELINE
        BuildSpec: ".aws/buildspec.yml"
      Environment:
        ComputeType: BUILD_GENERAL1_SMALL
        Image: aws/codebuild/standard:6.0
        Type: LINUX_CONTAINER
        PrivilegedMode: true # Needed to perform docker operations
        EnvironmentVariables:
          - Name: AWS_ACCOUNT_ID
            Type: PLAINTEXT
            Value: !Ref AWS::AccountId
          - Name: IMAGE_REPO_NAME
            Type: PLAINTEXT
            Value: !Ref ElasticContainerRegistry
      ServiceRole: !GetAtt CodeBuildRole.Arn # The property documents the role ARN
```

Buildspec.yml

As you can see, I simply use environment variables that CodeBuild provides by default, such as $CODEBUILD_RESOLVED_SOURCE_VERSION and $AWS_DEFAULT_REGION, along with the ones I passed in, such as $AWS_ACCOUNT_ID.

The full list of available environment variables can be found at https://docs.aws.amazon.com/codebuild/latest/userguide/build-env-ref-env-vars.html.

In this specification, I build a Docker image for my application and push it to the repository, tagged with both the short commit hash and latest.

```yaml
version: 0.2
phases:
  pre_build:
    commands:
      - IMAGE_TAG=$(echo $CODEBUILD_RESOLVED_SOURCE_VERSION | cut -c 1-7) # Derive commit short hash
      - ECR_IDENTIFIER="$AWS_ACCOUNT_ID.dkr.ecr.$AWS_DEFAULT_REGION.amazonaws.com"
      - IMAGE_REGISTRY_PATH="$ECR_IDENTIFIER/$IMAGE_REPO_NAME"
      - echo Logging in to Amazon ECR...
      - aws ecr get-login-password --region $AWS_DEFAULT_REGION | docker login --username AWS --password-stdin $ECR_IDENTIFIER
  build:
    commands:
      - echo Build started on `date`
      - echo Building the Docker image...
      - docker build -t $IMAGE_REGISTRY_PATH:$IMAGE_TAG -t $IMAGE_REGISTRY_PATH:latest .
  post_build:
    commands:
      - echo Build completed on `date`
      - echo Pushing the Docker image...
      - docker push --all-tags $IMAGE_REGISTRY_PATH
```
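If you want to check what the `pre_build` and `build` phases produce without running a build, the same string manipulation can be mirrored in a few lines of Python. This is purely illustrative: the `image_references` function is hypothetical, its inputs stand for the `CODEBUILD_RESOLVED_SOURCE_VERSION`, `AWS_ACCOUNT_ID`, `AWS_DEFAULT_REGION` and `IMAGE_REPO_NAME` variables, and the commit hash and account ID in the example are made up.

```python
def image_references(source_version: str, account_id: str, region: str,
                     repo_name: str) -> list:
    """Mirror the buildspec: derive the two image references it tags and pushes."""
    image_tag = source_version[:7]                   # cut -c 1-7
    ecr_identifier = f"{account_id}.dkr.ecr.{region}.amazonaws.com"
    registry_path = f"{ecr_identifier}/{repo_name}"  # IMAGE_REGISTRY_PATH
    # docker build -t $IMAGE_REGISTRY_PATH:$IMAGE_TAG -t $IMAGE_REGISTRY_PATH:latest
    return [f"{registry_path}:{image_tag}", f"{registry_path}:latest"]


if __name__ == "__main__":
    for ref in image_references("3f2a9c1e5b7d", "123456789012", "eu-central-1", "myapp"):
        print(ref)
```

Because `docker push --all-tags` pushes every local tag of the repository, both references end up in ECR from a single push command.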

CodePipeline

Now we can lay out the first version of our pipeline, which builds an image whenever the source changes and stores it in ECR, thanks to the resources we created before.

This pipeline defines two stages:

- The source stage uses the CodeStar connection, specifies which repository and branch to use, and defines the `OutputArtifacts`. In this case, the artifact is the source code itself, which CodeBuild will then use.
- The build stage takes the source code provided by the previous stage and runs what we defined in the `buildspec.yml` file.

```yaml
AWSTemplateFormatVersion: 2010-09-09
Parameters:
  CodestarConnectionARN: #...
  VpcId: #...
Resources:
  ElasticContainerRegistry: #...
  ArtifactStoreBucket: #...
  PipelineRole: #...
  CodeBuildRole: #...
  CodeBuildProject: #...
  Pipeline:
    Type: AWS::CodePipeline::Pipeline
    Properties:
      ArtifactStore:
        Location: !Ref ArtifactStoreBucket
        Type: S3
      Name: production-pipeline
      RoleArn: !GetAtt PipelineRole.Arn
      Stages:
        - Name: Source
          Actions:
            - Name: Source
              ActionTypeId:
                Version: '1'
                Owner: AWS
                Category: Source
                Provider: CodeStarSourceConnection
              OutputArtifacts:
                - Name: SourceCode
              Configuration:
                ConnectionArn: !Ref CodestarConnectionARN
                BranchName: master
                FullRepositoryId: "myGithubUser/myApp"
              RunOrder: 1
        - Name: Build
          Actions:
            - Name: Build
              ActionTypeId:
                Category: Build
                Owner: AWS
                Version: '1'
                Provider: CodeBuild
              Configuration:
                ProjectName: !Ref CodeBuildProject
              InputArtifacts:
                - Name: SourceCode
              OutputArtifacts:
                - Name: BuildOutput
              RunOrder: 1
```
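A common mistake when extending this pipeline is a typo in an artifact name, so that an action consumes an artifact nobody produces; CodePipeline should reject such a definition when the stack is deployed, but the check is easy to run locally. The Python below is a simplified, hypothetical helper that mirrors the `Stages` above as plain dicts, walks them in order, and reports any `InputArtifacts` not produced by an earlier action. It ignores `RunOrder` and parallel actions within a stage.

```python
# The Stages of the template above, reduced to names and artifacts.
PIPELINE_STAGES = [
    {"Name": "Source", "Actions": [
        {"Name": "Source", "OutputArtifacts": [{"Name": "SourceCode"}]}]},
    {"Name": "Build", "Actions": [
        {"Name": "Build",
         "InputArtifacts": [{"Name": "SourceCode"}],
         "OutputArtifacts": [{"Name": "BuildOutput"}]}]},
]


def check_artifact_wiring(stages: list) -> list:
    """List actions whose input artifacts no earlier action has produced."""
    produced, problems = set(), []
    for stage in stages:
        for action in stage["Actions"]:
            for artifact in action.get("InputArtifacts", []):
                if artifact["Name"] not in produced:
                    problems.append(f"{stage['Name']}/{action['Name']}: "
                                    f"unknown input artifact {artifact['Name']}")
            for artifact in action.get("OutputArtifacts", []):
                produced.add(artifact["Name"])
    return problems


if __name__ == "__main__":
    print(check_artifact_wiring(PIPELINE_STAGES) or "artifact wiring looks fine")
```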

What's next?

You should now have a running CodePipeline!

Once the template has been launched and the resources created, try pushing to your selected branch and watch the machinery kick in: pulling your source, building a Docker image of your app, and storing it in ECR.

With this, we are ready to turn it into a resilient ECS Blue/Green deployment, automated in your pipeline.

Part 2: https://blog.srozen.com/cloudformation-codepipeline-and-ecs-bluegreen-deployments-part-ii
