Scrapping Stock Data with Sveltekit and Playwright

Rod Lu

Rod Lu

In this article we will see how to use Sveltekit and Playwright to achieve 2 main goals:

Build a

Playwrightweb scraper to extract stock price from a public investment website and save them to aPostgresdatabase;Display extracted stock data on a Sveltekit web page;

The latest version of Sveltekit comes with Playwright option for automated testing as part of its installation. We will play around with that to do some web scraping.

Playwright is an amazing framework that enables reliable end-to-end testing for modern web apps.

Packaged as a Node. js library, Playwright can run in headless mode to automate Chromium, Firefox, and WebKit with a single API that enables cross-browser web automation that is reliable and fast.

You could use both Playwright and Sveltekit on any virtual machine cloud provider or Platform as a service such as Digital Ocean or just run it locally.

There are several ways you could deploy Sveltekit and Playwright in a production environment. The cheapest one is probably to set up a virtual machine or a container in a cloud environment that supports Node.js.

To make things simpler, in our scenario, we will set up Playwright with Sveltekit in our local environment using a server-side script +page.server.ts as part of the Sveltekit framework project architecture.

A +page.server.ts runs entirely on the server side and is useful when you don't want to expose connection string data which should be secured.

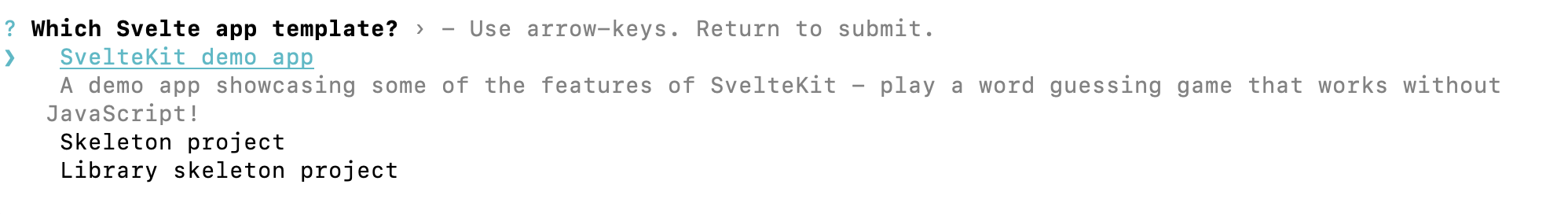

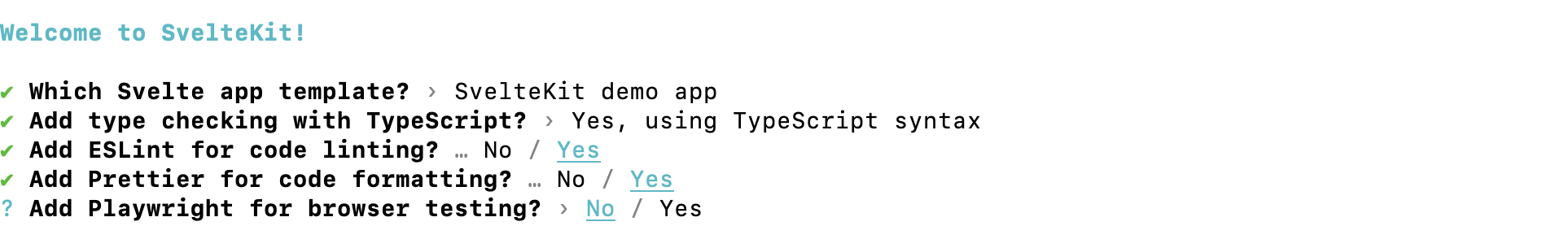

To start, we need to install the Sveltekit application using the following commands:

npm create svelte@latest stocks-scraper

cd stocks-scraper

npm install

npm run dev

Next, we will select Sveltekit Demo since it already ships with some pre-configured layout pages and we will just do some small modifications.

We will select typescript, but you could use just plain javascript, just don't forget to install Playwright.

For our header navigation, we will just have two pages:

Stocks to display data;

Scraper page just to run the web scrapping;

/routes/Header.svelte

<nav>

<svg viewBox="0 0 2 3" aria-hidden="true">

<path d="M0,0 L1,2 C1.5,3 1.5,3 2,3 L2,0 Z" />

</svg>

<ul>

<li aria-current={$page.url.pathname === '/' ? 'page' : undefined}>

<a href="/">Home</a>

</li>

<li aria-current={$page.url.pathname === '/' ? 'stocks' : undefined}>

<a href="/stocks">Stocks</a>

</li>

<li aria-current={$page.url.pathname === '/scraper' ? 'page' : undefined}>

<a href="/scraper">Scraper</a>

</li>

</ul>

<svg viewBox="0 0 2 3" aria-hidden="true">

<path d="M0,0 L0,3 C0.5,3 0.5,3 1,2 L2,0 Z" />

</svg>

</nav>

Inside Sveltekit's project, under the "routes" folder just create two folders: stocks and scraper. These will be the only 2 pages we will need.

We will start building the Scraper first since we will need the scraped data to save on our database.

Inside the scraper folder create a file +page.server.ts in which we will set up Sveltekit Load function that will be used to run the server-side script:

/scraper/+page.server.ts

import playwright from 'playwright';

import { Client } from 'pg';

export const load = async () => {

const browser = await playwright.chromium.launch({

headless: false,

args: [

'--user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.82 Safari/537.36'

]

// devtools: true

});

const page = await browser.newPage({ bypassCSP: true });

const stocks = await listStocks();

for (let i = 0; i < stocks.length; i++) {

let stock = stocks[i];

//await scrapStock(stock, page, browser);

await page.waitForTimeout(1000)

}

await browser.close();

return {

stocks: { status: 'success' }

};

};

In order to run Playwright using Chomium browser make sure you set headless to false. This will open up chromium with "npm run dev" which makes it a lot easier to see what is going on and debug errors during the development stage.

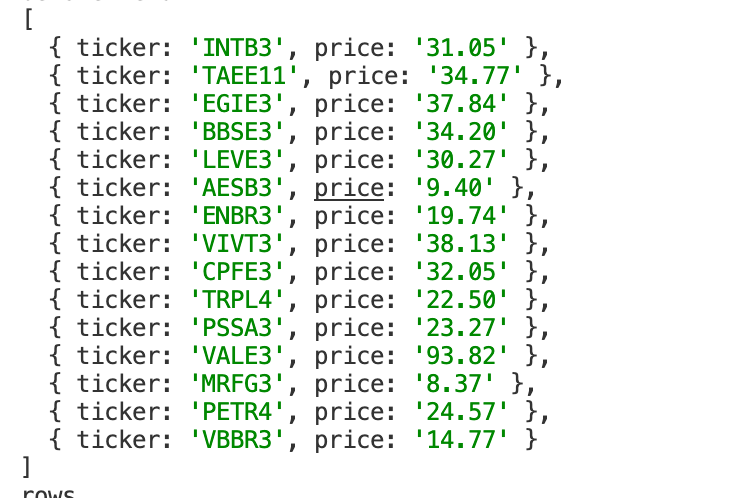

Next, we will implement listStocks function to grab some existing stock tickers from our database so that we can inform our web scrapper to use them.

/scraper/+page.server.ts

const listStocks = async () => {

const connectionString = 'postgres://username:password@localhost:5432/stocks_scraper';

const query = 'SELECT ticker, price FROM stocks order by id';

const client = new Client({

connectionString: connectionString

});

try {

await client.connect();

const res = await client.query(query);

client.end();

return res.rows;

} catch (err) {

console.log(err);

client.end();

return [];

}

};

Later, we will need to set up our Postgres database and insert some tickers.

For security purposes, you should not leave the connection string exposed like this and should store it preferably in an environmental variable.

We will not go over the setup of database users or schema security for this scenario.

But in case you need the basic configuration, here is the basic script to set up the database which we will call stocks_scraper.

CREATE DATABASE database_name;

create table stocks

(

ticker varchar(30)

constraint unique_ticker

unique,

price numeric(10, 2),

id serial

primary key

);

# Here is the script to insert some tickers

INSERT INTO stocks (ticker, price) VALUES ('PETR4', 0);

Observe that in our main Load function, we have commented on the scrapStock function, but now we will need to implement it. Just, don't forget to uncomment it later.

This function has 3 arguments: the stock object which contains the ticker and the price and the page and browser objects that are part of the Playwright framework.

//await scrapStock(stock, page, browser);

/scraper/+page.server.ts

async function scrapStock(stock, page, browser) {

await page.goto(`https://statusinvest.com.br/acoes/${stock.ticker}`);

await page.waitForLoadState();

const price = await page.$eval(

'div[title="Valor atual do ativo"] .value',

(el) => el.textContent

);

console.log(price);

updateStock(stock.ticker, price);

return null;

}

Since I am in Brazil, I am going to use a well-known Brazilian investment called StatusInvest site to extract information, but you can adapt to whatever public investment site you need.

I like this site because it is very clean and fast and speed is something you need to consider when running automated data scraping over the web.

In the script above we start by telling Playwright to go to the stock info page, for instance, https://statusinvest.com.br/acoes/PETR4.

Next, we wait for the complete state of the page to finish loading to avoid possible errors. Next, we grab the price using a very simple CSS selector using surround text "Valor atual do ativo" as a key.

Finally, after we get the new price, we just update our stock with the most recent price in the database.

The last part is very important. You could return the new object, but in our case, since we just use this function to update the database we can just return null.

But pay attention, since you run this function in async mode if you don't return anything you may face problems during the execution of the automation script.

Since we are scraping prices in Brazilian currency, we need to convert them to standard decimal-floating-point to ensure accurate calculations and avoid rounding errors.

For this purpose, we will use the function below which just replaces commas with dots and parses the final result to a floating decimal.

/scraper/+page.server.ts

function convertToUSCurrency(value) {

value = value.replace(',', '#');

value = value.replace('.', '');

value = value.replace('#', '.');

let number = parseFloat(value);

return number;

}

For our scraper svelte +page.js we can just create a very simple code. You could return something like a status "success", or maybe implement a button to call our scraper inside Stocks Page, but for demonstration purposes, I preferred to keep it simple as a regular Sveletkit route with just dummy code.

/scraper/+page.ts

<div class="text-column">

<h1>Web Scraping</h1>

</div>

Now that we have our stock data updated, we can now work on displaying it on the UI using Sveltekit.

Inside our stocks folder we just created under routes, we need 2 files:

+page.server.ts

+page.svelte.

The +page.server.ts is where we will grab data from our Postgres database and return our stocks object to our UI on +page.ts.

import {Client} from 'pg';

export const load = async () => {

const connectionString = 'postgres://postgres:123456@localhost:5432/stocks_scraper';

const client = new Client({

connectionString: connectionString

});

const query = client.connect()

.then(() => {

const query = 'SELECT ticker, price FROM stocks';

return client.query(query);

})

.then(res => {

console.log(res.rows);

console.log('rows');

client.end();

return res.rows;

})

.catch(err => {

console.log(err);

client.end();

return [];

});

return {stocks: query};

}

If you refresh the page you should see the output of our updated stocks table on the console on Vscode:

Although the process seems simple, it takes a lot of steps to implement it. We just covered the basics of it because they're way more stuff to play around like the Playwright inspector and error handling.

Finally, we just need to display it on screen on our Sveltekit Page +page.svelte. To access the returned stocks object we just destructure our data to a stock array of objects.

<script lang="ts">

export let data;

const { stocks } = data;

console.log(data);

</script>

{#each stocks as item}

<h1>{item.ticker} - {item.price}</h1>

{/each}

PS: Don't forget to change headless flag to true when you deploy it on the server.

Subscribe to my newsletter

Read articles from Rod Lu directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Rod Lu

Rod Lu

I am a web developer with over 10 years of experience in private and goverment sector, including 7 years in working as a developer in the the US; Experience with Mobile, Frontend and Backend applications; Experience with Startups; Experience in leading teams; Specialized in JavaScript and have professional experience working with Typescript and Svelte/Sveltekit. - I also have experience with web scrapping automations using Playwright and Pupeteer, CRM and ERP systems integrations and project management. I also have experience working with Angular, Vue, C#, Ruby on Rails Mobile as well as SQL and Non SQL distributed databases.