Exploring the Use of Generative Adversarial Networks in Image Synthesis

Susan Adesoji


Abstract: Generative adversarial networks (GANs) have proven to be a powerful machine learning tool for generating synthetic data. This article explores the use of GANs in image synthesis, covering their architecture and training as well as their potential applications and limitations.

Introduction: Generative Adversarial Networks (GANs) are a type of deep learning model designed to generate synthetic data that mimics real-world data. GANs consist of two main components: a generator network and a discriminator network. The generator network produces synthetic data, while the discriminator network attempts to distinguish synthetic data from real data. The two networks are trained together in a game-theoretic framework, where the generator tries to produce data that can fool the discriminator while the discriminator tries to correctly identify the synthetic data.
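The game described above is commonly written as a minimax objective. The following is a sketch in the standard notation of the GAN literature; the symbols are introduced here for illustration and are not used elsewhere in this article. $G$ is the generator, $D$ the discriminator, $x$ a real sample drawn from the data distribution $p_{\text{data}}$, and $z$ a noise vector drawn from a prior $p_z$:

```latex
\min_{G} \max_{D} \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}} \left[ \log D(x) \right]
  + \mathbb{E}_{z \sim p_{z}} \left[ \log \left( 1 - D(G(z)) \right) \right]
```

The discriminator raises $V$ by assigning high probability to real samples and low probability to generated ones, while the generator lowers $V$ by making $D(G(z))$ as large as possible.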

Architecture and Training: For image synthesis, both networks in a GAN are typically convolutional neural networks (CNNs). The generator takes a random noise vector as input and produces an image through a series of transposed convolutional layers. The discriminator takes an image as input and outputs a scalar value representing the probability that the image is real. Training updates the weights of the two networks in alternation: the discriminator is trained to minimize its own classification error, while the generator is trained to maximize that error on the images it produces.

GANs have gained significant attention in recent years because of their ability to generate synthetic data that mimics real-world data. As outlined in the introduction, they do this by pairing a generator with a discriminator in an adversarial game.

GANs have been particularly successful in image synthesis, where they have been used to generate realistic images of objects, scenes, and people. The rest of this article looks at the architecture and training of GANs for image synthesis, followed by their potential applications and limitations.

The architecture of GANs for image synthesis typically consists of convolutional neural networks (CNNs). The generator network takes a random noise vector as input and generates an image through a series of transposed convolutional layers. The discriminator network, on the other hand, takes an image as input and outputs a scalar value representing the probability that the image is real.
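To make the layer arithmetic concrete, the sketch below traces how spatial size changes through a stack of transposed convolutions (generator) and ordinary strided convolutions (discriminator). The size formulas are the standard ones for dilation 1. The layer settings and the function names are assumptions chosen for illustration, loosely in the style of common DCGAN setups, and are not taken from this article.

```python
def conv_transpose_out(size, kernel, stride, padding, output_padding=0):
    """Spatial size after one transposed convolution (dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


def conv_out(size, kernel, stride, padding):
    """Spatial size after one ordinary convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


# Hypothetical layer settings as (kernel, stride, padding) tuples.
GENERATOR_LAYERS = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]
DISCRIMINATOR_LAYERS = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 0)]


def generator_sizes(layers, start=1):
    """Sizes seen by the generator, starting from a 1x1 noise 'image'."""
    sizes = [start]
    for kernel, stride, padding in layers:
        sizes.append(conv_transpose_out(sizes[-1], kernel, stride, padding))
    return sizes


def discriminator_sizes(layers, start):
    """Sizes seen by the discriminator, ending at a single scalar score."""
    sizes = [start]
    for kernel, stride, padding in layers:
        sizes.append(conv_out(sizes[-1], kernel, stride, padding))
    return sizes


print(generator_sizes(GENERATOR_LAYERS))          # [1, 4, 8, 16, 32, 64]
print(discriminator_sizes(DISCRIMINATOR_LAYERS, 64))  # [64, 32, 16, 8, 4, 1]
```

With these assumed settings, the generator upsamples a 1x1 noise input to a 64x64 image, and the discriminator mirrors that path back down to one value per image.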

Training a GAN involves updating the weights of both networks in alternation. The discriminator is trained to minimize its own classification error, that is, to label real images as real and synthetic images as synthetic, while the generator is trained to maximize that error on the images it produces. This creates a feedback loop: as the discriminator gets better at spotting synthetic data, the generator has to produce more convincing data to fool it.
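The alternating updates can be sketched on a deliberately tiny problem. The example below is an illustration under strong assumptions, not a practical image model: the "images" are single numbers, the generator is a linear map, the discriminator is a logistic classifier, gradients are derived by hand, and each step uses one sample with plain gradient descent. All function names, parameter names, and starting values are hypothetical.

```python
import math
import random

EPS = 1e-12  # keeps log() away from zero


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def generator(z, a, b):
    """Toy generator: maps a noise sample z to a synthetic value."""
    return a * z + b


def discriminator(x, w, c):
    """Toy discriminator: probability that x is real."""
    return sigmoid(w * x + c)


def d_loss(x_real, x_fake, w, c):
    """Discriminator's classification error (binary cross-entropy)."""
    p_real = discriminator(x_real, w, c)
    p_fake = discriminator(x_fake, w, c)
    return -math.log(max(p_real, EPS)) - math.log(max(1.0 - p_fake, EPS))


def g_loss(x_fake, w, c):
    """Generator objective log(1 - D(G(z))); lower means D is fooled more."""
    return math.log(max(1.0 - discriminator(x_fake, w, c), EPS))


def discriminator_step(x_real, z, params, lr):
    """One gradient-descent step on d_loss; the generator (a, b) is frozen."""
    a, b, w, c = params
    x_fake = generator(z, a, b)
    p_real = discriminator(x_real, w, c)
    p_fake = discriminator(x_fake, w, c)
    grad_w = (p_real - 1.0) * x_real + p_fake * x_fake
    grad_c = (p_real - 1.0) + p_fake
    return (a, b, w - lr * grad_w, c - lr * grad_c)


def generator_step(z, params, lr):
    """One gradient-descent step on g_loss; the discriminator (w, c) is frozen."""
    a, b, w, c = params
    p_fake = discriminator(generator(z, a, b), w, c)
    grad_x = -p_fake * w  # derivative of g_loss with respect to the fake sample
    return (a - lr * grad_x * z, b - lr * grad_x, w, c)


def train(steps=200, lr=0.05, seed=0):
    """Alternate discriminator and generator updates on toy 1-D data."""
    rng = random.Random(seed)
    params = (1.0, 0.0, 0.1, 0.0)  # a, b, w, c (arbitrary starting values)
    for _ in range(steps):
        x_real = rng.gauss(4.0, 1.0)  # assumed "real" data distribution
        params = discriminator_step(x_real, rng.gauss(0.0, 1.0), params, lr)
        params = generator_step(rng.gauss(0.0, 1.0), params, lr)
    return params


a, b, w, c = train()
print(f"generator: a={a:.3f}, b={b:.3f}; discriminator: w={w:.3f}, c={c:.3f}")
```

Each step updates only one network while the other is held fixed, which is the alternation described above. Real image GANs replace the two toy functions with CNNs and typically rely on an automatic differentiation library and mini-batches rather than hand-written gradients.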

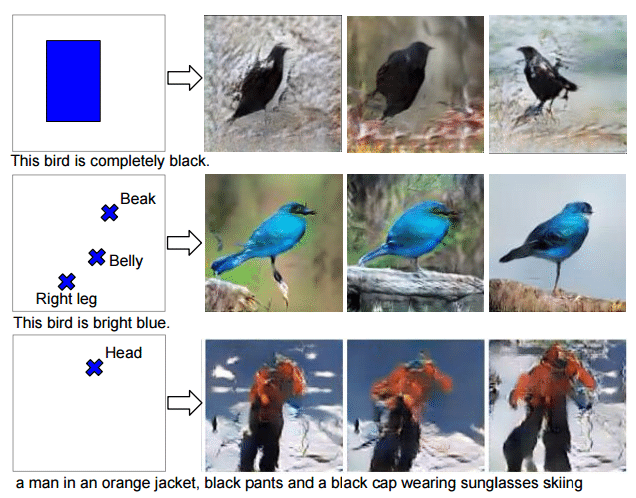

One of the most exciting applications of GANs in image synthesis is the generation of realistic images of objects, scenes, and people. For example, GANs have been used to generate realistic images of faces, animals, and even entire cities. Such images could be useful in the entertainment industry, for creating special effects in movies and video games, and in medicine, for producing synthetic images to train and test medical imaging algorithms.

Another application of GANs in image synthesis is image-to-image translation, in which a GAN translates an image from one domain to another, such as converting a daytime image to a nighttime image or changing the color of an object in an image. This could be useful in photography, for enhancing and editing images, and in autonomous driving, for creating synthetic images to train and test algorithms.

Limitations: Despite their capabilities, GANs have several limitations. Generated images are not always of high quality and may contain artifacts or other distortions. GANs can also be difficult to train and sensitive to the choice of hyperparameters. Finally, GAN-generated images can be put to malicious use, for example in deepfakes.

Conclusion: Generative adversarial networks (GANs) have proven to be a powerful tool in the field of machine learning, particularly in the generation of synthetic data. GANs have a wide range of applications and have been used in image synthesis, image-to-image translation, and super-resolution. However, GANs also have limitations, and it’s important to consider these limitations when using them. GANs are an active area of research, and it’s likely that new techniques and architectures will be developed in the future to overcome these limitations and expand the capabilities of GANs.
