Running Serverless Functions on Kubernetes

Peter Mbanugo


Serverless functions are modular pieces of code that respond to a variety of events. They're a cost-efficient way to implement microservices. Developers benefit from this paradigm by focusing on code and shipping a set of functions that are triggered in response to certain events. No server management is required, and you benefit from automated scaling, elastic load balancing, and the “pay-as-you-go” computing model.

Kubernetes, on the other hand, provides a set of primitives to run resilient distributed applications using modern container technology. It takes care of autoscaling and automatic failover for your application, and it provides deployment patterns and APIs that let you automate resource management and provision new workloads. Using Kubernetes involves some infrastructure management overhead, so putting serverless and Kubernetes in the same box may seem contradictory.

Hear me out. I come at this with a different perspective that may not be evident at the moment.

You could be in a situation where you're only allowed to run applications within a private data center, or you may be using Kubernetes but you'd like to harness the benefits of serverless. There are different open-source platforms, such as Knative and OpenFaaS, that use Kubernetes to abstract the infrastructure from the developer, allowing you to deploy and manage your applications using serverless architecture and patterns.

This article will show you how to run serverless functions using Knative and Kubernetes.

Introduction to Knative

Knative is a set of Kubernetes components that provides serverless capabilities. It provides an event-driven platform that can be used to deploy and run applications and services that can auto-scale based on demand, with out-of-the-box support for monitoring, automatic renewal of TLS certificates, and more.

Knative is used by a lot of companies. In fact, it powers the Google Cloud Run platform, IBM Cloud Code Engine, and Scaleway serverless functions.

The basic deployment unit for Knative is a container that can receive incoming traffic. You give it a container image to run, and Knative handles every other component needed to run and scale the application. Deploying and managing the containerized app is the job of Knative Serving, one of Knative's core components. Knative Serving manages the deployment and rollout of stateless services, along with their networking and autoscaling requirements.
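To make "Knative handles scaling" more concrete: Knative Serving's default autoscaler, the Knative Pod Autoscaler (KPA), scales a service based on how many requests each pod is handling concurrently, and by default it targets 100 concurrent requests per pod at 70% utilization. The sketch below is a simplified model of that calculation, not Knative's actual code. It ignores the stable and panic time windows the real autoscaler uses, and the minScale and maxScale values are hypothetical examples. With no traffic and a minimum of zero, the model scales to zero.

```javascript
// Simplified model of concurrency-based autoscaling in the spirit of
// Knative's KPA. target/utilization mirror Knative's documented defaults;
// minScale/maxScale are hypothetical bounds for this example.
function desiredPods(observedConcurrency, {
  target = 100,      // soft concurrency target per pod
  utilization = 0.7, // fraction of the target the autoscaler aims for
  minScale = 0,      // 0 allows scale-to-zero
  maxScale = 10,     // hypothetical upper bound
} = {}) {
  if (observedConcurrency <= 0) {
    // No in-flight requests: fall back to the minimum (possibly zero pods).
    return minScale;
  }
  const perPod = target * utilization; // effective requests per pod (70)
  const wanted = Math.ceil(observedConcurrency / perPod);
  // Clamp the result into the [minScale, maxScale] range.
  return Math.min(maxScale, Math.max(minScale, wanted));
}

console.log(desiredPods(0));   // 0 (scaled to zero)
console.log(desiredPods(50));  // 1
console.log(desiredPods(250)); // 4
```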

The other core component of Knative is Knative Eventing. It provides an abstract way to consume CloudEvents from internal and external sources without writing extra code for each event source. This article focuses on Knative Serving; you'll learn how to use and configure Knative Eventing for different use cases in a future article.

Development Set Up

In order to install Knative and deploy your application, you'll need a Kubernetes cluster and the following tools installed:

- Docker
- kubectl, the Kubernetes command-line tool
- kn CLI, the CLI for managing Knative applications and configuration

Installing Docker

To install Docker Desktop, go to https://docs.docker.com/get-docker and download the installer for your OS.

Installing kubectl

The Kubernetes command-line tool kubectl allows you to run commands against Kubernetes clusters. Docker Desktop installs kubectl for you, so if you followed the previous section on installing Docker Desktop, you should already have kubectl installed and you can skip this step. If you don't have kubectl installed, follow the instructions below to install it.

If you're on Linux or macOS, you can install kubectl using Homebrew by running the command brew install kubectl. Verify the installed version by running the command kubectl version --client.

If you're on Windows, run the command curl -LO https://dl.k8s.io/release/v1.21.0/bin/windows/amd64/kubectl.exe to download kubectl, and then add the binary to your PATH. Verify the installed version by running the command kubectl version --client. You should have version 1.20.x or 1.21.x, because later in this article you'll create a cluster running Kubernetes 1.21.x.

Installing kn CLI

kn CLI provides a quick and easy interface for creating Knative resources, such as services and event sources, without the need to create or modify YAML files directly. kn also simplifies otherwise complex procedures, such as autoscaling and traffic splitting.
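Traffic splitting means routing a percentage of a service's requests to each of its revisions (the immutable snapshots Knative Serving creates each time you change a service), for example to roll out a new version gradually. Knative requires the percentages in a service's traffic configuration to add up to 100. The sketch below models that routing rule for illustration only; it is not how Knative's networking layer is implemented, and the revision names are hypothetical.

```javascript
// Validate a traffic split and pick the revision that serves one request.
// `roll` is a number in [0, 100); passing it in keeps the example deterministic.
function pickRevision(traffic, roll = Math.random() * 100) {
  const total = traffic.reduce((sum, t) => sum + t.percent, 0);
  if (total !== 100) {
    throw new Error(`Traffic percentages must add up to 100, got ${total}`);
  }
  // Walk the cumulative percentages until the roll falls inside a bucket.
  let cumulative = 0;
  for (const { revision, percent } of traffic) {
    cumulative += percent;
    if (roll < cumulative) {
      return revision;
    }
  }
  // Only reached if roll is outside [0, 100); fall back to the last revision.
  return traffic[traffic.length - 1].revision;
}

// Hypothetical 90/10 canary split between two revisions.
const split = [
  { revision: 'sample-func-00001', percent: 90 },
  { revision: 'sample-func-00002', percent: 10 },
];

console.log(pickRevision(split, 42)); // sample-func-00001
console.log(pickRevision(split, 95)); // sample-func-00002
```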

To install kn on macOS or Linux, run the command brew install kn.

To install kn on Windows, download and install a stable binary from https://mirror.openshift.com/pub/openshift-v4/clients/serverless/latest. Afterward, add the binary to the system PATH.

Creating a Kubernetes Cluster

You need a Kubernetes cluster to run Knative. You can run a local cluster with Docker Desktop or kind, or use a managed Kubernetes service.

Create a Cluster with Docker Desktop

Docker Desktop includes a stand-alone Kubernetes server and client. This is a single-node cluster that runs within a Docker container on your local system and should be used only for local testing.

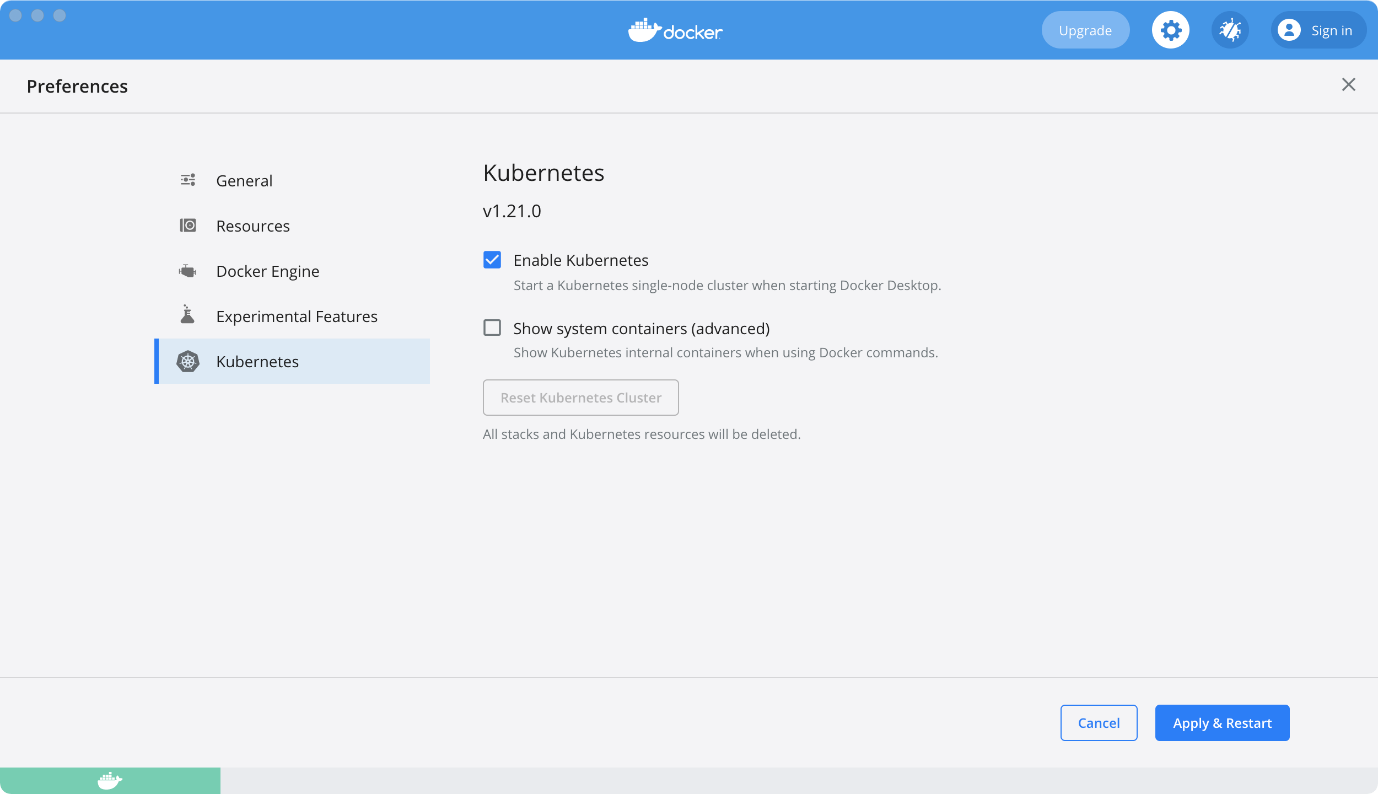

To enable Kubernetes support and install a standalone instance of Kubernetes running as a Docker container, go to Preferences > Kubernetes and then click Enable Kubernetes.

Click Apply & Restart to save the settings and then click Install to confirm, as shown in Figure 1. This instantiates the images required to run the Kubernetes server as containers.

Figure 1: Enable Kubernetes on Docker Desktop

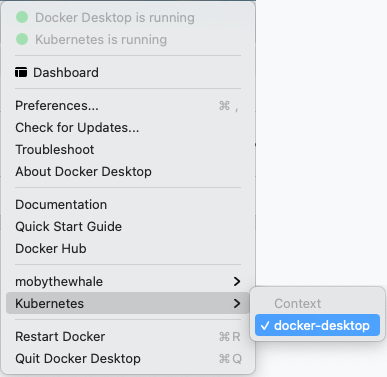

The status of Kubernetes shows in the Docker menu and the context points to docker-desktop, as shown in Figure 2.

Figure 2: kube context

You can also create a cluster using kind, a tool for running local Kubernetes clusters using Docker container nodes. If you have kind installed, you can run the following command to create your kind cluster and set the kubectl context.

```bash
curl -sL \
  https://raw.githubusercontent.com/csantanapr/knative-kind/master/01-kind.sh | sh
```

You can also use a managed Kubernetes service like DigitalOcean Kubernetes Service. To use it, you need a DigitalOcean account. If you don't have one, you can create one using my referral link, https://m.do.co/c/257c8259d8ef, which gives you $100 credit to try out different things on DigitalOcean.

You'll create a cluster using doctl, the official command-line interface for the DigitalOcean API. After you've created a DigitalOcean account, follow the instructions on docs.digitalocean.com/reference/doctl/how-to/ to install and configure doctl. (Note: You'll get an error trying to get to the docs if you don't have an account.)

After you've installed and configured doctl, open your command line application and run the command below in order to create your cluster on DigitalOcean.

```bash
doctl kubernetes cluster create serverless-function \
  --region fra1 --size s-2vcpu-4gb \
  --count 1
```

Wait a few minutes for your cluster to be ready. When it's done, you should have a single-node cluster named serverless-function in the Frankfurt (fra1) region. The node has two vCPUs and 4 GB of RAM. The command also sets your current kubectl context to the new cluster.

You can modify the values passed to the doctl kubernetes cluster create command. The --region flag sets the cluster region; run the command doctl kubernetes options regions to see the possible values. The --size flag sets the machine size for the nodes; run the command doctl kubernetes options sizes for a list of possible values. The --count flag specifies the number of nodes to create. For prototyping purposes, you created a single-node cluster with two vCPUs and 4 GB of RAM.

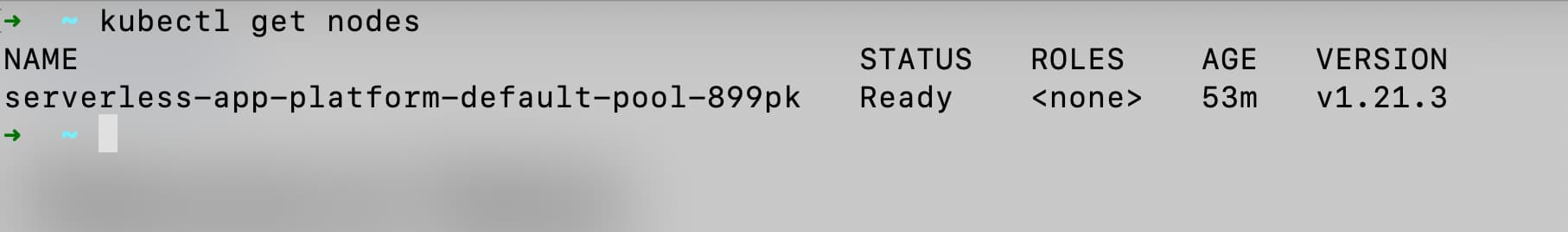

Check that you can connect to your cluster by using kubectl to list the nodes. Run the command kubectl get nodes. You should see one node in the list with a STATUS of Ready, as shown in Figure 3.

Figure 3: kubectl get nodes

Install Knative Serving

Knative Serving manages service deployments, revisions, networking, and scaling. The Knative Serving component exposes your service via an HTTP URL and has safe defaults for its configurations.

For kind users, follow the instructions below to install Knative Serving.

Run the following command to install Knative Serving:

```bash
curl -sL https://raw.githubusercontent.com/csantanapr/knative-kind/master/02-serving.sh | sh
```

When that's done, run the following command to install and configure Kourier:

```bash
curl -sL https://raw.githubusercontent.com/csantanapr/knative-kind/master/02-kourier.sh | sh
```

For Docker Desktop users, run the following command:

```bash
curl -sL https://raw.githubusercontent.com/csantanapr/knative-docker-desktop/main/demo.sh | sh
```

Follow the instructions below to install Knative in your DigitalOcean cluster. The same instructions will also work if you use Amazon EKS or Azure Kubernetes Service.

- Run the following command to specify the version of Knative to install.

```bash
export KNATIVE_VERSION="0.26.0"
```

- Run the following commands to install Knative Serving in namespace knative-serving.

```bash
kubectl apply -f \
  https://github.com/knative/serving/releases/download/v$KNATIVE_VERSION/serving-crds.yaml

kubectl wait --for=condition=Established --all crd

kubectl apply -f \
  https://github.com/knative/serving/releases/download/v$KNATIVE_VERSION/serving-core.yaml

kubectl wait pod --timeout=-1s \
  --for=condition=Ready -l '!job-name' \
  -n knative-serving > /dev/null
```

- Install Kourier in namespace kourier-system.

```bash
kubectl apply -f \
  https://github.com/knative/net-kourier/releases/download/v0.24.0/kourier.yaml

kubectl wait pod \
  --timeout=-1s \
  --for=condition=Ready \
  -l '!job-name' -n kourier-system

kubectl wait pod \
  --timeout=-1s \
  --for=condition=Ready \
  -l '!job-name' -n knative-serving
```

- Run the following command to configure Knative to use Kourier.

```bash
kubectl patch configmap/config-network \
  --namespace knative-serving \
  --type merge \
  --patch '{"data":{"ingress.class":"kourier.ingress.networking.knative.dev"}}'
```

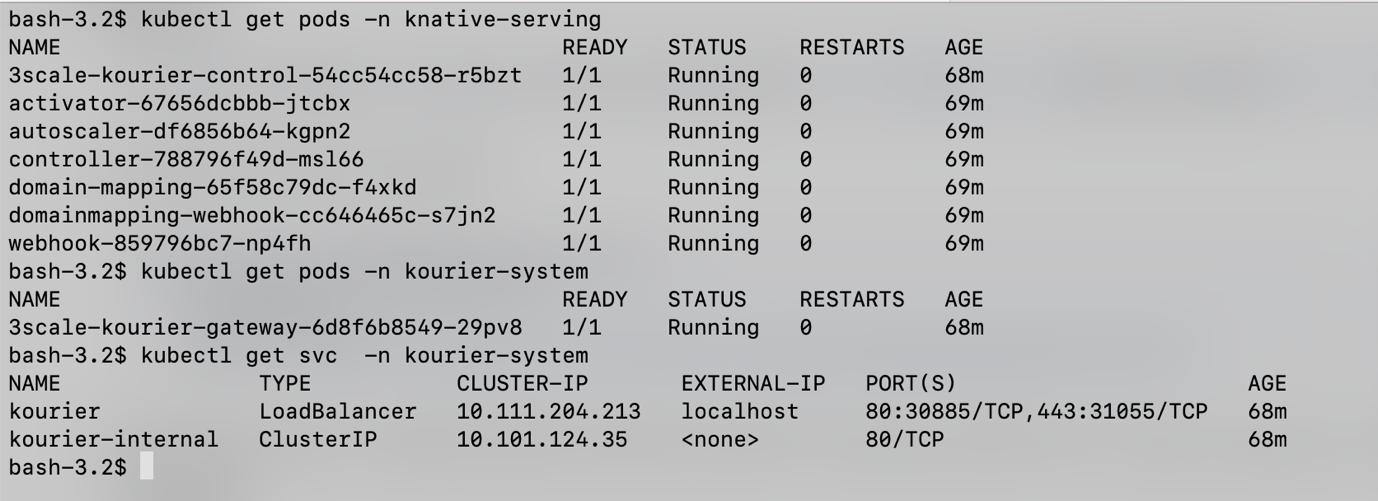

- Verify that Knative is installed properly. All pods should be in Running state and the kourier-ingress service configured, as shown in Figure 4.

```bash
kubectl get pods -n knative-serving
kubectl get pods -n kourier-system
kubectl get svc -n kourier-system
```

- Configure DNS for Knative Serving. You'll use a wildcard DNS service for this exercise. Knative provides a Kubernetes Job called default-domain that only works if the cluster's LoadBalancer Service exposes an IPv4 address or hostname. Run the command below to configure Knative Serving to use sslip.io as the default DNS suffix.

```bash
kubectl apply -f \
  https://github.com/knative/serving/releases/download/v$KNATIVE_VERSION/serving-default-domain.yaml
```

If you want to use your own domain, you'll need to configure your DNS provider. See https://knative.dev/docs/admin/install/serving/install-serving-with-yaml/#configure-dns for instructions on how to do that.

Figure 4: Verify installation
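With sslip.io as the suffix, Knative's default domain template gives each service a hostname of the form <service>.<namespace>.<ip>.sslip.io, and sslip.io's DNS servers answer with the IP address embedded in that name, so no DNS records need to be configured. The sketch below mimics both halves for illustration only. The service name, namespace, and IP (an address from the documentation range) are placeholders, and the parser handles only the dotted IPv4 form, not sslip.io's dash-separated or IPv6 variants.

```javascript
// Compose the hostname Knative Serving would assign with the sslip.io suffix,
// assuming the default {{.Name}}.{{.Namespace}}.{{.Domain}} template.
function knativeHost(service, namespace, loadBalancerIp) {
  return `${service}.${namespace}.${loadBalancerIp}.sslip.io`;
}

// Recover the IPv4 address that sslip.io would resolve a hostname to.
// Handles only the dotted form, e.g. "anything.203.0.113.10.sslip.io".
function sslipResolve(hostname) {
  const match = hostname.match(/(\d{1,3}(?:\.\d{1,3}){3})\.sslip\.io$/);
  if (!match) {
    return null;
  }
  // Reject octets that are not valid IPv4 values.
  const octets = match[1].split('.').map(Number);
  return octets.every((n) => n <= 255) ? match[1] : null;
}

const host = knativeHost('sample-func', 'default', '203.0.113.10');
console.log(host);               // sample-func.default.203.0.113.10.sslip.io
console.log(sslipResolve(host)); // 203.0.113.10
```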

Serverless Functions on Kubernetes

func is a plugin for the kn CLI that enables the development and deployment of platform-agnostic functions as Knative services on Kubernetes. It consists of function templates and runtimes, and it uses Cloud Native Buildpacks to build and publish OCI images of the functions.

To use the CLI, install it using Homebrew by running the command brew tap knative-sandbox/kn-plugins && brew install func. If you don't use Homebrew, you can download a pre-built binary from https://github.com/knative-sandbox/kn-plugin-func/releases/tag/v0.18.0, then unzip and add the binary to your PATH.

Functions can be written in Go, Java, JavaScript, Python, and Rust. You're going to create and deploy a serverless function written in JavaScript.

Create a Function Project

To create a new JavaScript function, open your command-line application and run the command kn func create sample-func --runtime node. This creates a new directory named sample-func and initializes a Node.js function project in it. Other available runtimes are Go, Python, Quarkus, Rust, Spring Boot, and TypeScript.

The func.yaml file contains configuration information for the function, which is used when building and deploying it. You can specify the buildpack to use, environment variables, and options to tune autoscaling for the Knative Service. Open the file and update the envs field with the value below:

```yaml
envs:
- name: TARGET
  value: Web
```

The index.js file contains the logic for the function. You can add more files to the project, or install additional dependencies, but your project must include an index.js file that exports a single default function. Let's explore the content of this file.

The index.js file exports the invoke(context) function, which takes a single parameter named context. The context object holds the HTTP request data, including:

- httpVersion: The HTTP version
- method: The HTTP request method (only GET or POST supported)
- query: The query parameters
- body: Contains the request body for a POST request
- headers: The HTTP headers sent with the request

The invoke function calls the handlePost function if it's a POST request, or the handleGet function if it's a GET request. The function can return void or any JavaScript type. When a function returns void and no error is thrown, the caller receives a 204 No Content response. If you return a value, it's serialized and returned to the caller.
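The dispatch described above can be sketched in a few lines. This is a simplified illustration, not the generated template verbatim (its exact code may differ between func versions): the handler bodies are placeholders, throwing for unsupported methods is an illustrative choice, and toResponse is a hypothetical helper that only models the documented response behavior; it is not part of the func runtime.

```javascript
// Placeholder handlers standing in for the template's own implementations.
function handleGet(context) {
  return { query: context.query };
}

function handlePost(context) {
  return { body: context.body };
}

// Dispatch on the HTTP method; only GET and POST are handled.
function invoke(context) {
  if (context.method === 'POST') {
    return handlePost(context);
  }
  if (context.method === 'GET') {
    return handleGet(context);
  }
  // Illustrative choice for this sketch: reject anything else.
  throw new Error(`Method ${context.method} not supported`);
}

// Hypothetical helper modelling the documented behavior:
// no return value -> 204 No Content, otherwise serialize the value.
function toResponse(result) {
  if (result === undefined) {
    return { status: 204, body: '' };
  }
  return { status: 200, body: JSON.stringify(result) };
}

console.log(toResponse(invoke({ method: 'GET', query: { name: 'kn' } })));
console.log(toResponse(undefined));
```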

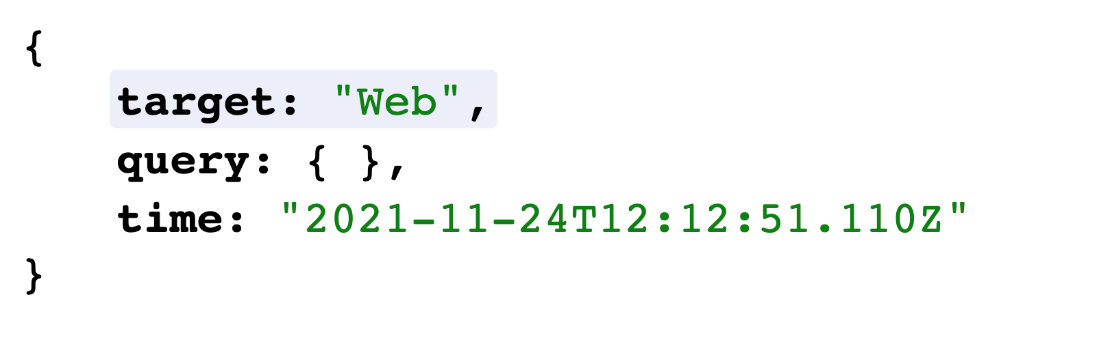

Modify the handleGet function to return the value of the TARGET environment variable, the query parameters, and the current date. Open index.js and update the handleGet function with the function definition below:

```javascript
function handleGet(context) {
  return {
    target: process.env.TARGET,
    query: context.query,
    time: new Date().toJSON(),
  };
}
```
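Because handleGet is a plain function, you can sanity-check it before deploying by calling it with a hand-built context object. The snippet below is a minimal sketch: fakeContext is a hypothetical stand-in containing only the fields handleGet reads, and setting process.env.TARGET by hand simulates the value that func.yaml provides once the function is deployed.

```javascript
// Same handleGet as above, repeated so this snippet runs on its own.
function handleGet(context) {
  return {
    target: process.env.TARGET,
    query: context.query,
    time: new Date().toJSON(),
  };
}

// Simulate the TARGET value that func.yaml injects when deployed.
process.env.TARGET = 'Web';

// Hypothetical stand-in for the context object the runtime passes in.
const fakeContext = { method: 'GET', query: { name: 'Peter' } };

const result = handleGet(fakeContext);
console.log(result.target);      // Web
console.log(result.query.name);  // Peter
console.log(typeof result.time); // string
```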

To deploy your function to your Kubernetes cluster, use the deploy command. Open the terminal and navigate to the function's directory. Run the command kn func deploy to deploy the function. Because this is the first time you're deploying the function, you'll be asked for the container registry info for where to publish the image. Enter your registry's information (e.g., docker.io/<username>) and press ENTER to continue.

The function will be built and pushed to the registry. After that, it'll deploy the image to Knative and you'll get a URL for the deployed function. Open the URL in a browser to see the returned object. The response you get should be similar to what you see in Figure 5.

Figure 5: Function invocation response
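Besides opening the URL in a browser, you can call the function from code. The sketch below assumes Node.js 18 or later, for the built-in fetch and URL globals. The file name call-func.js is a placeholder, and the function URL is passed on the command line: use the one printed by kn func deploy.

```javascript
// Append query parameters to the function's base URL.
function buildFunctionUrl(baseUrl, params) {
  const url = new URL(baseUrl);
  for (const [key, value] of Object.entries(params)) {
    url.searchParams.set(key, value);
  }
  return url.toString();
}

// Call the deployed function with GET and parse the JSON it returns.
async function callFunction(baseUrl, params = {}) {
  const response = await fetch(buildFunctionUrl(baseUrl, params));
  if (!response.ok) {
    throw new Error(`Function returned HTTP ${response.status}`);
  }
  return response.json();
}

// Usage: node call-func.js <function-url>
const functionUrl = process.argv[2];
if (functionUrl) {
  callFunction(functionUrl, { name: 'Peter' })
    .then((data) => console.log(data))
    .catch((err) => {
      console.error(err.message);
      process.exitCode = 1;
    });
}
```

If the function is the handleGet version above, the printed object should contain target, query, and time fields.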

You can also run the function locally using the command kn func run.

Other Useful Commands

You're now familiar with creating and deploying functions to Knative using the create and deploy commands. There are other useful commands that can come in handy when working with functions. You can see the list of available commands by running kn func --help.

The deploy command builds and deploys the application. If you want to build an image without deploying it, you can use the build command (i.e., kn func build). You can pass it the flag -i <image> to specify the image, or -r <registry> to specify the registry information.
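The difference between the two flags is that -i names the full image, while -r supplies only the registry and leaves func to work out the rest of the name. The sketch below shows one plausible derivation, assuming a <registry>/<function-name>:latest convention; treat that convention as an assumption and check the image name kn func build reports for your version. The registry docker.io/alice is a placeholder.

```javascript
// Hypothetical helper: derive an image reference from a registry and a
// function name, assuming a <registry>/<name>:<tag> naming convention.
function deriveImage(registry, functionName, tag = 'latest') {
  const cleanRegistry = registry.replace(/\/+$/, ''); // drop trailing slashes
  return `${cleanRegistry}/${functionName}:${tag}`;
}

console.log(deriveImage('docker.io/alice', 'sample-func'));
// docker.io/alice/sample-func:latest
```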

The kn func info command displays information about a function (e.g., its URL). The kn func list command, on the other hand, lists the details of all the functions you have deployed.

To remove a function, you use the kn func delete <name> command, replacing <name> with the name of the function to delete.

You can see the configured environment variables using the command kn func config envs. If you want to add an environment variable, you can use the kn func config envs add command. It brings up an interactive prompt to add environment variables to the function configuration. You can remove environment variables as well using kn func config envs remove.

What's Next?

Serverless functions are pieces of code that take an HTTP request object and provide a response. With serverless functions, your application is composed of modular functions that respond to events and can be scaled independently. In this article, you learned about Knative and how to run serverless functions on Kubernetes using Knative and the func CLI. You can learn more about Knative on knative.dev, and a cheat sheet for the kn CLI is available on cheatsheet.pmbanugo.me/knative-serving.

A Good Book to Read

If you're interested in serverless, Kubernetes, and Knative, you should check out my book "How to build a serverless application platform on Kubernetes." You'll learn how to build a serverless platform that runs on Kubernetes using GitHub, Tekton, Knative, Cloud Native Buildpacks, and other interesting technologies. You can find it at https://books.pmbanugo.me/serverless-app-platform.

Article originally published on Code Magazine


Written by

Peter Mbanugo


Peter Mbanugo is an enthusiastic software engineering professional with experience in the analysis, design, development, testing, and implementation of various internet-based applications. He is a proactive leader and OSS contributor with excellent interpersonal and motivational abilities for building collaborative relationships among high-functioning teams. He has written articles, tutorials, and a self-published book, and he's a contributor to Knative, vercel/micro, and Hoodie.