Azure 500 the TL;DR study guide

Roberto

Table of contents

- Following is a set of questions on the Cloud Guru AZ-500 guide

- There are four fundamental Azure roles:

- Differences between Azure roles and Azure AD roles

- Azure AD identities

- Conditional access conditions

- Shared responsibility model

- Azure hierarchy of systems

- Azure role-based access control (RBAC)

- Defense in depth

- Distributed Denial of Service (DDoS) Protection

- Configure VPN forced tunneling

- User Defined Routes and Network Virtual Appliances

- Deploy a Network Security Group

- Why use a service endpoint?

- Azure application gateway

- Azure front door

- ExpressRoute

- Endpoint protection

- Privileged access

- Virtual machine templates

- Disk encryption

- Azure Kubernetes Service architecture

- Azure Monitor

- Defender for Cloud

- Security center policies

- Defender for Cloud

- Log Analytics

- Microsoft identity platform

- Register an application with App Registration

- Microsoft Graph two types of permissions

- Managed identities

- Data sovereignty

- Shared access signatures

- Azure AD storage authentication

- SQL database authentication

- database auditing

- Key Vault

- Key Vault access

- key rotation

- Key Principles of Shift Left

- MCRA Notes (Microsoft Cybersecurity Reference Architectures)

- Common Vulnerability Scoring System (CVSS)

- Key Components of CVSS

- Compliance design areas

- The enterprise access model

- Threat Modeling and the STRIDE Model

- Defender

- Fuzz Testing is a Software Testing

- What Are Dangling DNS Entries?

- The Microsoft Cloud Security Benchmark (MCSB)

- 1. Identity and Access Management (IAM)

- 2. Data Protection

- 3. Network Security

- 4. Threat Protection

- 5. Security Operations

- 6. Application Security

- 7. Endpoint Security

- 8. Governance and Compliance

- 9. Incident Response

- 10. Backup and Recovery

- 11. Secure Configuration

- 12. Supply Chain Security

- 13. Monitoring and Logging

- 14. Encryption and Key Management

- 15. Zero Trust Architecture

- Modern security practices assume that the adversary has breached the network perimeter.

- 1. Zero Trust Architecture

- 2. Network Segmentation

- 3. Endpoint Detection and Response (EDR)

- 4. Continuous Monitoring and Threat Hunting

- 5. Identity and Access Management (IAM)

- 6. Encryption and Data Protection

- 7. Incident Response and Recovery

- 8. Assume Breach in Design

- 9. Threat Intelligence Integration

- 10. User and Entity Behavior Analytics (UEBA)

- 11. Backup and Resilience

- 12. Security Awareness Training

- 13. Cloud-Specific Security Measures

- 14. Deception Technology

- 15. Regular Security Assessments

- What's the difference between layer 3 and layer 4 osi model?

- In azure The flow record allows a network security group to be stateful. what does this mean?

- References

Following is a set of questions on the Cloud Guru AZ-500 guide

Which Azure AD External Identities feature lets you invite guest users to collaborate with your organization?

- Azure AD B2B

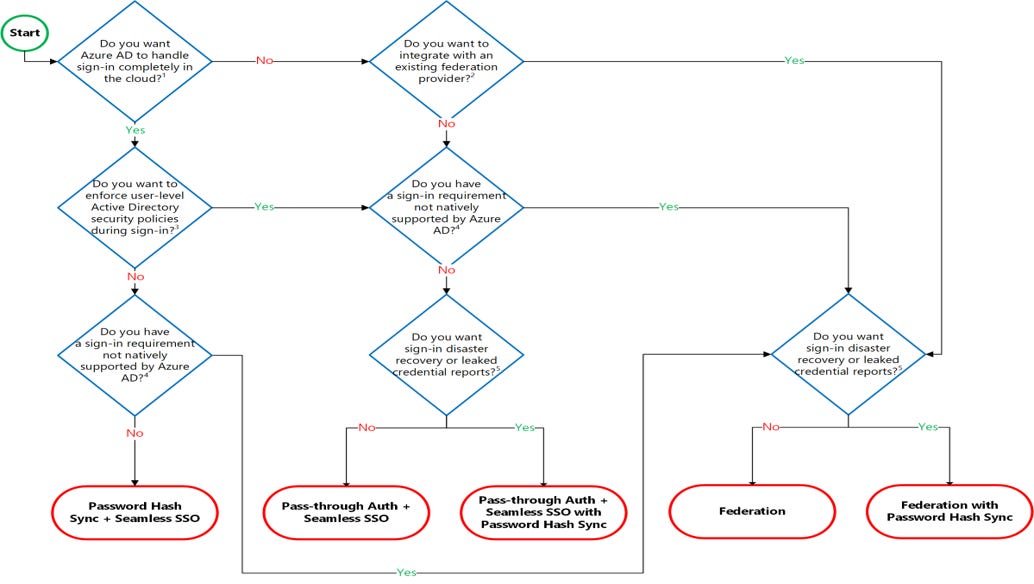

What are the Azure AD Connect hybrid identity authentication solutions?

Federation

Pass-through authentication

Password hash sync

How can you allow external users to sign up for specific applications themselves?

Configure user flows.

Enable guest self-service sign-up via user flows.

Which Azure Active Directory authentication options can enforce on-premises account policies at sign-in?

Pass-through authentication

Federation

What can be used to synchronize on-premises Active Directory users to Azure Active Directory?

- Azure AD Connect

Which Azure AD feature can be used to provide access for consumers using their preferred social, enterprise, or local account identities?

- Azure AD B2C

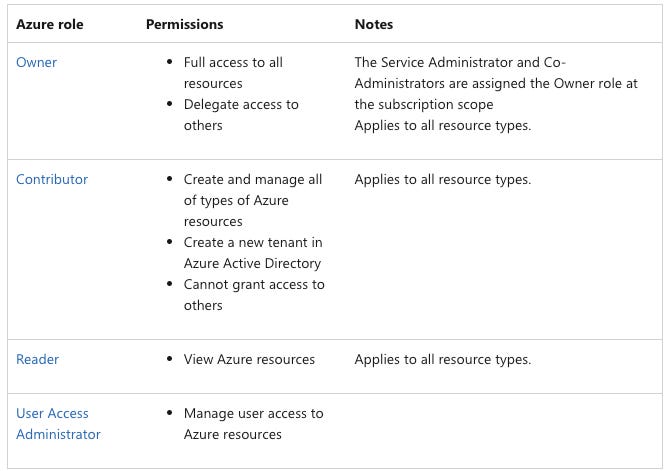

Contributor vs Owner

Contributor cannot modify permissions

Which of the following are examples of Azure AD permissions?

Create a security group

Create an administrative unit

Azure RBAC custom role definitions can include which types of permissions?

notActions: defines what actions cannot be performed at the management layer in a custom role.

notDataActions: defines what actions cannot be performed at the data layer in a custom role.

actions: defines what actions can be performed at the management layer in a custom role.

dataActions: defines what actions can be performed at the data layer in a custom role.

What is the difference between Owner and Contributor Azure roles?

The Contributor role has full access to a resource but cannot modify permissions.

An Owner role has full access to a resource and can modify permissions.

Custom roles are defined in which format?

- JSON
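As an illustration of the four permission types in a JSON role definition, here is a minimal sketch that builds one in Python and serializes it. The role name, description, and all-zero subscription ID are placeholders invented for this example; the property names follow the layout commonly used for Azure custom role files, but check the current documentation before relying on them.

```python
import json

# Hypothetical custom role; the name, description, and subscription ID are
# placeholders chosen for illustration only.
custom_role = {
    "Name": "Virtual Machine Operator (example)",
    "IsCustom": True,
    "Description": "Can read, start, and restart virtual machines.",
    # Management-layer operations the role allows.
    "Actions": [
        "Microsoft.Compute/virtualMachines/read",
        "Microsoft.Compute/virtualMachines/start/action",
        "Microsoft.Compute/virtualMachines/restart/action",
    ],
    # Management-layer operations subtracted from Actions.
    "NotActions": [],
    # Data-layer operations the role allows.
    "DataActions": [],
    # Data-layer operations subtracted from DataActions.
    "NotDataActions": [],
    # Scopes at which the role can be assigned.
    "AssignableScopes": [
        "/subscriptions/00000000-0000-0000-0000-000000000000"
    ],
}

role_json = json.dumps(custom_role, indent=2)
print(role_json)
```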

Which components make up an Azure RBAC assignment?

Security principal

Scope

Role definition

What is a valid requirement to create an Azure AD custom role?

The Global Administrator role

The Privileged Role Administrator role

An Azure AD Premium P1 or P2 license

Note: The User Access Administrator role does not permit the creation of Azure AD custom roles. It is an Azure RBAC role, not an Azure AD Role.

Azure RBAC permission assignments can be scoped to which Azure management scopes?

Management groups

Subscriptions

Resource groups

Resources

What is the principle of least privilege?

- When a user is given the minimum levels of access required to perform their job functions.

Which of the following is an example of an Azure permission (sometimes referred to as Azure RBAC)?

Create a virtual machine

Modify an Azure web app

Azure AD RBAC role assignments can be scoped to _____?

A tenant

Azure AD resources (e.g., applications)

Administrative units

There are four fundamental Azure roles:

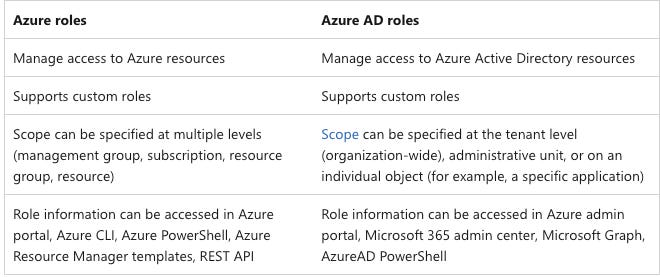

Differences between Azure roles and Azure AD roles

Azure roles control permissions to manage Azure resources, while Azure AD roles control permissions to manage Azure Active Directory resources

18/10/22

FLASH CARDS

The intention is to provide long-term knowledge that outlasts an exam, which may one day expire and become irrelevant. I'll write about the fundamentals that won't change over the long term.

Azure AD identities

Application Object:

Service principal: Think of it as a service account in Windows Active Directory

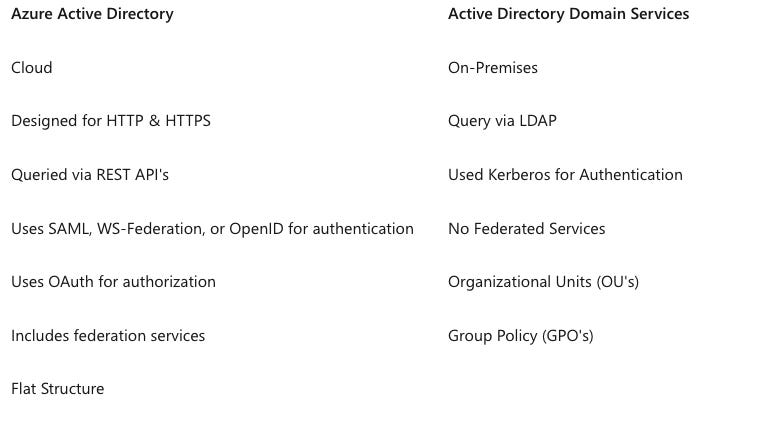

Difference between Azure AD and on-premises Active Directory

Azure AD Groups, 2 types

Security Groups: Are used to give group members access to applications, resources and assign licenses. Group members can be users, devices, service principals, and other groups.

Microsoft 365 groups: Used for collaboration, giving members access to a shared mailbox, calendar, files, SharePoint site, and so on. Group members can only be users.

Note on membership: it can be Assigned or Dynamic. Dynamic membership requires at least an Azure AD Premium P1 license.

Multi-factor Authentication states

Disabled→Enabled→Enforced. Only administrators may move users between states

Disabled. User not enrolled for MFA

Enabled. User is enrolled in Azure AD Multi-Factor Authentication, but can still use their password for legacy authentication.

Enforced. The user is enrolled for MFA. Users who complete registration while in the Enabled state are automatically moved to the Enforced state.
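The three states can be pictured as a small state machine. The sketch below is only a model of the transitions described above; the function names are made up for illustration and do not correspond to any Azure API.

```python
from enum import Enum


class MfaState(Enum):
    DISABLED = "Disabled"
    ENABLED = "Enabled"
    ENFORCED = "Enforced"


def enroll(state):
    """An administrator enrolls the user: Disabled -> Enabled."""
    return MfaState.ENABLED if state is MfaState.DISABLED else state


def complete_registration(state):
    """The user finishes registration: Enabled -> Enforced."""
    return MfaState.ENFORCED if state is MfaState.ENABLED else state


state = MfaState.DISABLED
state = enroll(state)
state = complete_registration(state)
print(state.value)  # Enforced
```

Registration has no effect on a user who was never enrolled, which matches the Disabled→Enabled→Enforced order.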

Authentication Alternatives

Do you need on-premises Active Directory integration? No? then you would use Cloud-Only authentication.

If you do need on-premises Active Directory integration, then you would use Password Hash Sync + Seamless SSO.

If you do need on-premises Active Directory integration, but you do not need to use cloud authentication, password protection, and your authentication requirements are natively supported by Azure AD, then you would use Pass-through Authentication + Seamless SSO.

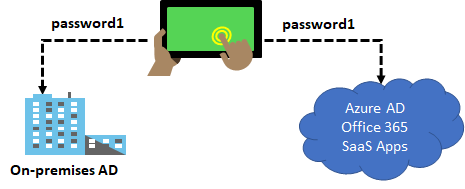

Password Hash Sync

It is important to understand that this is same sign-in, not single sign-on. The user still authenticates against two separate directory services, albeit with the same user name and password.

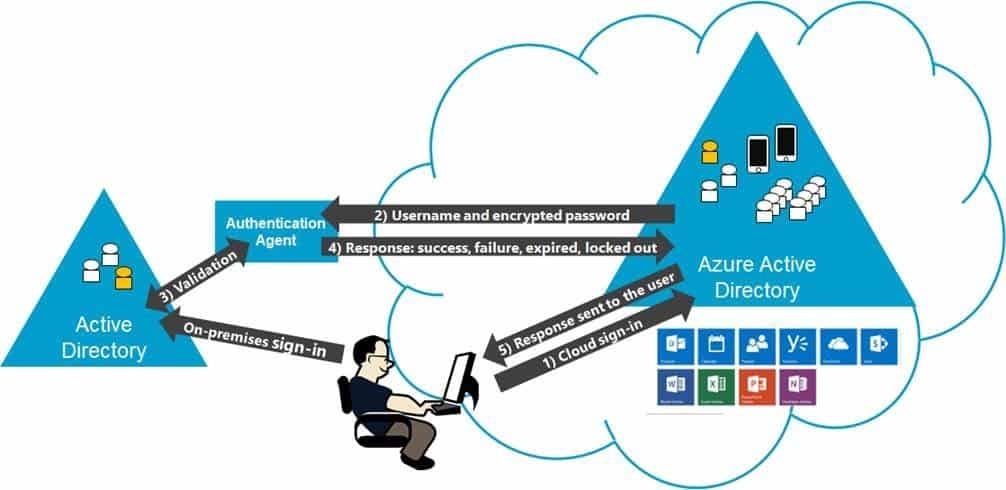

Pass-through Authentication

Pass-through authentication (PTA) is a feature of Azure AD Connect.

The password does not need to be present in Azure AD in any form.

The agent connects outbound to Azure AD and listens for authentication requests, so it only requires outbound ports to be open.

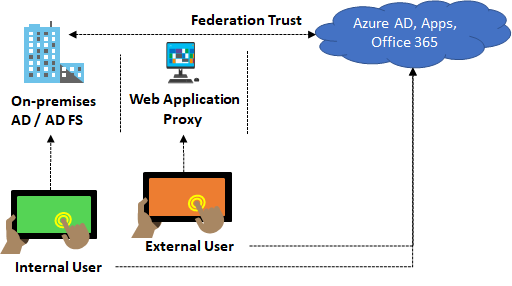

Federation with Azure AD

This sign-in method ensures that all user authentication occurs on-premises.

Authentication Decision tree

Important authentication considerations:

Azure AD can handle sign-in for users without relying on on-premises components to verify passwords.

Azure AD can hand off user sign-in to a trusted authentication provider such as ADFS.

To enforce on-premises security policies such as account expired, disabled account, password expired, account locked out, and sign-in hours on each user sign-in, Azure AD requires some on-premises components.

Sign-in features not natively supported by Azure AD:

Sign-in using smartcards or certificates.

Sign-in using on-premises MFA Server.

Sign-in using third-party authentication solution

Multi-site on-premises authentication solution.

Organizations can fail over to Password Hash Sync if their primary sign-in method fails and it was configured before the failure event.

Azure AD identity protection

Identity Protection is a tool that allows organizations to accomplish three key tasks:

Automate the detection and remediation of identity-based risks.

Investigate risks using data in the portal.

Export risk detection data to third-party utilities for further analysis.

Multifactor authentication in Azure

The security of MFA two-step verification lies in its layered approach. Authentication methods include:

Something you know (typically a password)

Something you have (a trusted device that is not easily duplicated, like a phone)

Something you are (biometrics)

Note:

The Trusted IPs bypass works only from inside of the company intranet.

Enable multifactor authentication

Remember you can only enable MFA for organizational accounts stored in Active Directory. These are also called work or school accounts.

All users start out Disabled.

When you enroll users in Azure AD Multi-Factor Authentication, their state changes to Enabled.

When enabled users sign in and complete the registration process, their state changes to Enforced.

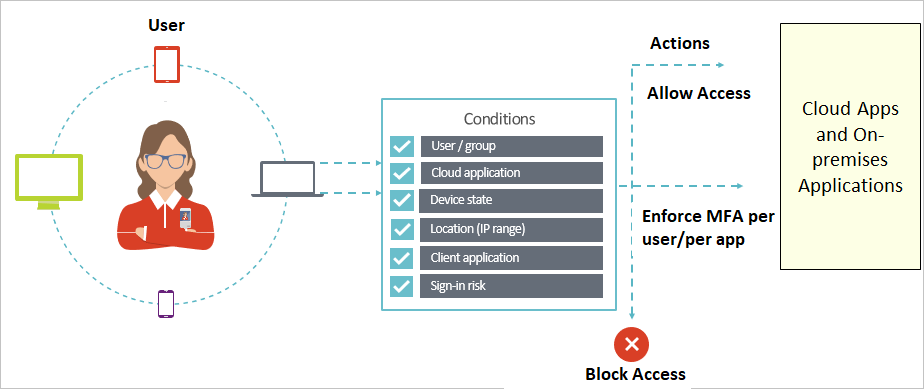

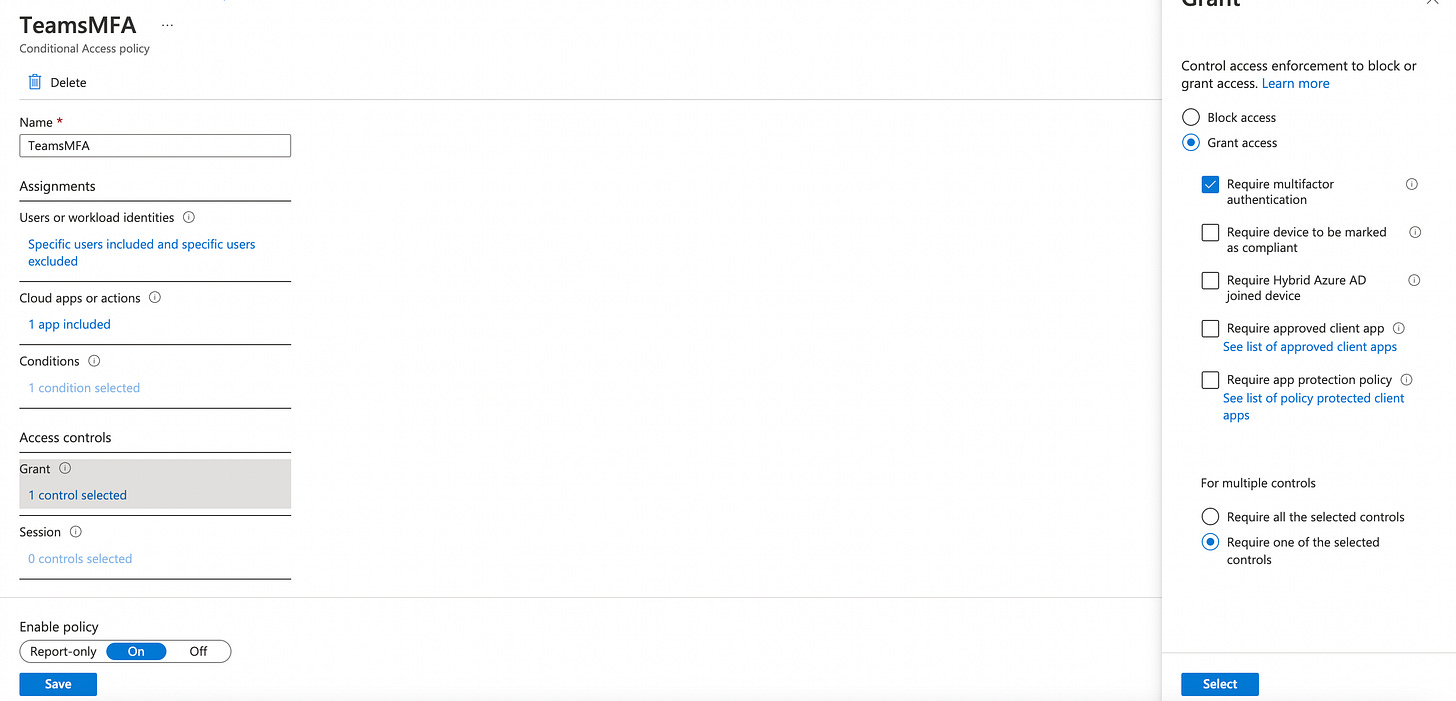

Conditional access conditions

With access controls, you can either Block Access altogether or Grant Access with more requirements by selecting the desired control:

Require MFA from Azure AD or an on-premises MFA (combined with AD FS).

Grant access to only trusted devices.

Require a domain-joined device.

Require mobile devices to use Intune app protection policies.

In this example, MFA is always required for Teams for a specific user.

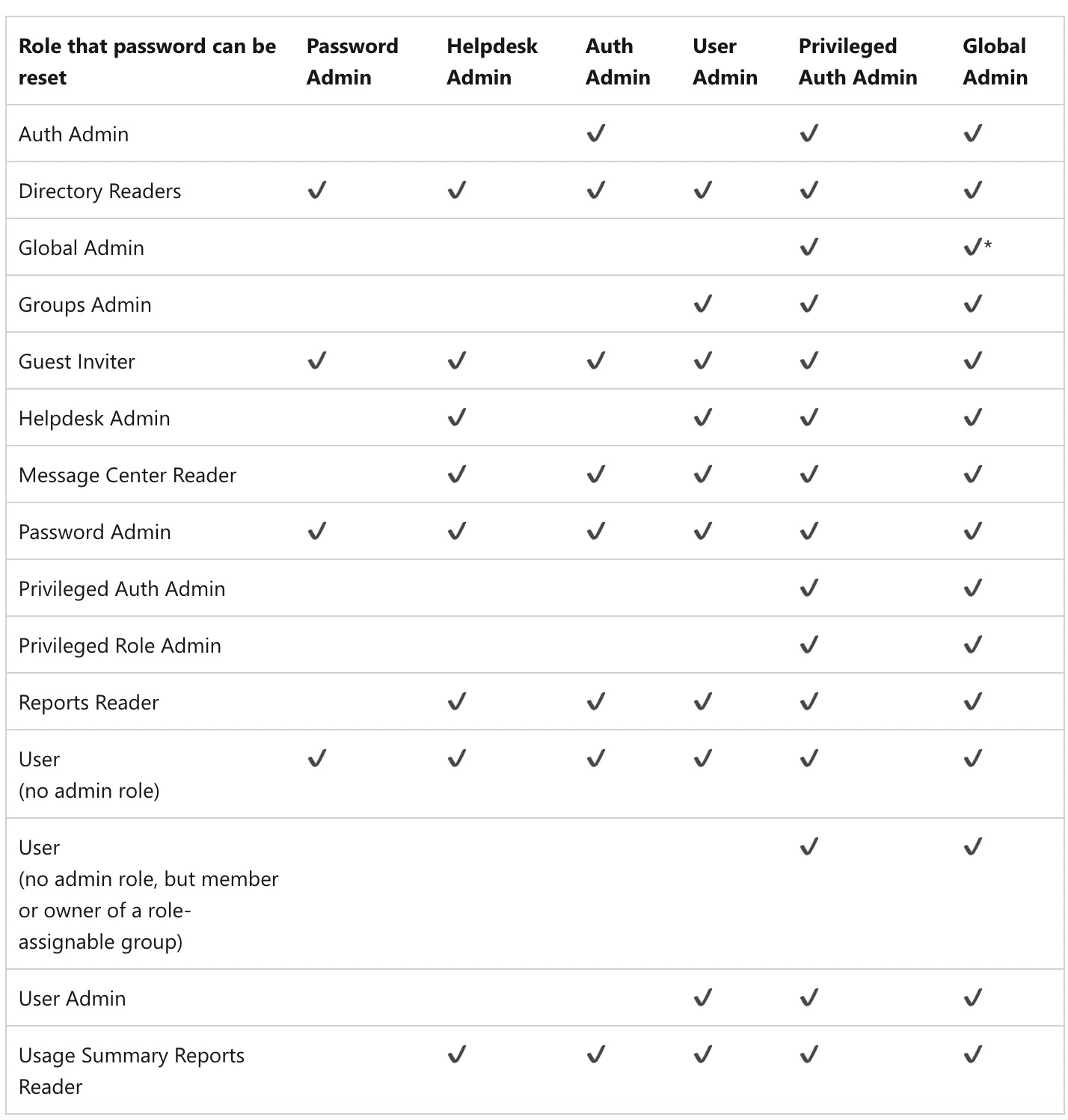
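To make the Block/Grant logic concrete, here is a toy evaluator written as a sketch. It is not how Azure AD implements Conditional Access; the `Policy` and `SignIn` classes, the control name `"mfa"`, and the user address are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    users: set                 # users the policy applies to
    apps: set                  # cloud apps the policy applies to
    block: bool = False        # Block Access altogether
    required_controls: set = field(default_factory=set)  # e.g. {"mfa"}


@dataclass
class SignIn:
    user: str
    app: str
    satisfied_controls: set = field(default_factory=set)


def evaluate(policies, sign_in):
    """Return ('block' | 'challenge' | 'grant', outstanding controls)."""
    missing = set()
    for policy in policies:
        if sign_in.user in policy.users and sign_in.app in policy.apps:
            if policy.block:
                return "block", set()
            missing |= policy.required_controls - sign_in.satisfied_controls
    return ("challenge", missing) if missing else ("grant", set())


# Always require MFA for Teams for one specific user.
teams_mfa = Policy(users={"alice@contoso.com"}, apps={"Teams"},
                   required_controls={"mfa"})

print(evaluate([teams_mfa], SignIn("alice@contoso.com", "Teams")))
print(evaluate([teams_mfa], SignIn("alice@contoso.com", "Teams", {"mfa"})))
```

The first sign-in is challenged for MFA; the second, which has already satisfied MFA, is granted.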

Who can reset passwords? See table below:

Zero Trust

Instead of assuming everything behind the corporate firewall is safe, the Zero Trust model assumes breach and verifies each request as though it originates from an open network.

Regardless of where the request originates or what resource it accesses, Zero Trust teaches us to never trust, always verify.

Every access request is fully authenticated, authorized, and encrypted before granting access.

No longer is trust assumed based on the location inside an organization's perimeter.

A Zero Trust model requires:

Signals to inform decisions

Policies to make access decisions

Enforcement capabilities to implement those decisions effectively.

Note: Identity is the control plane. If you can’t determine who the user is, you can’t establish a trust relationship for other transactions.

Guiding principles of Zero Trust

Verify explicitly. Always authenticate and authorize based on all available data points.

Use least privileged access. Limit user access with Just In Time and Just Enough Access (JIT/JEA).

Assume breach. Verify all sessions are encrypted end to end. Use analytics to get visibility, drive threat detection, and improve defenses.

The National Institute of Standards and Technology (NIST) publishes its Zero Trust Architecture guidance as NIST SP 800-207, available as a free PDF.

Some key tenets of the Zero Trust Architecture:

The entire enterprise private network is not considered an implicit trust zone.

No resource is inherently trusted.

All communication is secured regardless of network location.

Trust in the requester is evaluated before the access is granted.

Access should also be granted with the least privileges needed to complete the task

Tenets of Zero Trust

All data sources and computing services are considered resources.

All communication is secured regardless of network location.

Access to individual enterprise resources is granted on a per-session basis.

Access to resources is determined by a dynamic policy.

The enterprise monitors and measures the integrity and security posture of all owned and associated assets.

All resource authentication and authorization are dynamic and strictly enforced before access is allowed.

The enterprise collects as much information as possible about the current state of assets, network infrastructure and communications and uses it to improve its security posture.

Zero Trust view of Networks

The enterprise network is not considered an implicit trust zone.

Devices on the network may not be owned or configurable by the enterprise.

No resource is inherently trusted.

Not all enterprise resources are on enterprise-owned infrastructure.

Migrating to a Zero Trust Architecture

It is unlikely that any significant enterprise can migrate to zero trust in a single technology refresh cycle. There may be an indefinite period when ZTA workflows coexist with non-ZTA workflows in an enterprise.

Migration to a ZTA approach to the enterprise may take place one business process at a time. Migrating an existing workflow to a ZTA will likely require (at least) a partial redesign.

Key features of Privileged Identity Management (PIM)

Providing just-in-time privileged access to Azure AD and Azure resources

PIM allows you to set an end time for the role.

Requiring approval to activate privileged roles.

Enforcing Azure Multi-Factor Authentication (MFA) to activate any role.

Using justification to understand why users activate.

Getting notifications.

Conducting access reviews.

Downloading an audit history.
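The just-in-time idea behind these features can be sketched as a time-bound grant that is issued only after the MFA, approval, and justification gates pass. This is a conceptual model with hypothetical names, not the PIM API; the one-hour default duration is an assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Activation:
    user: str
    role: str
    justification: str
    expires_at: datetime  # the end time set for the role


def activate(user, role, justification, mfa_passed, approved, now,
             duration=timedelta(hours=1)):
    """Grant a time-bound role activation only when every gate passes."""
    if not mfa_passed:
        raise PermissionError("MFA is required to activate a role")
    if not approved:
        raise PermissionError("activation has not been approved")
    if not justification.strip():
        raise ValueError("a justification is required")
    return Activation(user, role, justification, now + duration)


def is_active(activation, now):
    """The role is usable only until its end time."""
    return now < activation.expires_at


start = datetime(2022, 10, 18, 9, 0)
grant = activate("alice", "Global Administrator", "Rotate tenant keys",
                 mfa_passed=True, approved=True, now=start)
print(is_active(grant, start + timedelta(minutes=30)))  # True
print(is_active(grant, start + timedelta(hours=2)))     # False
```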

Shared responsibility model

Regardless of the deployment type, you always retain responsibility for the following:

Data

Endpoints

Accounts

Access management

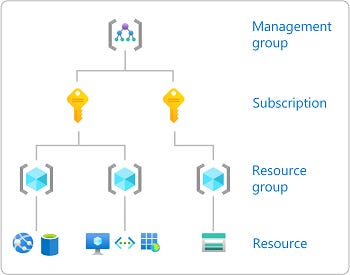

Azure hierarchy of systems

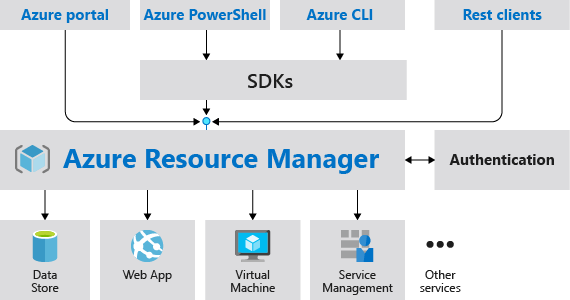

Azure Resource Manager is the deployment and management service for Azure.

Scope

Azure provides four levels of scope:

Management groups

Subscriptions

Resource groups

Resources

Lower levels inherit settings from higher levels.

Resource Groups

- All the resources in your group should share the same lifecycle. You deploy, update, and delete them together. If one resource, such as a database server, needs to exist on a different deployment cycle it should be in another resource group.

Azure role-based access control (RBAC)

RBAC is:

Control the ability for users to create, modify, or delete Azure resources and permissions.

RBAC is an authorization system built on Resource Manager that provides fine-grained access management of Azure resources.

You can use RBAC to let one employee manage virtual machines in a subscription while another manages SQL databases within the same subscription.

Each Azure subscription is associated with one Azure AD directory.

RBAC manages who has access to Azure resources, what areas they have access to, and what they can do with those resources. Examples:

Allowing a user to manage only virtual machines in a subscription, not virtual networks.

Allowing a user to manage all resources, such as virtual machines, websites, and subnets, within a specified resource group.

Allowing an app to access all resources in a resource group.

Allowing a DBA group to manage SQL databases in a subscription.
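A role assignment combines a security principal, a role definition, and a scope, and lower scopes inherit from higher ones. The sketch below models that inheritance with resource ID prefixes. It is a simplification: management group scopes are left out, the principal names are hypothetical, and the all-zero subscription ID is a placeholder.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoleAssignment:
    principal: str  # security principal: user, group, or app
    role: str       # role definition name
    scope: str      # scope at which the role is assigned


def scope_covers(scope, resource_id):
    """A scope covers itself and everything beneath it."""
    scope = scope.rstrip("/").lower()
    resource_id = resource_id.rstrip("/").lower()
    return resource_id == scope or resource_id.startswith(scope + "/")


def roles_for(assignments, principal, resource_id):
    """Roles the principal holds on the resource, including inherited ones."""
    return {a.role for a in assignments
            if a.principal == principal and scope_covers(a.scope, resource_id)}


SUB = "/subscriptions/00000000-0000-0000-0000-000000000000"
assignments = [
    RoleAssignment("dba-group", "SQL DB Contributor", SUB),
    RoleAssignment("web-app", "Reader", SUB + "/resourceGroups/rg-web"),
]
vm = SUB + "/resourceGroups/rg-web/providers/Microsoft.Compute/virtualMachines/vm1"
db = SUB + "/resourceGroups/rg-data/providers/Microsoft.Sql/servers/sql1/databases/db1"

print(roles_for(assignments, "web-app", vm))    # {'Reader'}
print(roles_for(assignments, "web-app", db))    # set()
print(roles_for(assignments, "dba-group", db))  # {'SQL DB Contributor'}
```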

Defense in depth

Firewalls, DMZs, and VNets are no longer enough.

Network Micro-Segmentation

A best practice recommendation is to adopt a Zero Trust strategy based on user, device, and application identities. Zero Trust enforces and validates access control at "access time".

Azure Network Security Groups can be used for basic layer 3 & 4 access controls between Azure Virtual Networks, their subnets, and the Internet.

Application Security Groups enable you to define fine-grained network security policies based on workloads, centralized on applications, instead of explicit IP addresses.

IP addresses

Private - A private IP address is dynamically or statically allocated to a VM from the address range defined for the virtual network, which conforms to RFC 1918. VMs use these addresses to communicate with other VMs in the same or connected virtual networks.

Public - Public IP addresses allow Azure resources to communicate with external clients.
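For reference, RFC 1918 reserves three IPv4 blocks for private use. A minimal check with Python's standard `ipaddress` module, with `is_rfc1918` being a helper name chosen for this sketch:

```python
import ipaddress

# The three private IPv4 blocks defined by RFC 1918.
RFC1918_BLOCKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_rfc1918(address):
    """Return True if the address falls inside an RFC 1918 private block."""
    ip = ipaddress.ip_address(address)
    return any(ip in block for block in RFC1918_BLOCKS)


print(is_rfc1918("10.1.2.3"))      # True
print(is_rfc1918("172.32.0.1"))    # False, just outside 172.16.0.0/12
print(is_rfc1918("192.168.1.10"))  # True
print(is_rfc1918("8.8.8.8"))       # False
```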

Network adapters

A VM can have more than one network adapter for different network configurations.

Distributed Denial of Service (DDoS) Protection

If the attack originates from one location, it is called a DoS.

If the attack originates from multiple networks and systems, it is called distributed denial of service (DDoS). A DDoS generally involves many systems sending traffic to targets as part of a botnet.

- Botnets can also be made up of Internet of Things (IoT) devices.

Designing and building for DDoS resiliency:

Best practice 1

- Ensure that security is a priority throughout the entire lifecycle of an application

Best practice 2

Design your applications to scale horizontally to meet the demands of an amplified load—specifically, in the event of a DDoS.

Best practice 3

Implement security-enhanced designs for your applications by using the built-in capabilities of the platform.

How Azure denial-of-service protection works

DDoS Protection blocks attack traffic and forwards the remaining traffic to its intended destination. Within a few minutes of attack detection, you’ll be notified with Azure Monitor metrics.

DDoS Protection Standard can mitigate the following types of attacks:

Volumetric attacks: The attack's goal is to flood the network layer with a substantial amount of seemingly legitimate traffic.

Protocol attacks: These exploit a weakness in the layer 3 and layer 4 protocol stack. They include SYN flood attacks, reflection attacks, and other protocol attacks.

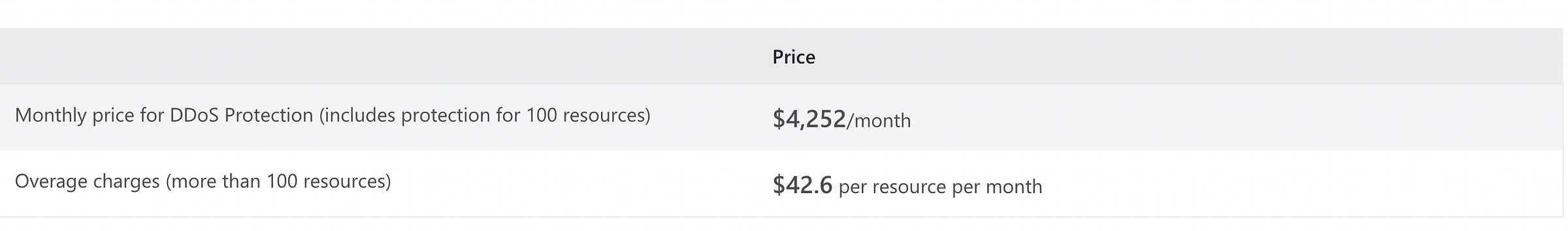

DDOS pricing as of 02/08/2022:

DDoS Protection Standard protects resources in a virtual network, including public IP addresses associated with virtual machines, load balancers, and application gateways.

Azure Firewall

Built-in high availability - Because high availability is built in, no additional load balancers are required and there’s nothing you need to configure.

Unrestricted cloud scalability

Network traffic filtering rules

Outbound Source Network Address Translation (SNAT) support

Inbound Destination Network Address Translation (DNAT) support

Azure Monitor logging

Azure Firewall has three rule types:

NAT rules

Network rules, Applied first

Application rules, Applied second

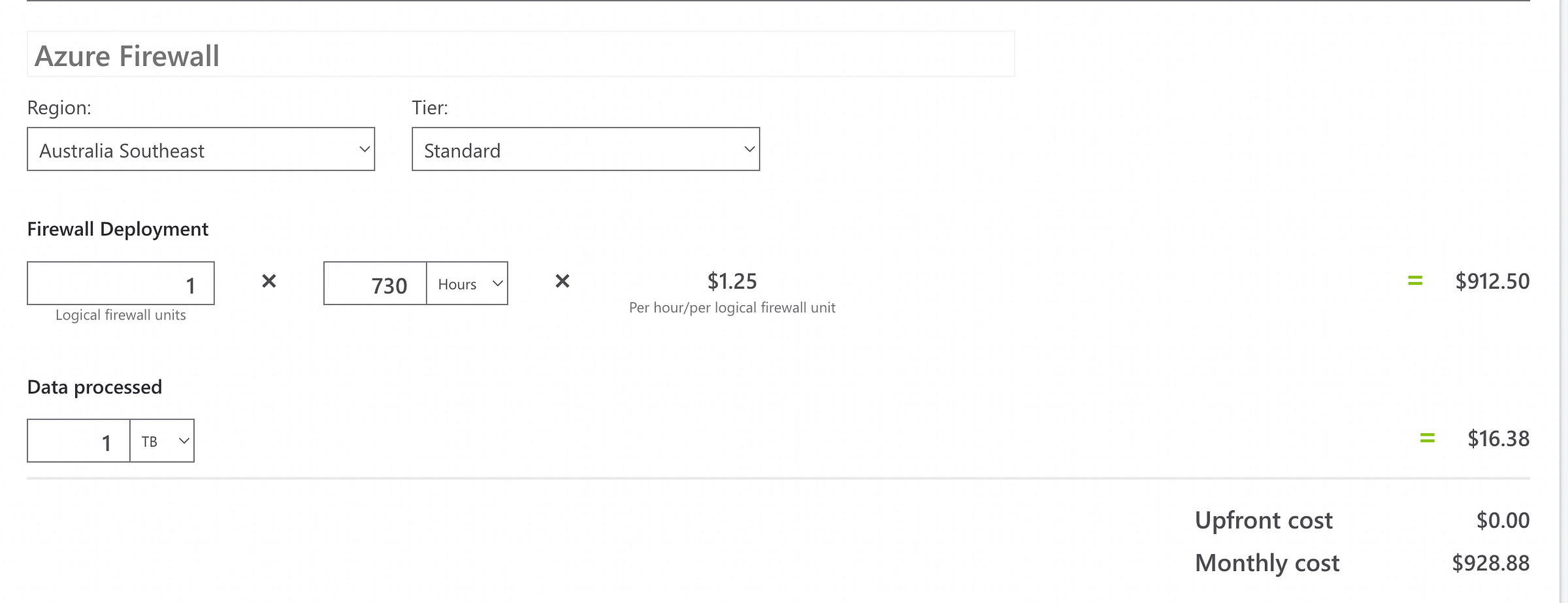

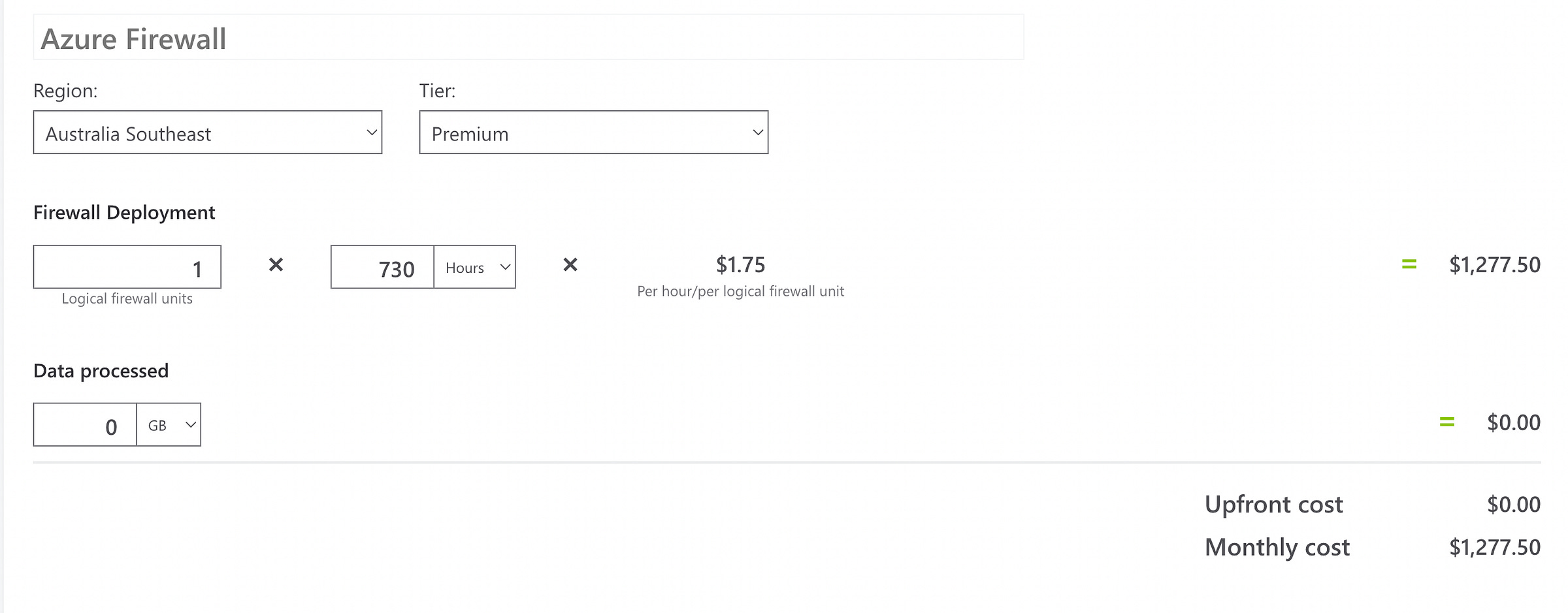

Azure Firewall Pricing as of 03/08/22

Standard size

Premium Size

Configure VPN forced tunneling

You configure forced tunneling in Azure via virtual network User Defined Routes (UDR).

User Defined Routes and Network Virtual Appliances

A User Defined Route (UDR) is a custom route in Azure that overrides Azure's default system routes or adds routes to a subnet's route table.
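How a UDR overrides a system route can be sketched as route selection: the longest matching prefix wins, and when prefixes tie, a user-defined route is preferred over a BGP route, which is preferred over a system route. The route table below is made up for illustration, including the appliance address 10.0.2.4.

```python
import ipaddress
from dataclasses import dataclass

# On a prefix tie: user-defined beats BGP, which beats system.
SOURCE_PREFERENCE = {"user": 0, "bgp": 1, "system": 2}


@dataclass
class Route:
    prefix: str
    next_hop: str
    source: str  # "user", "bgp", or "system"


def select_route(routes, destination):
    """Pick the route by longest prefix match, then by source preference."""
    dest = ipaddress.ip_address(destination)
    candidates = [r for r in routes if dest in ipaddress.ip_network(r.prefix)]
    if not candidates:
        return None
    return min(
        candidates,
        key=lambda r: (-ipaddress.ip_network(r.prefix).prefixlen,
                       SOURCE_PREFERENCE[r.source]),
    )


routes = [
    Route("0.0.0.0/0", "Internet", "system"),
    Route("10.0.0.0/16", "VirtualNetwork", "system"),
    # Forced tunneling style UDR: send internet-bound traffic to an appliance.
    Route("0.0.0.0/0", "VirtualAppliance 10.0.2.4", "user"),
]

print(select_route(routes, "8.8.8.8").next_hop)   # VirtualAppliance 10.0.2.4
print(select_route(routes, "10.0.1.5").next_hop)  # VirtualNetwork
```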

Network Virtual Appliances

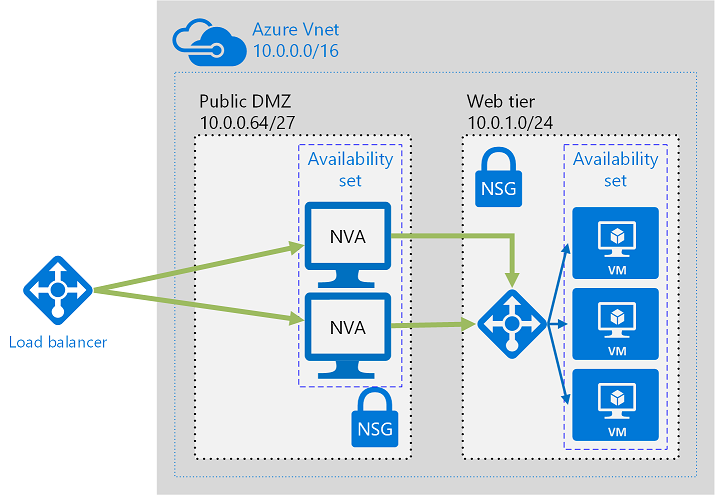

The following figure shows a high-availability architecture that implements an ingress perimeter network behind an internet-facing load balancer. This architecture is designed to provide connectivity to Azure workloads for layer 7 traffic, such as HTTP or HTTPS traffic. To make an NVA highly available, deploy more than one NVA into an availability set.

The benefit of this architecture is that all NVAs are active, and if one fails, the load balancer directs network traffic to the other NVA.

Both NVAs route traffic to the internal load balancer, so as long as one NVA is active, traffic will continue to flow.

The NVAs are required to terminate SSL traffic intended for the web tier VMs.

UDRs and NSGs help provide layer 3 and layer 4 (of the OSI model) security. NVAs help provide layer 7, application layer, security.

Deploy a Network Security Group

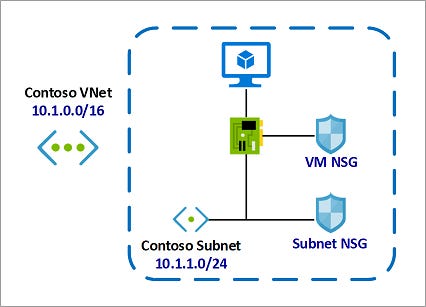

An individual subnet can have zero, or one, associated NSG.

An individual network interface can also have zero, or one, associated NSG.

You can effectively have dual traffic restriction for a virtual machine by associating an NSG first to a subnet, and then another NSG to the VM's network interface.

In this example, for inbound traffic:

The Subnet NSG is evaluated first.

Any traffic allowed through Subnet NSG is then evaluated by VM NSG.

The reverse applies to outbound traffic, with the VM NSG being evaluated first.

Any traffic allowed through VM NSG is then evaluated by Subnet NSG.

How traffic is evaluated

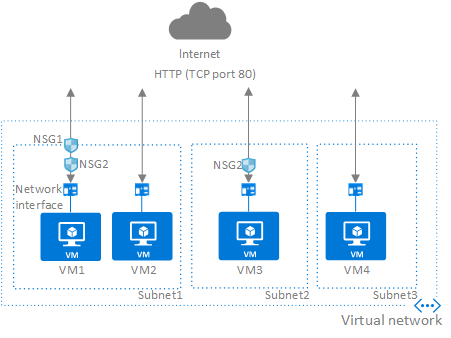

You can associate zero, or one, network security group to each virtual network subnet and network interface in a virtual machine.

Inbound traffic

VM1: To allow port 80 to the virtual machine, both NSG1 and NSG2 must have a rule that allows port 80 from the internet.

VM4: Traffic is allowed to VM4, because a network security group isn't associated to Subnet3, or the network interface in the virtual machine. All network traffic is allowed through a subnet and network interface if they don't have a network security group associated to them.

Outbound traffic is evaluated in the reverse order: the network interface NSG first, then the subnet NSG.

Unless you have a specific reason to, we recommend that you associate a network security group to a subnet, or a network interface, but not both.
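The inbound evaluation order can be sketched as two filters in series. This is a deliberately simplified model: rules match on port only, the built-in default rules are reduced to "deny anything unmatched", and `None` stands for "no NSG associated".

```python
from dataclasses import dataclass


@dataclass
class Rule:
    priority: int  # lower number is evaluated first
    port: int
    allow: bool


def nsg_allows(rules, port):
    """First matching rule by priority wins; no NSG (None) allows everything."""
    if rules is None:
        return True
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.port == port:
            return rule.allow
    return False  # simplification of the default deny for inbound traffic


def inbound_allowed(subnet_nsg, nic_nsg, port):
    """Inbound: the subnet NSG is evaluated first, then the NIC NSG."""
    return nsg_allows(subnet_nsg, port) and nsg_allows(nic_nsg, port)


nsg1 = [Rule(100, 80, True)]  # associated with the subnet
nsg2 = [Rule(100, 80, True)]  # associated with the network interface

print(inbound_allowed(nsg1, nsg2, 80))  # True: both NSGs allow port 80
print(inbound_allowed(nsg1, [], 80))    # False: the NIC NSG has no allow rule
print(inbound_allowed(None, None, 80))  # True: no NSG on subnet or NIC (VM4)
```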

Why use a service endpoint?

Improved security for your Azure service resources

Optimal routing for Azure service traffic from your virtual network

Endpoints always take service traffic directly from your virtual network to the service on the Microsoft Azure backbone network.

With service endpoints, the source IP addresses of the virtual machines in the subnet switch from public IPv4 addresses to private IPv4 addresses for service traffic.

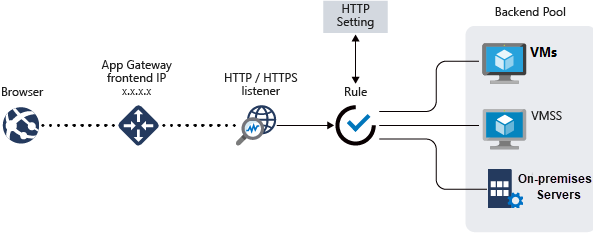

Azure application gateway

Application Gateway can make routing decisions based on additional attributes of an HTTP request, for example URI path or host headers. For example, you can route traffic based on the incoming URL: if /images is in the incoming URL, the request can be sent to a back-end pool dedicated to images.
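URL-based routing of this kind can be sketched as a path-prefix lookup. The pool names and path rules below are hypothetical, and the real gateway is configured through rules rather than code.

```python
from urllib.parse import urlsplit

# Hypothetical path rules mapping URL prefixes to back-end pools.
PATH_RULES = [
    ("/images/", "image-pool"),
    ("/video/", "video-pool"),
]
DEFAULT_POOL = "default-pool"


def pick_pool(url):
    """Return the back-end pool whose path prefix matches the request URL."""
    path = urlsplit(url).path
    for prefix, pool in PATH_RULES:
        if path.startswith(prefix):
            return pool
    return DEFAULT_POOL


print(pick_pool("https://contoso.com/images/logo.png"))  # image-pool
print(pick_pool("https://contoso.com/video/intro.mp4"))  # video-pool
print(pick_pool("https://contoso.com/"))                 # default-pool
```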

Features:

Secure Sockets Layer (SSL/TLS) termination

Autoscaling

URL-based routing

Multiple-site hosting

Redirection

Session affinity

Custom error pages

Rewrite HTTP headers

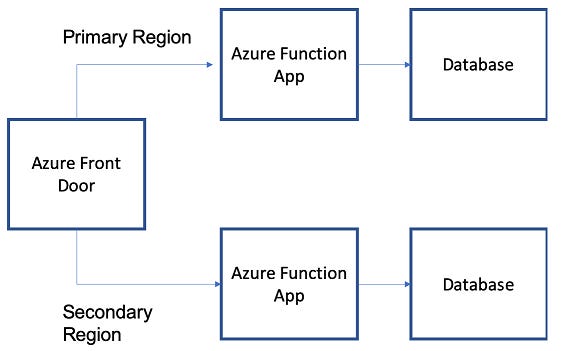

Azure front door

Front Door works at Layer 7 or HTTP/HTTPS layer and uses split TCP-based anycast protocol. Front Door ensures that your end users promptly connect to the nearest Front Door POP (Point of Presence).

Features:

Accelerate application performance - Using split TCP-based anycast protocol

Increase application availability with smart health probes

URL-based routing

Multiple-site hosting

Session affinity

TLS termination

Custom domains and certificate management

Application layer security

URL redirection

URL rewrite

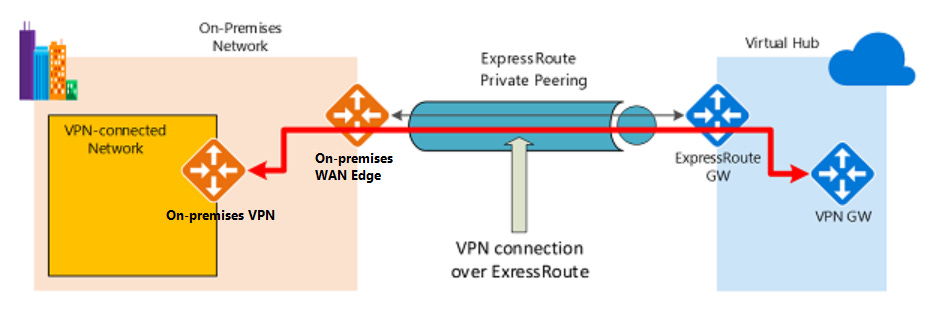

ExpressRoute

ExpressRoute is a direct, private connection from your WAN (not over the public Internet) to Microsoft Services, including Azure.

ExpressRoute Encryption

Azure Virtual WAN uses an Internet Protocol Security (IPsec) Internet Key Exchange (IKE) VPN connection from your on-premises network to Azure over the private peering of an Azure ExpressRoute circuit.

Endpoint protection

First step: Install antimalware to help identify and remove viruses, spyware, and other malicious software

Second Step: Monitor the status of the antimalware. Integrate your antimalware solution with Microsoft Defender for Cloud to monitor the status of the antimalware protection.

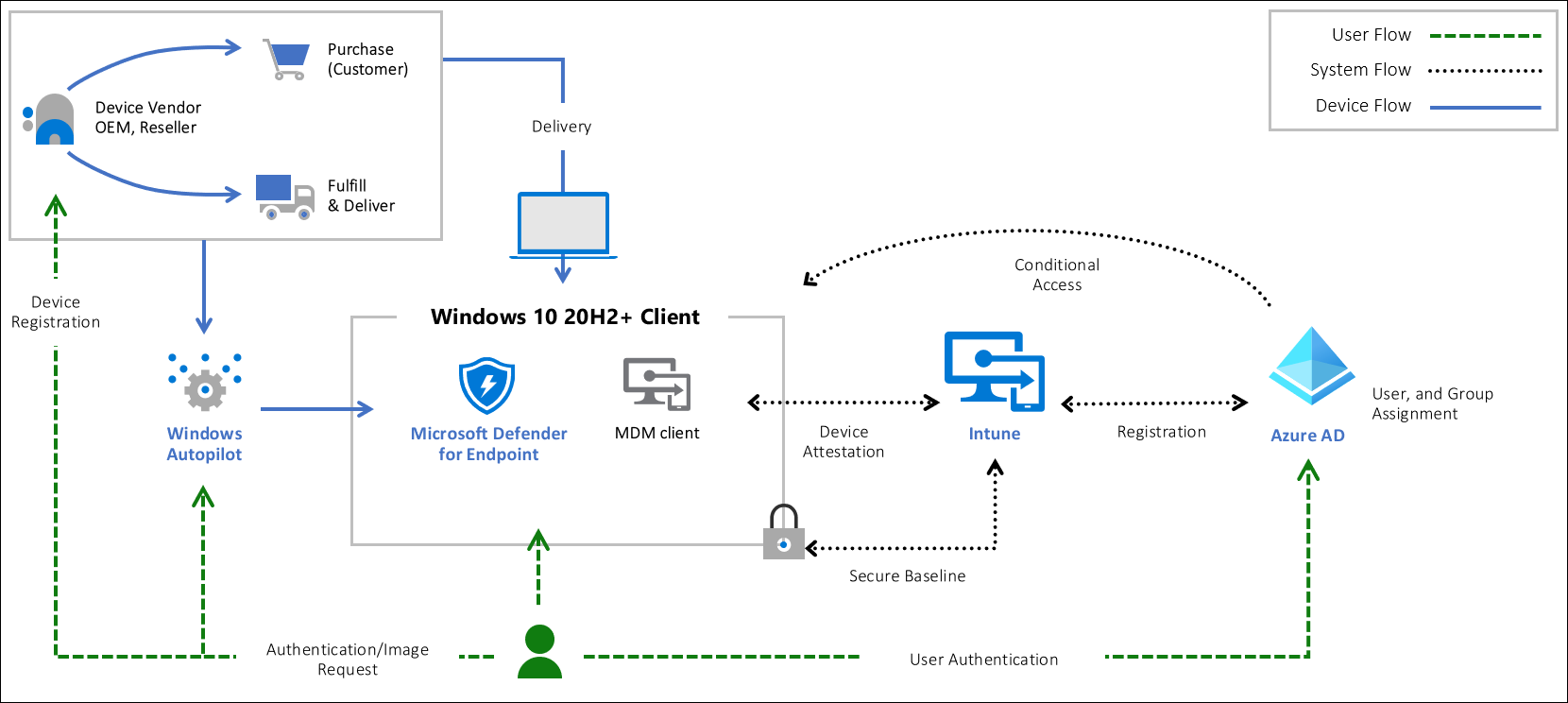

Privileged access

Zero Trust means that you don't purchase from generic retailers but only source hardware from an authorized OEM that supports Autopilot.

Hardware root-of-trust

To have a secured workstation you need to make sure the following security technologies are included on the device:

Trusted Platform Module (TPM) 2.0

BitLocker Drive Encryption

UEFI Secure Boot

Drivers and Firmware Distributed through Windows Update

Virtualization and HVCI Enabled

Drivers and Apps HVCI-Ready

Windows Hello

DMA I/O Protection

System Guard

Modern Standby

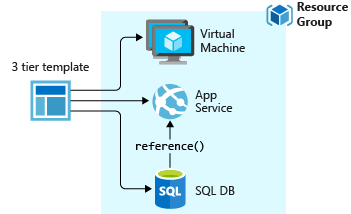

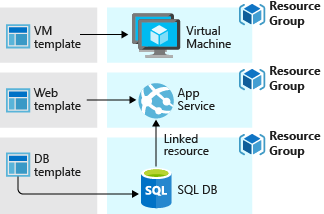

Virtual machine templates

How you define templates and resource groups is entirely up to you and how you want to manage your solution. For example, you can deploy your three tier application through a single template to a single resource group.

You don't have to define your entire infrastructure in a single template. Often, it makes sense to divide your deployment requirements into a set of targeted, purpose-specific templates. When you deploy a template, Resource Manager converts the template into REST API operations.

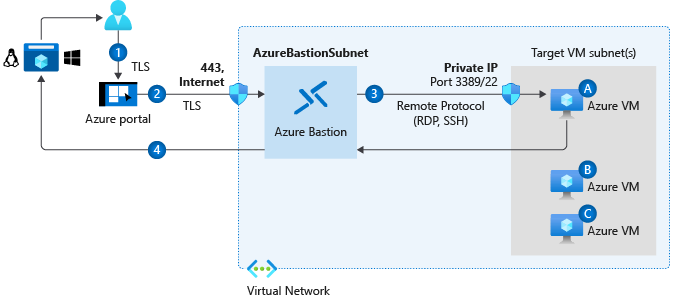

Azure Bastion

The Azure Bastion service is a fully platform-managed PaaS service that you provision inside your virtual network.

It provides secure and seamless RDP/SSH connectivity to your virtual machines directly in the Azure portal over TLS.

When you connect using Azure Bastion, your virtual machines do not need a public IP address.

Azure Bastion is deployed to a virtual network and supports virtual network peering. Specifically, Azure Bastion manages RDP/SSH connectivity to VMs created in the local or peered virtual networks.

The Bastion host is deployed in the virtual network.

The user connects to the Azure portal using any HTML5 browser.

The user selects the virtual machine to connect to.

With a single click, the RDP/SSH session opens in the browser.

No public IP is required on the Azure VM.

Disk encryption

Supported operating systems

Windows client: Windows 8 and later.

Windows Server: Windows Server 2008 R2 and later.

Windows 10 Enterprise multi-session.

Azure Disk Encryption uses the BitLocker external key protector for Windows VMs. For domain joined VMs, don't push any group policies that enforce TPM protectors.

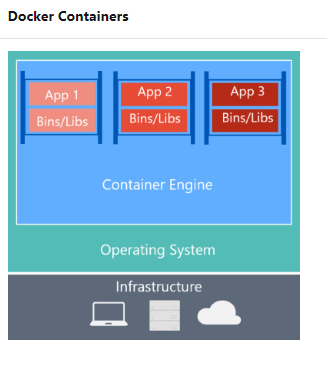

Features of Containers

Isolation

Operating System: Runs the user mode portion of an operating system.

Deployment

Persistent storage

Fault tolerance

Networking

Azure Container Instances

A container builds on top of the kernel, but the kernel doesn't provide all of the APIs and services an app needs to run–most of these are provided by system files (libraries) that run above the kernel in user mode.

Because a container is isolated from the host's user mode environment, the container needs its own copy of these user mode system files, which are packaged into something known as a base image.

Because containers require far fewer resources (for example, they don't need a full OS), they're easy to deploy and they start fast. This allows you to have higher density, meaning that it allows you to run more services on the same hardware unit, thereby reducing costs.

Containers are built from images that are stored in one or more repositories. These repositories can belong to a public registry, like Docker Hub, or to a private registry.

Azure Container Instances (ACI) is a PaaS service for scenarios that can operate in isolated containers, including simple applications, task automation, and build jobs.

For full container orchestration, including service discovery across multiple containers, automatic scaling, and coordinated application upgrades, it is best to use Azure Kubernetes Service (AKS).

Features of ACI

Deploy containers from DockerHub or Azure Container Registry.

Azure Container Instances enables exposing your container groups directly to the internet with an IP address and a fully qualified domain name (FQDN).

Azure Container Instances guarantees your application is as isolated in a container as it would be in a VM.

Custom sizes

Persistent storage

Flexible billing: supports per-GB, per-CPU, and per-second billing.

Linux and Windows containers

A container registry is a service that stores and distributes container images. Docker Hub is a public container registry that supports the open source community and serves as a general catalog of images.

Monitor container

The container monitoring solution in Log Analytics can help you view and manage your Docker and Windows container hosts in a single location:

View detailed audit information that shows commands used with containers.

Troubleshoot containers by viewing and searching centralized logs without having to remotely view Docker or Windows hosts.

Find containers that may be noisy and consuming excess resources on a host.

View centralized CPU, memory, storage, and network usage and performance information for containers.

Azure Container Registry authentication

Individual login with Azure AD

Service principal

Admin account

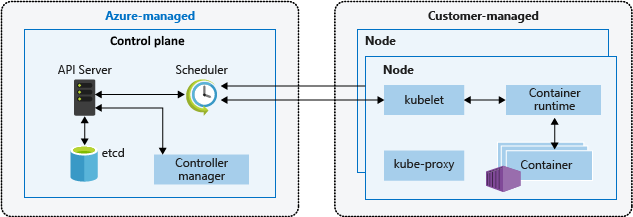

Azure Kubernetes Service (AKS)

Kubernetes is a platform that manages container-based applications and their associated networking and storage components.

The focus is on the application workloads, not the underlying infrastructure components.

Kubernetes cluster architecture

A Kubernetes cluster is divided into two components:

Control plane nodes provide the core Kubernetes services and orchestration of application workloads.

Nodes run your application workloads.

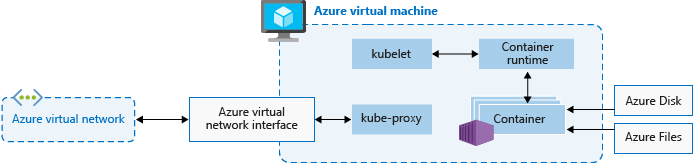

Azure Kubernetes Service architecture

Elements:

Cluster master

kube-apiserver

etcd

kube-scheduler

kube-controller-manager

Nodes and node pools

To run your applications and supporting services, you need a Kubernetes node. An AKS cluster has one or more nodes, each of which is an Azure virtual machine (VM) that runs the Kubernetes node components and container runtime.

AKS Terminology

Pools: Group of nodes with identical configuration

Node: Individual VM running containerized applications

Pods: Single instance of an application. A pod can contain multiple containers

Deployment: One or more identical pods managed by Kubernetes

Manifest: YAML file describing a deployment
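To show how these terms fit together, the sketch below builds the structure of a Deployment manifest as a Python dictionary and prints it as JSON. Manifests are usually written in YAML; JSON carries the same fields. The application name `web`, the image `nginx:1.25`, and the helper name are assumptions for this example.

```python
import json


def deployment_manifest(name, image, replicas):
    """Build a Deployment that asks Kubernetes for `replicas` identical pods."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,                 # number of identical pods
            "selector": {"matchLabels": labels},  # which pods belong to it
            "template": {                         # the pod definition
                "metadata": {"labels": labels},
                "spec": {
                    # A pod can contain multiple containers; this one has one.
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


manifest = deployment_manifest("web", "nginx:1.25", 3)
print(json.dumps(manifest, indent=2))
```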

Azure Kubernetes Service networking

ClusterIP - Creates an internal IP address for use within the AKS cluster.

NodePort - Creates a port mapping on the underlying node that allows the application to be accessed directly with the node IP address and port.

LoadBalancer - Creates an Azure load balancer resource, configures an external IP address, and connects the requested pods to the load balancer backend pool.

ExternalName - Creates a specific DNS entry for easier application access.

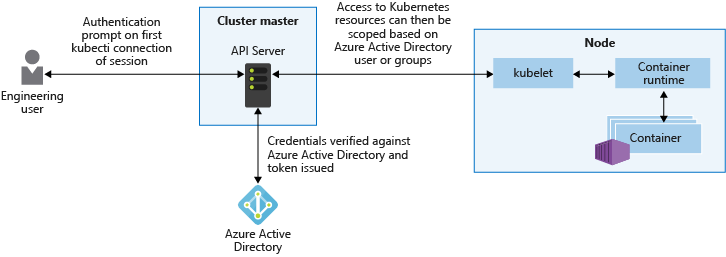

Authentication to Azure Kubernetes Service with Active Directory

The security of AKS clusters can be enhanced by integrating Azure Active Directory (Azure AD).

Azure AD authentication in AKS clusters uses OpenID Connect, an identity layer built on top of the OAuth 2.0 protocol.

Azure Monitor

You can analyze log data that Azure Monitor collects by using queries to quickly retrieve, consolidate, and analyze the collected data.

On the left side of the figure are the sources of monitoring data that populate these data stores.

Processed events that Microsoft Defender for Cloud produces are published to the Azure activity log, one of the log types available through Azure Monitor.

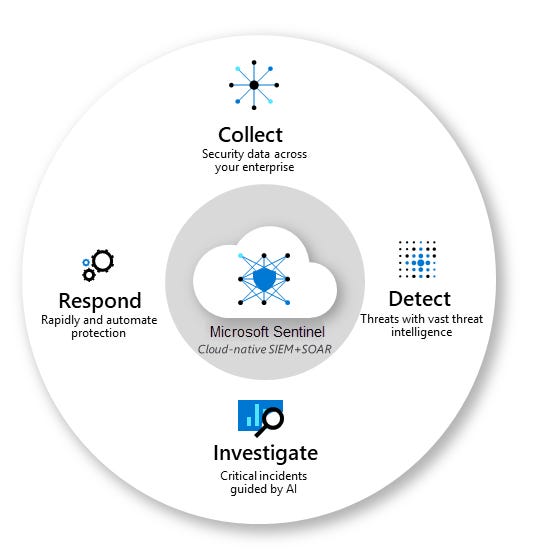

Sentinel:

- Provides SIEM & SOAR

SIEM: Security information and event management

SOAR: Security orchestration, automation, and response

Collect data at cloud scale across all users, devices, applications, and infrastructure, both on-premises and in multiple clouds.

Detect previously undetected threats

Investigate threats with artificial intelligence

Respond to incidents

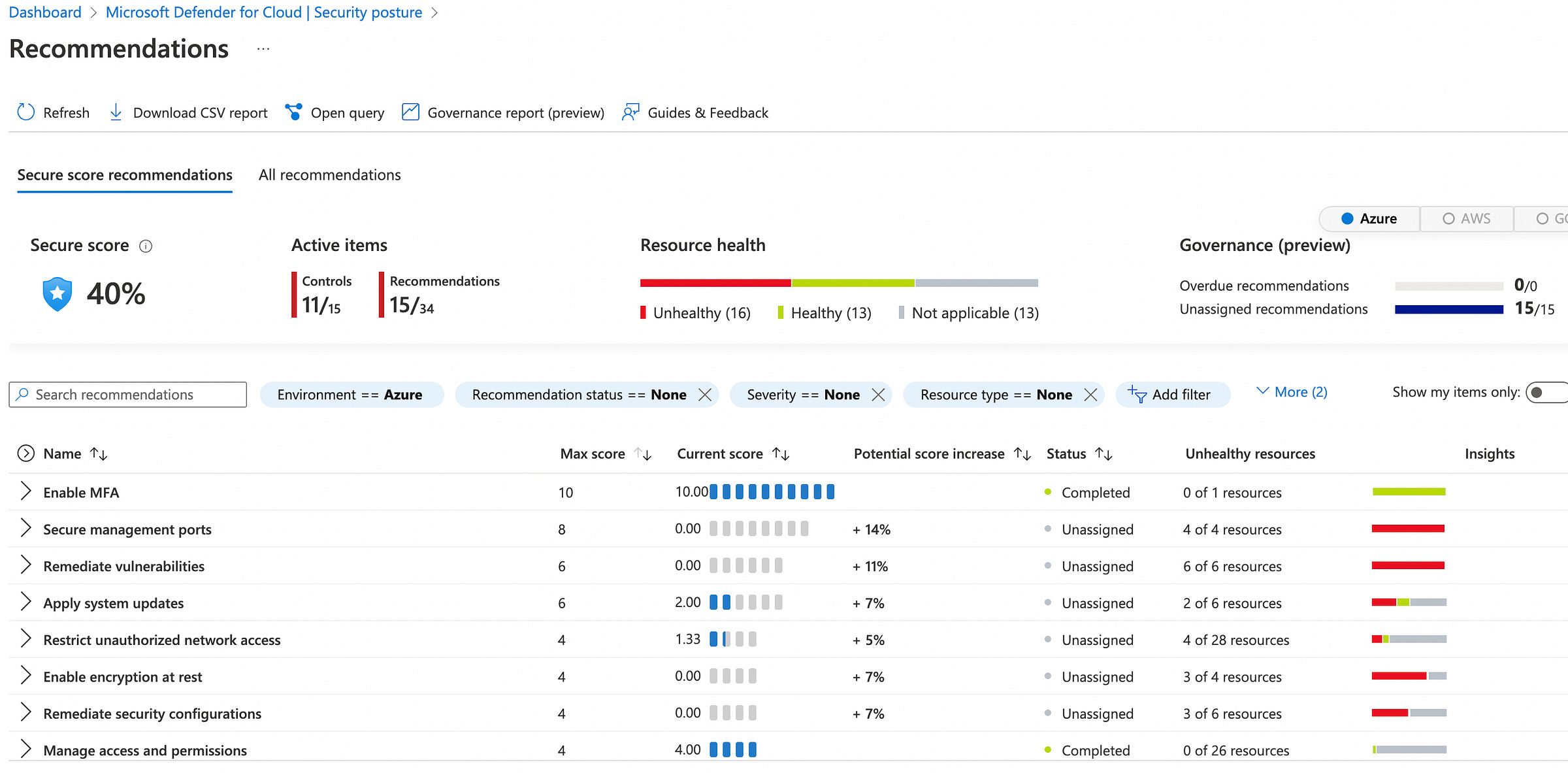

Defender for Cloud

Defender for Cloud is a unified infrastructure security management system that addresses the three most urgent security challenges:

Changing workloads

Increasingly sophisticated attacks

Security skills are in short supply

Security Center provides the tools to:

Strengthen security posture

Protect against threats

Get secure faster

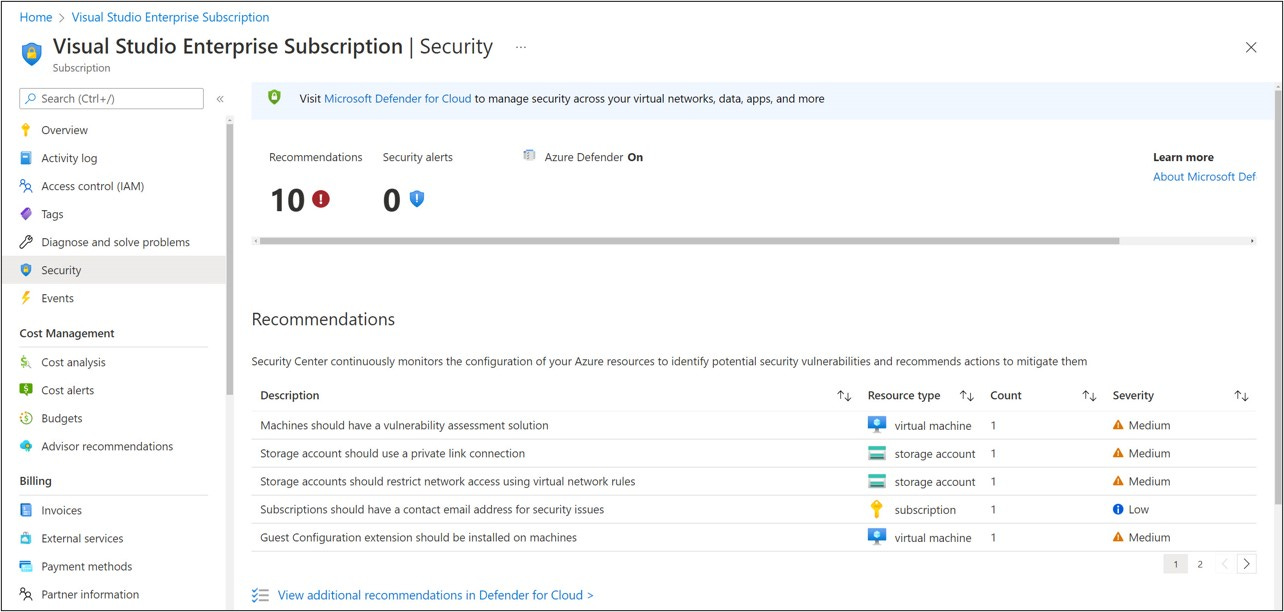

Security Center (Note: called simply Security in Azure) protects non-Azure servers and virtual machines in the cloud or on-premises.

Security Center

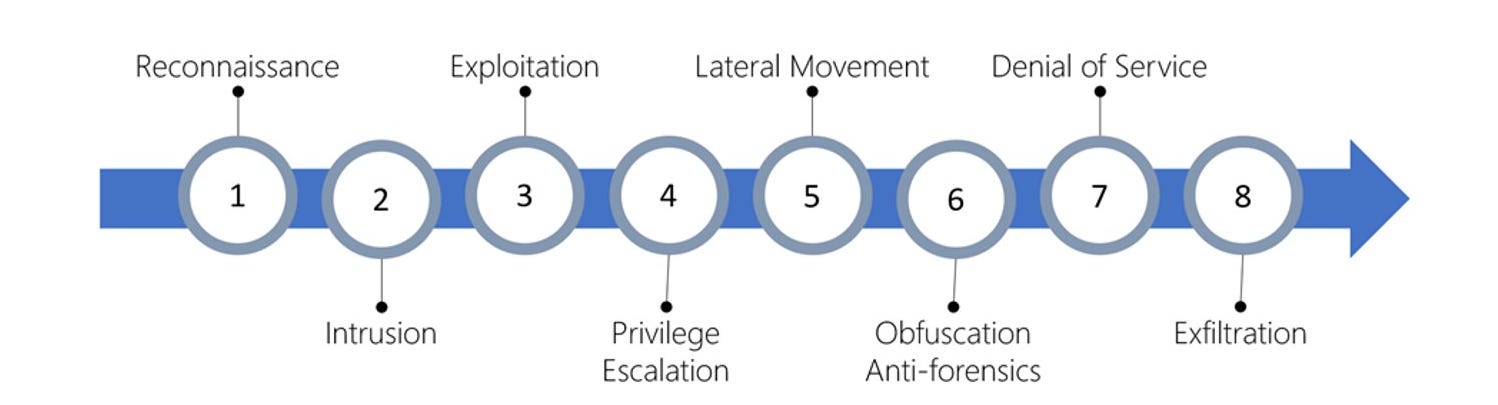

Enables you to detect and prevent threats at the Infrastructure as a Service (IaaS) layer, non-Azure servers as well as for Platforms as a Service (PaaS) in Azure. Security Center's supported kill chain intents are based on the MITRE ATT&CK™ framework.

MITRE ATT&CK® is a globally accessible knowledge base of adversary tactics and techniques based on real-world observations.

https://attack.mitre.org/

Security center policies

Azure Security Benchmark is the foundation for Security Center’s recommendations and has been fully integrated as the default policy initiative.

Security Center automatically creates a default security policy for each of your Azure subscriptions.

Azure policy:

A policy is a rule.

An initiative is a collection of policies.

An assignment is the application of an initiative or a policy to a specific scope (management group, subscription, or resource group).

In practice, it works like this:

Azure Security Benchmark is an initiative that contains requirements.

For example, Azure Storage accounts must restrict network access to reduce their attack surface.

The initiative includes multiple policies, ex: "Storage accounts should restrict network access using virtual network rules".
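The relationship between policy, initiative, and assignment can be sketched with a few small classes. This is a toy model, not how Azure Policy is implemented (real definitions are JSON documents evaluated by the platform); the resource dictionary shape, the `default_network_action` field, and the scope string are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Policy:
    name: str
    is_compliant: Callable[[Dict], bool]  # the rule itself


@dataclass
class Initiative:
    name: str
    policies: List[Policy]  # a collection of policies


@dataclass
class Assignment:
    initiative: Initiative
    scope: str  # management group, subscription, or resource group path

    def evaluate(self, resource: Dict) -> List[str]:
        """Return the names of violated policies for an in-scope resource."""
        if not resource["id"].startswith(self.scope):
            return []  # resource is outside the assignment scope
        return [p.name for p in self.initiative.policies if not p.is_compliant(resource)]


restrict_network = Policy(
    name="Storage accounts should restrict network access using virtual network rules",
    # Hypothetical check: non-storage resources pass, storage must default to Deny.
    is_compliant=lambda r: r.get("type") != "storageAccount"
    or r.get("default_network_action") == "Deny",
)
benchmark = Initiative("Azure Security Benchmark", [restrict_network])
assignment = Assignment(benchmark, scope="/subscriptions/sub-demo")

open_account = {"id": "/subscriptions/sub-demo/resourceGroups/rg1/storage/open",
                "type": "storageAccount", "default_network_action": "Allow"}
locked_account = dict(open_account, default_network_action="Deny")
other_subscription = dict(open_account, id="/subscriptions/other/resourceGroups/rg1/storage/open")

print(assignment.evaluate(open_account))
```

Each violated policy name corresponds to a recommendation that would be surfaced for the resource.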

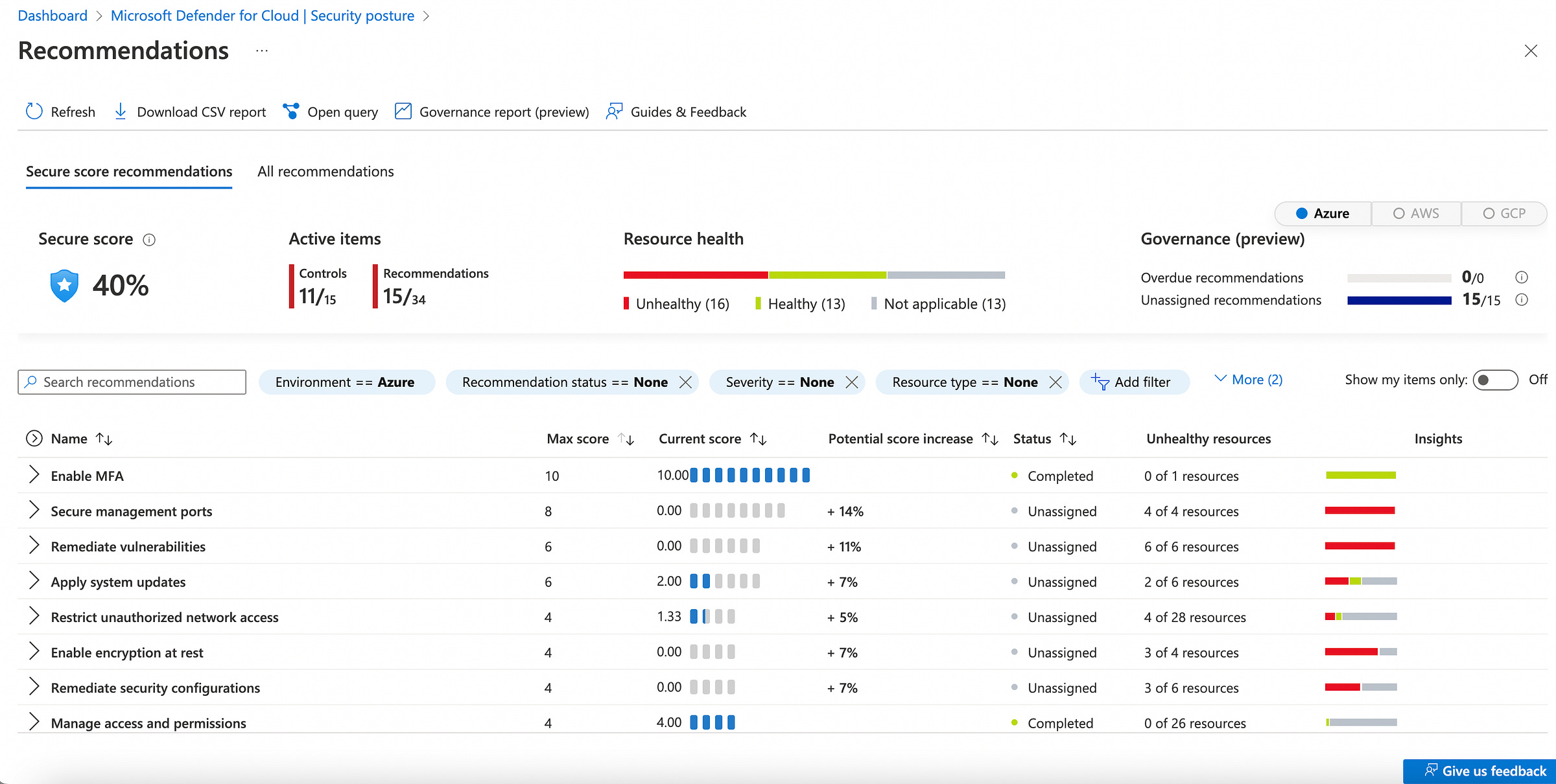

Microsoft Defender for Cloud continually assesses your connected subscriptions. If it finds a resource that doesn't satisfy a policy, it displays a recommendation to fix that situation and harden the security of resources that aren't meeting your security requirements.

Microsoft Defender for Cloud has two main goals:

understand your current security situation

improve your secure score

Security Center continually assesses your resources, subscriptions, and organization for security issues.

It then aggregates all the findings into a single score so that you can tell, at a glance, your current security situation: the higher the score, the lower the identified risk level.

Improving your secure score

To improve your secure score, remediate security recommendations from your recommendations list.

Defender for Cloud

Offered in two modes:

Without enhanced security features (Free) - Defender for Cloud is enabled for free on all your Azure subscriptions.

With all enhanced security features (not free) - Defender for Cloud adds the following capabilities:

Microsoft Defender for Endpoint - Microsoft Defender for Servers

Vulnerability assessment for virtual machines, container registries, and SQL resources

Multi-cloud security

Hybrid security

Threat protection alerts

Track compliance with a range of standards

Access and application controls

Container security features

Breadth threat protection for resources connected to Azure

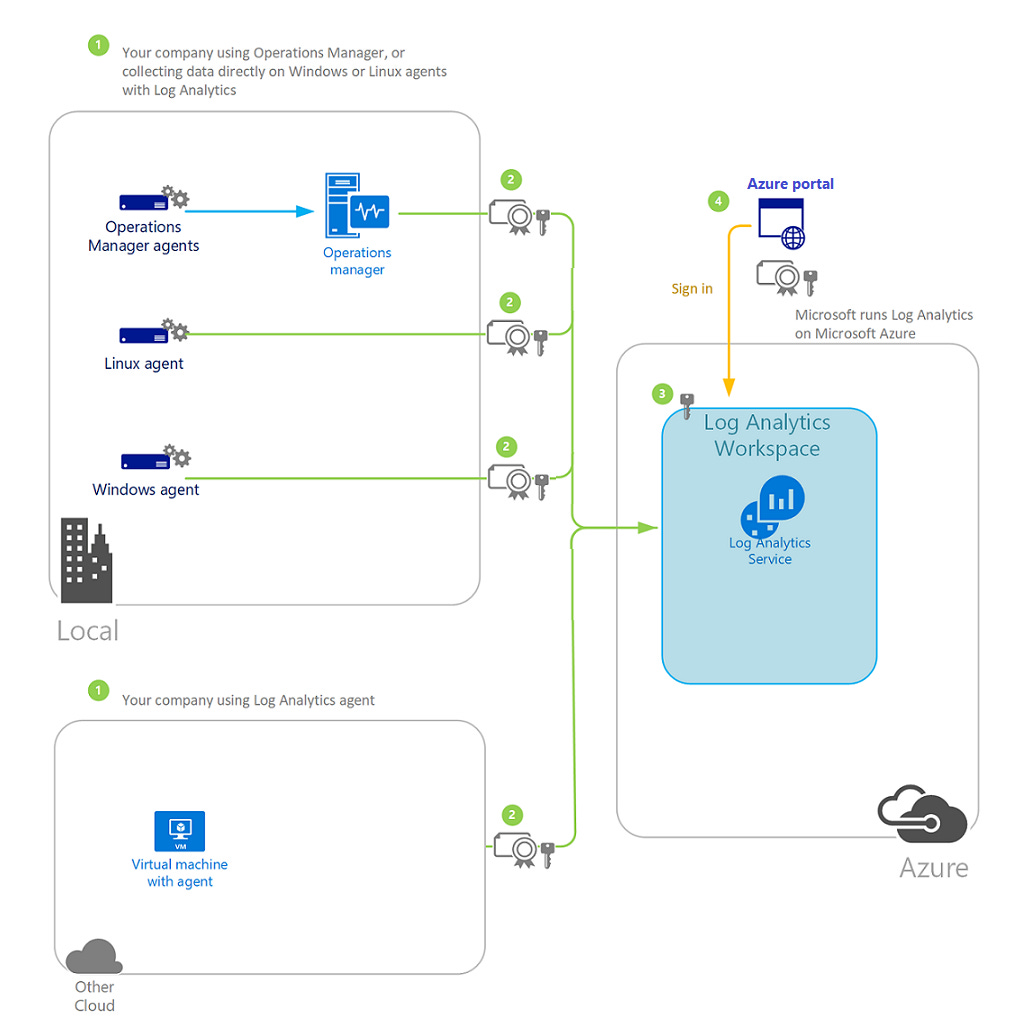

Log Analytics

Log Analytics helps you monitor cloud and on-premises environments to maintain availability and performance. Log Analytics is the primary tool in the Azure portal for writing log queries and interactively analyzing their results.

Data destinations

The Log Analytics agent sends data to a Log Analytics workspace in Azure Monitor. The Windows agent can be multihomed to send data to multiple workspaces and System Center Operations Manager management groups. The Linux agent can send to only a single destination.

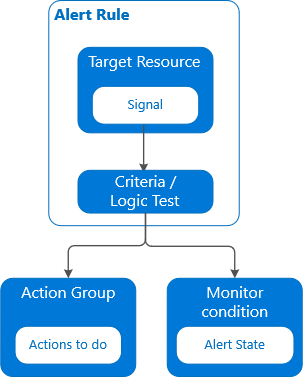

Alerts

Alerts in Azure Monitor notify you of critical conditions and potentially attempt to take corrective action.

Alert rules based on metrics provide near real time alerting based on numeric values

Rules based on logs allow for complex logic across data from multiple sources.

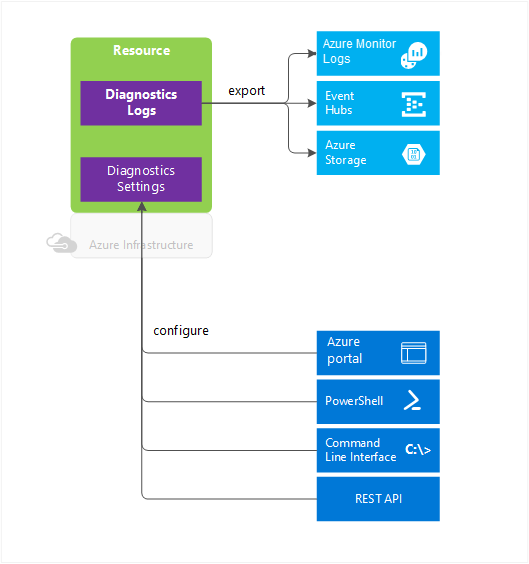

Azure Monitor makes two types of diagnostic logs available:

Tenant logs. These logs come from tenant-level services that exist outside an Azure subscription, such as Azure Active Directory

Resource logs. These logs come from Azure services that deploy resources within an Azure subscription, such as Network Security Groups

Uses for diagnostic logs

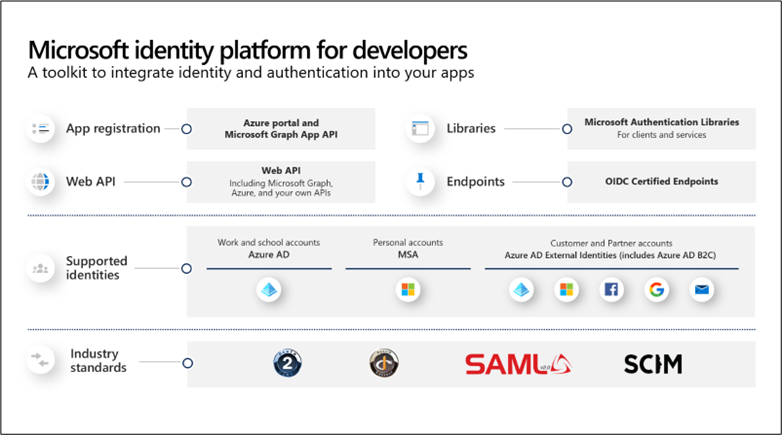

Microsoft identity platform

The Microsoft identity platform is an evolution of the Azure Active Directory (Azure AD) developer platform. It consists of:

an authentication service

open-source libraries

application registration, and configuration (through a developer portal and application API),

full developer documentation

quickstart samples

code samples,

tutorials,

how-to guides

The Microsoft identity platform supports industry-standard protocols such as OAuth 2.0 and OpenID Connect.

Microsoft Authentication Library (MSAL) is recommended for use against the identity platform endpoints. MSAL supports Azure Active Directory B2C

The Microsoft identity platform has two endpoints (v1.0 and v2.0); always aim for v2.0. The v2.0 endpoint unifies Microsoft personal accounts and work accounts into a single authentication system.

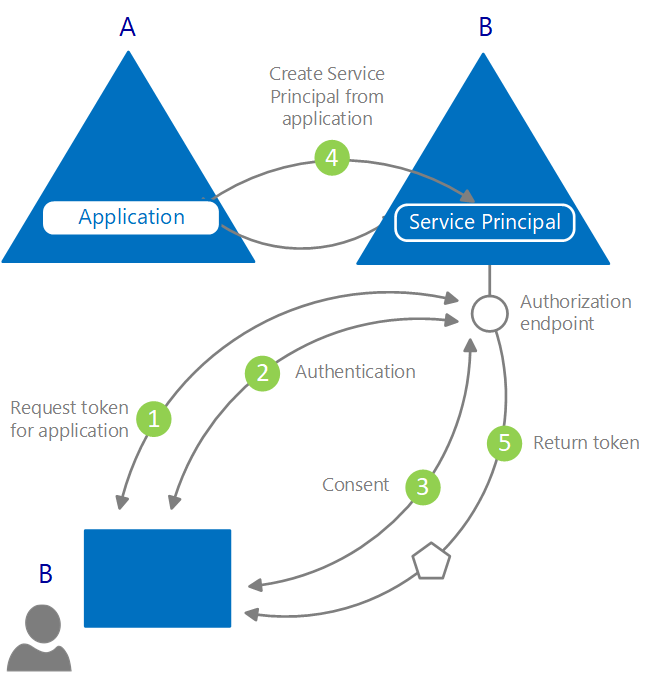

Azure AD represents applications following a specific model:

Identify the app according to the authentication protocols it supports:

This involves enumerating all the identifiers

URLs

Secrets, and related information

Holds all the data needed to support authentication at run time

Holds all the data for deciding which resources an app might need to access

Handle user consent during token request time and facilitate the dynamic provisioning of apps across tenants:

Enables users and administrators to dynamically grant or deny consent for the app to access resources on their behalf.

Enables administrators to ultimately decide what apps are allowed to do, which users can use specific apps, and how directory resources are accessed.

An application object describes an application as an abstract entity. Azure AD uses a specific application object as a blueprint to create a service principal. It's the service principal that defines what the app can do in a specific target directory, who can use it, what resources it has access to.

Register an application with App Registration

Before an app can get a token from the Microsoft identity platform, it must be registered in the Azure portal.

Registration integrates the app (yours) with the Microsoft identity platform and establishes the information that it uses to get tokens, including:

Application ID: A unique identifier assigned by the Microsoft identity platform.

Redirect URI/URL: One or more endpoints at which your app will receive responses from the Microsoft identity platform.

Application Secret: A password or a public/private key pair that your app uses to authenticate with the Microsoft identity platform. (Not needed for native or mobile apps.)
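As an illustration of how the registered values are used, the sketch below builds an OAuth 2.0 authorization-code request URL for the v2.0 endpoint. The tenant, client ID, redirect URI, and state values are placeholders, and `build_authorize_url` is a hypothetical helper; in practice MSAL constructs this request for you.

```python
from urllib.parse import urlencode, urlparse, parse_qs


def build_authorize_url(tenant: str, client_id: str, redirect_uri: str,
                        scopes: list, state: str) -> str:
    """Build the URL a user is sent to in order to sign in and consent."""
    base = f"https://login.microsoftonline.com/{tenant}/oauth2/v2.0/authorize"
    query = urlencode({
        "client_id": client_id,        # Application ID from the registration
        "response_type": "code",       # authorization code flow
        "redirect_uri": redirect_uri,  # must match a registered redirect URI
        "response_mode": "query",
        "scope": " ".join(scopes),     # space-separated permissions
        "state": state,                # echoed back; used to detect forged responses
    })
    return f"{base}?{query}"


# Placeholder values for illustration only.
url = build_authorize_url(
    tenant="common",
    client_id="00000000-0000-0000-0000-000000000000",
    redirect_uri="http://localhost:5000/callback",
    scopes=["openid", "User.Read"],
    state="12345",
)
print(url)
```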

Microsoft Graph two types of permissions

Delegated permissions are used by apps that have a signed-in user present

Application permissions are used by apps that run without a signed-in user present; for example, apps that run as background services or daemons. Application permissions can only be consented by an administrator.

For delegated permissions, your app can never have more privileges than the signed-in user.

For application permissions, the effective permissions of your app will be the full level of privileges implied by the permission. For example, an app that has the User.ReadWrite.All application permission can update the profile of every user in the organization.
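The difference in effective permissions can be sketched as simple set logic. This is a deliberately simplified model: it treats the user's privileges as a set of permission names, which is an assumption made for illustration, and `effective_permissions` is a hypothetical function, not part of any Microsoft library.

```python
from typing import Iterable, Optional, Set


def effective_permissions(permission_type: str, app_permissions: Iterable[str],
                          user_privileges: Optional[Iterable[str]] = None) -> Set[str]:
    """Model which permissions an app can actually exercise."""
    granted = set(app_permissions)
    if permission_type == "delegated":
        if user_privileges is None:
            raise ValueError("delegated permissions require a signed-in user")
        # The app is limited to what both the app and the user are allowed to do.
        return granted & set(user_privileges)
    if permission_type == "application":
        # No user is present: the app gets everything the permission implies.
        return granted
    raise ValueError(f"unknown permission type: {permission_type}")


app = {"User.Read", "User.ReadWrite.All"}
regular_user = {"User.Read"}

print(effective_permissions("delegated", app, regular_user))
print(effective_permissions("application", app))
```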

Graph API

Graph Security API is an intermediary service (or broker) that provides a single programmatic interface to connect multiple Microsoft Graph Security providers (also called security providers or providers).

Graph Security API federates requests to all providers in the Microsoft Graph Security ecosystem.

Managed identities

Challenge: how to manage the credentials in your code for authenticating to cloud services.

Solution:

Managed Identities = Client ID + Principal ID + Azure Instance Metadata Service (IMDS)

Azure Key Vault provides a way to securely store credentials, secrets, and other keys, but your code has to authenticate to Key Vault to retrieve them.

Two types of managed identities:

A system-assigned managed identity is enabled directly on an Azure service instance.

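A user-assigned managed identity is created as a standalone Azure resource and can be assigned to one or more Azure service instances.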
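To show what "no credentials in code" looks like, here is a sketch of the token request that code running on an Azure VM can send to the Instance Metadata Service. The request is only built, not sent, because the endpoint is reachable only from inside Azure. The helper name `build_imds_token_request` is hypothetical, and the API version shown is one commonly used value that should be checked against current documentation.

```python
import urllib.request
from typing import Optional
from urllib.parse import urlencode

# Link-local address of the Instance Metadata Service inside an Azure VM.
IMDS_TOKEN_URL = "http://169.254.169.254/metadata/identity/oauth2/token"


def build_imds_token_request(resource: str,
                             client_id: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a managed identity token request."""
    params = {"api-version": "2018-02-01", "resource": resource}
    if client_id:
        # Selects a specific user-assigned identity when several are attached.
        params["client_id"] = client_id
    return urllib.request.Request(
        f"{IMDS_TOKEN_URL}?{urlencode(params)}",
        headers={"Metadata": "true"},  # required header; no secret is involved
    )


request = build_imds_token_request("https://vault.azure.net")
print(request.full_url)
```

The returned access token would then be presented to Key Vault as a bearer token, so no password or key is stored with the application.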

Data sovereignty

Data sovereignty is the concept that information, which has been converted and stored in binary digital form, is subject to the laws of the country or region in which it is located.

In Azure, customer data might be replicated within a selected geographic area for enhanced data durability during a major data center disaster, and in some cases will not be replicated outside it.

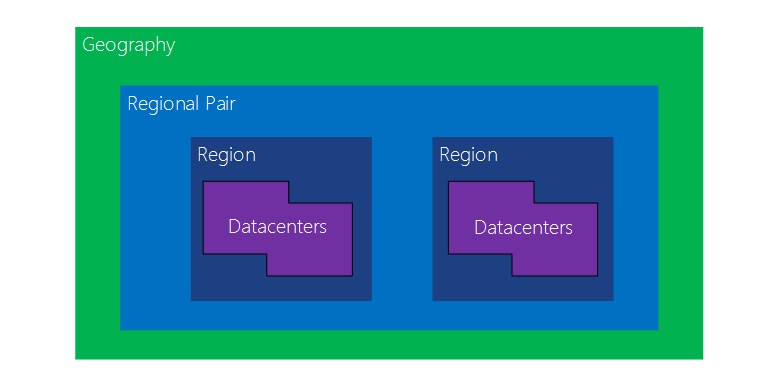

Paired regions

Each Azure region is paired with another region within the same geography, forming a regional pair.

Benefits of Azure paired regions

Physical isolation

Platform-provided replication

Region recovery order.

- If a broad outage occurs, recovery of one region is prioritized out of every pair.

Sequential updates

Data residency - To meet data residency requirements for tax and law enforcement jurisdiction purposes, a region resides within the same geography as its pair

Options for authorizing requests to Azure Storage include:

Azure AD provides superior security and ease of use over other authorization options

can use role-based access control (RBAC)

can grant permissions that are scoped to the level of an individual container or queue.

Azure AD

Azure Active Directory Domain Services (Azure AD DS) authorization for Azure Files

Shared Key - An encrypted signature string that is passed with the request in the Authorization header.

Shared Access Signatures - A shared access signature (SAS) is a URI that grants restricted access rights to Azure Storage resources.

Anonymous access to containers and blobs

With Azure AD, you avoid having to store your account access key with your code, as you do with Shared Key authorization.

Shared access signatures

As a best practice, you shouldn't share storage account keys with external third-party applications.

For untrusted clients, use a shared access signature (SAS).
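The idea behind a SAS, a signed token that carries its own permissions and expiry, can be sketched with an HMAC. The `sp`, `se`, and `sig` names mirror real SAS query parameters, but the string-to-sign layout below is simplified for illustration and is not Azure's actual format; the key is a made-up demo value.

```python
import base64
import hashlib
import hmac
from datetime import datetime, timedelta, timezone

FMT = "%Y-%m-%dT%H:%M:%SZ"


def _sign(account_key_b64: str, string_to_sign: str) -> str:
    """HMAC-SHA256 over the string-to-sign, keyed with the decoded account key."""
    key = base64.b64decode(account_key_b64)
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def make_token(account_key_b64: str, resource: str, permissions: str, expiry: datetime) -> dict:
    """Issue a token: permissions (sp), expiry (se), and signature (sig)."""
    expiry_text = expiry.strftime(FMT)
    # Simplified string-to-sign (assumption): permissions, expiry, resource.
    signature = _sign(account_key_b64, "\n".join([permissions, expiry_text, resource]))
    return {"sp": permissions, "se": expiry_text, "sig": signature}


def verify_token(account_key_b64: str, resource: str, token: dict, now: datetime) -> bool:
    """Recompute the signature and check the expiry, as the service side would."""
    expected = _sign(account_key_b64, "\n".join([token["sp"], token["se"], resource]))
    expiry = datetime.strptime(token["se"], FMT).replace(tzinfo=timezone.utc)
    return hmac.compare_digest(expected, token["sig"]) and now < expiry


demo_key = base64.b64encode(b"demo-key-not-a-real-account-key").decode("ascii")
now = datetime(2030, 1, 1, tzinfo=timezone.utc)
token = make_token(demo_key, "/container/report.pdf", "r", now + timedelta(hours=1))
print(verify_token(demo_key, "/container/report.pdf", token, now))
```

Because the client only ever sees the token, the account key stays with the issuer, and changing the permissions or expiry invalidates the signature.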

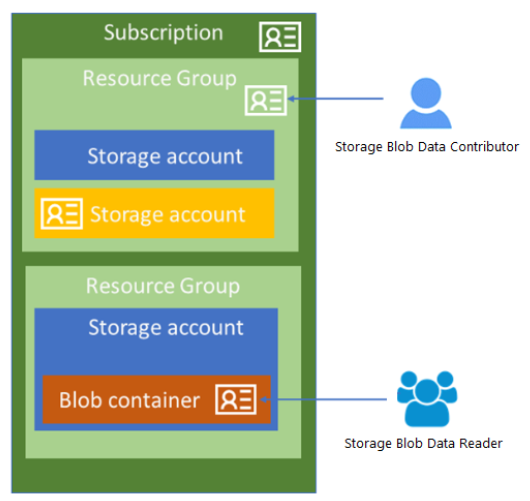

Azure AD storage authentication

You can use Azure role-based access control (Azure RBAC) to grant permissions to a security principal, which may be a user, group, or application service principal. The security principal is authenticated by Azure AD to return an OAuth 2.0 token. The token can then be used to authorize a request against the Blob service.

With Azure AD, access to a resource is a two-step process. First, the security principal's identity is authenticated and an OAuth 2.0 token is returned. Next, the token is passed as part of a request to the Queue service and used by the service to authorize access to the specified resource.

You can assign a role to a user, group, service principal, or managed identity. Each of these is called a security principal.

Service principal - A security identity used by applications or services to access specific Azure resources

Managed identity - An identity in Azure Active Directory that is automatically managed by Azure

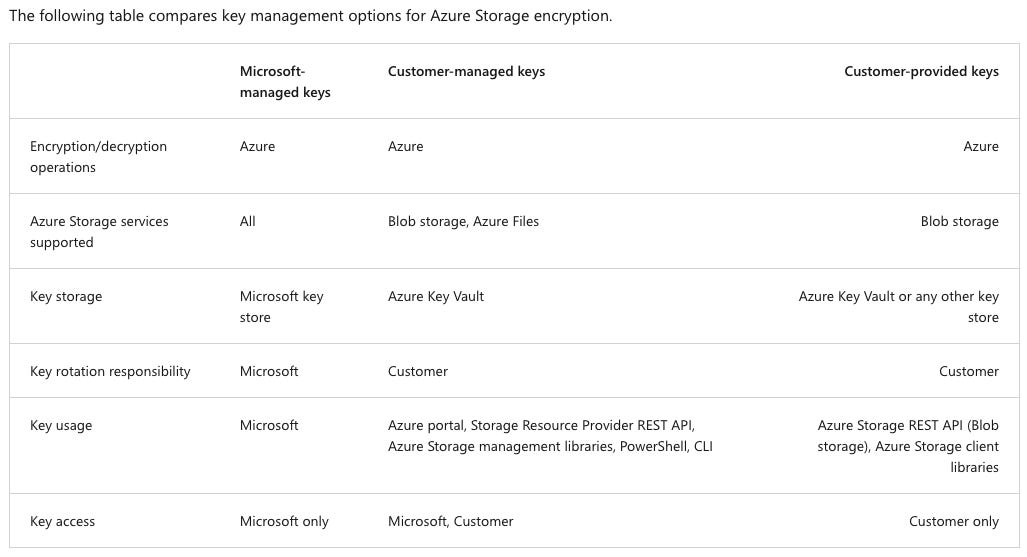

Storage service encryption

All data (including metadata) written to Azure Storage is automatically encrypted using Storage Service Encryption (SSE).

Azure AD integration is supported for blob and queue data operations.

Data can be secured in transit between an application and Azure by using Client-Side Encryption, HTTPS, or SMB 3.0

OS and data disks used by Azure virtual machines can be encrypted using Azure Disk Encryption.

Delegated access to the data objects in Azure Storage can be granted using a shared access signature.

All Azure Storage resources are encrypted, including blobs, disks, files, queues, and tables. All object metadata is also encrypted.

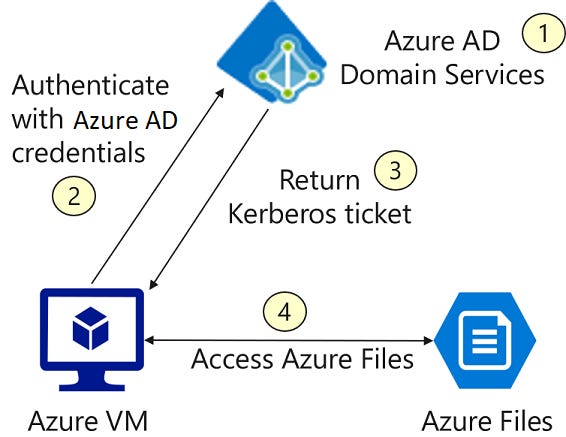

Azure files authentication

Azure Files enforces authorization on user access to both the share and the directory/file levels

Share-level permission assignment can be performed on Azure Active Directory (Azure AD) users or groups managed through the role-based access control (RBAC) model

With RBAC, the credentials you use for file access should be available or synced to Azure AD.

You can assign built-in RBAC roles like Storage File Data SMB Share Reader to users or groups in Azure AD to grant read access to an Azure file share.

At the directory/file level, Azure Files supports preserving, inheriting, and enforcing Windows DACLs just like any Windows file servers.

Identity-based authentication for Azure Files offers several benefits over using Shared Key authentication:

Azure Files supports using both on-premises AD DS or Azure AD DS credentials to access Azure file shares over SMB from either on-premises AD DS or Azure AD DS domain-joined VMs.

Enforce granular access control on Azure file shares.

Back up Windows ACLs (also known as NTFS permissions) along with your data.

Before you can enable authentication on Azure file shares, you must first set up your domain environment.

For Azure AD DS authentication, you should enable Azure AD Domain Services and domain join the VMs you plan to access file data from.

Your domain-joined VM must reside in the same virtual network (VNET) as your Azure AD DS.

Similarly, for on-premises AD DS authentication, you need to set up your domain controller and domain join your machines or VMs.

- Azure file shares support Kerberos authentication for integration with either Azure AD DS or on-premises AD DS.

Azure Files supports preserving directory or file level ACLs when copying data to Azure file shares.

You can copy ACLs on a directory or file to Azure file shares using either Azure File Sync or common file movement toolsets. For example, you can use robocopy

Because Azure Storage doesn't support HTTPS for custom domain names, the "Secure transfer required" option is not applied when you're using a custom domain name.

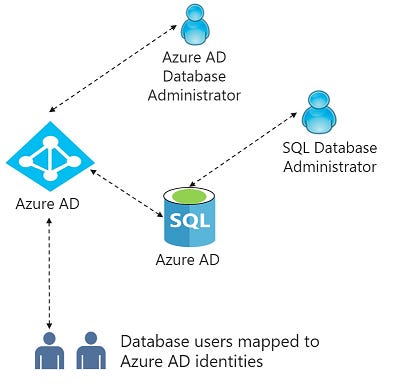

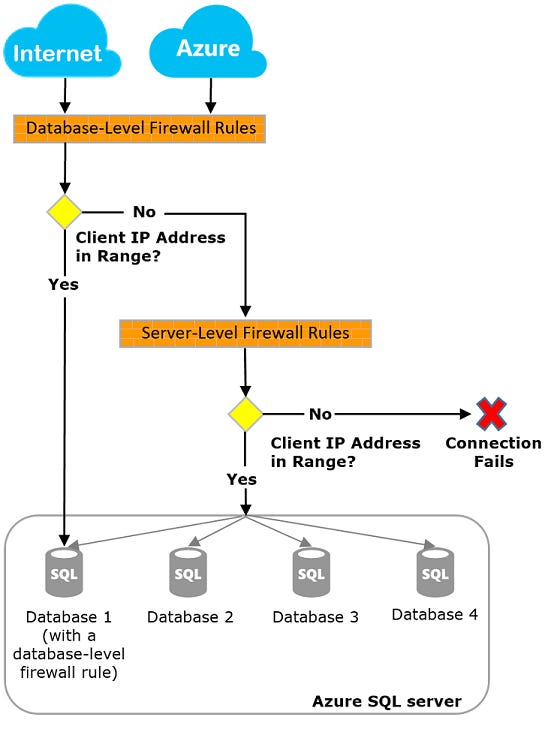

SQL database authentication

Use Azure Active Directory authentication to centrally manage identities of database users and as an alternative to SQL Server authentication.

Initially, all access to your Azure SQL Database is blocked by the SQL Database firewall. To access a database server, you must specify one or more server-level IP firewall rules that enable access to your Azure SQL Database.
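The default-deny behavior of the firewall can be sketched as a range check: a connection is allowed only if the client IP falls inside at least one rule. The rule names and IP ranges below are invented (documentation address space), and `is_allowed` is a hypothetical helper rather than an Azure API.

```python
from ipaddress import ip_address
from typing import List, Tuple

# Each rule is (name, start IP, end IP), mirroring the start/end shape of a firewall rule.
Rule = Tuple[str, str, str]


def is_allowed(client_ip: str, rules: List[Rule]) -> bool:
    """Return True only if the client IP falls inside some rule's range."""
    ip = ip_address(client_ip)
    return any(ip_address(start) <= ip <= ip_address(end) for _, start, end in rules)


no_rules: List[Rule] = []
office_rules: List[Rule] = [("office", "203.0.113.10", "203.0.113.20")]

print(is_allowed("203.0.113.15", no_rules))      # no rules: everything is blocked
print(is_allowed("203.0.113.15", office_rules))  # inside the office range
```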

database auditing

You can use SQL database auditing to:

Retain an audit trail of selected events. You can define categories of database actions to be audited.

Report on database activity. You can use pre-configured reports and a dashboard to get started quickly with activity and event reporting.

Analyze reports. You can find suspicious events, unusual activity, and trends.

Key Vault

You can use Key Vault to create multiple secure containers, called vaults.

Vaults help reduce the chances of accidental loss of security information by centralizing application secrets storage.

Key vaults also control and log the access to anything stored in them.

Azure Key Vault helps address the following issues:

Secrets management.

Key management

Certificate management

Store secrets backed by hardware security modules (HSMs)

Azure Key Vault is designed to support application keys and secrets. Key Vault is not intended as storage for user passwords.

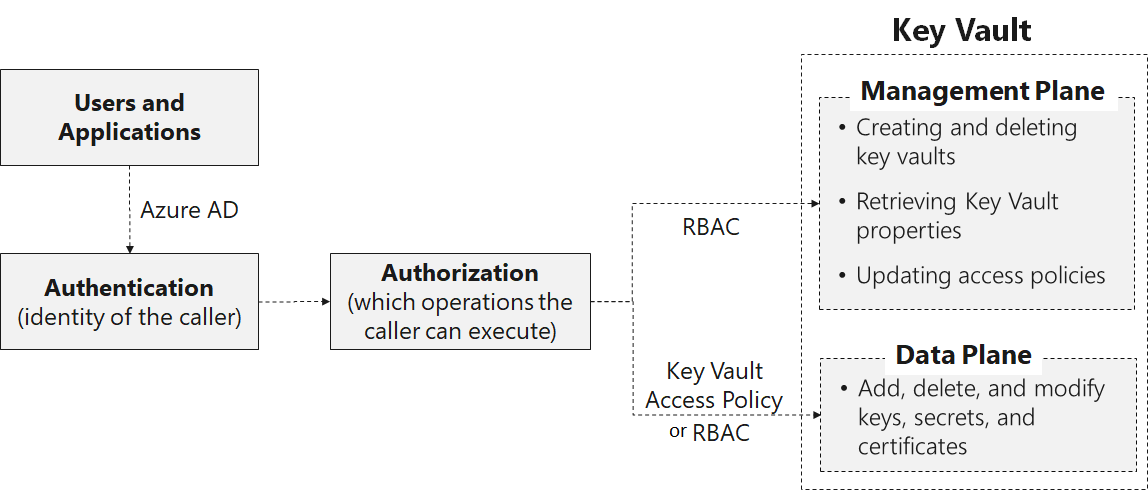

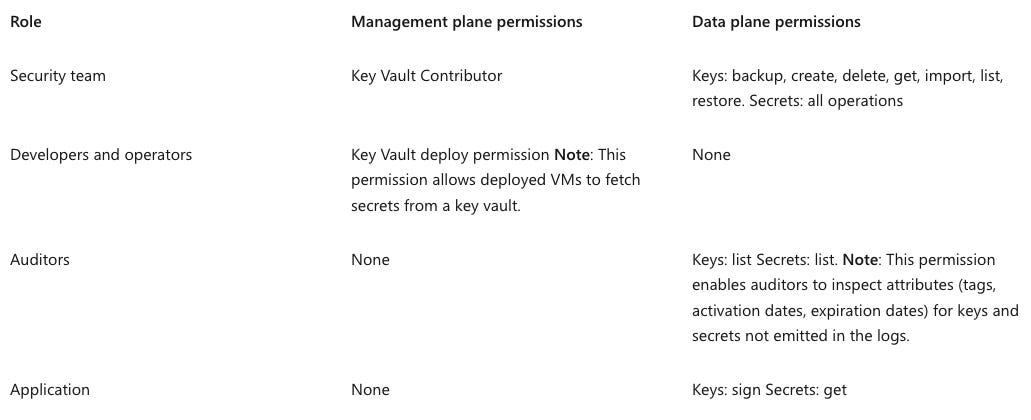

Key Vault access

Access to a key vault is controlled through two interfaces: the management plane, and the data plane:

The management plane is where you manage Key Vault itself. Operations in this plane include creating and deleting key vaults, retrieving Key Vault properties, and updating access policies.

The data plane is where you work with the data stored in a key vault. You can add, delete, and modify keys, secrets, and certificates from here.

Authentication establishes the identity of the caller. Authorization determines which operations the caller can execute.

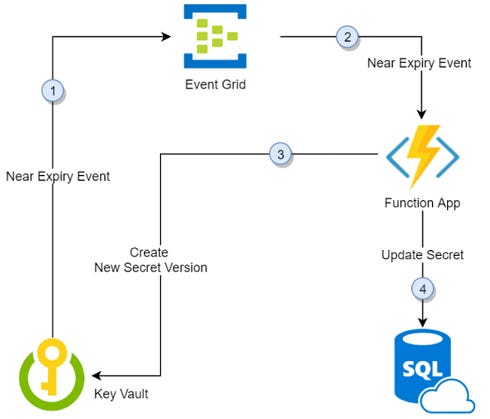

key rotation

Thirty days before the expiration date of a secret, Key Vault publishes the "near expiry" event to Event Grid.

Event Grid checks the event subscriptions and uses HTTP POST to call the function app endpoint subscribed to the event.

The function app receives the secret information, generates a new random password, and creates a new version for the secret with the new password in Key Vault.

The function app updates SQL Server with the new password.
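The rotation flow above can be sketched as a single handler. Key Vault and SQL Server are replaced by in-memory dictionaries so the logic stays self-contained; the event field names (`eventType`, `data.ObjectName`) follow my understanding of the Event Grid schema for Key Vault and should be treated as assumptions, as should every function and secret name.

```python
import secrets
import string

ALPHABET = string.ascii_letters + string.digits


def generate_password(length: int = 32) -> str:
    """Generate a random password with a cryptographically secure source."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def handle_near_expiry(event: dict, vault: dict, database: dict):
    """React to a 'near expiry' event: new secret version, then update the database."""
    if event.get("eventType") != "Microsoft.KeyVault.SecretNearExpiry":
        return None  # ignore unrelated events
    secret_name = event["data"]["ObjectName"]
    new_password = generate_password()
    vault.setdefault(secret_name, []).append(new_password)  # stand-in for a new secret version
    database["password"] = new_password                     # stand-in for the SQL Server update
    return secret_name


# In-memory stand-ins for Key Vault and SQL Server (illustration only).
vault = {"sql-admin-password": ["old-password"]}
database = {"password": "old-password"}
event = {"eventType": "Microsoft.KeyVault.SecretNearExpiry",
         "data": {"ObjectName": "sql-admin-password"}}

rotated = handle_near_expiry(event, vault, database)
print(rotated, len(vault["sql-admin-password"]))
```

Writing the new version to the vault before updating the database means the vault always holds a record of the password the database is about to use.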

Shift Left is a concept in software development and IT operations that emphasizes moving tasks, processes, and testing earlier in the software development lifecycle (SDLC). The goal is to identify and resolve issues as early as possible, reducing the cost and effort of fixing problems later in the development process.

The term "shift left" comes from the idea of moving tasks leftward on a timeline of the SDLC, which is often visualized as a linear process from left (planning) to right (deployment and operations).

Key Principles of Shift Left

DevOps Security, Controls: DS-6

Early Testing:

Perform testing (e.g., unit tests, integration tests, security tests) as early as possible in the development process.

This helps catch bugs and vulnerabilities before they reach production.

Continuous Feedback:

- Provide developers with immediate feedback on their code changes through automated testing and code reviews.

Automation:

- Automate repetitive tasks like testing, code analysis, and deployment to ensure consistency and efficiency.

Collaboration:

- Encourage collaboration between development, operations, and security teams to address issues early.

Proactive Problem-Solving:

- Identify and resolve potential issues before they become critical.

Why Shift Left?

Cost Efficiency:

- Fixing issues early in the development process is significantly cheaper than fixing them in production.

Faster Delivery:

- By catching issues early, you reduce the time spent on rework and debugging, enabling faster delivery of features.

Improved Quality:

- Early testing and feedback lead to higher-quality software with fewer defects.

Enhanced Security:

- Integrating security checks early (e.g., static code analysis, vulnerability scanning) helps prevent security vulnerabilities from reaching production.

Better Collaboration:

- Shifting left encourages collaboration between teams, breaking down silos and improving communication.

How to Implement Shift Left

Adopt Agile and DevOps Practices:

Use Agile methodologies to break work into smaller, manageable chunks.

Implement DevOps practices to automate and streamline the development pipeline.

Automate Testing:

Use tools like JUnit, Selenium, or Cypress to automate unit, integration, and end-to-end tests.

Integrate these tests into your CI/CD pipeline.

Integrate Security Early:

- Use tools like SonarQube, OWASP ZAP, or Snyk to perform static code analysis and vulnerability scanning during development.

Continuous Integration (CI):

- Set up a CI pipeline to automatically build and test code changes as they are committed.

Continuous Delivery (CD):

- Automate the deployment process to ensure that code changes can be deployed to production quickly and safely.

Code Reviews:

- Conduct peer code reviews to catch issues early and share knowledge across the team.

Monitoring and Feedback:

- Use monitoring tools to provide real-time feedback on application performance and errors.

Examples of Shift Left in Practice

Unit Testing:

- Developers write and run unit tests as they write code, ensuring that individual components work as expected.

Static Code Analysis:

- Tools like SonarQube analyze code for quality and security issues during development.

Security Testing:

- Security teams integrate vulnerability scanning into the CI/CD pipeline to identify and fix security issues early.

Infrastructure as Code (IaC):

- Use tools like Terraform or Ansible to define and test infrastructure configurations before deployment.

Shift Left in CI/CD Pipelines:

- Automate testing, linting, and security checks in the CI/CD pipeline to catch issues before code is merged or deployed.
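As a small illustration of shifting a security check left, the sketch below pairs an input validator with unit tests that a CI step could run on every commit. The validator, its rules, and the `run_checks` helper are invented for the example.

```python
import unittest


def is_valid_username(name: str) -> bool:
    """Accept only 3 to 20 alphanumeric characters (hypothetical rule)."""
    return name.isalnum() and 3 <= len(name) <= 20


class UsernameTests(unittest.TestCase):
    def test_accepts_simple_name(self):
        self.assertTrue(is_valid_username("alice42"))

    def test_rejects_injection_attempt(self):
        # A security-relevant case is tested next to the functional ones.
        self.assertFalse(is_valid_username("alice'; DROP TABLE users;--"))

    def test_rejects_too_short(self):
        self.assertFalse(is_valid_username("ab"))


def run_checks() -> bool:
    """Run the suite and report success, as a pipeline gate would."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(UsernameTests)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()


print("checks passed:", run_checks())
```

A pipeline would typically fail the build when `run_checks()` returns False, so the defect is caught before the change is merged.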

Benefits of Shifting Left

Reduced Risk:

- Early detection of issues reduces the risk of failures in production.

Faster Time-to-Market:

- By resolving issues early, you can deliver features and updates more quickly.

Improved Collaboration:

- Teams work together more effectively, leading to better outcomes.

Cost Savings:

- Fixing issues early is cheaper than fixing them in production.

Higher Customer Satisfaction:

- Delivering high-quality, secure software leads to happier customers.

Challenges of Shifting Left

Cultural Resistance:

- Teams may resist changing their workflows or adopting new tools.

Tooling and Automation:

- Setting up and maintaining automated testing and security tools can be complex.

Skill Gaps:

- Developers may need training to write effective tests or use security tools.

Initial Investment:

- Shifting left requires an upfront investment in tools, training, and process changes.

Shift Left vs. Shift Right

Shift Left: Focuses on early testing and problem-solving in the development phase.

Shift Right: Focuses on monitoring and testing in production to gather real-world feedback and improve the application.

Both approaches are complementary and can be used together to ensure high-quality software.

MCRA Notes (Microsoft Cybersecurity Reference Architectures)

Key Takeaway: Increasing security resilience requires looking at the full security lifecycle

Security is simple in concept and is similar to other risk disciplines that focus on avoiding, limiting, or managing a negative event well (financial, natural disaster, cybersecurity attacks, etc.).

• ‘Left of bang’ - Before an attack, security must focus proactively on preventing or lessening the impact of an attack with people, process, and technology investments.

• ‘Right of bang’ - Security must also be prepared to handle the attacks that happen, rapidly and effectively removing attacker access from business assets.

Key Takeaway: Increasing security resilience requires an end to end security approach

Effective security requires an end to end security strategy and architecture (that follow Zero Trust principles) to connect and guide security people, process, and technology.

Common Vulnerability Scoring System (CVSS)

The Common Vulnerability Scoring System (CVSS) is a standardized framework for rating the severity of security vulnerabilities. It provides a numerical score (ranging from 0.0 to 10.0) that reflects the severity of a vulnerability, helping organizations prioritize remediation efforts.

In the context of Microsoft Azure, CVSS scores are often used to assess the severity of vulnerabilities in Azure services, virtual machines, or other resources. Azure Security Center and other Azure tools may use CVSS scores to highlight critical vulnerabilities and recommend mitigation steps.

Key Components of CVSS

CVSS scores are calculated based on three metric groups:

Base Metrics:

Reflect the intrinsic characteristics of a vulnerability that are constant over time and across user environments.

Includes:

Attack Vector (AV): How the vulnerability is exploited (e.g., network, adjacent, local, physical).

Attack Complexity (AC): The difficulty of exploiting the vulnerability.

Privileges Required (PR): The level of privileges an attacker needs to exploit the vulnerability.

User Interaction (UI): Whether user interaction is required to exploit the vulnerability.

Scope (S): Whether the vulnerability affects resources beyond the vulnerable component.

Confidentiality (C): The impact on data confidentiality.

Integrity (I): The impact on data integrity.

Availability (A): The impact on system availability.

Temporal Metrics:

Reflect characteristics of a vulnerability that change over time.

Includes:

Exploit Code Maturity (E): The likelihood of the vulnerability being exploited.

Remediation Level (RL): The availability of a fix or workaround.

Report Confidence (RC): The confidence in the existence of the vulnerability.

Environmental Metrics:

Reflect the impact of a vulnerability based on the specific environment.

Includes:

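Collateral Damage Potential (CDP): The potential for collateral damage (CVSS v2 only).

Target Distribution (TD): The proportion of vulnerable systems in the environment (CVSS v2 only).

Security Requirements (CR, IR, AR): The importance of confidentiality, integrity, and availability in the environment.

Modified Base Metrics (CVSS v3.x): Environment-specific overrides of the base metrics; in v3.x they replace CDP and TD.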
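The base metrics are usually exchanged as a compact vector string. The sketch below parses a CVSS v3.x vector into its metric abbreviations; the function name `parse_vector` and the sample vector are chosen for illustration, and the parser checks only for the presence of base metrics, not for valid values.

```python
BASE_METRICS = {
    "AV": "Attack Vector",
    "AC": "Attack Complexity",
    "PR": "Privileges Required",
    "UI": "User Interaction",
    "S": "Scope",
    "C": "Confidentiality",
    "I": "Integrity",
    "A": "Availability",
}


def parse_vector(vector: str) -> dict:
    """Split a CVSS v3.x vector string into {metric abbreviation: value}."""
    prefix, *parts = vector.split("/")
    if not prefix.startswith("CVSS:3"):
        raise ValueError("only CVSS v3.x vectors are handled in this sketch")
    metrics = dict(part.split(":", 1) for part in parts)
    missing = [name for name in BASE_METRICS if name not in metrics]
    if missing:
        raise ValueError(f"missing base metrics: {missing}")
    return metrics


sample = parse_vector("CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H")
for abbreviation, value in sample.items():
    # Temporal or environmental metrics, if present, fall back to their abbreviation.
    print(f"{BASE_METRICS.get(abbreviation, abbreviation)}: {value}")
```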

CVSS Score Ranges

0.0: No severity.

0.1–3.9: Low severity.

4.0–6.9: Medium severity.

7.0–8.9: High severity.

9.0–10.0: Critical severity.
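The ranges above translate directly into a lookup function. This is a minimal sketch; the function name `severity` is an arbitrary choice, and scores are rounded to one decimal place because that is the precision the ranges use.

```python
def severity(score: float) -> str:
    """Map a CVSS score (0.0 to 10.0) to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS scores range from 0.0 to 10.0")
    score = round(score, 1)  # the ranges are defined to one decimal place
    if score == 0.0:
        return "None"
    if score <= 3.9:
        return "Low"
    if score <= 6.9:
        return "Medium"
    if score <= 8.9:
        return "High"
    return "Critical"


for example in (0.0, 3.9, 5.0, 7.5, 9.8):
    print(example, severity(example))
```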

How Azure Uses CVSS

Azure Security Center (rebranded and expanded into Microsoft Defender for Cloud)

Azure Security Center uses CVSS scores to prioritize vulnerabilities in your Azure resources.

It provides recommendations for mitigating vulnerabilities based on their severity.

Azure Defender:

- Azure Defender integrates with CVSS to identify and alert on critical vulnerabilities in virtual machines, containers, and other resources.

Compliance design areas

Security, governance, and compliance are key topics when designing and building an Azure environment. These topics help you start from strong foundations and ensure that solid ongoing processes and controls are in place.

The tools and processes you implement for managing environments play an important role in detecting and responding to issues. These tools work alongside the controls that help maintain and demonstrate compliance.

As the organization's cloud environment develops, these compliance design areas are the focus for iterative refinement. This refinement might be because of new applications that introduce specific new requirements, or the business requirements changing. For example, in response to a new compliance standard.

| Design area | Objective |
| --- | --- |
| Security | Implement controls and processes to protect your cloud environments. |
| Management | For stable, ongoing operations in the cloud, a management baseline is required to provide visibility, operations compliance, and protect and recover capabilities. |
| Governance | Automate auditing and enforcement of governance policies. |
| Platform automation and DevOps | Align the best tools and templates to deploy your landing zones and supporting resources. |

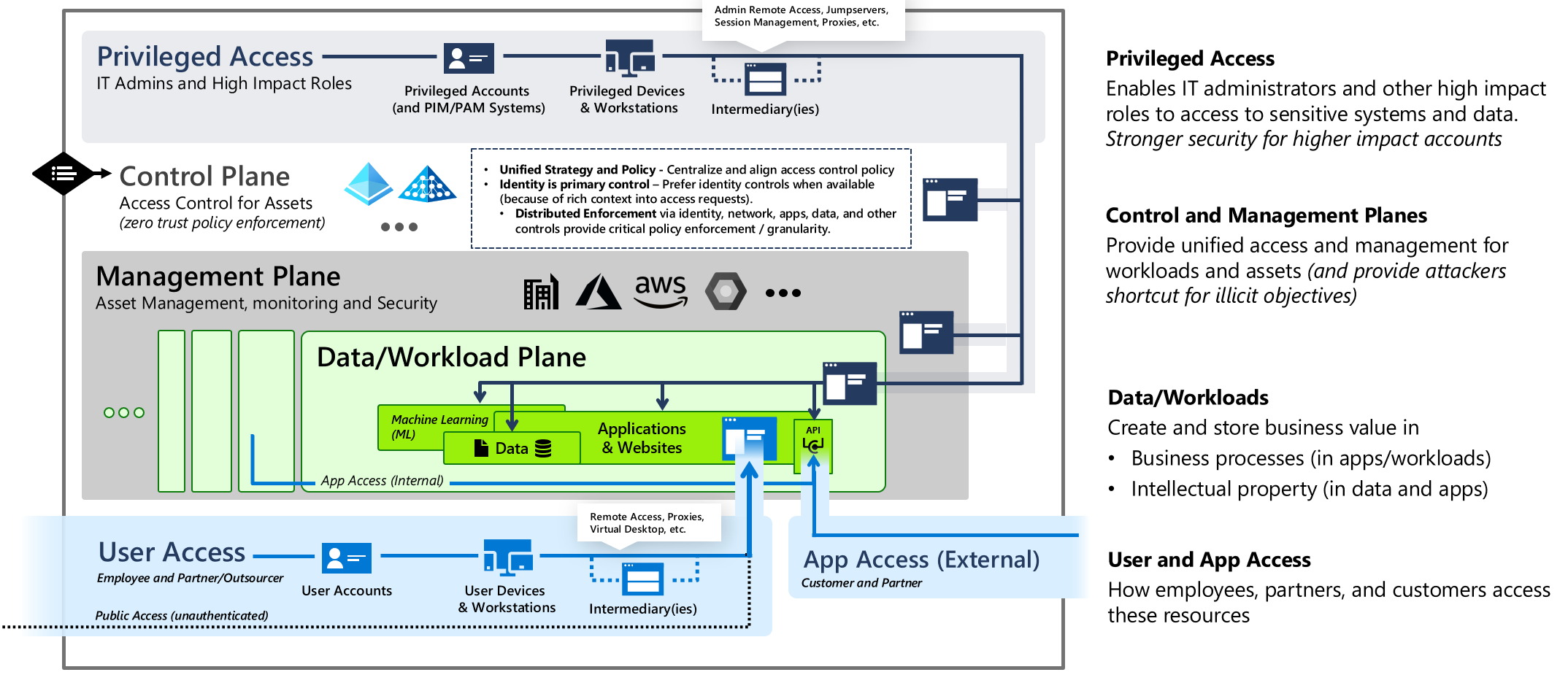

The enterprise access model

The primary stores of business value that an organization must protect are in the Data/Workload plane.

The management plane and the control plane each have control of the data and workloads by virtue of their functions, creating an attractive pathway for attackers to abuse if they can gain control of either plane.

These systems must be managed and maintained by IT staff, developers, or others in the organizations, creating privileged access pathways

Providing consistent access control in the organization that enables productivity and mitigates risk requires you to:

Enforce Zero Trust principles on all access

Pervasive security and policy enforcement across

Mitigate unauthorized privilege escalation

The enterprise access model incorporates full access management requirements of a modern enterprise that spans on-premises, multiple clouds, internal or external user access, and more.

Threat Modeling and the STRIDE Model

Threat Modeling is a structured approach used to identify, prioritize, and mitigate potential security threats in an application or system.

The STRIDE model is a framework used in threat modeling to categorize threats into six categories:

Spoofing: Impersonating a user or system.

Tampering: Unauthorized modification of data.

Repudiation: Denial of actions without proof.

Information Disclosure: Unauthorized access to sensitive data.

Denial of Service: Disrupting system availability.

Elevation of Privilege: Gaining unauthorized access to higher privileges.
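One way to use the six categories is to pair each with the security property it violates and then ask which categories apply to each element of a data flow diagram. The sketch below follows the commonly cited STRIDE-per-element chart; treat the exact mapping as an assumption to verify against your own threat modeling guidance.

```python
from enum import Enum


class Stride(Enum):
    """Each STRIDE category paired with the security property it violates."""
    SPOOFING = "Authentication"
    TAMPERING = "Integrity"
    REPUDIATION = "Non-repudiation"
    INFORMATION_DISCLOSURE = "Confidentiality"
    DENIAL_OF_SERVICE = "Availability"
    ELEVATION_OF_PRIVILEGE = "Authorization"


# STRIDE-per-element mapping (assumption based on the commonly cited chart).
THREATS_BY_ELEMENT = {
    "external entity": [Stride.SPOOFING, Stride.REPUDIATION],
    "process": list(Stride),
    "data store": [Stride.TAMPERING, Stride.REPUDIATION,
                   Stride.INFORMATION_DISCLOSURE, Stride.DENIAL_OF_SERVICE],
    "data flow": [Stride.TAMPERING, Stride.INFORMATION_DISCLOSURE,
                  Stride.DENIAL_OF_SERVICE],
}


def threats_for(element_type: str) -> list:
    """Return the STRIDE categories to review for a diagram element type."""
    return THREATS_BY_ELEMENT[element_type.lower()]


for threat in threats_for("data flow"):
    print(f"{threat.name}: protects {threat.value}")
```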

How Threat Modeling Fits into DevSecOps

Integration in CI/CD Pipeline:

Threat modeling is performed during the design and development phases of the application lifecycle.

It ensures that security considerations are embedded early in the development process (shift-left security).

Automation:

- Use tools like Microsoft Threat Modeling Tool to automate and integrate threat modeling into the CI/CD pipeline.

Continuous Improvement:

- Regularly update threat models as the application evolves or new threats emerge.

Steps to Implement Threat Modeling in DevSecOps

Identify Assets:

- Determine the critical components of your application (e.g., databases, APIs, user interfaces).

Create Data Flow Diagrams (DFDs):

- Visualize how data moves through the application and identify trust boundaries.

Apply the STRIDE Model:

- Analyze the DFDs to identify potential threats in each STRIDE category.

Mitigate Threats:

- Implement security controls to address identified threats (e.g., encryption for information disclosure, input validation for tampering).

Validate and Monitor:

Use automated security testing tools (e.g., SAST, DAST) to validate mitigations.

Continuously monitor for new threats and update the threat model.

Tools for Threat Modeling in Azure

Microsoft Threat Modeling Tool:

- A free tool that helps developers create and analyze threat models using the STRIDE framework.

Azure Security Center:

- Provides recommendations and insights to address security issues identified during threat modeling.

Azure DevOps:

- Integrate threat modeling into your CI/CD pipelines using tasks and extensions.

Why Threat Modeling is Critical in DevSecOps

Proactive Security:

- Identifies and mitigates security risks before they are exploited.

Compliance:

- Helps meet regulatory requirements by demonstrating a structured approach to security.

Cost-Effective:

- Fixing security issues early in the development process is less expensive than addressing them post-deployment.

Defender

As of now, there are 14 Microsoft Defender products, each tailored to secure specific environments, platforms, or workloads. These products can be used individually or integrated into Microsoft Defender XDR for a unified security solution.

Microsoft offers a variety of Defender products, each designed to protect different aspects of your digital environment. Here are some of the main ones:

Microsoft Defender for Endpoint: Protects devices and endpoints.

Microsoft Defender for Office 365: Protects email, prevents phishing attacks, and secures collaboration tools.

Microsoft Defender for Identity: Monitors user behavior and protects against identity-based threats.

Microsoft Defender for Cloud Apps: Secures cloud applications.

Microsoft Defender Vulnerability Management: Manages known vulnerabilities and tracks security posture.

Microsoft Defender for IoT: Protects IoT and OT environments.

Microsoft Defender for Servers: Protects servers.

Microsoft Defender for SQL: Secures SQL databases.

Microsoft Defender for Containers: Protects containerized environments.

Microsoft Defender for App Service: Secures app services.

Microsoft Defender for Key Vault: Protects key vaults.

Microsoft Defender for DNS: Secures DNS infrastructure.

Microsoft Defender for Resource Manager: Protects resource management.

Microsoft Defender for open-source relational databases: Secures open-source databases.

There are also other specialized Defender products like Microsoft Defender Threat Intelligence and Microsoft Defender External Attack Surface Management (EASM).

Fuzz Testing

Fuzz testing is a software testing technique that feeds invalid, unexpected, or random data to a program and then checks for failures such as crashes, unhandled exceptions, and memory leaks.

Fuzz testing can be done in a variety of ways, including:

File fuzzing: providing random or malformed data as inputs to a file-parsing function to identify issues such as buffer overflows or other memory-corruption issues.

Network fuzzing: sending malformed or unexpected data as inputs to a network protocol implementation to identify issues such as denial-of-service (DoS) conditions or other security vulnerabilities.

API fuzzing: sending random or unexpected data as inputs to an application programming interface (API) to identify issues such as input validation issues or other security vulnerabilities.
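A minimal sketch of the idea, assuming a toy target: `parse_record` is a hypothetical length-prefixed parser with a deliberate bounds bug, and `fuzz` is a naive random-input loop. Real fuzzers are typically coverage-guided and far more effective; this only shows the basic feedback loop of "generate input, run target, record unexpected failures".

```python
import random


def parse_record(data: bytes) -> bytes:
    """Toy parser (hypothetical): [length byte][payload][checksum byte]."""
    if not data:
        raise ValueError("empty input")  # clean, expected rejection
    length = data[0]
    payload = data[1:1 + length]
    # Deliberate bug: the length byte is trusted, so truncated input
    # makes this index go past the end of the buffer.
    checksum = data[1 + length]
    if checksum != sum(payload) % 256:
        raise ValueError("bad checksum")
    return payload


def fuzz(target, iterations=500, max_len=16, seed=0):
    """Feed random byte strings to `target` and collect unexpected exceptions."""
    rng = random.Random(seed)  # fixed seed so any finding can be reproduced
    crashes = []
    for _ in range(iterations):
        size = rng.randint(0, max_len)
        data = bytes(rng.randrange(256) for _ in range(size))
        try:
            target(data)
        except ValueError:
            pass  # the parser rejected the input cleanly
        except Exception as exc:  # anything else counts as a finding
            crashes.append((data, type(exc).__name__))
    return crashes


crashes = fuzz(parse_record)
```

Every entry in `crashes` keeps the offending input, so a finding can be replayed and turned into a regression test once the bounds check is fixed.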

What Are Dangling DNS Entries?

Dangling DNS entries occur when a DNS record points to a resource that no longer exists, such as a decommissioned server, a deleted cloud instance, or an expired domain. This can lead to security vulnerabilities, misconfigurations, or service disruptions. Here's an overview of the issue and how to address it:

A DNS record (e.g., A, CNAME, or ALIAS) points to an IP address or domain that is no longer valid.

Common scenarios:

A cloud instance is terminated, but the DNS record still points to its IP.

A domain expires, but subdomains or aliases still reference it.

A service is decommissioned, but its DNS records are not cleaned up.

Risks of Dangling DNS Entries

Security Vulnerabilities:

Attackers can claim the released IP address or resource name, or register the expired domain, and then serve their own content under your DNS name (a subdomain takeover).

This can lead to phishing, malware distribution, or impersonation attacks.

Service Disruptions:

- Users may be unable to access the intended service.

Reputation Damage:

- If attackers exploit the dangling entry, it can harm your organization's reputation.

How to Identify Dangling DNS Entries

Audit DNS Records:

- Regularly review all DNS records to ensure they point to valid resources.

Monitor for Changes:

- Use tools to detect when resources (e.g., cloud instances) are deleted or modified.

Check for Unused Records:

- Identify records that are no longer in use or point to invalid destinations.
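One way to sketch the audit step: walk the CNAME records exported from a zone and flag those whose target no longer resolves. The record names and the `find_dangling_cnames` helper are hypothetical. The default `resolves` check uses the system resolver; the example passes an in-memory stand-in so it runs without network access.

```python
import socket


def resolves(hostname: str) -> bool:
    """Default check: does the name resolve at all (system resolver)?"""
    try:
        socket.getaddrinfo(hostname, None)
        return True
    except socket.gaierror:
        return False


def find_dangling_cnames(records, resolver=resolves):
    """Return the (name, target) CNAME pairs whose target does not resolve.

    `records` is an iterable of (name, target) pairs exported from a DNS zone.
    """
    return [(name, target) for name, target in records if not resolver(target)]


# Hypothetical zone export and a stand-in resolver for the example.
zone_cnames = [
    ("shop.contoso.com", "contoso-shop.azurewebsites.net"),
    ("promo.contoso.com", "contoso-promo.azurewebsites.net"),
]
still_exists = {"contoso-shop.azurewebsites.net"}
dangling = find_dangling_cnames(zone_cnames, resolver=lambda host: host in still_exists)
```

A target that fails to resolve is a strong signal, but the reverse is not proof of safety: a target that resolves may already have been claimed by someone else, so results should be cross-checked against the resources you actually own.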

How to Prevent Dangling DNS Entries

Automate DNS Cleanup:

- Integrate DNS management with your infrastructure (e.g., cloud platforms) to automatically remove records when resources are deleted.

Use Alias Records:

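- In cloud environments, use alias records (e.g., Azure DNS alias record sets or AWS Route 53 alias records) that follow the target resource instead of a hard-coded IP address. In Azure DNS, an alias record set becomes empty when the referenced resource is deleted, which avoids a dangling reference.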

Regular Audits:

- Schedule periodic reviews of your DNS configuration.

Monitor Expiry:

- Track domain and TLS certificate expirations so that neither lapses unintentionally.

Implement Security Policies:

- Enforce policies to ensure DNS records are updated or removed when resources are decommissioned.
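The "Automate DNS Cleanup" idea above can be sketched as a comparison between the DNS zone and an inventory of resources that still exist. Both inputs and the `plan_dns_cleanup` helper are hypothetical; in practice the inventory would come from your cloud platform and the removal would go through your DNS provider's API after review.

```python
def normalize(hostname: str) -> str:
    """Compare hostnames case-insensitively and ignore a trailing dot."""
    return hostname.lower().rstrip(".")


def plan_dns_cleanup(dns_records, live_resources):
    """Return the record names whose target is missing from the inventory.

    dns_records: dict mapping record name -> target hostname
    live_resources: iterable of hostnames for resources that still exist
    """
    live = {normalize(host) for host in live_resources}
    return sorted(
        name for name, target in dns_records.items()
        if normalize(target) not in live
    )


# Hypothetical data for illustration.
records = {
    "api.contoso.com": "Contoso-API.azurewebsites.net.",
    "old.contoso.com": "contoso-legacy.azurewebsites.net",
}
inventory = ["contoso-api.azurewebsites.net"]
to_remove = plan_dns_cleanup(records, inventory)
```

Producing a plan rather than deleting records directly leaves room for a human or policy check before anything is removed.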

Tools to Help Manage DNS Entries

DNS Management Platforms:

- Azure DNS, AWS Route 53, Cloudflare, Google Cloud DNS, etc.

Monitoring Tools:

- Tools like Nagios, Datadog, or custom scripts can alert you to changes in DNS or infrastructure.

Security Scanners:

- Use tools like DetectDangling or open-source scripts to scan for dangling DNS records.

Steps to Fix Dangling DNS Entries

Identify and remove or update records pointing to invalid resources.

Verify that all active DNS records point to valid and secure endpoints.

Implement automated processes to prevent future occurrences.

By proactively managing DNS records and integrating DNS management with your infrastructure, you can reduce the risk of dangling DNS entries and their associated vulnerabilities.

The Microsoft Cloud Security Benchmark (MCSB)

The MCSB is a comprehensive set of security recommendations and best practices designed to help organizations secure their cloud environments, particularly those using Microsoft Azure. It maps its controls to industry frameworks such as CIS Controls, NIST SP 800-53, and PCI DSS, and provides actionable guidance for improving cloud security posture. Below are the key items and focus areas of the MCSB:

1. Identity and Access Management (IAM)

Key Focus:

Implement strong authentication (e.g., multi-factor authentication (MFA)).

Use role-based access control (RBAC) to enforce least privilege.

Monitor and manage privileged accounts.

Key Recommendations:

Enable Azure AD Conditional Access policies.

Regularly review and revoke unused permissions.

Use managed identities for Azure resources.

2. Data Protection

Key Focus:

Encrypt data at rest and in transit.

Classify and label sensitive data.

Implement data loss prevention (DLP) strategies.

Key Recommendations:

Use Azure Key Vault for managing encryption keys and secrets.

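Rely on Azure Storage Service Encryption, which is enabled by default, and consider customer-managed keys where you need control over the keys.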

Implement Azure Information Protection (now part of Microsoft Purview Information Protection) for data classification.

3. Network Security

Key Focus:

Secure network boundaries and segmentation.

Monitor and control network traffic.

Protect against DDoS attacks.

Key Recommendations:

Use Azure Firewall or Network Security Groups (NSGs) to restrict traffic.

Enable Azure DDoS Protection.

Implement Azure Virtual Network (VNet) peering and private links.
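To make the NSG recommendation concrete, here is a simplified sketch of how NSG-style rules are evaluated: rules are processed in priority order (lowest number first) and the first match decides. The `Rule` class and `evaluate` function are hypothetical and match only on source range and destination port; real NSG rules also consider protocol, direction, destination, and service tags.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class Rule:
    priority: int          # lower number = evaluated first
    source: str            # source range in CIDR notation
    port: Optional[int]    # destination port, or None for any port
    action: str            # "Allow" or "Deny"


def evaluate(rules, source_ip: str, port: int) -> str:
    """Return the action of the first matching rule, in priority order."""
    for rule in sorted(rules, key=lambda r: r.priority):
        source_matches = ip_address(source_ip) in ip_network(rule.source)
        port_matches = rule.port is None or rule.port == port
        if source_matches and port_matches:
            return rule.action
    return "Deny"  # mirrors the default deny for unmatched inbound traffic


# Hypothetical inbound rules, deliberately listed out of order.
inbound = [
    Rule(200, "0.0.0.0/0", 443, "Deny"),
    Rule(100, "10.0.0.0/24", 443, "Allow"),
]
```

With these rules, `evaluate(inbound, "10.0.0.5", 443)` is allowed by the priority-100 rule before the broader priority-200 deny is ever considered, which is why priority numbering matters when rules overlap.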

4. Threat Protection

Key Focus:

Detect, investigate, and respond (DIR) to threats.

Use advanced threat detection tools.

Automate threat response.

Key Recommendations:

Enable Microsoft Defender for Cloud (formerly Azure Security Center).

Use Microsoft Sentinel for SIEM and SOAR capabilities.

Configure automated responses to security incidents.

5. Security Operations

Key Focus:

Establish a security operations center (SOC).

Monitor and log all activities.

Conduct regular security assessments.

Key Recommendations:

Use Azure Monitor and Log Analytics for centralized logging.

Perform regular vulnerability assessments and penetration testing.

Implement a continuous monitoring strategy.

6. Application Security

Key Focus:

Secure application development and deployment.

Protect against common vulnerabilities (e.g., OWASP Top 10).

Use secure coding practices.

Key Recommendations:

Integrate security into DevOps (DevSecOps).

Use Azure Web Application Firewall (WAF).

Regularly scan for vulnerabilities in application code.

7. Endpoint Security

Key Focus:

Secure endpoints (e.g., virtual machines, containers).

Protect against malware and unauthorized access.

Ensure endpoint compliance.

Key Recommendations:

Use Microsoft Defender for Endpoint.

Enable endpoint detection and response (EDR) capabilities.

Apply security baselines to endpoints.

8. Governance and Compliance

Key Focus:

Establish policies and procedures for cloud governance.

Ensure compliance with regulatory requirements.

Conduct regular audits.

Key Recommendations:

Use Azure Policy to enforce organizational rules.

Leverage Azure Blueprints for consistent deployment (Microsoft is deprecating Blueprints in favor of Template Specs and Deployment Stacks).

Monitor compliance with tools like Microsoft Compliance Manager.

9. Incident Response

Key Focus:

Prepare for and respond to security incidents.

Conduct post-incident reviews.

Improve incident response processes.

Key Recommendations:

Develop and test an incident response plan.

Use Microsoft Sentinel for incident investigation.

Integrate incident response with threat intelligence.

10. Backup and Recovery

Key Focus:

Ensure data resilience and availability.

Protect against ransomware and data loss.

Test recovery processes.

Key Recommendations:

Use Azure Backup for critical data.

Implement Azure Site Recovery for disaster recovery.

Regularly test backup and restore procedures.

11. Secure Configuration

Key Focus:

Harden cloud resources and services.

Follow security baselines and benchmarks.

Automate configuration management.

Key Recommendations:

Use Microsoft Defender for Cloud (formerly Azure Security Center) to assess and improve configurations.

Apply CIS or NIST benchmarks to Azure resources.

Use Infrastructure as Code (IaC) tools like ARM templates or Terraform.

12. Supply Chain Security

Key Focus:

Secure third-party integrations and dependencies.

Monitor for supply chain risks.

Ensure software integrity.

Key Recommendations:

Use Azure Artifacts for secure package management.

Monitor third-party dependencies for vulnerabilities.

Implement code signing and integrity checks.
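The integrity-check part of this recommendation can be sketched with a pinned SHA-256 digest. The helper names and the sample artifact are hypothetical. Note that a hash comparison only proves the bytes are unchanged; code signing additionally ties the artifact to a publisher and is not shown here.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def verify_artifact(data: bytes, expected_sha256: str) -> bool:
    """Check downloaded bytes against a digest pinned at review time."""
    # compare_digest performs a constant-time comparison.
    return hmac.compare_digest(sha256_hex(data), expected_sha256.lower())


# Hypothetical artifact and the digest recorded when it was approved.
artifact = b"example package contents"
pinned_digest = sha256_hex(artifact)

is_intact = verify_artifact(artifact, pinned_digest)
is_tampered_ok = verify_artifact(artifact + b"!", pinned_digest)
```

In a pipeline, the pinned digest would live in a lock file or manifest under version control, so a changed dependency fails the build instead of being silently accepted.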

13. Monitoring and Logging

Key Focus:

Collect and analyze logs for security insights.

Detect anomalies and suspicious activities.

Retain logs for compliance and investigation.

Key Recommendations:

Use Azure Monitor and Log Analytics.

Enable diagnostic logging for all critical resources.

Set up alerts for suspicious activities.
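As an illustration of alerting on suspicious activity, the sketch below counts failed sign-ins per account and flags accounts that reach a threshold. The event shape and the `accounts_to_alert` helper are hypothetical; in Azure this logic would normally live in a Log Analytics query behind an alert rule rather than in application code.

```python
from collections import Counter


def accounts_to_alert(events, threshold=3):
    """Return users with at least `threshold` failed sign-ins in `events`."""
    failures = Counter(e["user"] for e in events if e["result"] == "failure")
    return sorted(user for user, count in failures.items() if count >= threshold)


# Hypothetical sign-in events collected over one time window.
sign_in_events = [
    {"user": "alice", "result": "failure"},
    {"user": "alice", "result": "failure"},
    {"user": "bob", "result": "success"},
    {"user": "alice", "result": "failure"},
    {"user": "bob", "result": "failure"},
]
alerts = accounts_to_alert(sign_in_events)
```

The threshold and the length of the time window are tuning choices: too low and the alert becomes noise, too high and slow password-guessing goes unnoticed.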

14. Encryption and Key Management

Key Focus:

Protect sensitive data with encryption.

Securely manage cryptographic keys.

Rotate keys regularly.

Key Recommendations:

Use Azure Key Vault for key management.

Enable encryption for all storage accounts and databases.

Implement hardware security modules (HSMs) for high-security scenarios.
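"Rotate keys regularly" can be checked mechanically. The sketch below flags keys whose creation date is older than a maximum age; the key names, dates, and the 90-day default are assumptions for illustration, and in practice the creation dates would be read from Key Vault key metadata.

```python
from datetime import date, timedelta


def keys_due_for_rotation(keys, today, max_age_days=90):
    """Return names of keys created on or before the rotation cutoff.

    keys: dict mapping key name -> creation date
    """
    cutoff = today - timedelta(days=max_age_days)
    return sorted(name for name, created in keys.items() if created <= cutoff)


# Hypothetical inventory of keys and their creation dates.
key_inventory = {
    "storage-cmk": date(2024, 6, 1),
    "sql-tde": date(2024, 12, 1),
}
due = keys_due_for_rotation(key_inventory, today=date(2025, 1, 1))
```

A report like this complements, rather than replaces, an automatic rotation policy: it catches keys that were created outside the policy or whose rotation failed.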

15. Zero Trust Architecture

Key Focus:

Adopt a Zero Trust security model.

Verify every request and enforce least privilege.

Assume breach and minimize attack surface.

Key Recommendations:

Use Azure AD Conditional Access.

Implement network micro-segmentation.

Continuously validate trust for all users and devices.

Modern security practices assume that the adversary has breached the network perimeter.

This mindset, often called the "assume breach" philosophy, is a cornerstone of modern security. Instead of focusing solely on keeping attackers out of the network, organizations also prioritize detecting, containing, and mitigating threats that have already bypassed traditional perimeter defenses. This matters because sophisticated adversaries often find a way through even well-defended perimeters.

Here’s how modern security practices address the assumption that the adversary has already breached the network:

1. Zero Trust Architecture

Core Principle: Never trust, always verify.

Key Practices:

Verify every user, device, and application before granting access.

Enforce least privilege access (only the minimum permissions necessary).

Use multi-factor authentication (MFA) for all users.

Continuously monitor and validate trust throughout the session.
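The practices above can be read as a per-request decision: every request is checked explicitly, and any failed check results in denial. The sketch below is a hypothetical model of that logic (the `AccessRequest` class, `GRANTS` table, and `evaluate_request` function are illustrative and are not the Conditional Access API).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    user: str
    mfa_passed: bool
    device_compliant: bool
    permission: str  # the permission this specific action needs


# Hypothetical least-privilege grants: each user gets only what they need.
GRANTS = {
    "alice": {"orders:read"},
    "bob": {"orders:read", "orders:write"},
}


def evaluate_request(request, grants=GRANTS):
    """Return ("allow", []) or ("deny", [names of failed checks])."""
    failed = []
    if not request.mfa_passed:
        failed.append("mfa")
    if not request.device_compliant:
        failed.append("device")
    if request.permission not in grants.get(request.user, set()):
        failed.append("least_privilege")
    return ("deny", failed) if failed else ("allow", failed)


decision = evaluate_request(AccessRequest("alice", True, True, "orders:read"))
```

Because the function is evaluated on every request rather than once at sign-in, a device that falls out of compliance mid-session is denied on its next request, which is the "continuously validate" part of the model.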

2. Network Segmentation

Core Principle: Limit lateral movement within the network.

Key Practices:

Divide the network into smaller, isolated segments (micro-segmentation).

Use firewalls, VLANs, and software-defined networking (SDN) to enforce boundaries.

Restrict communication between segments to only what is necessary.

3. Endpoint Detection and Response (EDR)

Core Principle: Monitor and respond to threats on endpoints.

Key Practices:

Deploy EDR solutions to detect malicious activity on endpoints (e.g., laptops, servers).