How to create a reverse proxy using Nginx that returns JSON when an endpoint is hit

Bruno Trindade Bragança


This post is also available in Portuguese.

The problem

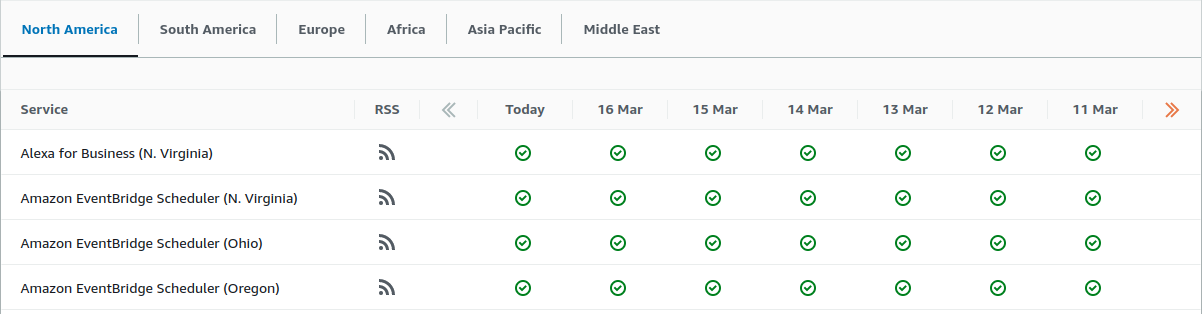

At my current job, I had to build a page that checks whether some environments are up or down.

It works just like the status pages that AWS, Apple, and others provide (see the first image).

With the page done, I had to write tests to ensure its quality and to prevent future changes from breaking the current code.

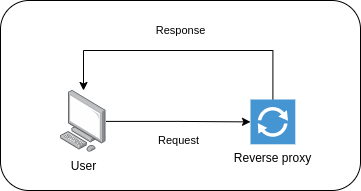

But how can I do this? Using real endpoints in these tests is wrong (unless they are integration tests, which is not the case here). An alternative is to use a reverse proxy that sends back a fixed response whenever my application hits an endpoint. The second image shows the request-response flow.

To achieve this, I set up a reverse proxy using NGINX and ran it with Docker and Docker Compose.
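For this to work, the status page's checker has to take the base URL as a parameter, so that tests can point it at the proxy instead of the real environments. The sketch below is a hypothetical Python version of such a checker, not the author's code: `status_is_ok` and `is_environment_up` are illustrative names, and treating a JSON `"status"` of `"ok"` in any letter case as "up" is an assumption (the fake responses configured later use both `"Ok"` and `"OK"`).

```python
import json
import urllib.request


def status_is_ok(body):
    """Return True if a JSON body has a "status" field equal to "ok" (any case)."""
    try:
        payload = json.loads(body)
    except ValueError:  # not JSON at all (json.JSONDecodeError is a ValueError)
        return False
    return isinstance(payload, dict) and str(payload.get("status", "")).lower() == "ok"


def is_environment_up(base_url, path, timeout=5.0):
    """Return True if GET base_url + path answers 200 with an "ok" status.

    base_url is injectable: the real environment in production,
    the NGINX container (e.g. http://localhost:8080) in tests.
    """
    try:
        with urllib.request.urlopen(base_url + path, timeout=timeout) as resp:
            return resp.status == 200 and status_is_ok(resp.read())
    except OSError:  # URLError, HTTPError and timeouts are all OSError subclasses
        return False
```

In a test, the call would look like `is_environment_up("http://localhost:8080", "/backend")`; only the base URL changes between the test and production setups.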

Reverse proxy

A reverse proxy is a server that sits between a client and a web server, forwarding client requests to the appropriate web server and returning the server's response to the client. Nginx is a popular open-source web server that can also act as a reverse proxy. In this setup, however, NGINX does not forward requests anywhere: it answers each one itself with a fixed response.

First, I created a Dockerfile for the reverse proxy image, as shown below, which copies nginx.conf into the container:

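```dockerfile
# Start from the official lightweight NGINX image
FROM nginx:alpine

# Replace the default configuration with our own
COPY nginx.conf /etc/nginx/nginx.conf
```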

The nginx.conf file is shown below; it must be in the same directory as the Dockerfile:

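```nginx
# Run a single worker process (the default value)
worker_processes 1;

# Maximum number of simultaneous connections per worker process
events { worker_connections 1024; }

http {
    # No files are served from disk, so sendfile is not needed
    sendfile off;

    # Named group of servers; no location below uses proxy_pass,
    # so this upstream is not actually used by this configuration
    upstream docker-nginx {
        server localhost:80;
    }

    server {
        listen 8080;

        # Case-sensitive regex: matches any URI containing "/backend"
        location ~ /backend {
            default_type application/json;
            return 200 '{"api_version":"4.0.0", "status":"Ok"}\n';
        }

        # Case-sensitive regex: matches any URI containing "/frontend"
        location ~ /frontend {
            default_type application/json;
            return 200 '{"version":"2.0.0","status":"OK"}\n';
        }

        # The regex "/*" matches every URI, so this is the catch-all
        location ~ /* {
            default_type text/html;
            return 200 'NGINX reverse proxy up\n';
        }
    }
}
```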

I set worker_processes explicitly to 1, which is the default value. A common practice is to run one worker process per CPU core. For more about it, check Thread Pools in NGINX Boost Performance 9x.

The worker_connections directive sets the maximum number of simultaneous connections that can be opened by a worker process. Here it is set to 1024; NGINX's built-in default is 512.

The sendfile directive usually speeds up serving static files: the kernel copies data from a file straight to the socket, without passing it through user space. Since this NGINX does not serve any files from disk (every response comes from a return directive), sendfile brings no benefit here, so we can turn it off.

The upstream directive, from ngx_http_upstream_module, defines a named group of servers (each of which can listen on a different port) that other directives, such as proxy_pass, can refer to. Note that no location in this configuration uses proxy_pass, so the docker-nginx upstream is not actually used.

The server listens on port 8080. When we call localhost:8080/some_endpoint, NGINX compares the URI with the location blocks and returns the response configured in the matching one. Each location here uses ~, a case-sensitive regular expression that can match anywhere in the URI, and NGINX picks the first regex location that matches, in the order they appear in the file:
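As a rough sizing check, the commonly used upper bound on simultaneous connections is the product of the two directives. It is only an approximation: the limit counts every connection a worker opens, not just client connections (a proxy that really forwarded requests would also open connections to the upstream server), and the operating system's open-file limit can lower it further. With the values in this nginx.conf:

$$
\text{max connections} \approx \texttt{worker\_processes} \times \texttt{worker\_connections} = 1 \times 1024 = 1024
$$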

- If the endpoint is localhost:8080/backend, the response is the JSON defined in location ~ /backend, with status code 200.
- If the endpoint is localhost:8080/frontend, the response is the JSON defined in location ~ /frontend, with status code 200.
- For any other endpoint, the response is the text defined in location ~ /*, also with status code 200. The regex /* matches zero or more slashes, so it matches every URI; that is why this catch-all has to come after the other two locations.
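The matching rules above can be modelled in a few lines of Python. This is a simplified sketch, not NGINX's actual algorithm: it assumes, as in this nginx.conf, that there are only regex (`~`) locations, tried in file order, with the first pattern found anywhere in the URI winning. `LOCATIONS` and `match_location` are hypothetical names.

```python
import re

# Regex locations from nginx.conf, in the order they appear in the file:
# (pattern, status code, default_type, response body).
LOCATIONS = [
    (r"/backend", 200, "application/json", '{"api_version":"4.0.0", "status":"Ok"}\n'),
    (r"/frontend", 200, "application/json", '{"version":"2.0.0","status":"OK"}\n'),
    (r"/*", 200, "text/html", "NGINX reverse proxy up\n"),
]


def match_location(uri):
    """Return the first location whose regex matches anywhere in uri, or None."""
    for location in LOCATIONS:
        # re.search (not re.fullmatch) mirrors the unanchored "~" match,
        # and like "~" it is case-sensitive by default.
        if re.search(location[0], uri):
            return location
    # Unreachable with this config: "/*" can match zero slashes in any string.
    return None


for uri in ["/backend", "/frontend", "/test", "/api/backend/health", "/Backend"]:
    print(uri, "->", match_location(uri)[0])
# /backend -> /backend
# /frontend -> /frontend
# /test -> /*
# /api/backend/health -> /backend
# /Backend -> /*
```

The last two lines show the side effects of using regexes here: a longer path containing /backend still gets the backend JSON, while /Backend falls through to the catch-all because ~ is case-sensitive.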

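Note: default_type sets the Content-Type header of the response, so it must be set explicitly. With application/octet-stream, the default type in NGINX's stock configuration, the browser would download the response as an unrecognized file instead of displaying it.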

Docker compose

With Compose, we define a multi-container application in a single file, then spin it up with a single command that does everything needed to get it running.

Docker Compose is commonly used to describe microservice architectures: the containers and the links between them.

The docker-compose commands are similar to their docker counterparts and behave the same way. The only difference is that docker-compose commands act on the entire multi-container setup defined in the docker-compose.yml file, not just on a single container.

There are three steps to using Docker Compose:

1. Define each service in a Dockerfile.
2. Define the services and their relation to each other in the docker-compose.yml file.
3. Use docker-compose up to start the system.

Below is the relevant part of the docker-compose.yml file:

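```yaml
services:
  reverse_proxy:
    build:
      # Folder containing the Dockerfile and nginx.conf
      context: ./reverse_proxy/
      dockerfile: Dockerfile
    ports:
      - "8080:8080"  # host:container
    expose:
      - "8080"
    restart: always
    networks:
      local_network:
        aliases:
          - reverse_proxy

# Assumed: local_network is declared at the top level of the full file
networks:
  local_network:
```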

The build context is ./reverse_proxy/ because both the Dockerfile and nginx.conf are inside the reverse_proxy folder.

Testing

Once everything is set up, we can run docker-compose up -d to start the containers in the background and then test our reverse proxy with curl:

```console
user@machine:~$ docker-compose up -d
Creating reverse_proxy_1 ... done
user@machine:~$ curl http://localhost:8080/test
NGINX reverse proxy up
user@machine:~$ curl http://localhost:8080/backend
{"api_version":"4.0.0", "status":"Ok"}
user@machine:~$ curl http://localhost:8080/frontend
{"version":"2.0.0","status":"OK"}
```

That's it. Now our application's tests can hit these fake endpoints instead of the real environments.

Notes

This post was written with the help of the following websites:
