The Caching Trap

Henning Rohde

Henning Rohde

Reliable distributed systems are notoriously difficult to architect. Even somewhat standardized designs, such as stateless microservices, require navigating numerous pitfalls when converting theory into practice. As discussed in a previous article, The Next 700 Stateless Services, such services delegate much of their complexity to an external database in return for a more straightforward, scalable design.

Or so the theory goes.

But it is no free lunch: the Achilles Heel of the stateless design is database latency. Re-reading everything of consequence in each database transaction is necessary to keep the atomicity dragons away, but it is expensive and slow. High latency is a tax on every interaction, and a sluggish experience is a usability killer. The patience from the 90's dial-up era is gone. Low latency is an essential feature.

Caching is commonly used as a universal fix for latency and scalability. By retaining data in memory instead of re-reading it transactionally, services can use cached data to achieve a massive speedup. A handy technique, but it is also a subtle trap set by the atomicity dragons. In some situations, stale data is acceptable. In others, it is not. It often depends on user expectations.

Elements of Caching

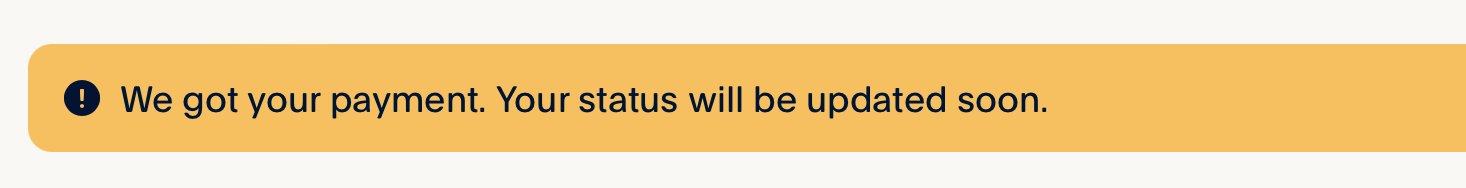

Before you think "how hard can it be?", consider the following. After making a payment at PayPal recently, the invoice status remained "Not Paid" with the website immediately displaying this banner.

That is a ... bold embrace of stale data. For an invoice that "customers can pay instantly" of all places. Disclaimer: I have no idea how the system is designed and if caching is at fault, but if nothing else, their workaround is refreshingly honest.

A design with caching must take multiple concerns into account:

Read-after-write consistency. When data is updated, subsequent reads must return the updated version. Caching may violate that guarantee temporarily. Near-static data is particularly tempting to cache because it almost never changes. But there is a catch: just after that rare update is made is precisely when it is re-read, typically by the updater who wants to verify the change took effect. Violating read-after-write consistency works best for a read-only audience who does not know or care exactly when the data is updated.

Size, inclusion, and invalidation. The standard questions to settle are what to add to the cache and when to remove it again, usually subject to some size limitation. We have all made these decisions many times and typically have some default preference. Let me guess: cache every instance of some key entity type, guesstimate an initial size (which is never later revisited), and pick Least-Recently-Used (LRU) as the eviction policy. Close? But these can be consequential decisions: not all data lends itself well to caching, and a bad cache invalidation policy is just a memory leak with more steps. It all depends on how it is used.

Location. The location of the cache(s) is the next question. A common choice is an in-memory cache on each node with no particular coordination, either on the caller or callee side. Whatever requests hit that node have their responses cached, and cache hits have optimal latency. Simple and often adequate, but the hit rate diminishes as the system scales. Distributed caching - whether internally managed or delegated to, e.g., Memcached or Redis - adds a bit of latency and various failure modes that come with coordination in return for better size utilization and cache hit rates. In a large system, there will be an abundance of possibilities for cache placement.

Effectiveness. Finally, the most important - yet most overlooked - question. It is easy to get carried away with cache utilization and hit rates, but does the cache achieve what it was designed to do? Lower latency? Increased scale? Both? But what about correctness? Hmmm. Tradeoffs are necessarily made.

The default option is to slap a fixed-size, local LRU cache in front of all database reads and hope for the best. But for updaters of near-static data, such a cache would be almost 100% ineffective: the first read is likely a cache-miss, and the second read - although technically a cache-hit - finds stale data.

Of course, if you are serving cat videos or puppy photos, stale data is not a concern - all versions are cute. But when correctness matters, in the sense of read-after-write consistency, that line of thinking is at the heart of misapplied caching and the inevitable problems that follow.

Caching at "The Usual Pizza"

"The Usual Pizza" is an online (fictional) pizzeria that caters to repeat customers, introduced in The Overuse Of Microservices. Their signature feature is the 1-click reorder, where customers can reorder "the usual" in a single request without specifying any further information.

The system must fetch the current delivery address and "usual pizza" selection with a list of ingredients as part of placing the order. The fetching is done as part of a single database transaction to avoid atomicity problems if another order that updates the information is placed concurrently due to account sharing.

But soon, latency becomes a problem. Repeat customers expect placing an order of "the usual" to be fast. Complaints rise. Pressure on engineering increases. Is caching a solution?

On the surface, the near-static "the usual" selection and address look promising for caching. This data rarely change. But, on closer inspection, we recognize they are similar to the earlier near-static updaters. Caching them will re-introduce correctness problems and likely be similarly inefficient. In general, customer-specific data does not lend itself easily to caching because it is rarely read and typically part of a read-after-write scenario.

What about the list of ingredients? Surely, that's cachable.

Well, it depends.

Pointed question: is that ingredient list immutable as stored? In other words, is the "usual" selection just a reference to a shared, immutable representation? If each pizza, such as "Hawaiian," is stored with an internal id and an immutable list of ingredients, then caching that list by id would not impact correctness. In that case, a local or distributed cache of ingredient lists is likely effective. Crisis averted.

In contrast, if each ingredient list were stored directly with the "usual" selection, it would be hard to cache.

Caching opportunities

Effective caching opportunities are created rather than found. However, they can be surprisingly elusive when correctness matters. In practice, a habit of isolating immutable data goes a long way, but there are always tradeoffs.

In the case of "The Usual Pizza," immutable ingredient lists also mean that if an ingredient is permanently no longer available (at a reasonable price point), then changing which ingredients go into a "Hawaiian" pizza is either not something that takes effect immediately or it requires that all "Hawaiian" customers must update their selection. Custom pizzas become trickier to manage if we want to share their ingredient lists. If we don't, then they are not fast. If we do, we must be careful not to share any custom names customers might give their creations. The alternative of storing the list of ingredients avoids these concerns by basically treating all pizzas as custom.

There is one more thing.

Request-driven caching is inherently probabilistic, and a "cold" cache does not improve latency. What if that is not good enough? Repeat customers want consistently low latency, even if their selection is Squid-Clam-Pineapple pizza with a Pepto-Bismol topping. If the number of pizza options is small and new pizzas are rarely added, there is an easy solution: load all ingredient lists on start-up and initialize a node-local cache with them. If not, a distributed cache would work better at the expense of some coordination - optionally combined with a local cache for the most popular options. The possibilities are endless, but missteps are easy.

Caching works best when either you can detect and recover from stale data, the data is immutable, or you don't care about the consistency/quality of the response. If you're serving cat videos, you can do no wrong. But when correctness even remotely matters, the default option is a trap that gets you into trouble.

Subscribe to my newsletter

Read articles from Henning Rohde directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Henning Rohde

Henning Rohde

Programming enthusiast drawn to distributed systems at scale. Extensive experience across infrastructure, big data, cloud computing, low-latency trading systems, static analysis, and an unlikely number of languages and technologies.