How to Monitor Your Axum App with Prometheus and Grafana

Carlos Armando Marcano Vargas


DISCLAIMER: This article focuses on how to integrate an Axum app with Prometheus and Grafana.

Learn how to monitor an Axum app with Prometheus and Grafana. This article provides instructions for installing Prometheus and Grafana, adding Prometheus as a data source, creating a dashboard, configuring the Prometheus exporter, and using the metrics_exporter_prometheus crate to export metrics to Prometheus. It also provides code examples and references to relevant documentation.

Requirements

- Rust installed

Prometheus

Prometheus is an open-source systems monitoring and alerting toolkit originally built at SoundCloud. Since its inception in 2012, many companies and organizations have adopted Prometheus, and the project has a very active developer and user community.

Grafana

Grafana is an open-source platform for monitoring and observability. It allows you to query, visualize, alert on and understand your metrics no matter where they are stored. Create, explore, and share beautiful dashboards with your team and foster a data-driven culture.

Installation

First, we have to install Prometheus and Grafana.

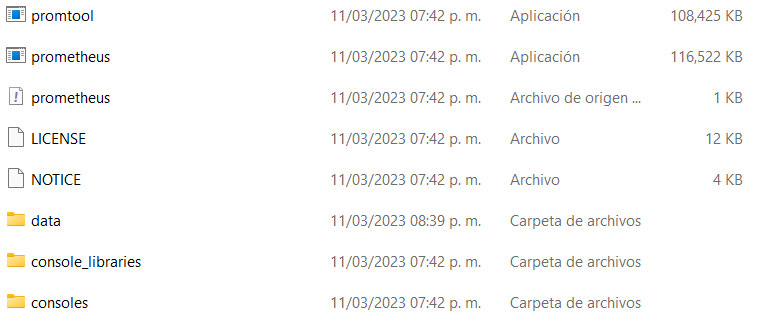

Prometheus

To install Prometheus, you can use a Docker image or download a precompiled binary. We will use a precompiled binary, which we can download from this site.

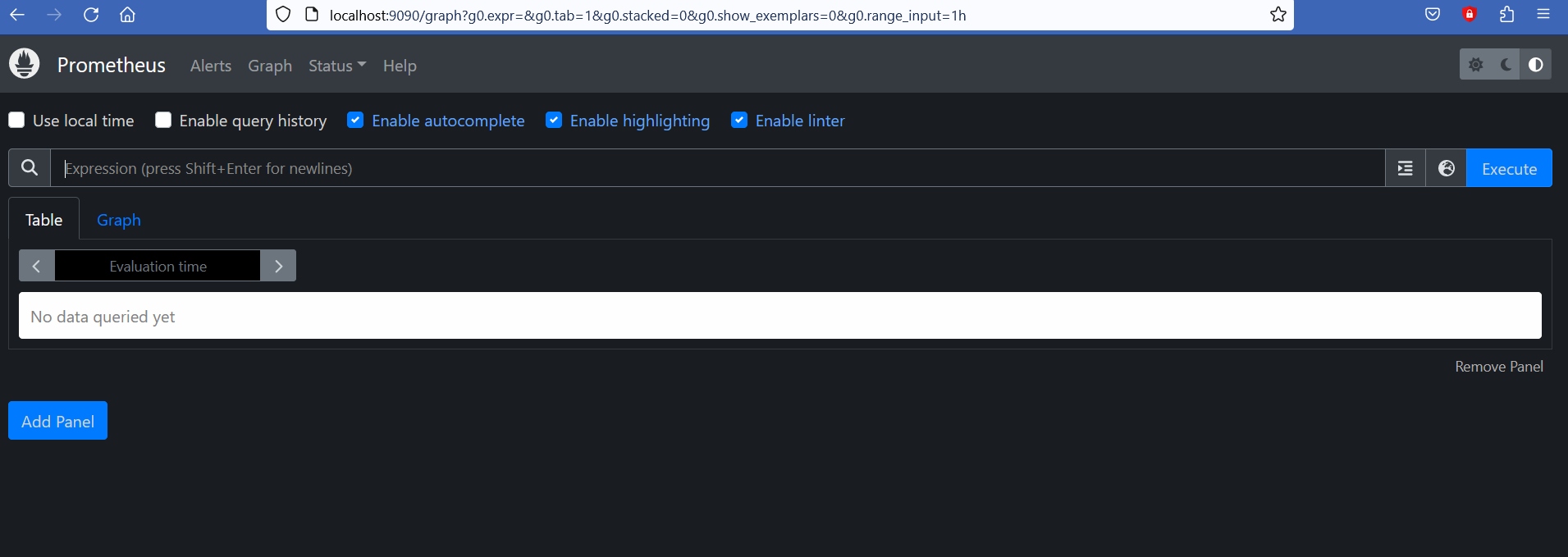

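After extracting the downloaded archive, we start the server by running the prometheus executable from that folder. By default, it reads its configuration from the prometheus.yml file in the same folder and listens on port 9090, so we go to localhost:9090 to see its UI.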

Grafana

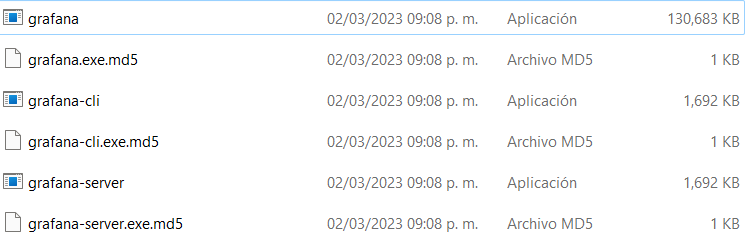

To install Grafana, we download the installer from this site.

On Windows, after installation, we go to Program Files > GrafanaLabs > grafana > bin and run grafana-server.exe.

Adding Prometheus as a data source

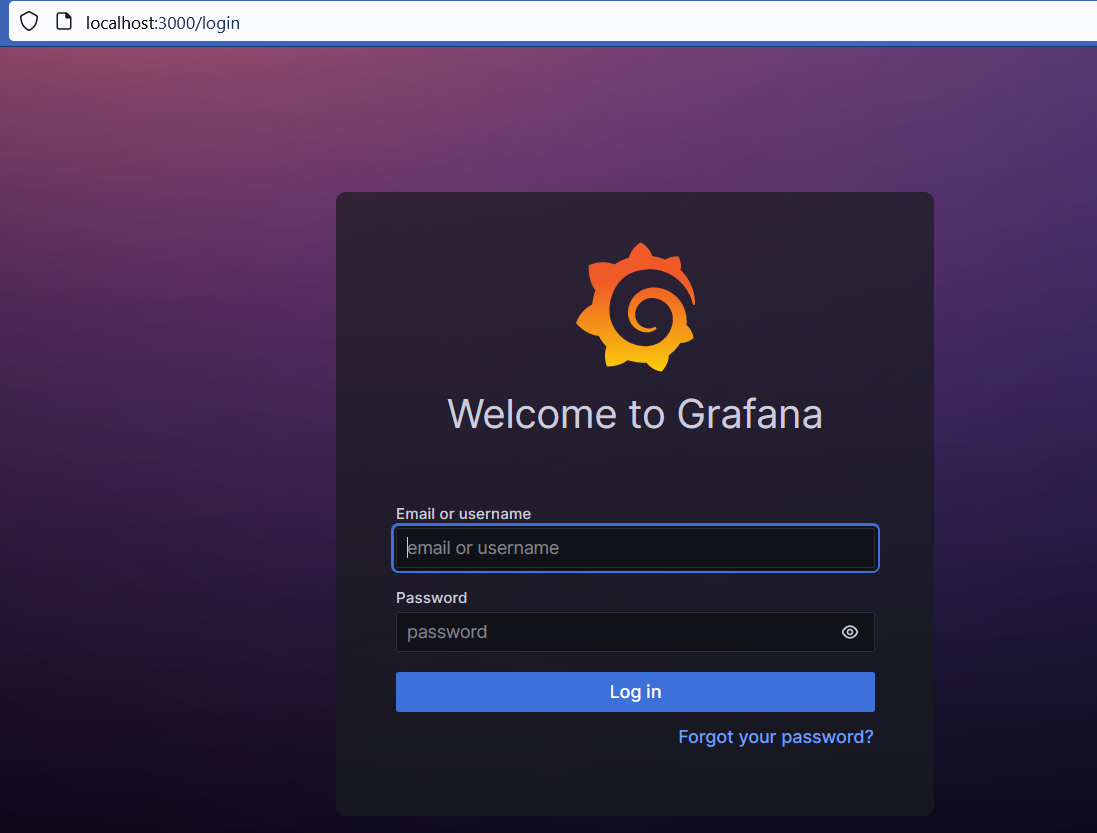

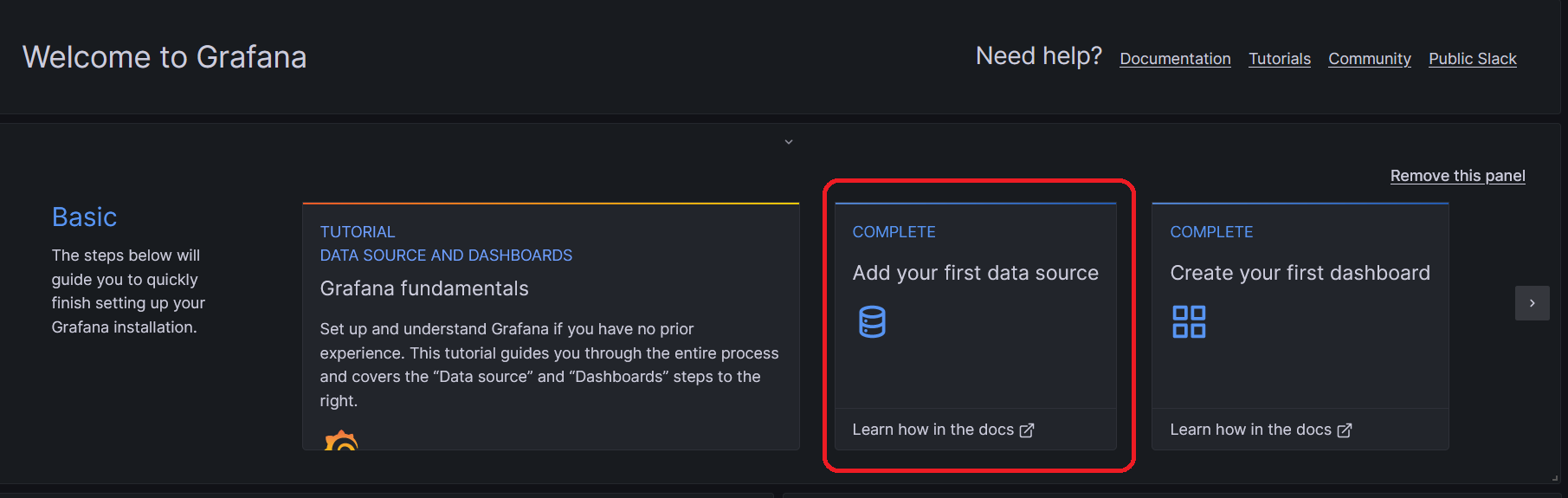

We start grafana-server and go to its default address: localhost:3000.

We log in using "admin" as both the username and the password.

We click on "Add your first data source".

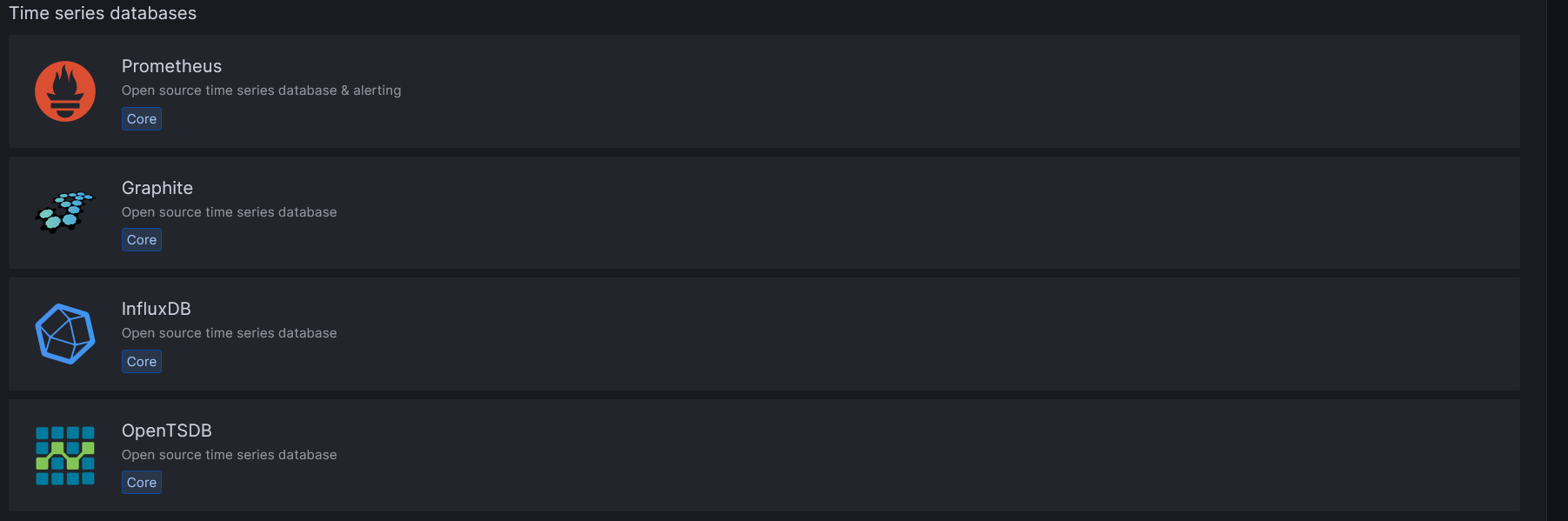

We select "Prometheus" as the data source.

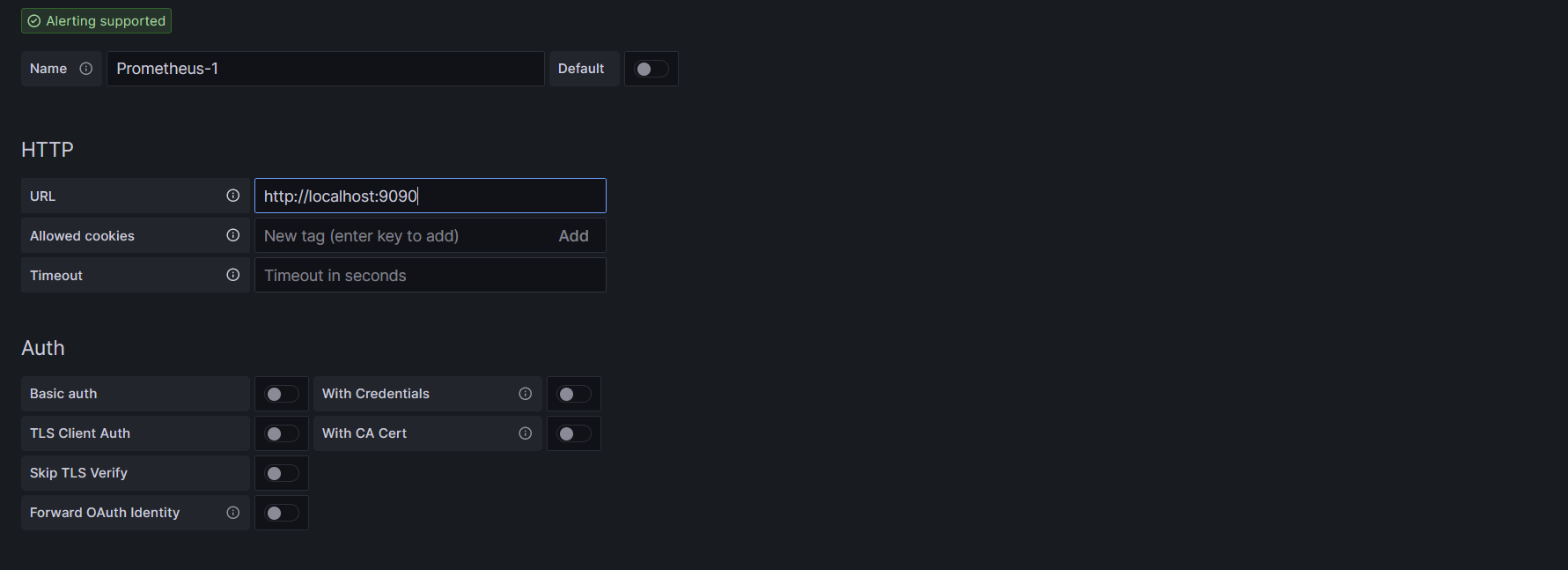

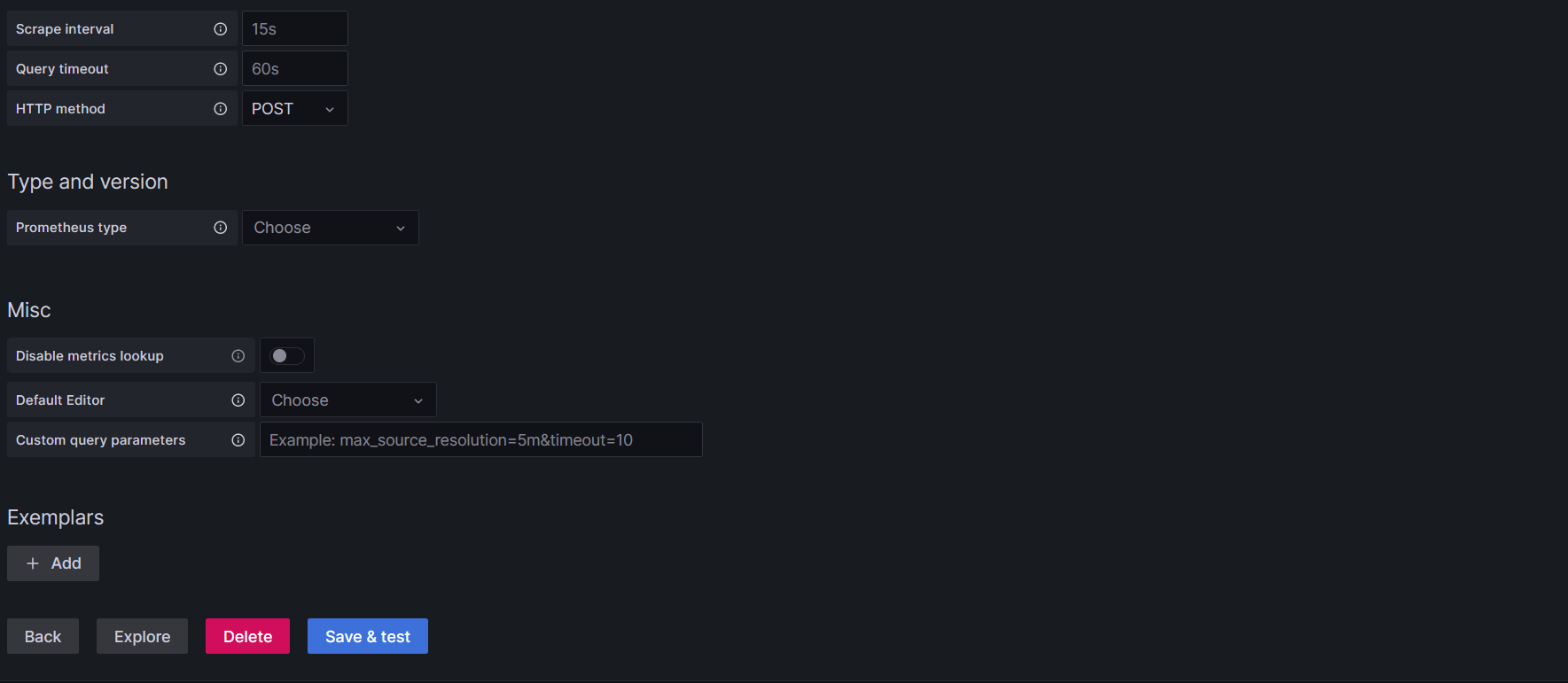

In the URL field, we enter the address of the Prometheus server: http://localhost:9090.

Then, we click "Save & test", and Grafana checks whether it can connect to the Prometheus server.

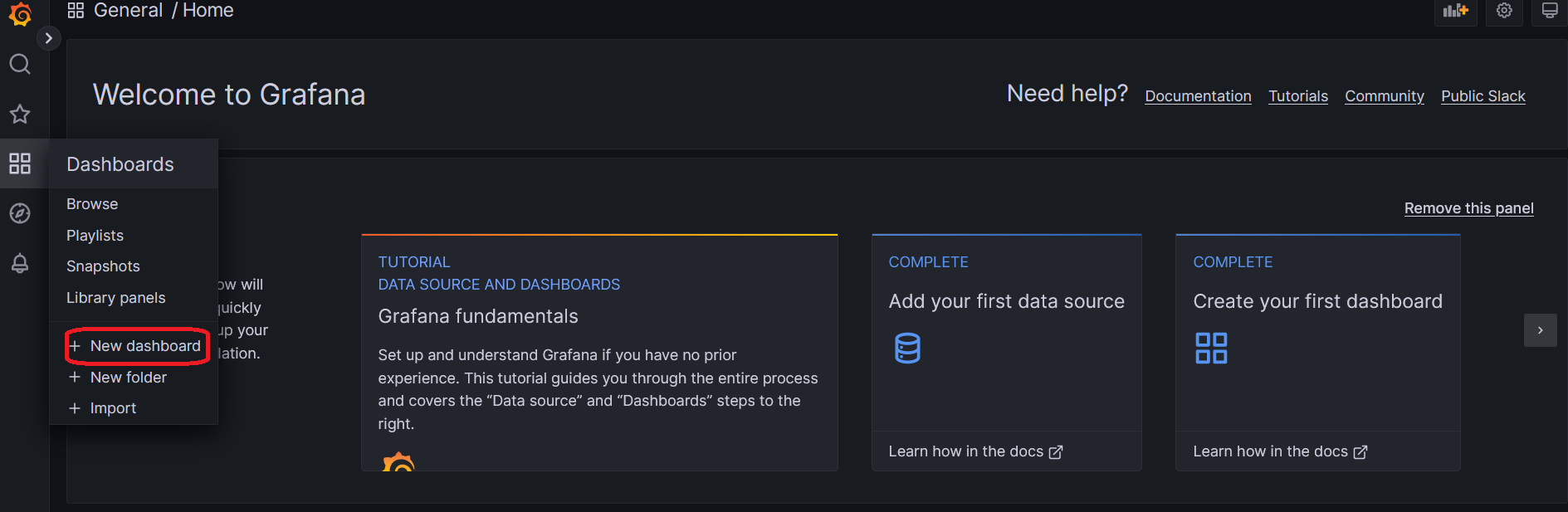

Creating a dashboard

After defining our data source, we create a dashboard by clicking "New dashboard".

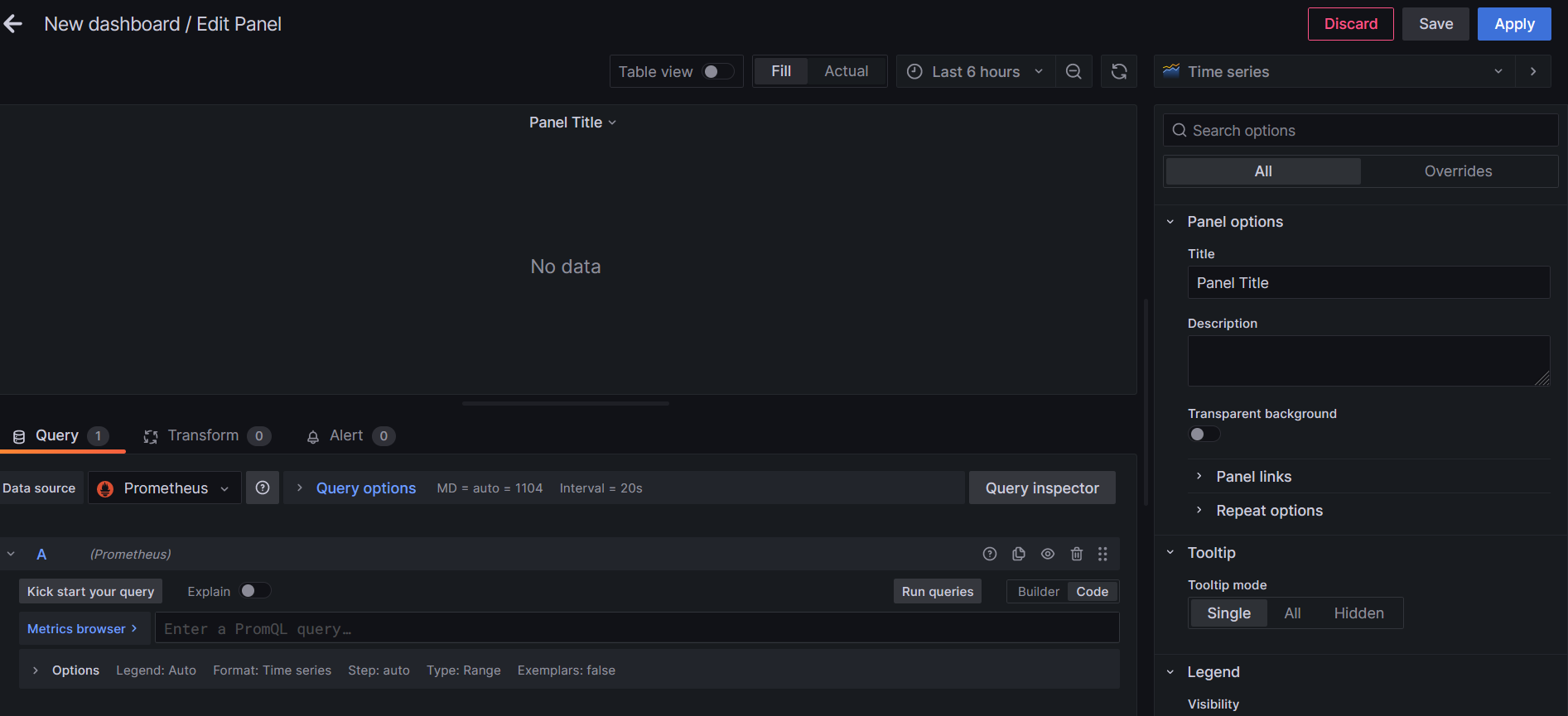

Then, we click on "Add a new panel".

The panel shows "No data" because it has no query yet and we are not collecting any metrics.

Axum Server

Cargo.toml

```toml
[package]
name = "axum-prometheus"
version = "0.1.0"
edition = "2021"

# See more keys and their definitions at https://doc.rust-lang.org/cargo/reference/manifest.html

[dependencies]
axum = "0.6.10"
metrics = "0.18"
metrics-exporter-prometheus = "0.8"
tokio = { version = "1.26", features = ["full"] }
tracing = "0.1.36"
tracing-subscriber = { version = "0.3.16", features = ["env-filter"] }
tower-http = { version = "0.3.4", features = ["trace"] }
tower = { version = "0.4", features = ["make"] }
```

main.rs

We will start from the prometheus-metrics example in the Axum repository and change a few things.

We will define two apps in the same file. The first is the main app, with two endpoints. The second exposes the metrics recorded from the main app on a /metrics endpoint, where Prometheus can scrape them.

```rust
use axum::{
    extract::MatchedPath,
    http::Request,
    middleware::{self, Next},
    response::IntoResponse,
    routing::get,
    Router,
};
use metrics_exporter_prometheus::{Matcher, PrometheusBuilder, PrometheusHandle};
use std::{
    future::ready,
    net::SocketAddr,
    time::{Duration, Instant},
};
// Used by the TraceLayer in main_app().
use tower_http::trace::{self, TraceLayer};
use tracing::Level;
use tracing_subscriber::{layer::SubscriberExt, util::SubscriberInitExt};
```

We will use the metrics_exporter_prometheus crate to export the metrics to Prometheus.

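```rust
fn main_app() -> Router {
    // Install a global tracing subscriber that prints events to stdout.
    // `init()` panics if a global subscriber is already set, so call this once.
    tracing_subscriber::registry()
        .with(tracing_subscriber::fmt::layer())
        .init();

    Router::new()
        .route("/hello", get(|| async { "Hello World" }))
        .route(
            "/slow",
            get(|| async {
                // Simulate a slow endpoint so the latency histogram has data.
                tokio::time::sleep(Duration::from_secs(1)).await;
            }),
        )
        // `route_layer` only runs the middleware for requests that match a
        // route, so `MatchedPath` is available inside `track_metrics`.
        .route_layer(middleware::from_fn(track_metrics))
        .layer(
            TraceLayer::new_for_http()
                .make_span_with(trace::DefaultMakeSpan::new().level(Level::INFO))
                .on_response(trace::DefaultOnResponse::new().level(Level::INFO)),
        )
}
```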

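Here is the main app: two endpoints (/hello, and /slow, which sleeps for one second before responding), a middleware to track the metrics, and a tracing layer that creates a span for each request and logs each response.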

```rust
async fn start_main_server() {
    let app = main_app();

    let addr = SocketAddr::from(([127, 0, 0, 1], 4000));
    tracing::debug!("listening on {}", addr);
    axum::Server::bind(&addr)
        .serve(app.into_make_service())
        .await
        .unwrap()
}
```

Here is a function that starts the main app on port 4000. We can't use port 3000 or 9090, because Grafana and Prometheus use them, respectively.

```rust
async fn start_metrics_server() {
    let app = metrics_app();

    // NOTE: expose metrics endpoint on a different port
    let addr = SocketAddr::from(([127, 0, 0, 1], 3001));
    tracing::debug!("listening on {}", addr);
    axum::Server::bind(&addr)
        .serve(app.into_make_service())
        .await
        .unwrap()
}
```

Here we start the metrics server, which exposes the main app's metrics on port 3001 at the /metrics endpoint.

```rust
fn setup_metrics_recorder() -> PrometheusHandle {
    const EXPONENTIAL_SECONDS: &[f64] = &[
        0.005, 0.01, 0.025, 0.05, 0.1, 0.25, 0.5, 1.0, 2.5, 5.0, 10.0,
    ];

    PrometheusBuilder::new()
        .set_buckets_for_metric(
            Matcher::Full("http_requests_duration_seconds".to_string()),
            EXPONENTIAL_SECONDS,
        )
        .unwrap()
        .install_recorder()
        .unwrap()
}
```

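This function returns a PrometheusHandle, a handle for accessing the metrics stored by the PrometheusRecorder.

Inside it, we create a PrometheusBuilder, a builder for creating and installing a Prometheus recorder/exporter. Its install_recorder() method installs the recorder globally, so the metrics macros write to it, and returns the handle. Unlike install(), it does not start an HTTP listener, which is why we serve the metrics ourselves from a separate Axum app.

The set_buckets_for_metric function sets the buckets for metrics whose names match a given pattern. Each bucket value is the upper bound of that bucket. If buckets are set, any matching histogram is rendered as a true Prometheus histogram instead of a summary. Matcher is an enum that describes how to match a metric name: Matcher::Full, used here, matches the exact name http_requests_duration_seconds, while Matcher::Prefix and Matcher::Suffix match the beginning or the end of a name. We can find more information in its documentation.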
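Prometheus histogram buckets are cumulative: each bucket, labeled by its upper bound (le), counts every observation less than or equal to that bound, and a final +Inf bucket counts all observations. The sketch below models that rule in plain Rust. The function name cumulative_bucket_counts is hypothetical; it illustrates the Prometheus histogram format, not how metrics-exporter-prometheus stores histograms internally.

```rust
/// Toy model of how a Prometheus histogram fills its buckets (hypothetical
/// helper, not the crate's implementation). Each bucket is identified by its
/// upper bound (`le`) and is cumulative: it counts every observation that is
/// less than or equal to that bound. The last element of the returned vector
/// is the implicit `+Inf` bucket.
fn cumulative_bucket_counts(upper_bounds: &[f64], observations: &[f64]) -> Vec<u64> {
    let mut counts: Vec<u64> = upper_bounds
        .iter()
        .map(|&le| observations.iter().filter(|&&v| v <= le).count() as u64)
        .collect();
    // The `+Inf` bucket always equals the total number of observations.
    counts.push(observations.len() as u64);
    counts
}
```

For example, with upper bounds [0.1, 0.5, 1.0] and latencies [0.05, 0.2, 0.7, 2.0] seconds, it returns [1, 2, 3, 4]: the 2.0-second request lands only in the +Inf bucket. This is why the bucket list should cover the latencies we expect; anything slower than the largest bound (10.0 seconds in EXPONENTIAL_SECONDS) is only counted in +Inf.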

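```rust
fn metrics_app() -> Router {
    let recorder_handle = setup_metrics_recorder();
    // `std::future::ready` wraps the rendered String in an already-completed
    // future, which is what an async handler needs to return.
    Router::new().route("/metrics", get(move || ready(recorder_handle.render())))
}
```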

The /metrics route calls render(), which returns a snapshot of the metrics held by the recorder, formatted in the Prometheus text exposition format.
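The text returned by render() has one line per sample: the metric name, the labels in braces, and the value. As a hedged illustration, the hypothetical helper below formats a single counter sample that way, including the escaping the text format requires for label values (backslash, double quote, and line feed). It is a simplification: the real output also contains # TYPE lines, histogram series, and more, and this is not the crate's code.

```rust
/// Hypothetical helper: formats one counter sample as a line of the
/// Prometheus text exposition format, e.g.
/// `http_requests_total{method="GET",path="/hello",status="200"} 1`.
fn render_counter_sample(name: &str, labels: &[(&str, &str)], value: u64) -> String {
    let rendered_labels: Vec<String> = labels
        .iter()
        .map(|(key, val)| format!("{}=\"{}\"", key, escape_label_value(val)))
        .collect();
    if rendered_labels.is_empty() {
        // A sample without labels is just `name value`.
        format!("{} {}", name, value)
    } else {
        // `{{` and `}}` produce literal braces around the label list.
        format!("{}{{{}}} {}", name, rendered_labels.join(","), value)
    }
}

/// Escapes a label value: backslash first, so the backslashes added for
/// quotes and line feeds are not escaped a second time.
fn escape_label_value(value: &str) -> String {
    value
        .replace('\\', "\\\\")
        .replace('"', "\\\"")
        .replace('\n', "\\n")
}
```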

```rust
async fn track_metrics<B>(req: Request<B>, next: Next<B>) -> impl IntoResponse {
    let start = Instant::now();
    // Prefer the route template over the raw URI path when it is available.
    let path = if let Some(matched_path) = req.extensions().get::<MatchedPath>() {
        matched_path.as_str().to_owned()
    } else {
        req.uri().path().to_owned()
    };
    let method = req.method().clone();

    let response = next.run(req).await;

    let latency = start.elapsed().as_secs_f64();
    let status = response.status().as_u16().to_string();

    let labels = [
        ("method", method.to_string()),
        ("path", path),
        ("status", status),
    ];

    metrics::increment_counter!("http_requests_total", &labels);
    metrics::histogram!("http_requests_duration_seconds", latency, &labels);

    response
}

// `main`: a minimal sketch, assuming both servers run concurrently in one
// process, as in Axum's prometheus-metrics example.
#[tokio::main]
async fn main() {
    tokio::join!(start_main_server(), start_metrics_server());
}
```

This middleware tracks the metrics of the main app: the total number of HTTP requests and the response time (latency) of each request. It uses the metrics crate's increment_counter! and histogram! macros to record a counter (http_requests_total) and a histogram (http_requests_duration_seconds), both labeled with the HTTP method, the path, and the status code. For the path label, it uses the matched route template when available instead of the raw URI. The recorder installed by setup_metrics_recorder collects these metrics, and the metrics app exposes them for Prometheus to scrape.
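Each distinct combination of label values (method, path, status) is tracked as its own time series. The toy counter below is a hypothetical in-memory model, not how the metrics crate stores data, but it makes that behavior visible: two requests to /hello and one to /slow produce two series.

```rust
use std::collections::HashMap;

/// The label set recorded by the middleware: (method, path, status).
type LabelSet = (String, String, String);

/// Hypothetical toy counter keyed by label set. Every distinct label
/// combination gets its own entry, just as it becomes its own time series
/// in Prometheus.
#[derive(Default)]
struct ToyCounter {
    series: HashMap<LabelSet, u64>,
}

impl ToyCounter {
    /// Increments the series for this label combination, creating it at 0 first if needed.
    fn increment(&mut self, method: &str, path: &str, status: u16) {
        let key = (method.to_string(), path.to_string(), status.to_string());
        *self.series.entry(key).or_insert(0) += 1;
    }

    /// Returns the current value of one series, or 0 if it was never recorded.
    fn get(&self, method: &str, path: &str, status: u16) -> u64 {
        let key = (method.to_string(), path.to_string(), status.to_string());
        self.series.get(&key).copied().unwrap_or(0)
    }

    /// Number of distinct series (label combinations) seen so far.
    fn series_count(&self) -> usize {
        self.series.len()
    }
}
```

If the middleware recorded the raw URI instead of the matched route, a route with a dynamic segment (for example a hypothetical /users/:id) would create a new series for every ID requested, which is why the route template is the safer label.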

To start the app, we run cargo run.

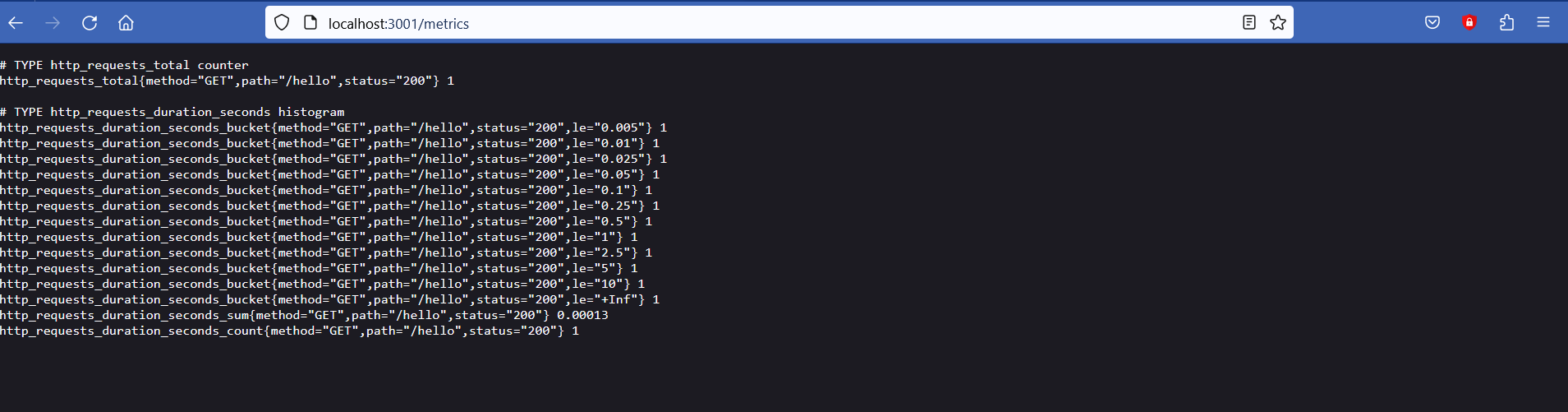

In the browser, we visit localhost:4000/hello. Then we go to localhost:3001/metrics to see the histogram and the total number of HTTP requests, labeled with the HTTP method, the path, and the status code.

Prometheus configuration file

We have to add our metrics endpoint to prometheus.yml as a scrape target so that the Prometheus server can collect our application's metrics.

prometheus.yml

```yaml
# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets:
          # - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  # - "first_rules.yml"
  # - "second_rules.yml"

# Scrape configurations: Prometheus itself and our Axum metrics server.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: "prometheus"

    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.

    static_configs:
      - targets: ["localhost:9090"]

  - job_name: axum_server
    static_configs:
      - targets: ["localhost:3001"]
```

Here, we add a job named axum_server and point it at the address where our metrics server listens, localhost:3001. After saving the file, we restart Prometheus so it loads the new configuration.
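The axum_server job sets neither scheme nor metrics_path, so Prometheus falls back to the defaults noted in the comments of prometheus.yml (http and /metrics), which matches the /metrics route our metrics app serves. A minimal sketch of how the final scrape URL is composed; scrape_url is a hypothetical helper, not Prometheus code.

```rust
/// Hypothetical helper: builds the URL Prometheus scrapes for one target,
/// falling back to the documented defaults (scheme "http", metrics_path
/// "/metrics") when the job does not set them.
fn scrape_url(target: &str, scheme: Option<&str>, metrics_path: Option<&str>) -> String {
    let scheme = scheme.unwrap_or("http");
    let path = metrics_path.unwrap_or("/metrics");
    format!("{}://{}{}", scheme, target, path)
}
```

With our job, scrape_url("localhost:3001", None, None) yields http://localhost:3001/metrics, the same address we opened in the browser earlier.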

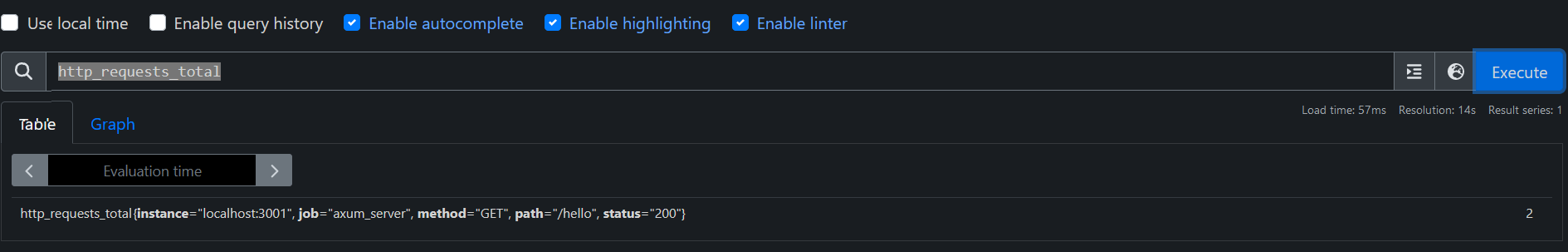

Now we can go to localhost:9090 and run a query, such as http_requests_total, to check whether Prometheus is collecting our metrics.

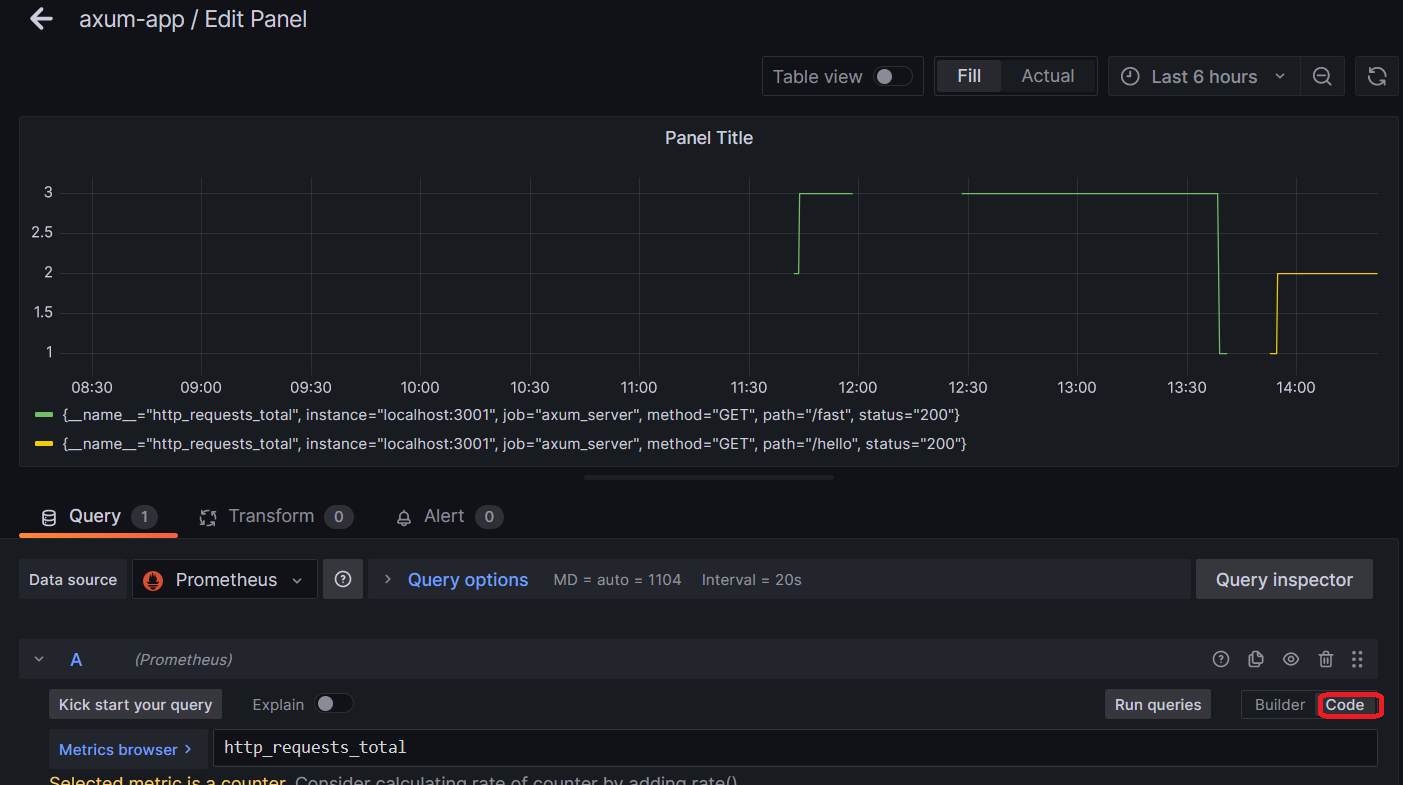

If we go to the Grafana server at localhost:3000 and open our dashboard, we can run the same query there: http_requests_total.
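http_requests_total is a counter: it only increases, and it resets to zero when the app restarts. On a dashboard, a per-second rate is often easier to read, which PromQL provides with queries such as rate(http_requests_total[5m]). The sketch below is a simplified model of that calculation: it sums the increases between consecutive samples, treats any decrease as a counter reset, and divides by the elapsed time. simple_rate is a hypothetical name, and unlike Prometheus's rate() it does not extrapolate to the edges of the time window, so its results can differ slightly.

```rust
/// A counter sample: (timestamp in seconds, counter value).
type Sample = (f64, f64);

/// Simplified per-second rate over counter samples (hypothetical helper).
/// A decrease between two samples is treated as a counter reset, so the
/// increase for that step is the new value itself.
/// Returns `None` if there are fewer than two samples or no time elapsed.
fn simple_rate(samples: &[Sample]) -> Option<f64> {
    if samples.len() < 2 {
        return None;
    }
    let elapsed = samples[samples.len() - 1].0 - samples[0].0;
    if elapsed <= 0.0 {
        return None;
    }
    let increase: f64 = samples
        .windows(2)
        .map(|pair| {
            let (prev, curr) = (pair[0].1, pair[1].1);
            // After a reset the counter restarted from 0, so `curr` is the increase.
            if curr >= prev { curr - prev } else { curr }
        })
        .sum();
    Some(increase / elapsed)
}
```

For samples (0 s, 10) and (15 s, 40), it returns Some(2.0), that is, 2 requests per second.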

Conclusion

In conclusion, monitoring an Axum app with Prometheus and Grafana is a straightforward process. By following the steps in this article, you can install Prometheus and Grafana, add Prometheus as a data source, create a dashboard, expose your app's metrics with the metrics_exporter_prometheus crate, and configure Prometheus to scrape them. With this setup, you can monitor your Axum app's request counts and latencies and make data-driven decisions to optimize its performance. The source code is available on GitHub, and the article also provides references to additional resources for further learning.

Thank you for taking the time to read this article.

If you have any recommendations about other packages, architectures, how to improve my code, my English, or anything else, please leave a comment or contact me through Twitter or LinkedIn.

The source code is here.

References


I am a backend developer from Venezuela. I enjoy writing tutorials about open-source projects I use and find interesting, mostly about Python, Go, and Rust.