Invoice Processing with OCR using Google Vision API and GPT-4

rajashekar vt

This blog will guide you through using Google Vision to extract text from invoice images and PDFs. You will also learn to extract their corresponding entities with the help of GPT-4. By the end of this guide, you'll have a thorough understanding of Google Vision and GPT-4 for Invoice Processing.

Introduction

Invoice processing is an essential task for any business, but it can also be time-consuming and error-prone. With large volumes of invoices coming in daily, manual processing becomes challenging and can result in significant delays, inaccuracies, and costs. This is where technology can help. By combining Google Vision for text extraction with GPT-4 for entity extraction, businesses can improve the accuracy, speed, and efficiency of their invoicing workflow.

Entity Extraction in Invoice Processing

Entity extraction plays a crucial role in invoice processing. It is the process of automatically extracting relevant information from invoices and transforming it into structured data. This structured data can then be used for further processing, such as data entry into an accounting system or analysis. By automating the entity extraction process, businesses can significantly improve the speed, accuracy, and efficiency of their invoice processing.
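To make "structured data" concrete, here is a minimal sketch of what an extracted invoice could look like once it is turned into a typed record and serialized to JSON. The class and field names are assumptions chosen for illustration, and the values are taken from the sample invoice used later in this post; a real accounting system will dictate its own schema.

```python
from dataclasses import dataclass, field, asdict
import json


@dataclass
class LineItem:
    description: str
    quantity: int
    unit_price: float
    total: float


@dataclass
class Invoice:
    # Hypothetical schema: adapt the fields to your own downstream system
    invoice_number: str
    invoice_date: str
    company_name: str
    total: float
    line_items: list = field(default_factory=list)


def invoice_to_json(invoice: Invoice) -> str:
    """Serialize an Invoice (including nested line items) to a JSON string."""
    return json.dumps(asdict(invoice), indent=2)


example = Invoice(
    invoice_number="INVO-005",
    invoice_date="06/10/2021",
    company_name="ABC Company",
    total=425.0,
    line_items=[LineItem("Sink", 2, 100.0, 200.0)],
)
print(invoice_to_json(example))
```

A record like this can be written to a database or posted to an accounting API without any further text parsing.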

Google Vision For Invoice Processing

Google Vision is a powerful tool for invoice processing that uses machine learning algorithms to extract data from invoices automatically. With Google Vision, businesses can streamline their invoice processing workflow and reduce the time and effort required to manage invoices manually.

It is designed to automatically recognize and extract text from various document types, including invoices. The technology is highly accurate and can even identify and extract information from scanned documents, making it ideal for businesses that process a large volume of invoices.

To use Google Vision for invoice processing, businesses send their invoice images or PDFs to the Vision API. The service runs OCR on each document and returns the detected text, which can then be used for further processing, such as entity extraction, data entry into an accounting system, or analysis.

Here are two methods for processing invoices with Google Vision.

Before you follow the steps below, make sure the Google Cloud Vision client library is installed on your system.

    pip install google-cloud-vision

Once the library is installed, you can follow either method.

Invoice Processing as an Image

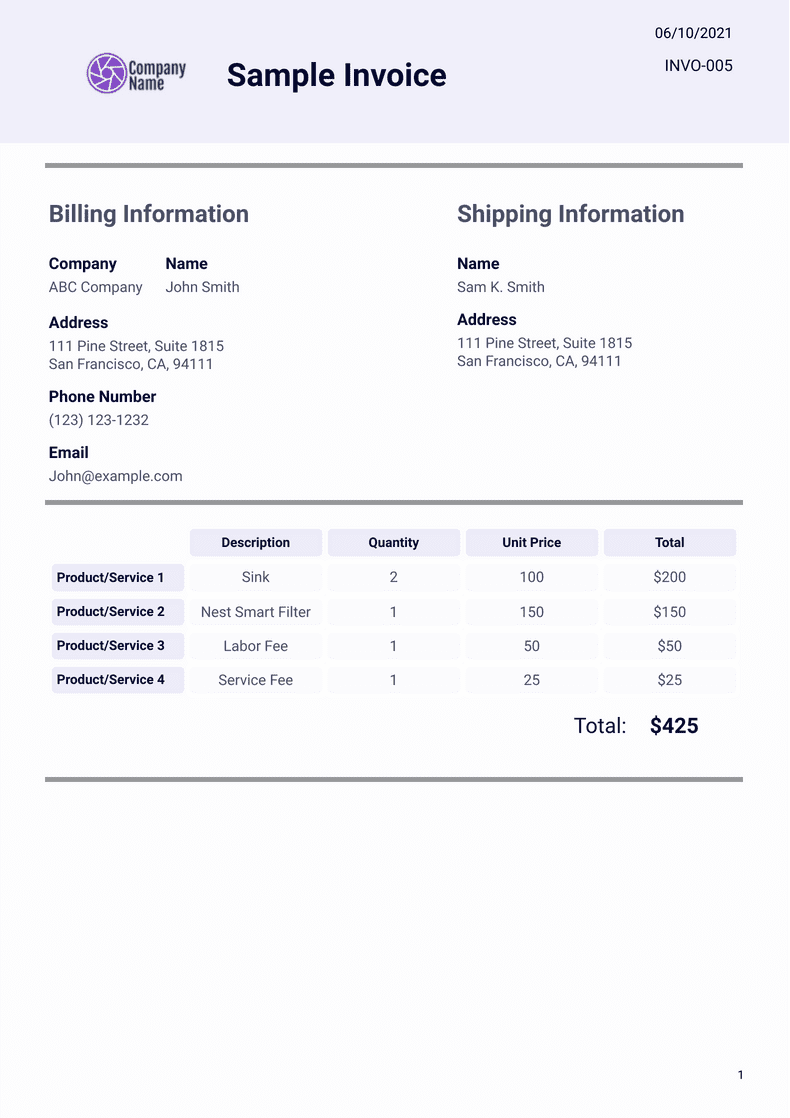

In this method, we are uploading an image file to get OCR results with Google Vision API.

    # Import the necessary libraries
    import io
    import json
    import os

    from google.cloud import vision

Importing libraries: The code begins by importing the modules it needs: os, io, json, and the Google Cloud Vision client module google.cloud.vision.

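    # Authorize the client: point the library at your service account key file
    os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = r"path/to/your-service-account-key.json"

Set up Google Cloud API credentials: The code sets the GOOGLE_APPLICATION_CREDENTIALS environment variable to the path of the JSON key file that contains your Google Cloud credentials. Note that the value is a file path, not an API key string; the path shown above is a placeholder.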
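A wrong path in this variable tends to surface later as a confusing authentication error, so it can help to check it up front. The following is a minimal sketch using only the standard library; the helper name `credentials_file_ok` is hypothetical and not part of any Google library.

```python
import os


def credentials_file_ok(env_var: str = "GOOGLE_APPLICATION_CREDENTIALS") -> bool:
    """Return True if env_var is set and points to an existing file."""
    key_path = os.environ.get(env_var, "")
    return bool(key_path) and os.path.isfile(key_path)


if not credentials_file_ok():
    print("GOOGLE_APPLICATION_CREDENTIALS is unset or does not point to a file")
```

This only confirms that the file exists; it does not verify that the key inside it is valid.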

    client = vision.ImageAnnotatorClient()

Initialize a Vision API client: The code creates a Vision API client by calling the vision.ImageAnnotatorClient() constructor.

    google_ocr_dict = {}

    def detectText(img):
        with io.open(img, 'rb') as image_file:
            content = image_file.read()

        image = vision.Image(content=content)
        response = client.text_detection(image=image)
        texts = response.text_annotations

        text_num = 0
        google_ocr_dict[text_num] = {}

        for text in texts:
            # Create a sub-dictionary for each block of text
            google_ocr_dict[text_num] = {}

            # Get the coordinates of the bounding box around the text
            vertices = [[vertex.x, vertex.y]
                        for vertex in text.bounding_poly.vertices]

            # Add the text and its coordinates to the dictionary
            google_ocr_dict[text_num]['text'] = text.description
            google_ocr_dict[text_num]['coords'] = vertices

            # Increment the text number
            text_num += 1

Define an OCR Function: The detectText function takes an image file path as input and returns the text extracted from the image.

Read the image file: The function reads the image file using the io.open() method and reads the contents of the file using the read() method.

Create a Vision API image object: The function creates a Vision API image object using the vision.Image(content=content) method.

Perform OCR using the Vision API: The function performs OCR on the image using the client.text_detection(image=image) method, which returns the OCR results in a response object.

Extract text and bounding box coordinates: The function then extracts the text and bounding box coordinates for each detected text element using a for loop and saves them in a dictionary named google_ocr_dict.
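The Vision API can report a failure inside the response object itself, so it is worth checking `response.error.message` before reading `text_annotations`. Here is a minimal sketch of such a check. The helper name is hypothetical, and the `SimpleNamespace` objects are stand-ins that only mimic the `error.message` attribute so the example runs without calling the API.

```python
from types import SimpleNamespace


def raise_on_vision_error(response) -> None:
    """Raise RuntimeError if the response carries a non-empty error message."""
    message = response.error.message
    if message:
        raise RuntimeError(f"Vision API request failed: {message}")


# Stand-in objects that only mimic the `error.message` attribute
ok_response = SimpleNamespace(error=SimpleNamespace(message=""))
bad_response = SimpleNamespace(error=SimpleNamespace(message="Bad image data"))

raise_on_vision_error(ok_response)  # passes silently
try:
    raise_on_vision_error(bad_response)
except RuntimeError as exc:
    print(exc)
```

In detectText, a call like this would sit right after `client.text_detection(image=image)`.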

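        # Save the OCR results to a JSON file
        with open("processed_image_new.json", "w") as json_file:
            json.dump(google_ocr_dict, json_file, indent=4)

        print("Created processed_image_new.json using Google OCR")

        # The first annotation holds the full detected text
        return google_ocr_dict[0].get("text", "").replace("\n", " ")

Save OCR results to JSON: The function saves the OCR results to a JSON file using the json.dump() method, then returns the full detected text (the first annotation) with newlines replaced by spaces.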
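One detail to keep in mind when reading that JSON file back: JSON object keys are always strings, so the integer keys of google_ocr_dict come back as "0", "1", and so on. The sketch below shows the round trip on a small hand-written dictionary of the same shape; the sample values are made up for illustration.

```python
import json

# Same shape as google_ocr_dict: integer keys mapping to text and coordinates
ocr_results = {
    0: {"text": "Total: $425", "coords": [[10, 10], [90, 10], [90, 30], [10, 30]]},
}

# Round-trip through JSON, as json.dump followed by json.load would do
loaded = json.loads(json.dumps(ocr_results))

print(list(loaded.keys()))  # the keys come back as strings

# Convert the keys back to integers to restore the original shape
restored = {int(key): value for key, value in loaded.items()}
```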

    FILE_NAME = "blog_image.png"
    FOLDER_PATH = "./Images/"

    text = detectText(os.path.join(FOLDER_PATH, FILE_NAME))

Overall, this code uses the Google Cloud Vision API to extract text from an image and records each detected text element together with its bounding box coordinates, making it easier to process and analyze the text.

Generated OCR Text:

    'Company Name Billing Information Company ABC Company Address 111 Pine '
    'Street, Suite 1815 San Francisco, CA, 94111 Phone Number (123) 123-1232 '
    'Email Name John Smith John@example.com Sample Invoice Product/Service 1 '
    'Product/Service 2 Product/Service 3 Product/Service 4 Description Sink Nest '
    'Smart Filter Labor Fee Service Fee Quantity 2 1 1 1 Shipping Information '
    'Name Sam K. Smith Address 111 Pine Street, Suite 1815 San Francisco, CA, '
    '94111 Unit Price 100 150 50 06/10/2021 INVO-005 25 Total $200 $150 $50 $25 '
    'Total: $425 1'

This raw output is hard to interpret on its own because labels and values are interleaved, but once we pass it on to GPT-4, we get well-structured results.
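The bounding box coordinates saved in google_ocr_dict offer another way to recover some of the layout. As a rough sketch, words can be grouped into rows by the y coordinate of their first vertex. The function name, the tolerance of 10 pixels, and the sample data are all assumptions for illustration, and the approach assumes upright text where the first vertex is the top-left corner.

```python
def group_into_rows(ocr_dict, y_tolerance=10):
    """Group word annotations into rows by the y coordinate of their first vertex.

    Entry 0 is skipped because it holds the full text rather than a single word.
    """
    words = [entry for key, entry in ocr_dict.items() if key != 0 and entry]
    # Sort top-to-bottom, then left-to-right, using the first vertex
    words.sort(key=lambda w: (w["coords"][0][1], w["coords"][0][0]))

    rows = []
    for word in words:
        y = word["coords"][0][1]
        if rows and abs(y - rows[-1]["y"]) <= y_tolerance:
            rows[-1]["words"].append(word)
        else:
            rows.append({"y": y, "words": [word]})

    # Within each row, order the words left-to-right and join them
    return [
        " ".join(w["text"] for w in sorted(row["words"], key=lambda w: w["coords"][0][0]))
        for row in rows
    ]


# Made-up sample in the same shape as google_ocr_dict
sample = {
    0: {"text": "Sink 2 $200\nLabor $50", "coords": [[10, 10], [340, 10], [340, 68], [10, 68]]},
    1: {"text": "Sink", "coords": [[10, 12], [50, 12], [50, 28], [10, 28]]},
    2: {"text": "2", "coords": [[150, 11], [160, 11], [160, 27], [150, 27]]},
    3: {"text": "$200", "coords": [[300, 10], [340, 10], [340, 26], [300, 26]]},
    4: {"text": "Labor", "coords": [[10, 50], [60, 50], [60, 66], [10, 66]]},
    5: {"text": "$50", "coords": [[300, 52], [335, 52], [335, 68], [300, 68]]},
}
print(group_into_rows(sample))
```

Skewed scans or multi-column layouts would need something more robust than a fixed tolerance, which is one reason handing the text to GPT-4 is attractive.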

Invoice Processing as a PDF Document

This code performs Optical Character Recognition (OCR) on a PDF document using the Google Cloud Vision API to extract text from the document.

    # Import the necessary libraries
    import io
    import os

    import PyPDF2
    from google.cloud import vision

Importing Necessary Libraries: os, io, PyPDF2, and the Google Cloud Vision client module google.cloud.vision are imported.

    os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = r"path/to/your-service-account-key.json"

Set up Google Cloud API credentials: The code sets the GOOGLE_APPLICATION_CREDENTIALS environment variable to the path of the JSON key file that contains your Google Cloud credentials. The path shown above is a placeholder.

    client = vision.ImageAnnotatorClient()

Initialize a Vision API client: The code creates a Vision API client by calling the vision.ImageAnnotatorClient() constructor.

    def google_pdf_ocr(document):
        # Supported mime_type: application/pdf, image/tiff, image/gif
        mime_type = "application/pdf"

        with io.open(document, "rb") as f:
            content = f.read()

        input_config = {"mime_type": mime_type, "content": content}
        features = [{"type_": vision.Feature.Type.DOCUMENT_TEXT_DETECTION}]

        # Use PyPDF2 to find out how many pages the document has
        pdf_reader = PyPDF2.PdfReader(document)
        num_pages = len(pdf_reader.pages)

        # Split the 1-based page numbers into batches of at most 5 pages
        num_pages = num_pages + 1
        pages = [[i for i in range(j, min(j + 5, num_pages), 1)] for j in range(1, num_pages, 5)]

        ocr_text = {}

        for batch in pages:
            requests = [{"input_config": input_config, "features": features, "pages": batch}]
            response = client.batch_annotate_files(requests=requests)
            response = response.responses[0].responses

            page_no = 0
            for image_response in response:
                ocr_text1 = image_response.full_text_annotation.text
                ocr_text["Page_" + str(batch[page_no])] = ocr_text1
                page_no += 1

        return ocr_text  # returns the OCR text as a dictionary

Define an OCR Function: The google_pdf_ocr function takes a pdf file path as input and returns the extracted text in the form of a dictionary where the key is the page number and the value is the OCR text.

Read the PDF File: The MIME type of the input document is set to "application/pdf" and the content of the file is read using the io.open() method. We read the document using PyPDF2 to obtain the number of pages in the document for further processing.

Performing OCR on PDF File: The OCR is performed on the PDF document using the client.batch_annotate_files() method, which takes a list of requests. Each request specifies the input configuration, features, and pages to be processed. In this code, the pages of the document are split into batches of 5 pages and OCR is performed on each batch. The OCR results are saved in a dictionary named ocr_text, where the key is the page number and the value is the extracted text.
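The page batching is the least obvious line in the function, so here it is pulled out into a small standalone helper. This is a sketch with a hypothetical function name; the default batch size of 5 mirrors the value used in google_pdf_ocr.

```python
def batch_pages(num_pages: int, batch_size: int = 5) -> list:
    """Split the 1-based page numbers 1..num_pages into consecutive batches."""
    return [
        list(range(start, min(start + batch_size, num_pages + 1)))
        for start in range(1, num_pages + 1, batch_size)
    ]


print(batch_pages(12))
# [[1, 2, 3, 4, 5], [6, 7, 8, 9, 10], [11, 12]]
```

Each inner list is what gets passed as the "pages" value of one request.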

    text = google_pdf_ocr("Invoice.pdf")

Finally, the google_pdf_ocr() function is called with the file path of the PDF document as the input, and the extracted text is saved in a variable named text.

Generated OCR Text:

    {'Page_1': 'Company Name Billing Information Company ABC Company Address 111 Pine '
               'Street, Suite 1815 San Francisco, CA, 94111 Phone Number (123) 123-1232 '
               'Email Name John Smith John@example.com Sample Invoice Product/Service 1 '
               'Product/Service 2 Product/Service 3 Product/Service 4 Description Sink Nest '
               'Smart Filter Labor Fee Service Fee Quantity 2 1 1 1 Shipping Information '
               'Name Sam K. Smith Address 111 Pine Street, Suite 1815 San Francisco, CA, '
               '94111 Unit Price 100 150 50 06/10/2021 INVO-005 25 Total $200 $150 $50 $25 '
               'Total: $425 1'}

Since the PDF used here has a single page, only one key-value pair is generated. If your document has multiple pages, you will see one key-value pair per page, each mapping a page to its OCR text.
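For a multi-page invoice you will often want the pages back as a single string in page order. Sorting the keys alphabetically would put "Page_10" before "Page_2", so the sketch below sorts on the number after the underscore. The function name and the sample dictionary are made up for illustration; only the "Page_N" key format comes from the function above.

```python
def join_pages(ocr_text: dict, separator: str = "\n\n") -> str:
    """Concatenate page texts in numeric page order."""

    def page_number(key: str) -> int:
        # Keys look like "Page_3"; take the number after the underscore
        return int(key.split("_", 1)[1])

    return separator.join(ocr_text[key] for key in sorted(ocr_text, key=page_number))


pages = {"Page_10": "last page", "Page_2": "second page", "Page_1": "first page"}
print(join_pages(pages, separator=" | "))
```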

Benefits of Using Google Vision

Increased speed and efficiency: By automating text extraction, businesses can significantly improve the speed and efficiency of their invoice processing.

Improved accuracy: Google Vision's machine learning algorithms are highly accurate, reducing the risk of errors in invoice processing.

Reduced manual effort: Automated extraction significantly reduces the manual effort required to process invoices.

Enhancing Entity Extraction with GPT-4

Entity extraction is the process of identifying and extracting specific information or entities from a large text corpus. In the context of invoice processing, entity extraction can help in automatically extracting information like invoice number, vendor name, invoice date, item description, and amount, among others. This information can then be used for further processing, such as data validation, categorization, and accounting.
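As an example of the data validation step mentioned above, extracted line items can be checked for internal consistency before they reach an accounting system. The sketch below is one possible check, with hypothetical function and key names; the numbers are those of the sample invoice used in this post. It uses whole-number amounts, so for invoices with cents a decimal type would be a safer choice than floats.

```python
def validate_invoice(line_items, invoice_total):
    """Return a list of problems found; an empty list means all checks pass."""
    problems = []
    for item in line_items:
        expected = item["quantity"] * item["unit_price"]
        if expected != item["total"]:
            problems.append(f"{item['item']}: expected {expected}, found {item['total']}")
    line_sum = sum(item["total"] for item in line_items)
    if line_sum != invoice_total:
        problems.append(f"Line totals sum to {line_sum}, invoice total is {invoice_total}")
    return problems


items = [
    {"item": "Sink", "quantity": 2, "unit_price": 100, "total": 200},
    {"item": "Nest Smart Filter", "quantity": 1, "unit_price": 150, "total": 150},
    {"item": "Labor Fee", "quantity": 1, "unit_price": 50, "total": 50},
    {"item": "Service Fee", "quantity": 1, "unit_price": 25, "total": 25},
]
print(validate_invoice(items, 425))  # [] because every check passes
```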

GPT-4, or Generative Pre-trained Transformer 4, is a large language model developed by OpenAI. It has been trained on a massive amount of text data, making it capable of understanding and generating human-like text. This capability can be leveraged to improve the accuracy of entity extraction: with GPT-4, it is possible to extract entities from unstructured or semi-structured invoices with high accuracy and more flexibly than with traditional entity extraction methods.

Traditional entity extraction methods, such as rule-based or machine learning-based systems, require a significant amount of manual effort to create and maintain rules or models. Moreover, these methods are limited in their ability to extract entities from new or unseen invoice formats. GPT-4-based entity extraction, on the other hand, can be more flexible and scalable, as it does not rely on invoice-specific rules or custom-trained models. Its behavior can also be adjusted by refining the prompt rather than by retraining, which makes it a good fit for entity extraction in invoice processing.

    # Import the OpenAI library and authenticate with your OpenAI key
    # Note: openai.ChatCompletion, used below, belongs to openai package versions before 1.0
    import openai

    openai.api_key = "Your API Key"

Import OpenAI Library and Authenticate with OpenAI Key: As with GPT-3, we import the OpenAI library and provide our API key to authenticate with OpenAI.

    # Define the system role
    system_role = "Extract entities and their values as key-value pairs from the provided OCR text and separate them by a new line."

Define System Role: This is a very important part of defining our use case. We have to define a role for the system so that the model tunes its behavior accordingly. The system message sets the task for the whole conversation, and the chat format lets you send earlier messages along with each request so the model can use them as context for domain-specific tasks. This ability to steer the model is part of what makes GPT-4 more reliable than GPT-3 for such tasks.
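The system role and the OCR text end up as two entries in a list of messages. Here is a small sketch of a helper that builds that list; the function name is hypothetical, and the structure (a "system" message followed by a "user" message) is the one used in the request in this post. Nothing here calls the API.

```python
def build_messages(system_role: str, ocr_text: str) -> list:
    """Build the chat message list: a system message, then the OCR text as the user message."""
    return [
        {"role": "system", "content": system_role},
        {"role": "user", "content": ocr_text.strip()},
    ]


messages = build_messages(
    "Extract entities and their values as key-value pairs from the provided OCR text "
    "and separate them by a new line.",
    "  Total: $425 1  ",
)
print(messages[1]["content"])
```

Keeping this in one place makes it easy to reuse the same system role for both the image and the PDF output.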

    # Get the response
    ocr_text = "06/10/2021 K Company INVO-005 Name Sample Invoice Billing Information Shipping Information Company Name Name ABC Company John Smith Sam K. Smith Address Address 111 Pine Street, Suite 1815 111 Pine Street, Suite 1815 San Francisco, CA, 94111 San Francisco, CA, 94111 Phone Number (123) 123-1232 Email John@example.com Description Quantity Unit Price Total Product/Service 1 Sink 2 100 $200 Product/Service 2 Nest Smart Filter 1 150 $150 Product/Service 3 Labor Fee 1 50 $50 Product/Service 4 Service Fee 1 25 $25 Total: $425 1"

    response = openai.ChatCompletion.create(
        model="gpt-4",
        messages=[
            {"role": "system", "content": system_role},
            {"role": "user", "content": ocr_text},  # pass the OCR text
        ],
    )

    # Print the text of the model's reply
    print(response["choices"][0]["message"]["content"])

Generated Output:

    Entities:

    Date: 06/10/2021
    Invoice Number: INVO-005
    Company Name: K Company
    Name: Name Sample Invoice

    Billing Information:
    Company Name: ABC Company
    Contact Name: John Smith
    Address: 111 Pine Street, Suite 1815
    City: San Francisco
    State: CA
    Zip Code: 94111
    Phone Number: (123) 123-1232
    Email: John@example.com

    Shipping Information:
    Name: Sam K. Smith
    Address: 111 Pine Street, Suite 1815
    City: San Francisco
    State: CA
    Zip Code: 94111

    Description: Product/Service 1
    Item: Sink
    Quantity: 2
    Unit Price: 100
    Total: $200

    Description: Product/Service 2
    Item: Nest Smart Filter
    Quantity: 1
    Unit Price: 150
    Total: $150

    Description: Product/Service 3
    Item: Labor Fee
    Quantity: 1
    Unit Price: 50
    Total: $50

    Description: Product/Service 4
    Item: Service Fee
    Quantity: 1
    Unit Price: 25
    Total: $25

    Total: $425
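Since the model was asked for key-value pairs separated by new lines, its reply can be turned back into Python data with a few lines of parsing. The following is a rough sketch with a hypothetical function name; it returns a list of tuples rather than a dictionary because keys such as "Total" appear more than once. The sample string is a shortened copy of the output above.

```python
def parse_key_value_lines(output: str) -> list:
    """Parse "Key: Value" lines into (key, value) tuples, preserving order.

    Lines without a value (such as section headings ending in a colon) are
    returned with an empty string as the value.
    """
    pairs = []
    for line in output.splitlines():
        line = line.strip()
        if not line or ":" not in line:
            continue
        # Split on the first colon only, so values may contain colons
        key, _, value = line.partition(":")
        pairs.append((key.strip(), value.strip()))
    return pairs


sample_output = """Date: 06/10/2021
Invoice Number: INVO-005
Billing Information:
Company Name: ABC Company
Total: $200
Total: $425"""

for key, value in parse_key_value_lines(sample_output):
    print(f"{key!r} -> {value!r}")
```

The model's formatting is not guaranteed to be identical from one call to the next, so treat a parser like this as a starting point and validate what it returns.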

Google Vision has proven to be an efficient tool for invoice processing, as it can extract text from invoices with high accuracy and at a faster rate than manual methods. The integration of GPT-4 further improves the entity extraction step by adding human-like language understanding and the ability to recognize complex entities.

With these advancements in technology, the future of invoice processing looks bright. Combining Google Vision and GPT-4 can streamline invoice processing, making it faster, more efficient, and more accurate.

In conclusion, using Google Vision and GPT-4 together can transform invoice processing, but the right model depends on the use case at hand. If the task is small, it may be better to stick to GPT-3, since GPT-4 can be quite expensive compared to its predecessor. But if accuracy and precision are what you are looking for, then GPT-4 is the right one for the job.

If you're interested in invoice processing, you might want to read our previous post, where we compared Amazon Textract and GPT-4 for text extraction.
