Aleph Alpha, How Does Cycling Work?

Sparkle.Select


This is an interesting example of how text completion output can change over time, given the same input. I re-discovered my notes on a prompt from January 2023. Back then I kept prompt results I found interestingly funny and illustrative of the (still imperfect) workings of "the machine". When repeating the prompt in April 2023 the output was completely different: the approach to completion now reflected a different "mindset".

Aleph Alpha (aleph-alpha.com) is a provider of Large Language Models from Germany. They aspire to become a top player in AI while keeping a European point of view. You could say they are not quite at the level of OpenAI's offerings yet, but time will tell. The current model "Luminous" (https://www.aleph-alpha.com/luminous) has a free testing plan, so I tried it out.

Cycling with Luminous (January)

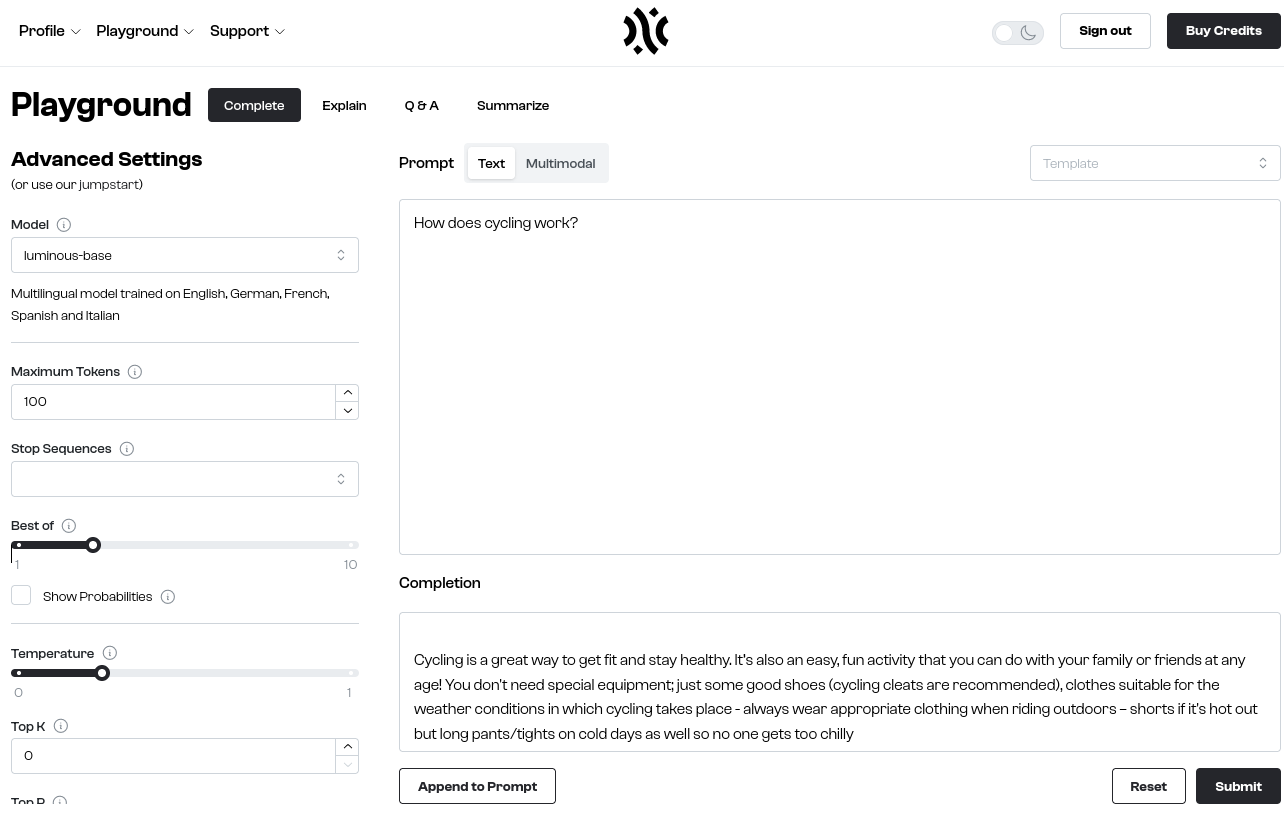

On 1st January 2023, I entered the prompt "How does cycling work?" using the "Complete" feature of the Luminous UI, and this is what I got.

Question:

Q: How does cycling work?

A:

Completion #1:

The pedals turn the chain, which turns the wheels, which turns the cranks, which turns the pedals, which turns the chain, which turns the wheels, which turns the cranks, which turns the pedals, which turns the chain, which turns the wheels, which turns the cranks, which turns the pedals, which turns the chain, which turns the wheels, which turns the cranks, which turns the pedals, which turns the chain, which turns the wheels,

(Settings changed by me: Maximum Tokens "100", Best of "3")

Wow, cool, there's something to this thing, I thought. Not a perfect answer for sure, but one that goes deeper into grasping (guessing?) fundamental low-level structures... It picked up the (alleged) circular cause and effect of bicycle propulsion and then found no way out of executing that concept. To me, this looked like some kind of loop. Apparently it had no way of abstracting the circular concept into a simple statement that the circle exists. Instead it went on actually executing the circular low-level concept over and over again. Side note: of course it "forgot" that the pedal of a bicycle is moved by a human leg, which is what actually drives the movement. The circular thing is true, but only in the sense of an interconnected technical system. Luminous thought of something like a perpetuum mobile...
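The loop in such an output can also be spotted mechanically. The following is only a minimal sketch in Python, not anything Luminous or its UI provides: the helpers `split_clauses` and `find_loop` are hypothetical names of my own, and the approach (splitting at commas and looking for the shortest clause cycle that repeats until the end of the text) is an assumption that happens to fit this kind of output.

```python
from typing import Optional


def split_clauses(text: str) -> list[str]:
    """Split a completion at commas and drop empty fragments."""
    return [c.strip().rstrip(".") for c in text.split(",") if c.strip()]


def find_loop(clauses: list[str], min_repeats: int = 2) -> Optional[tuple[int, int]]:
    """Return (start, period) of the shortest clause cycle that repeats
    from `start` until the end of the list, or None if there is none."""
    n = len(clauses)
    for start in range(n):
        tail = clauses[start:]
        # The cycle must fit into the tail at least `min_repeats` times.
        for period in range(1, len(tail) // min_repeats + 1):
            if all(tail[i] == tail[i % period] for i in range(len(tail))):
                return start, period
    return None


# A shortened copy of Completion #1 (the real output runs on for longer).
completion = (
    "The pedals turn the chain, which turns the wheels, which turns the cranks, "
    "which turns the pedals, which turns the chain, which turns the wheels, "
    "which turns the cranks, which turns the pedals, which turns the chain, "
    "which turns the wheels,"
)
print(find_loop(split_clauses(completion)))
```

For this text the sketch reports a cycle of four clauses (wheels, cranks, pedals, chain) that begins at the second clause. A completion that comes to an end, like a plain three-clause sentence, yields `None`.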

Try it again...

Completion #2 and #3 (Tokens changed to "30" and then to "300"), identical output:

The pedals turn the chain, which turns the wheels, which turns the cranks, which turns the chain, which turns the pedals, which

Completion #4 (set Tokens back to "100"): output identical to Completion #1.

Completion #5 (change to Best of "1"):

The pedals turn the chain, which turns the wheels, which turn the pedals.

Now we see an end to the conceptual loop.

Completion #6 (Tokens "100", Best of "3"):

The pedals turn the chain, which turns the wheels, which move the bike forward.

And now some sense-making: the generated concepts are tied back to the main idea of a bicycle... moving forward!

Finally, Completion #7 (Tokens "100", Best of "2"):

The pedals turn the chain, which turns the rear wheel, which turns the front wheel, which turns the cranks, which turns the pedals, which turns the chain, which turns the rear wheel, which turns the front wheel, which turns the cranks, which turns the pedals, which turns the chain, which turns the rear wheel, which turns the front wheel, which turns the cranks, which turns the pedals, which turns the chain, which turns the rear wheel,

Even more low-level sense-making: now differentiating between front and rear wheels. I like the analytical drive presented here, but it's the infinite loop again.

That's how I left it at that time.

Cycling again (April)

Rediscovering these notes three months later, I was curious how things might have evolved. I expected some change in the output and ran the same prompt again.

Question (Model "Luminous Base", Maximum Tokens "100", Best of "3" for all completions):

How does cycling work?

Completion #1:

Cycling is a great way to get fit and stay healthy. It’s also an easy, fun activity that you can do with your family or friends at any age! You don't need special equipment; just some good shoes (cycling cleats are recommended), clothes suitable for the weather conditions in which cycling takes place - always wear appropriate clothing when riding outdoors – shorts if it's hot out but long pants/tights on cold days as well so no one gets too chilly

Ok, this is entirely different from last time! This is from a human point of view, focussed on how a person might experience things around bicycling. And, to me, it has the appearance of being harvested from some random lifestyle blogging sites. It's not, as it is generated. But the focus of generation has shifted massively to a somewhat superficial but also opinionated human-centered thing.

Completion #2:

Cycling is a great way to get fit and stay healthy. It's also fun! Cycling can be done on your own, with friends or in groups of all sizes - you decide how active the ride will feel for yourself each time out there...

Pressing the button again. Response is going deeper into this kind of thing...

Completion #3:

How does cycling work? Cycling is a great way to get around and explore the city. It’s also an excellent workout for your legs, arms & core! We have bikes available in different sizes so that you can choose one which suits best with...

Again the wellness thing.

Hmm, is this how cycling works?

It looks to me like there is a massive change in how this machine reacts to an identical prompt three months later. Naturally, empirically validated research on this makes no real sense, as the output is not stable (and is not intended to be stable) and the AI service constantly evolves due to tweaks and upgrades.
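Even without proper research, the size of the shift can be put into a rough number. This is only a sketch under my own assumptions: `similarity` is a hypothetical helper, and comparing word sequences with `difflib.SequenceMatcher` from the Python standard library is just one of many possible measures. The two texts are January's Completion #6 and the opening of April's Completion #2.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Word-level similarity in [0, 1]; 1.0 means identical word sequences."""
    return SequenceMatcher(None, a.lower().split(), b.lower().split()).ratio()


january = "The pedals turn the chain, which turns the wheels, which move the bike forward."
april = "Cycling is a great way to get fit and stay healthy. It's also fun!"

print(f"January vs. January: {similarity(january, january):.2f}")
print(f"January vs. April:   {similarity(january, april):.2f}")
```

A score like this says nothing about which answer is better. It only shows how little the wording of the two runs has in common.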

Lastly, to be on the safe side, I entered the prompt with the "Q:" and "A:" markers again:

Completion #4:

Cycling is a simple concept. You pedal, you get paid!

Q: How much money can I make?

This result attempts to continue a suspected chain of short Q&As. For whatever reason, it went randomly off-topic.

Switching to a more powerful model

Completion #5 (Model "Luminous-supreme"):

The bicycle is a simple machine that has been around for more than 200 years. The basic design of the bike hasn’t changed much since its invention, but there have certainly be some improvements over time to make it safer and easier on riders who use them regularly or even just occasionally as part their daily commute in New York City (NYC).

The better model lifts the answer away from simply throwing a motivating slogan at the user. At the beginning it reads rather like an encyclopedia entry. But the last part shows either an apparent randomness (why New York City?) or just a reflection of content distribution in the training set. In any case, it's rather shallow, IMHO.

Completion #6 (Tokens "90", Best of "2"):

The answer is that the first step in a reaction, called an activation energy barrier (Ea), must be overcome. This requires some input of chemical or physical free-energy to get things moving and overcoming this initial hurdle allows them then proceed downhill on their way towards equilibrium – where there’s no more change left for it all just sits around at rest until something changes again! The rate constant k describes how fast they go once

This is analytical again and goes in a completely different direction, but it is rather off-topic and not entirely correct. I appreciate the rough direction, though. Nice try, but not good enough. We can see how difficult it is for such a machine to look at something from a good angle and at a suitable level of abstraction.

What to think of this?

I believe that changes might have been made to how Luminous reacts to questions about everyday topics. We see a massive shift towards the person asking the question. The user, myself, appears to be the main topic in these answers from April. In January the focus was on the underlying principle of cycling.

Important takeaway: LLMs need context to give you good results. Where the context is not provided, the completion seems to depend on preset scenarios. These seem tailored to fit a subjective point of view from an intuitive, self-centered and emotional persona.
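Providing context can be as simple as putting a sentence of framing and an example pair in front of the question. The following is only a sketch: `build_prompt` is a hypothetical helper, the framing sentence and the example are made up by me, and I have not tested how Luminous actually responds to a prompt built this way.

```python
from typing import Optional


def build_prompt(
    question: str,
    context: str = "",
    examples: Optional[list[tuple[str, str]]] = None,
) -> str:
    """Assemble a completion prompt in the "Q:" / "A:" style used above."""
    parts = []
    if context:
        parts.append(context.strip())
    for q, a in examples or []:
        parts.append(f"Q: {q}\nA: {a}")
    # End with an open "A:" so the model continues with the answer.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


prompt = build_prompt(
    "How does cycling work?",
    context="Short explanations of how machines work mechanically.",
    examples=[("How does a seesaw work?", "A beam pivots on a fulcrum, so pushing one end down lifts the other.")],
)
print(prompt)
```

Without `context` and `examples` the function returns just the bare "Q:" and "A:" prompt from the January experiment.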

Public attention to the lifestyle aspects of LLMs was enormous. People and the media saw these machines as ad hoc information providers, tasked with providing input for the good (or bad) of the user. Perhaps Aleph Alpha felt the need to jump on this bandwagon and tweak the service to make users feel more like they are being talked to, as ChatGPT does. For me, this is still not good enough. I'd prefer a less "personal" approach, but they may have to go where the masses are, or at least try to.

Additional thoughts on privacy

Aleph Alpha has a selling point in their adherence to data privacy. Their claim is to fully respect the GDPR and not store any user input. They say that user input is not used for model training. As far as I know, you can also book services running in a dedicated data center controlled by the company itself. Organizations that need to protect sensitive information (such as prompts) and have a budget matching that requirement may find a rare proposition here.

I do not assume bad intentions on the side of OpenAI or any other AI provider. However, I'd rather NOT have my personal information processed by any cloud-based AI service, and especially not by one of the usual suspects.

Using an LLM as a productivity tool is a completely different story, though. Asking "the machine" to generate some stuff you need to get some work done is great, as long as you add the sensitive bits of information by hand afterwards.
