Create a Ledger Utilizing CockroachDB - Part II - Deployment

Kai Niemi

Kai Niemi

In this second part of a series about RoachBank, a full-stack financial accounting ledger running on CockroachDB, we will look at how to deploy the bank against a global, multi-regional CockroachDB cluster.

Cloud Deployment

The ledger provides a few convenience scripts for deploying to AWS, GCE and Azure using an internal tool called roachprod. This tool is free to use but at your own risk.

The provided scripts will do the following:

Provision a single-region or multi-region CockroachDB cluster

Deploy HAProxy on all client nodes

Deploy bank server and client JAR on all client nodes

Start the bank server on all client nodes

Enable regional-by-row and global tables for multi-region (if needed)

Prerequisites

Roachprod - a Cockroach Labs internal tool for ramping AWS/GCE/Azure VM clusters

You will need the AWS/GCE/AZ client SDK and an account.

Deployment Scripts

AWS Deployment

The $basedir/deploy/aws folder contains a few scripts for provisioning different cluster sizes in different regions. Let's look at the aws-multiregion-eu.sh which is a multi-region configuration spanning eu-west-1, eu-west-2 and eu-central-1.

#!/bin/bash

# Script for setting up a multi-region Roach Bank cluster using roachprod in either AWS or GCE.

# Configuration

########################

title="CockroachDB 3-region EU deployment"

# CRDB release version

releaseversion="v22.2.5"

# Number of node instances in total including clients

nodes="12"

# Nodes hosting CRDB

crdbnodes="1-9"

# Array of client nodes (must match size of regions)

clients=(10 11 12)

# Array of regions localities (must match zone names)

regions=('eu-west-1' 'eu-west-2' 'eu-central-1')

# AWS/GCE cloud (aws|gce)

cloud="aws"

# AWS/GCE region zones (must align with nodes count)

zones="\

eu-west-1a,\

eu-west-1b,\

eu-west-1c,\

eu-west-2a,\

eu-west-2b,\

eu-west-2c,\

eu-central-1a,\

eu-central-1b,\

eu-central-1c,\

eu-west-1a,\

eu-west-2a,\

eu-central-1a"

# AWS/GCE machine types

machinetypes="c5d.4xlarge"

# DO NOT EDIT BELOW THIS LINE

#############################

functionsdir="../common"

source "${functionsdir}/core_functions.sh"

main.sh

By the end of running this script, you would have an AWS provisioned 12-node (instances) cluster, out of which the nodes 10, 11 and 12 are hosting the bank application stack including HAProxy.

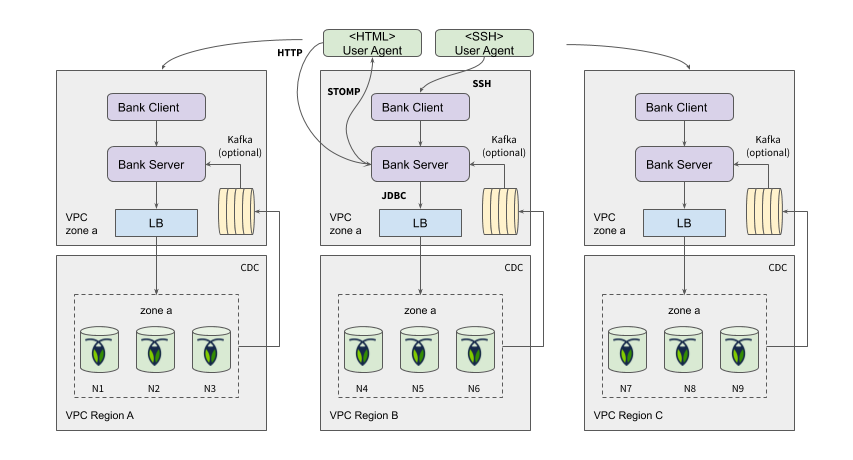

Something like in this diagram:

The setup script is interactive and each step will ask for confirmation. It's launched by this simple command:

./aws-multiregion-eu.sh

After the steps are completed, you should have a page automatically opened in your default browser along with the service landing page showing account boxes.

GCE Deployment

For GGE, the process is quite similar just using different regions and instance types.

For example:

cd deploy/gce

chmod +x *.sh

./gce-multiregion-eu.sh

Azure Deployment

The same goes for Azure, however, it only contains a single region provisioning script.

cd deploy/azure

chmod +x *.sh

./azure-singleregion.sh

Operating in Multi-Region

When the ledger is deployed in a multi-regional topology (like US-EU-APAC), the accounts and transactions need to be pinned/domiciled to each region for best performance.

This is done by using the regional-by-row table locality in CockroachDB. There's an explicit step in the setup script than executes the SQL statements below. This will provide for low read and write latencies in each region.

For the AWS multi-region example:

ALTER DATABASE roach_bank PRIMARY REGION "eu-central-1";

ALTER DATABASE roach_bank ADD REGION "eu-west-1";

ALTER DATABASE roach_bank ADD REGION "eu-west-2";

ALTER TABLE region SET locality GLOBAL;

ALTER TABLE account ADD COLUMN region crdb_internal_region AS (

CASE

WHEN city IN ('dublin','belfast','liverpool','manchester','glasgow') THEN 'eu-west-1'

WHEN city IN ('london','birmingham','leeds','amsterdam','rotterdam','antwerp','hague','ghent','brussels') THEN 'eu-west-2'

WHEN city IN ('berlin','hamburg','munich','frankfurt','dusseldorf','leipzig','dortmund','essen','stuttgart','stockholm','copenhagen','helsinki','oslo','riga','tallinn') THEN 'eu-central-1'

ELSE 'eu-central-1'

END

) STORED NOT NULL;

ALTER TABLE account SET LOCALITY REGIONAL BY ROW AS region;

ALTER TABLE transaction ADD COLUMN region crdb_internal_region AS (

CASE

WHEN city IN ('dublin','belfast','liverpool','manchester','glasgow') THEN 'eu-west-1'

WHEN city IN ('london','birmingham','leeds','amsterdam','rotterdam','antwerp','hague','ghent','brussels') THEN 'eu-west-2'

WHEN city IN ('berlin','hamburg','munich','frankfurt','dusseldorf','leipzig','dortmund','essen','stuttgart','stockholm','copenhagen','helsinki','oslo','riga','tallinn') THEN 'eu-central-1'

ELSE 'eu-central-1'

END

) STORED NOT NULL;

ALTER TABLE transaction SET LOCALITY REGIONAL BY ROW AS region;

ALTER TABLE transaction_item ADD COLUMN region crdb_internal_region AS (

CASE

WHEN city IN ('dublin','belfast','liverpool','manchester','glasgow') THEN 'eu-west-1'

WHEN city IN ('london','birmingham','leeds','amsterdam','rotterdam','antwerp','hague','ghent','brussels') THEN 'eu-west-2'

WHEN city IN ('berlin','hamburg','munich','frankfurt','dusseldorf','leipzig','dortmund','essen','stuttgart','stockholm','copenhagen','helsinki','oslo','riga','tallinn') THEN 'eu-central-1'

ELSE 'eu-central-1'

END

) STORED NOT NULL;

ALTER TABLE transaction_item SET LOCALITY REGIONAL BY ROW AS region;

When transactions are issued against accounts in these different cities, the read-and-write operations will be constrained to the home regions. For example, creating monetary transactions involving accounts in "stockholm" and "helsinki" will be serviced only by the 3 nodes in the region eu-central-1. Read operations will have local latency and write operations will have only one single roundtrip to the next closest region.

As an option to limit the amount of data and overhead of replicating cross regions, you could disable the non-voting replicas with placement restrictions. This would result in no replicas placed outside of the home regions with the consequence of higher latency for follower reads in the other regions.

The tradeoff is regional survival. To combine both regional survival and data domiciling with regional-by-row, you can use super-regions which is covered more in this post.

SET enable_multiregion_placement_policy=on;

ALTER DATABASE roach_bank PLACEMENT RESTRICTED;

Running a Global Workload

First, SSH to the first client machine which in the AWS example is node (aka instance) 10.

roachprod run:$CLUSTER:10

Next, start the bank client and type connect. It should print something like this:

C O C K R O A C H D B

──▄──▄────▄▀ ___ __ ___ __

───▀▄─█─▄▀▄▄▄ / _ \___ ___ _____/ / / _ )___ ____ / /__

▄██▄████▄██▄▀█▄ / , _/ _ \/ _ `/ __/ _ \ / _ / _ `/ _ \/ '_/

─▀▀─█▀█▀▄▀███▀ /_/|_|\___/\_,_/\__/_//_/ /____/\_,_/_//_/_/\_\

──▄▄▀─█──▀▄▄ bank-client (v2.0.1.BUILD-SNAPSHOT) powered by Spring Boot (v3.0.4)

Active profiles: ${spring.profiles.active}

15:30:37.219 INFO [main] [io.roach.bank.client.ClientApplication] Starting ClientApplication v2.0.1.BUILD-SNAPSHOT using Java 17.0.6 with PID 10267 (/home/ubuntu/bank-client.jar started by ubuntu in /home/ubuntu)

15:30:37.220 INFO [main] [io.roach.bank.client.ClientApplication] No active profile set, falling back to 1 default profile: "default"

15:30:38.414 INFO [main] [io.roach.bank.client.ClientApplication] Started ClientApplication in 1.564 seconds (process running for 2.095)

disconnected:$ connect

15:30:42.949 INFO [main] [io.roach.bank.client.command.Connect] Connecting to http://localhost:8090/api..

15:30:43.084 INFO [main] [io.roach.bank.client.command.Connect] Welcome to text-only Roach Bank. You are in a dark, cold lobby.

15:30:43.084 INFO [main] [io.roach.bank.client.command.Connect] Type help for commands.

localhost:$

Next, let's run some account transfers across the cities in the local region. First, we need to verify that the local gateway region is eu-west-1:

localhost:$ gateway-region

eu-west-1

Then start the transfers:

localhost:$ transfer --regions eu-west-1

If you look in the browser tab pointing at the regional bank service, you should see some effects on the accounts in that region. If you are not sure about the URL, use roachprod ip:

roachprod ip $CLUSTER:10-12 --external

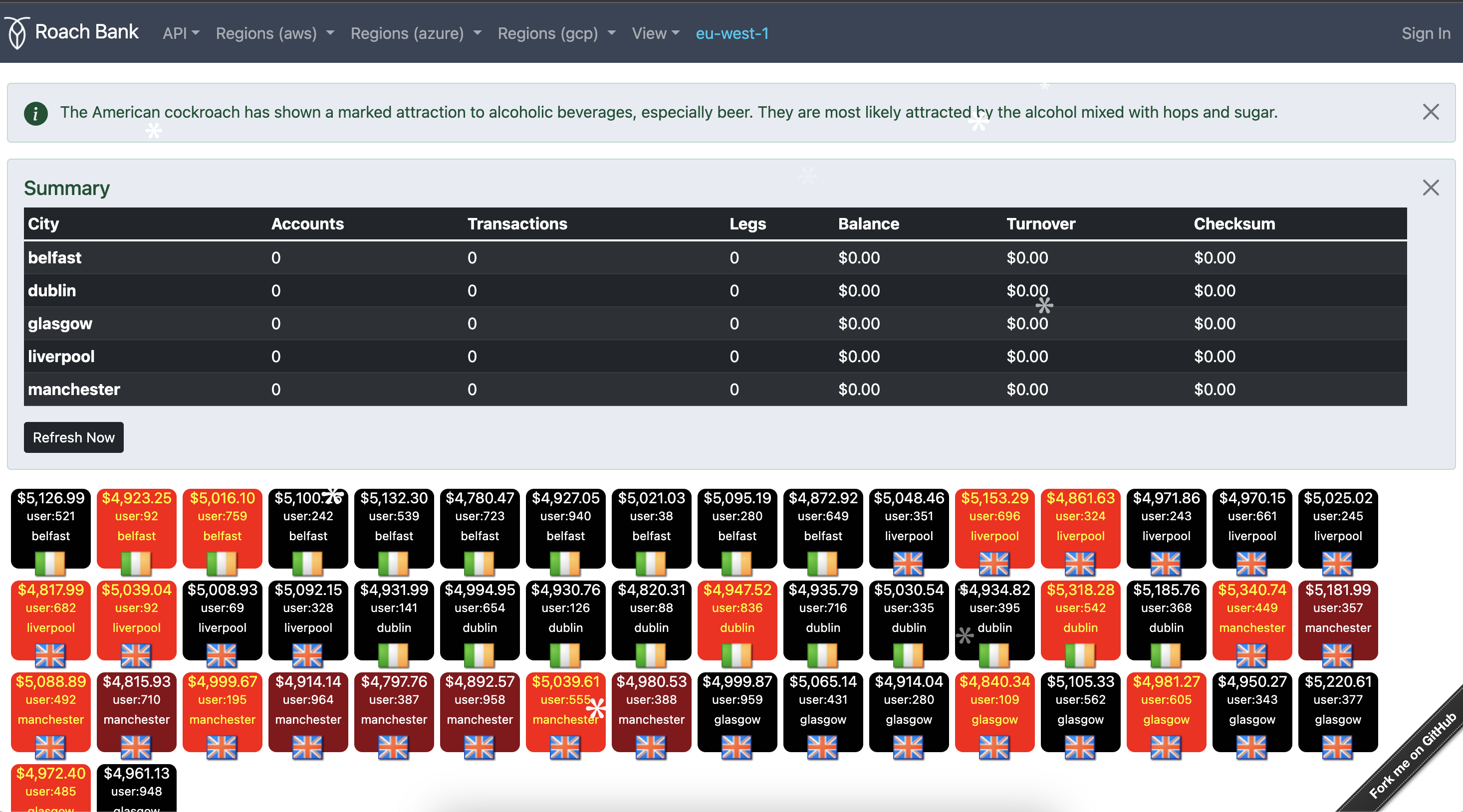

Pick the first IP and append port 8090 and you should see:

Note: For simplicity, the push events that update the balances are not broadcasted across regions, so you can only see effects at a regional level.

The transfer command runs with a very low volume by default but it can be ramped up with more concurrent threads and a higher selection of accounts to avoid contention. The low amount range reduce the risk of ending up with a negative balance causing aborts.

transfer --regions eu-west-1 --concurrency 10 --limit 1000 --amount 0.01-0.15

To run a 100% read-based workload we can use the balance command. This will start then concurrent workers per city in the given region and run point lookups.

balance --regions eu-west-1 --followerReads --concurrency 10 --limit 1000

Lastly, repeat the steps above for client nodes 11 and 12 so you end up with 3 concurrent clients and servers, one pair per region.

roachprod run:$CLUSTER:11

..

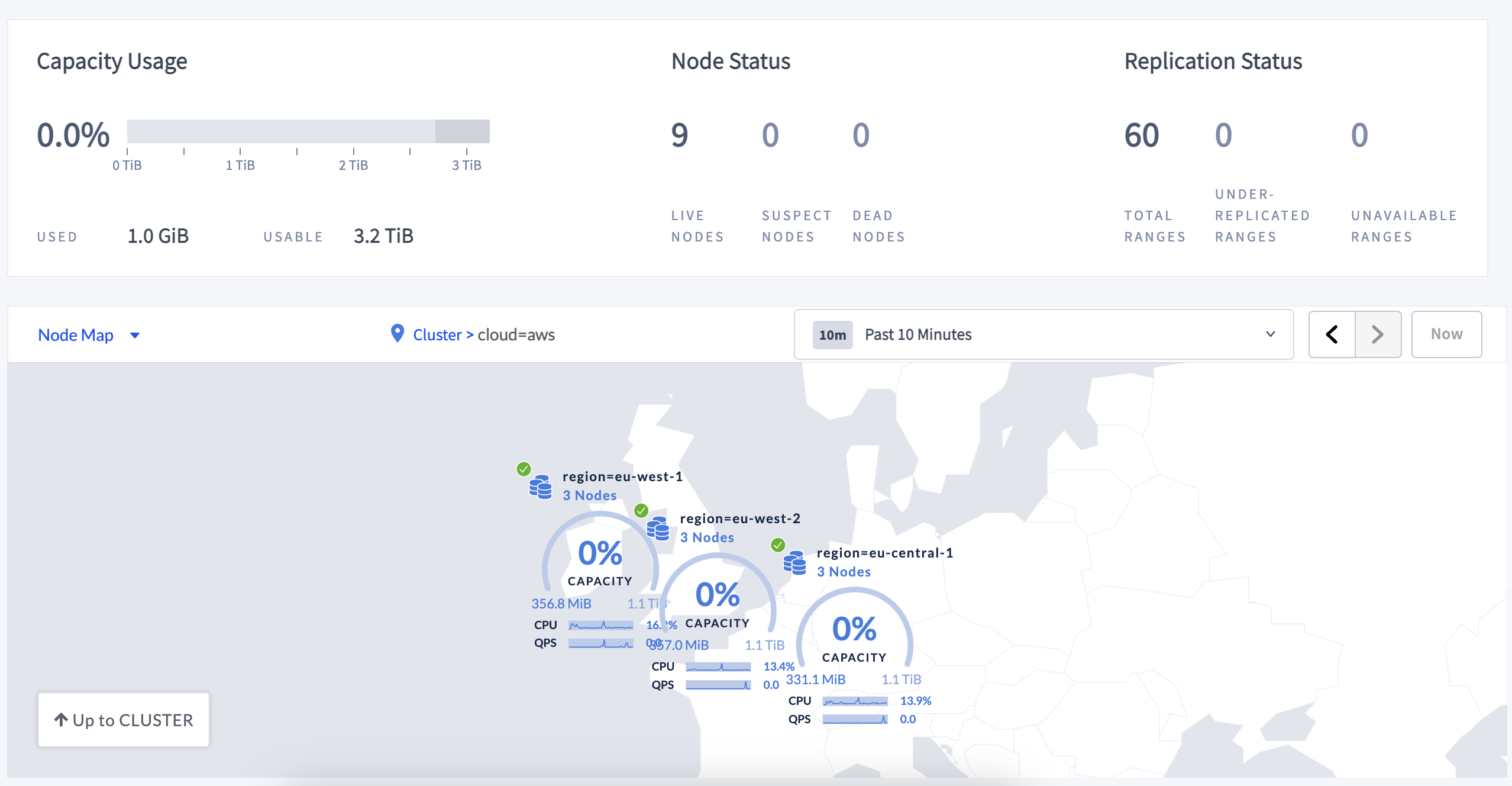

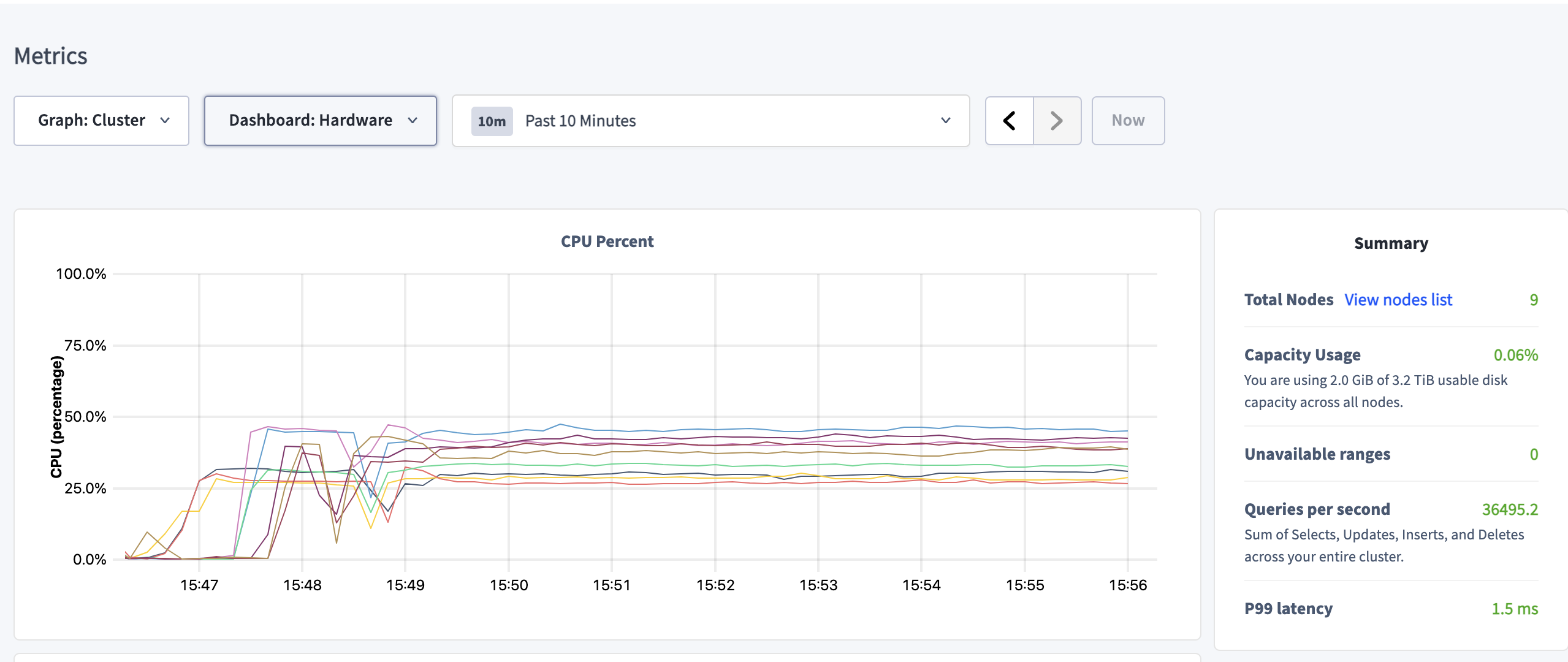

Once the workloads run at full speed, you should see metrics picking up in the DB Console.

As we can see in the hardware dashboard, the vCPU utilization starts reaching the 50% threshold. Using these 16vCPU VMs, we get around 40K QPS at less than 2ms on P99. Keep in mind the cluster stretches across 3 regions in EU.

Summary

This article provides instructions on how to deploy the RoachBank accounting ledger demo on a multi-regional CockroachDB cluster, including instructions for deploying on AWS, GCE and Azure, setting up regional-by-row and global tables, and using the roachprod tool. Prerequisites include the AWS/GCE/AZ client SDK and an account.

Subscribe to my newsletter

Read articles from Kai Niemi directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by