Lambda function to email file details (S3 URI, name, size, type) when a file is uploaded to S3, and to create thumbnails for images

Ram Kumar

Please follow the below-mentioned steps to achieve the above task.

Log on to AWS Console:

Log in to the AWS console (https://aws.amazon.com/console/) with your username and password. If you don't have an AWS account yet, create one first by following the steps at https://repost.aws/knowledge-center/create-and-activate-aws-account

If you have just created your AWS account, the account you are signed in with is the root user, which has full admin access. You should not use this account for everyday operations. Instead, create a new IAM user with admin permissions and perform all the operations as that IAM user. Follow these steps to create the IAM admin user: https://docs.aws.amazon.com/streams/latest/dev/setting-up.html

Create S3 bucket and folders:

- Create an S3 bucket with two folders inside it: input, to upload the files, and thumbnails, to store the thumbnails. If you need any help creating an S3 bucket, see my article (steps-to-create-aws-s3-bucket ), where I explain each step.
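
If you would rather script this step than use the console, the layout above can be sketched with boto3. This is a minimal sketch and not the procedure from the linked article: the helper name `create_bucket_with_folders` and its parameters are hypothetical, and the S3 client is passed in as an argument. S3 has no real folders; the console shows a zero-byte object whose key ends with `/` as a folder, which is what the sketch creates.

```python
def create_bucket_with_folders(s3_client, bucket, region="us-east-1",
                               folders=("input/", "thumbnails/")):
    """Create a bucket plus one empty 'folder' marker object per prefix.

    s3_client is expected to be a boto3 S3 client, for example
    boto3.client("s3", region_name=region). The helper name and the
    defaults are assumptions made for illustration.
    """
    if region == "us-east-1":
        # us-east-1 is the default location and takes no LocationConstraint.
        s3_client.create_bucket(Bucket=bucket)
    else:
        s3_client.create_bucket(
            Bucket=bucket,
            CreateBucketConfiguration={"LocationConstraint": region},
        )
    for prefix in folders:
        # A zero-byte object with a trailing slash is displayed as a folder.
        s3_client.put_object(Bucket=bucket, Key=prefix)
    return [f"s3://{bucket}/{prefix}" for prefix in folders]
```

Bucket names are globally unique, so the call fails if the name is already taken by another account.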

Creating Lambda function and trigger for S3:

- Create a Lambda function and an S3 trigger that executes the function whenever an object is uploaded into the S3 bucket. Please follow the steps in the blog (create-aws-lambda-function-and-trigger-step-by-step ) to create the Lambda function and the trigger.
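
When the trigger fires, S3 hands the Lambda function an event that describes the uploaded object. The following is a minimal sketch of reading the bucket name, object key, and size from that event; the helper name `extract_upload_details` is hypothetical and this is not the code from the linked script. Object keys arrive URL-encoded in the event (a space becomes `+`), so the sketch decodes them with `unquote_plus`.

```python
from urllib.parse import unquote_plus


def extract_upload_details(event):
    """Return one dict per uploaded object found in an S3 event."""
    details = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys are URL-encoded in the notification, so decode them first.
        key = unquote_plus(record["s3"]["object"]["key"])
        size = record["s3"]["object"].get("size", 0)
        details.append({
            "bucket": bucket,
            "key": key,
            "name": key.rsplit("/", 1)[-1],
            "size": size,
            "uri": f"s3://{bucket}/{key}",
        })
    return details
```

Inside a handler this would typically be called as `extract_upload_details(event)` at the top of `lambda_handler(event, context)`.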

Creating execution layer for lambda for Image processing:

- Please follow the steps to create the layer mentioned in the blog (custom-layer-for-lambda-function )

SES email setup to send an email with details on your email-id:

- Please follow the steps to set up the emails using AWS SES (email-using-aws-ses )

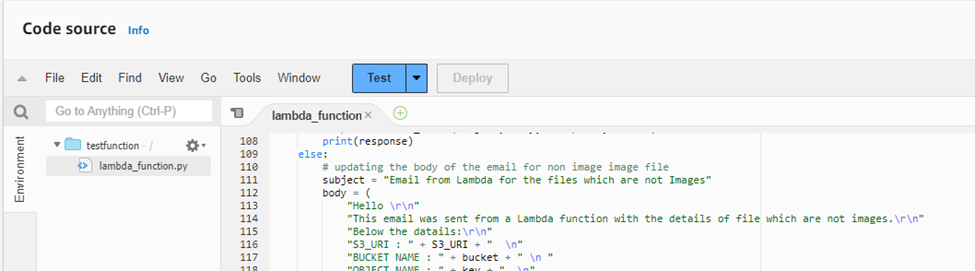

Creating the Python code for the Lambda function:

Go to Lambda functions and click on testfuction, the function we created as part of the Lambda function creation step.

This opens the code editor. Copy the code from the GitHub link (Lambda_fuction_for_s3.py ).

Paste it into the Lambda function code editor, find the email section of the code, and set your email address (the one you configured in AWS SES) as both the recipient and the sender.
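
To show where the sender and recipient addresses end up, here is a minimal sketch of building and sending the email through SES. It is an illustration under assumptions, not the linked script: the helper names `build_email` and `send_details`, the subject line, and the body layout are hypothetical. The keyword arguments follow the boto3 SES `send_email` call (`Source`, `Destination`, `Message`), and the SES client is passed in as an argument.

```python
def build_email(details, sender, recipient, thumbnail_uri=None):
    """Build the keyword arguments for an SES send_email call."""
    lines = [
        f"Name: {details['name']}",
        f"S3 URI: {details['uri']}",
        f"Size: {details['size']} bytes",
        f"Type: {details['type']}",
    ]
    if thumbnail_uri:
        # Only images get a thumbnail line.
        lines.append(f"Thumbnail: {thumbnail_uri}")
    return {
        "Source": sender,
        "Destination": {"ToAddresses": [recipient]},
        "Message": {
            "Subject": {"Data": f"New upload: {details['name']}"},
            "Body": {"Text": {"Data": "\n".join(lines)}},
        },
    }


def send_details(ses_client, details, sender, recipient, thumbnail_uri=None):
    """Send the email; ses_client is expected to be boto3.client('ses')."""
    params = build_email(details, sender, recipient, thumbnail_uri)
    return ses_client.send_email(**params)
```

While the SES account is in the sandbox, both the sender and the recipient addresses must be verified, which is why the same verified address is used for both here.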

Then save the code by pressing “Ctrl+S” and click Deploy.

Your Lambda function is ready. Now upload files into the input folder of the S3 bucket.

Your function will execute automatically. If the file is an image, it generates a thumbnail, stores it in the thumbnails folder of the S3 bucket, and sends you an email with the details of the original file and the thumbnail.

If the file is not an image, it sends an email with the details of the file only.
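
The branching described above can be sketched as a small helper that decides whether an uploaded key is an image and where its thumbnail would go. This is a hypothetical illustration, not the linked script: the function name `plan_upload_handling`, the extension-based type check through `mimetypes`, and the rule of reusing the file name under `thumbnails/` are all assumptions.

```python
import mimetypes
import posixpath


def plan_upload_handling(key, input_prefix="input/", thumb_prefix="thumbnails/"):
    """Decide how an uploaded object should be handled, based on its key."""
    # Guess the type from the file extension; fall back to a generic type.
    content_type, _ = mimetypes.guess_type(key)
    content_type = content_type or "application/octet-stream"
    # Only objects under the input folder are processed.
    in_input = key.startswith(input_prefix)
    is_image = in_input and content_type.startswith("image/")
    thumbnail_key = None
    if is_image:
        # The thumbnail keeps the file name but lives under thumbnails/.
        thumbnail_key = thumb_prefix + posixpath.basename(key)
    return {
        "process": in_input,
        "type": content_type,
        "is_image": is_image,
        "thumbnail_key": thumbnail_key,
    }
```

Ignoring keys outside `input/` is a deliberate choice in this sketch: if the trigger covers the whole bucket, writing a thumbnail back into the same bucket would otherwise invoke the function again on its own output.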

Upload the files into the input folder:

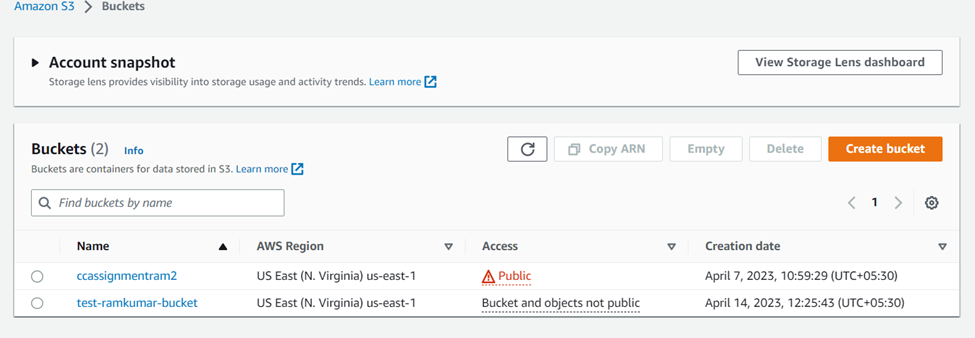

- Go to the AWS console, search for S3 in the search bar, click S3, and then click the test-ramkumar-bucket bucket, which I created. You can use your own bucket.

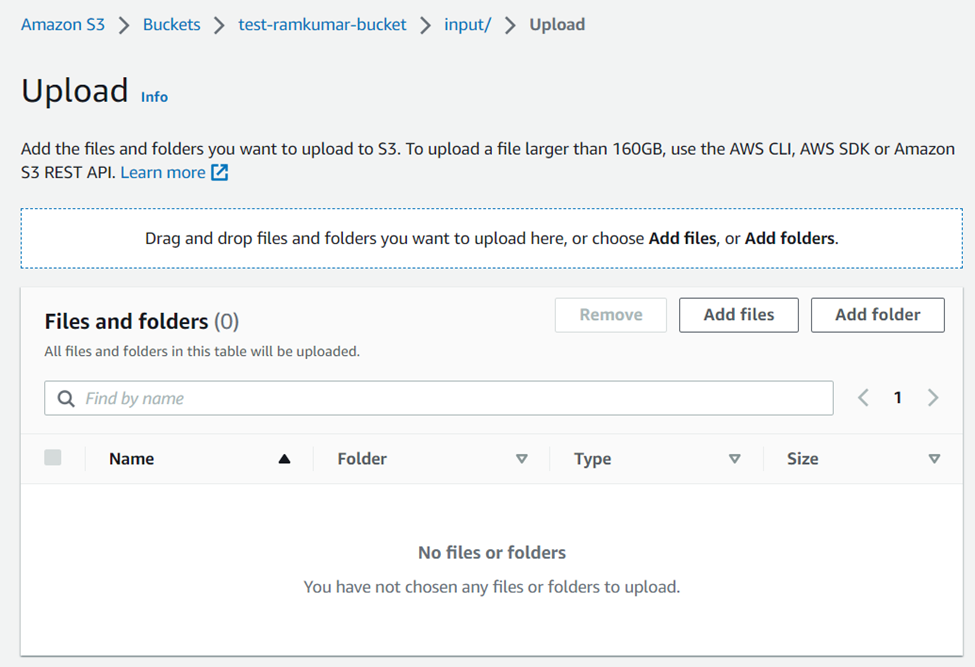

- Then click on the input folder and then on Upload, which is on the right side, in the middle of the screen.

- Then click on Add files and choose a file from your computer, either an image or any other file. You need to test your function with both types of files: an image and a non-image.
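
The same upload can also be done from a script, which is handy for repeating the test with both file types. A minimal sketch, assuming a boto3 S3 client is passed in; the helper name `upload_to_input` and the local paths in the usage comment are hypothetical, while `upload_file(Filename, Bucket, Key)` is the boto3 S3 client method.

```python
import os


def upload_to_input(s3_client, local_path, bucket, prefix="input/"):
    """Upload a local file under the input folder and return its S3 URI."""
    # Keep the local file name and place it under the input/ prefix.
    key = prefix + os.path.basename(local_path)
    s3_client.upload_file(local_path, bucket, key)
    return f"s3://{bucket}/{key}"


# Example usage (requires boto3 and AWS credentials; paths are placeholders):
# import boto3
# s3 = boto3.client("s3")
# upload_to_input(s3, "cat.png", "test-ramkumar-bucket")
# upload_to_input(s3, "report.pdf", "test-ramkumar-bucket")
```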

Finally, you should get an email with the details of the uploaded file. For an image, you get the details of both the thumbnail and the original file. For any other type, you get an email with the details of the file, such as the file size, the URI of the file, and the bucket name.

Thank you for reading!!

Please do like or comment if this blog helped you in any manner, and please provide feedback in the comments so I can create the best content to help the community.
