Cracking the Emotional Code: Can Machines Really Understand Our Deepest Emotions?

Manuj Jain

Imagine a world where your computer can sense your emotional state, adapt its responses to your mood, and even offer consoling words when you are feeling down. This might sound like a science fiction movie, but emotional AI, the technology that enables machines to learn and interpret human emotions, is not very far away. From natural language processing to facial recognition, emotional AI is decoding the human heart in ways once thought impossible.

In fact, you might already be using some of it without even knowing. Do you remember the Joaquin Phoenix movie “Her”, where the protagonist falls in love with an operating system? That was, and still is, far-fetched, but emotional AI is steadily moving closer to the reality imagined in “Her”.

For example, companies such as Affectiva, EmoShape, and Receptiviti are developing emotional AI technologies that use machine learning algorithms to identify and interpret human emotions. These technologies analyze not only written text and vocal tone but facial expressions too, and use that analysis to estimate the emotional state of the subject. In addition, Google’s Sentiment Analysis API uses machine learning to analyze human sentiment and emotion, providing valuable insights into customer behavior.

Yet emotional AI is more than just another tool for entertainment or business. It has immense potential to change how we interact with technology and machines. We are not far from a scenario where a machine could sense that a user is anxious or stressed and offer words of consolation tailored to that user’s personality. It also holds considerable promise for mental health care.

In this article, we will explore the latest developments and research in emotional AI and try to answer whether machines can really understand human beings on an emotional level. By the end, you should have a better understanding of emotional AI and its potential to change the ways we relate, think, and work.

The Current State of Emotional AI

Current emotional AI technology can recognize only a limited set of emotions, and its accuracy is not always good. It finds it difficult to differentiate between similar emotions such as amazement and suspicion. Individual and cultural differences in how emotions are expressed further complicate the task of interpreting them.

Despite these shortcomings, several companies and research projects have emerged in this field.

Affectiva has developed emotion recognition software that analyzes facial expressions and tone of voice to infer emotional states.

EmoShape has developed technology that aims to let machines express emotions themselves.

Receptiviti focuses on using emotional AI for mental health diagnosis and treatment.

Academia is also making big strides in emotional AI. The Affective Computing Group at the MIT Media Lab is researching the use of emotional AI in human-computer interaction, education, and mental health.

The Emotional AI Lab at the University of Cambridge is exploring the use of emotional AI for political campaigning and marketing.

Despite all these advances, emotional AI, like any other branch of AI, faces privacy and ethical concerns.

Take, for example, the use of emotional AI in hiring, which may lead to discrimination and bias. Collecting and analyzing personal emotional data also raises serious concerns about consent and privacy.

Can Machines Really Understand Human Emotions?

There is much debate about whether machines can really understand human emotions. Although emotional AI has made big strides in recent years, some limiting factors remain. One such factor is the complexity of emotions.

Emotions are not binary states with discrete values of zero or one. They are a spectrum of experiences influenced by a multitude of factors, such as personal history, context, and culture. For example, a happy expression might have very different causes, such as receiving good news or hearing a sarcastic remark.

Another big challenge is that humans do not always communicate their emotions explicitly. We tend to display them through subtle cues such as facial expressions, body language, or tone of voice. Although emotional AI can recognize some of these cues, it still cannot capture the full range of human emotion.

Despite these challenges, emotional AI has made tremendous progress. In natural language processing, for example, algorithms can identify sentiment in text, and current facial recognition technology can detect some facial expressions.

To conclude, it is still unclear whether machines can truly understand human emotions, as the nuance and complexity of emotions remain significant roadblocks.

How Does Emotional AI Work?

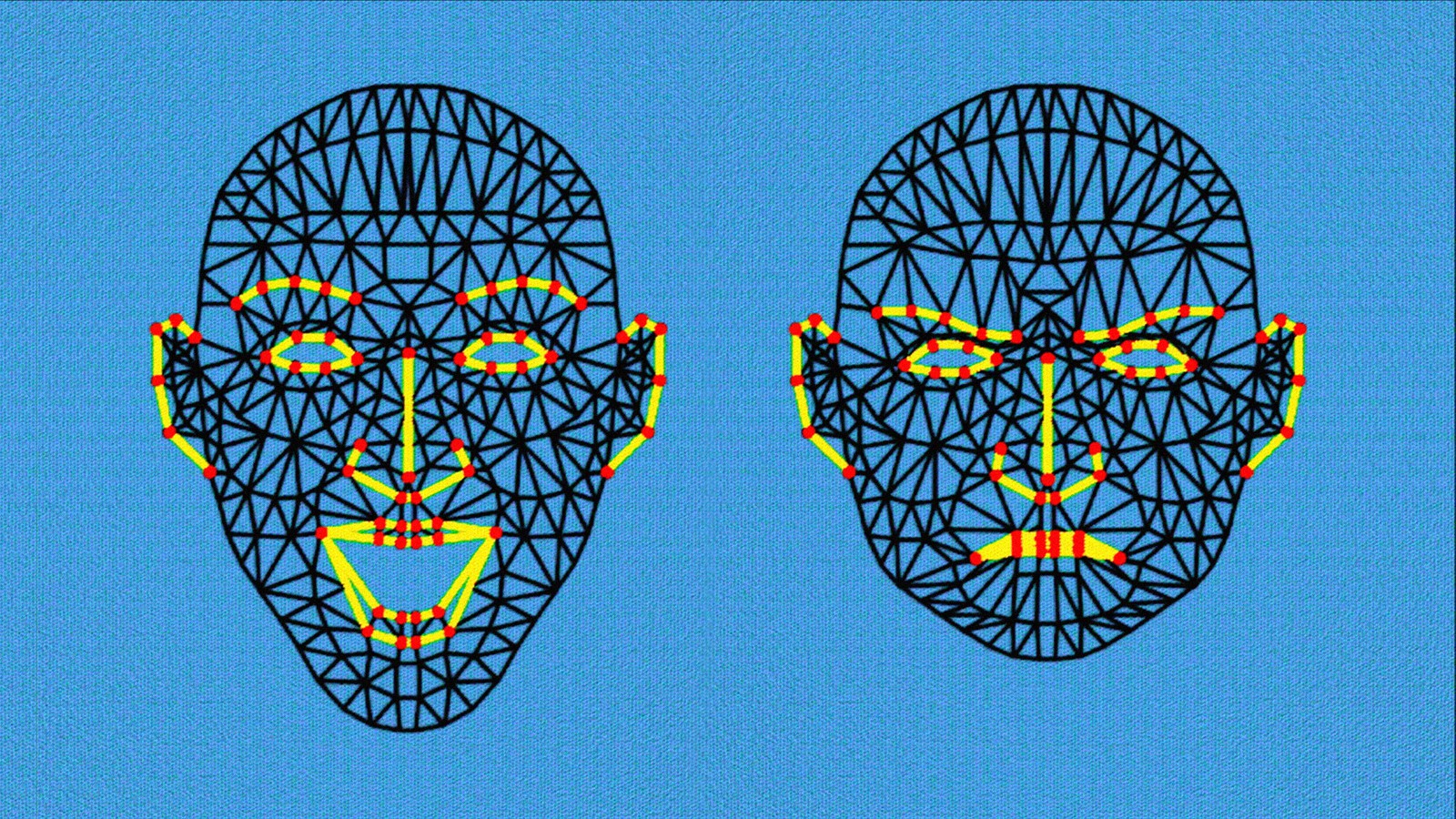

Emotional AI uses several methods to identify and interpret human emotions. It combines biometric sensors, facial recognition, and natural language processing to detect emotional cues.

Natural Language Processing (NLP) is one of the most common methods. It allows machines to analyze spoken or written language and detect the sentiment it expresses. Some social media platforms have started using NLP algorithms to detect emotional sentiment in comments and posts.
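To make the idea of detecting sentiment in text a little more concrete, here is a minimal Python sketch of a lexicon-based scorer. It is only an illustration: the word list, the weights, the negation rule, and the function names `sentiment_score` and `label` are all assumptions made up for this example, and production systems typically rely on trained machine learning models rather than a hand-written dictionary.

```python
import re

# Hypothetical toy lexicon (word -> polarity). Real systems learn this from data.
LEXICON = {
    "love": 1.0, "great": 0.8, "happy": 0.7, "good": 0.5,
    "bad": -0.5, "sad": -0.7, "terrible": -0.9, "hate": -1.0,
}
# Words that flip the polarity of the sentiment word right after them.
NEGATIONS = {"not", "never", "no"}


def sentiment_score(text: str) -> float:
    """Average polarity of the lexicon words in the text, in [-1, 1]."""
    tokens = re.findall(r"[a-z']+", text.lower())
    scores = []
    for i, token in enumerate(tokens):
        if token in LEXICON:
            polarity = LEXICON[token]
            # Very naive negation handling: "not good" counts as negative.
            if i > 0 and tokens[i - 1] in NEGATIONS:
                polarity = -polarity
            scores.append(polarity)
    # No known sentiment words means we report a neutral score.
    return sum(scores) / len(scores) if scores else 0.0


def label(text: str) -> str:
    """Map the numeric score to a coarse label (thresholds are arbitrary)."""
    score = sentiment_score(text)
    if score > 0.1:
        return "positive"
    if score < -0.1:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    for comment in ["I love this great phone", "This is not good"]:
        print(comment, "->", label(comment))
```

A scorer this simple misses sarcasm, context, and anything outside its word list, which is exactly the kind of nuance discussed earlier in the article.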

Facial recognition analyzes patterns of expression that correspond to different emotional states. For example, if somebody is smiling, the technology would map the smile to happiness. Biometric sensors, in turn, use physiological signals such as skin conductance, respiration, and heart rate to detect changes in a person’s emotional state.

For instance, if a person is feeling anxious, their heart rate increases, and the biometric sensors register this change. Emotional AI has its limitations for now, but it still has the potential to revolutionize industries such as entertainment, education, and healthcare.
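The heart rate example can be sketched in a few lines of Python. This is a rough illustration under stated assumptions, not how any particular product works: the function name `flag_elevated`, the five-reading baseline, and the threshold of two standard deviations are all hypothetical choices, and the readings are made-up numbers.

```python
from statistics import mean, stdev


def flag_elevated(heart_rates, baseline_len=5, z_threshold=2.0):
    """Return the indices of readings that sit well above the resting baseline.

    The first `baseline_len` readings are treated as the person's resting
    baseline (an assumption of this sketch). Later readings are flagged when
    they are more than `z_threshold` standard deviations above its mean.
    """
    baseline = heart_rates[:baseline_len]
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        sigma = 1.0  # Avoid dividing by zero when the baseline is perfectly flat.
    return [
        i
        for i, bpm in enumerate(heart_rates)
        if i >= baseline_len and (bpm - mu) / sigma > z_threshold
    ]


if __name__ == "__main__":
    # Made-up beats-per-minute readings with a spike in the middle.
    readings = [70, 72, 71, 69, 70, 71, 95, 98, 72]
    print(flag_elevated(readings))
```

Note that the sketch only detects a change in the signal. A raised heart rate can come from exercise or excitement as easily as from anxiety, so a real system would need more context before drawing any conclusion about emotion.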

The Benefits and Drawbacks of Emotional AI

Emotional AI has the potential to transform many industries and bring many benefits. One key benefit is enhanced mental healthcare. It could help diagnose mental health disorders by analyzing speech patterns, facial expressions, and other emotional cues.

It could also support individualized treatment plans based on a person’s emotional needs. Another benefit is in customer service. Emotional AI can analyze customer interactions and provide real-time feedback to increase customer satisfaction. Chatbots can use emotional AI to detect when a customer is frustrated and adjust the tone of their responses accordingly.
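As a rough illustration of the chatbot case, the Python sketch below estimates frustration from a message and softens the reply when the estimate is high. Everything in it is an assumption for the sake of the example: the cue words, the weights, the 0.5 threshold, and the function names `frustration_level` and `reply` are hypothetical, and a real chatbot would more likely use a trained classifier.

```python
# Hypothetical cue words that often show up in frustrated messages.
FRUSTRATION_CUES = {"ridiculous", "useless", "again", "still", "waiting", "unacceptable"}


def frustration_level(message: str) -> float:
    """Crude frustration estimate in [0, 1] based on cue words and '!' marks."""
    words = [word.strip(".,!?").lower() for word in message.split()]
    cue_hits = sum(word in FRUSTRATION_CUES for word in words)
    exclamations = message.count("!")
    # Arbitrary weights chosen for the sketch, capped at 1.0.
    return min(0.25 * cue_hits + 0.15 * exclamations, 1.0)


def reply(message: str, answer: str) -> str:
    """Prefix the answer with an apology when the customer seems frustrated."""
    if frustration_level(message) >= 0.5:
        return "I'm sorry for the trouble. " + answer
    return answer


if __name__ == "__main__":
    answer = "Your order ships tomorrow."
    print(reply("This is ridiculous, I am still waiting!!", answer))
    print(reply("When does my order ship?", answer))
```

Keeping the detection step separate from the response step means the keyword heuristic could later be swapped for a better model without touching the reply logic.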

However, there are some drawbacks as well. One concern is privacy. Emotional AI needs access to personal data such as biometric readings and facial images of real people, which raises serious privacy and data security concerns. As already mentioned, there is also a substantial risk of misinterpreting emotions, which might lead to inappropriate responses or incorrect diagnoses.

Additionally, emotional AI might not be suitable in all situations. For example, it may misread emotions in cultural contexts where they are expressed differently. Similarly, if the emotional AI is not trained on datasets that represent culturally diverse populations, its results are likely to be biased.

The Future of Emotional AI

As emotional AI keeps advancing, its potential to change society is enormous. Ongoing research focuses on more sophisticated methods of detecting and interpreting emotions. Potential breakthroughs include emotionally intelligent robots and digital assistants that can help humans more empathetically and naturally.

In conclusion, emotional AI comes with both benefits and potential drawbacks. While it holds the promise of enhancing mental healthcare and customer service, the risks of misinterpretation, bias, and privacy compromise are also present. As it keeps developing, it will become more and more important to address these concerns and make sure it is used responsibly and ethically.

Ultimately, emotional AI has the potential to revolutionize the way we understand and interact with human emotions, and it will be exciting to see how this technology continues to evolve in the future.

Written by

Manuj Jain

Technology, politics, and history: often, I find myself drawn to the intersection of these fields. When I am not writing or coding, I spend my time staying up-to-date on the latest developments in the tech industry, reading about political and historical events, and exploring new ideas and perspectives. I am always looking for new and exciting opportunities to learn and grow as a writer and developer.