Day 2 - Kubernetes Networking.

Kamalesh Rana

Kubernetes Networking: Ingress, Network Policies, DNS, CNI Plugins

Moving from physical networks using switches, routers, and ethernet cables to virtual networks using software-defined networks (SDN) and virtual interfaces involves a slight learning curve. Of course, the principles remain the same, but there are different specifications and best practices. Kubernetes has its own set of rules, and if you're dealing with containers and the cloud, it helps to understand how Kubernetes networking works.

The Kubernetes Network Model has a few general rules to keep in mind:

Every Pod gets its own IP address: There should be no need to create links between Pods and no need to map container ports to host ports.

NAT is not required: Pods on a node should be able to communicate with all Pods on all nodes without NAT.

Agents get all-access passes: Agents on a node (system daemons, the kubelet) can communicate with all the Pods on that node.

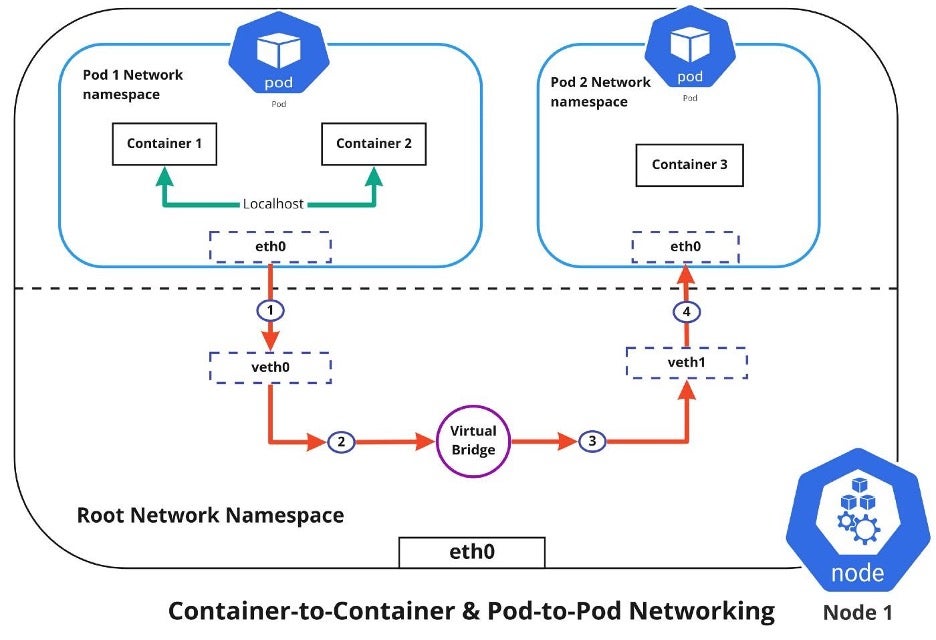

Shared namespaces: Containers within a Pod share a network namespace (IP and MAC address), so they can communicate with each other using the loopback address.
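One common way to satisfy the IP-per-Pod rule is to carve a cluster-wide address range into one subnet per node and hand out Pod addresses from the subnet of the node the Pod runs on. The sketch below illustrates that idea with Python's standard `ipaddress` module. The `10.244.0.0/16` range, the `/24` node size, the node and Pod names, and the reserved first address are all assumptions made for illustration; the actual allocation depends on the cluster configuration and the CNI plugin.

```python
import ipaddress

# Assumed values for illustration only; real clusters configure their own ranges.
CLUSTER_CIDR = ipaddress.ip_network("10.244.0.0/16")
NODE_PREFIX_LEN = 24


def assign_node_subnets(node_names):
    """Give each node its own non-overlapping slice of the cluster CIDR."""
    subnets = CLUSTER_CIDR.subnets(new_prefix=NODE_PREFIX_LEN)
    return {name: next(subnets) for name in node_names}


def assign_pod_ips(node_subnets, pods_per_node):
    """Hand out one unique IP per Pod from the subnet of the node it runs on."""
    pod_ips = {}
    for node, subnet in node_subnets.items():
        hosts = iter(subnet.hosts())
        next(hosts)  # assumption: the first usable address is kept for the node's bridge
        for i in range(pods_per_node):
            pod_ips[f"{node}-pod-{i}"] = next(hosts)
    return pod_ips


node_subnets = assign_node_subnets(["node-a", "node-b"])
pod_ips = assign_pod_ips(node_subnets, pods_per_node=3)
for pod, ip in pod_ips.items():
    print(pod, ip)
```

Because no two Pods share an address anywhere in the cluster, a Pod on one node can reach a Pod on another node by its IP alone, with no port mapping and no NAT in between.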

What is a Kubernetes Pod Network?

A Kubernetes Pod network connects several interrelated components:

Pods: Kubernetes Pods are inspired by pods found in nature (pea pods or whale pods). A Pod is a group of containers that share networking and storage resources on the same node. Pods are created through the API server and placed on a node by the scheduler. Each Pod is assigned an IP address, and all the containers in the Pod share the same storage volumes, IP address, and port space (network namespace).

Containers: A container resembles a lightweight virtual machine, except that it shares the host's operating system (OS) kernel with the other containers on the node. It has its own filesystem, a share of CPU and memory, and its own process space. Containers are always created in Pods, and one Pod can hold multiple containers. The containers in a Pod are scheduled together, run on the same node, and are terminated together. Because a Pod is defined independently of the underlying infrastructure, the same Pod definition can run on different clouds. Containers can connect through container networking to other containers, a host, and external networks.

Nodes: Pods always run inside a worker node. Nodes are machines that run containerized applications. Kubernetes groups these nodes in clusters.

Master node: Each cluster has at least one master node (now usually called a control plane node) that manages the worker nodes. The master can communicate with every node in the cluster, and it can also communicate directly with any individual Pod.

Pod-to-Service networking

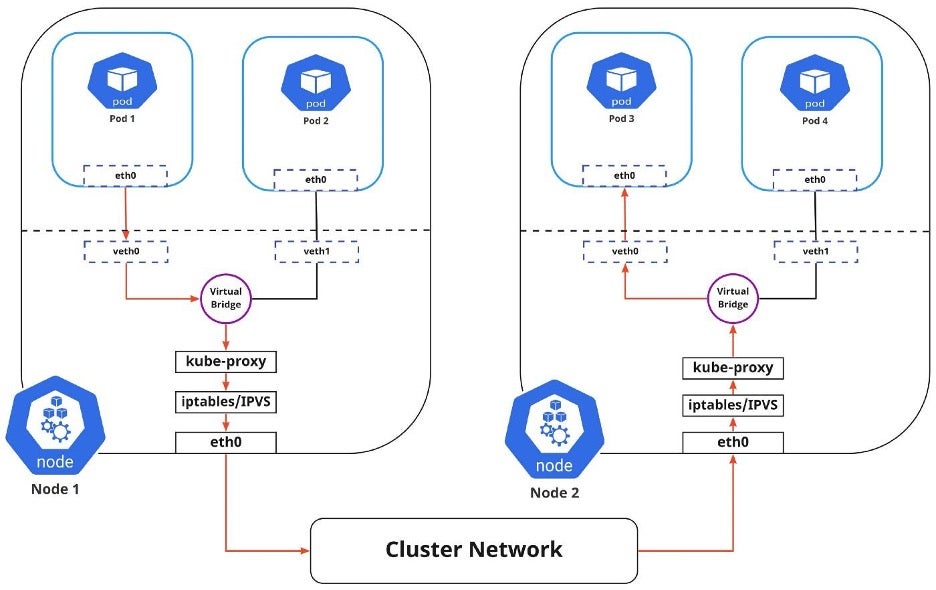

Pods are very dynamic. They may need to scale up or down based on demand. They may be created again in case of an application crash or a node failure. These events cause a Pod's IP address to change, which would make networking a challenge.

(Nived Velayudhan, CC BY-SA 4.0)

Kubernetes solves this problem with the Service resource, which does the following:

Assigns a static virtual IP address that acts as a frontend for the backend Pods associated with the Service.

Load-balances any traffic addressed to this virtual IP to the set of backend Pods.

Keeps track of the IP address of a Pod, such that even if the Pod IP address changes, the clients don't have any trouble connecting to the Pod because they only directly connect with the static virtual IP address of the Service itself.
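The three behaviors above can be sketched as a toy model. This is not how Kubernetes implements Services internally; the `Service` class, its method names, and all the addresses are hypothetical and exist only to show the idea of a fixed virtual IP in front of a changing set of Pod IPs.

```python
import random


class Service:
    """Toy model of a Service: one stable virtual IP in front of changing Pod IPs."""

    def __init__(self, name, cluster_ip):
        self.name = name
        self.cluster_ip = cluster_ip  # stays fixed for the life of the Service
        self.endpoints = set()        # current backend Pod IPs

    def pod_started(self, pod_ip):
        self.endpoints.add(pod_ip)

    def pod_stopped(self, pod_ip):
        self.endpoints.discard(pod_ip)

    def route(self, rng=random):
        """Pick a backend for one new connection to the virtual IP."""
        if not self.endpoints:
            raise RuntimeError(f"{self.name} has no ready backends")
        return rng.choice(sorted(self.endpoints))


svc = Service("web", cluster_ip="10.96.0.10")
svc.pod_started("10.244.0.2")
svc.pod_started("10.244.1.2")

# A Pod crashes and comes back with a new IP; clients keep using svc.cluster_ip.
svc.pod_stopped("10.244.0.2")
svc.pod_started("10.244.0.7")

print(svc.cluster_ip, "->", svc.route())
```

The client only ever sees `cluster_ip`. The set of endpoints behind it can change at any time without the client noticing.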

kube-proxy commonly implements this in-cluster load balancing in one of two modes:

iptables: In this mode, kube-proxy watches the API server for changes. For each new Service, it installs iptables rules that capture traffic to the Service's clusterIP and port and redirect it to one of the Service's backend Pods. The Pod is selected randomly. This mode is reliable and has a lower system overhead than the older userspace proxy mode because Linux Netfilter handles the traffic without switching between userspace and kernel space.

IPVS: IPVS is built on top of Netfilter and implements transport-layer load balancing. It hooks into Netfilter, uses a hash table as its underlying data structure, and works in kernel space. As a result, kube-proxy in IPVS mode redirects traffic with lower latency and higher throughput than kube-proxy in iptables mode.
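To make the random selection in iptables mode concrete: with n backends, kube-proxy writes one rule per backend, evaluated in order, where each rule matches with probability 1/n, then 1/(n-1), and so on, and the last rule always matches. The sketch below checks with exact fractions that this chain gives every backend the same overall share. It models only endpoint selection within a single Service, and the function names are made up for this example.

```python
from fractions import Fraction


def rule_probabilities(n_backends):
    """Per-rule match probability when rules are tried in order: 1/n, 1/(n-1), ..., 1."""
    return [Fraction(1, n_backends - i) for i in range(n_backends)]


def effective_share(n_backends):
    """Overall chance that each backend receives a new connection."""
    shares = []
    not_matched_yet = Fraction(1)
    for p in rule_probabilities(n_backends):
        shares.append(not_matched_yet * p)
        not_matched_yet *= 1 - p
    return shares


def rules_checked_on_average(n_backends):
    """Expected number of rules evaluated before one matches."""
    expected = Fraction(0)
    not_matched_yet = Fraction(1)
    for position, p in enumerate(rule_probabilities(n_backends), start=1):
        expected += position * not_matched_yet * p
        not_matched_yet *= 1 - p
    return expected


print(rule_probabilities(3))
print(effective_share(3))
print(rules_checked_on_average(3))
```

The number of rules checked grows linearly with the number of backends, which hints at why a hash-table lookup, as used by IPVS, tends to scale better on large clusters.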

The diagram above shows the packet flow from Pod 1 to Pod 3, which runs on a different node, through a Service (marked in red). A packet arriving at the virtual bridge has to take the default route (eth0), because ARP running on the bridge doesn't understand the Service's virtual IP. The packet is then filtered by iptables, using the rules that kube-proxy defined on the node. That is why the diagram shows the path the way it does.

Internet-to-Service networking

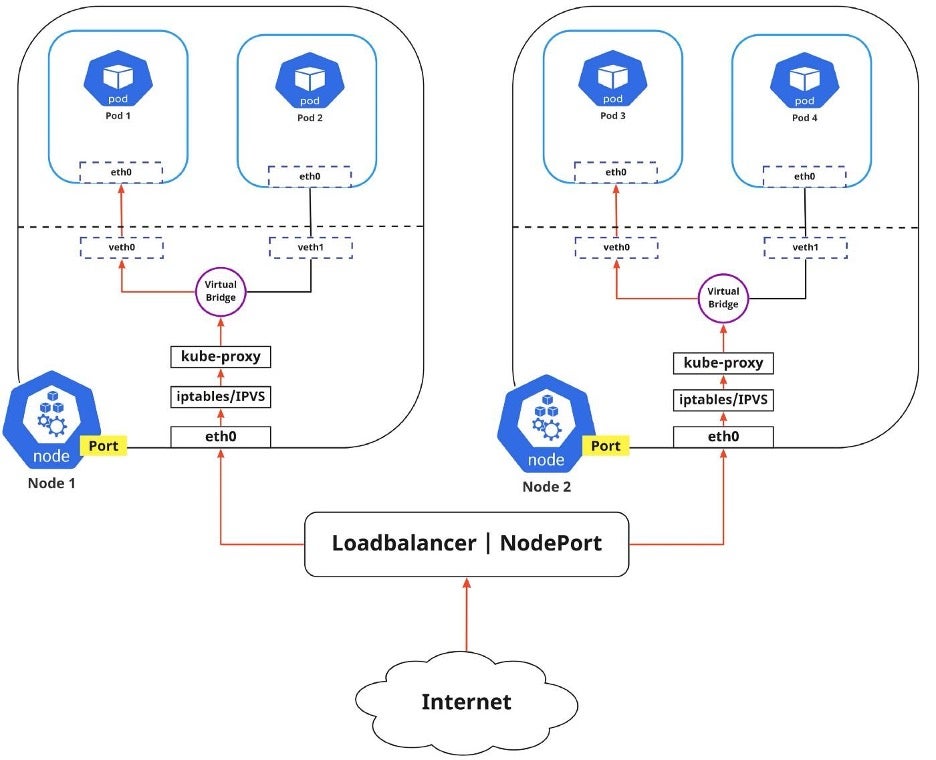

So far, I have discussed how traffic is routed within a cluster. There's another side to Kubernetes networking, though, and that's exposing an application to the external network.

(Nived Velayudhan, CC BY-SA 4.0)

Traffic between the cluster and the external network flows in two directions.

Egress: This is the traffic routed from your Pods out to the Internet. In this case, iptables performs source NAT, so the traffic appears to come from the node and not from the Pod.

Ingress: This is the incoming traffic from the external world to Services. Ingress also allows or blocks particular communications with Services using connection rules. Typically, there are two ingress solutions that operate at different layers of the network stack: the service load balancer (Layer 4) and the ingress controller (Layer 7).
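Both directions can be sketched in a few lines. The first part models egress source NAT as a lookup table that rewrites a Pod's source address to the node's address and remembers the mapping for replies. The second part models the kind of host and path rules an ingress controller typically applies to HTTP requests. The class, the function, the port numbers, the hostnames, and the Service names are all hypothetical, and the prefix match is deliberately simplified.

```python
import itertools


class SourceNat:
    """Toy egress SNAT: rewrite (pod_ip, pod_port) to (node_ip, node_port)."""

    def __init__(self, node_ip, first_port=50000):
        self.node_ip = node_ip
        self._ports = itertools.count(first_port)
        self._by_node_port = {}  # node_port -> (pod_ip, pod_port), used for replies

    def outbound(self, pod_ip, pod_port):
        """Return the source address the outside world sees for this connection."""
        node_port = next(self._ports)
        self._by_node_port[node_port] = (pod_ip, pod_port)
        return self.node_ip, node_port

    def inbound_reply(self, node_port):
        """Map a reply arriving at the node back to the Pod that opened the connection."""
        return self._by_node_port[node_port]


# Hypothetical ingress rules, checked in order: (host, path prefix, Service name).
INGRESS_RULES = [
    ("shop.example.com", "/api", "api-svc"),
    ("shop.example.com", "/", "web-svc"),
]


def route_ingress(host, path):
    """Return the Service for the first matching rule, or None to reject the request."""
    for rule_host, prefix, service in INGRESS_RULES:
        if host == rule_host and path.startswith(prefix):  # simplified prefix match
            return service
    return None


nat = SourceNat("192.168.1.10")
src = nat.outbound("10.244.0.2", 43512)
print(src)
print(nat.inbound_reply(src[1]))
print(route_ingress("shop.example.com", "/api/orders"))
print(route_ingress("other.example.com", "/"))
```

In the egress half, the remote server only ever sees the node's address. In the ingress half, a request that matches no rule never reaches a Service.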

Conclusion

Kubernetes networking is a crucial aspect of deploying and managing containerized applications. And while it may be a vast and complex topic, I hope this post has provided a solid foundation for you to continue your learning and master advanced networking concepts.
