Cloud Resume Challenge: The Classical Approach

Ojo-williams Daniel


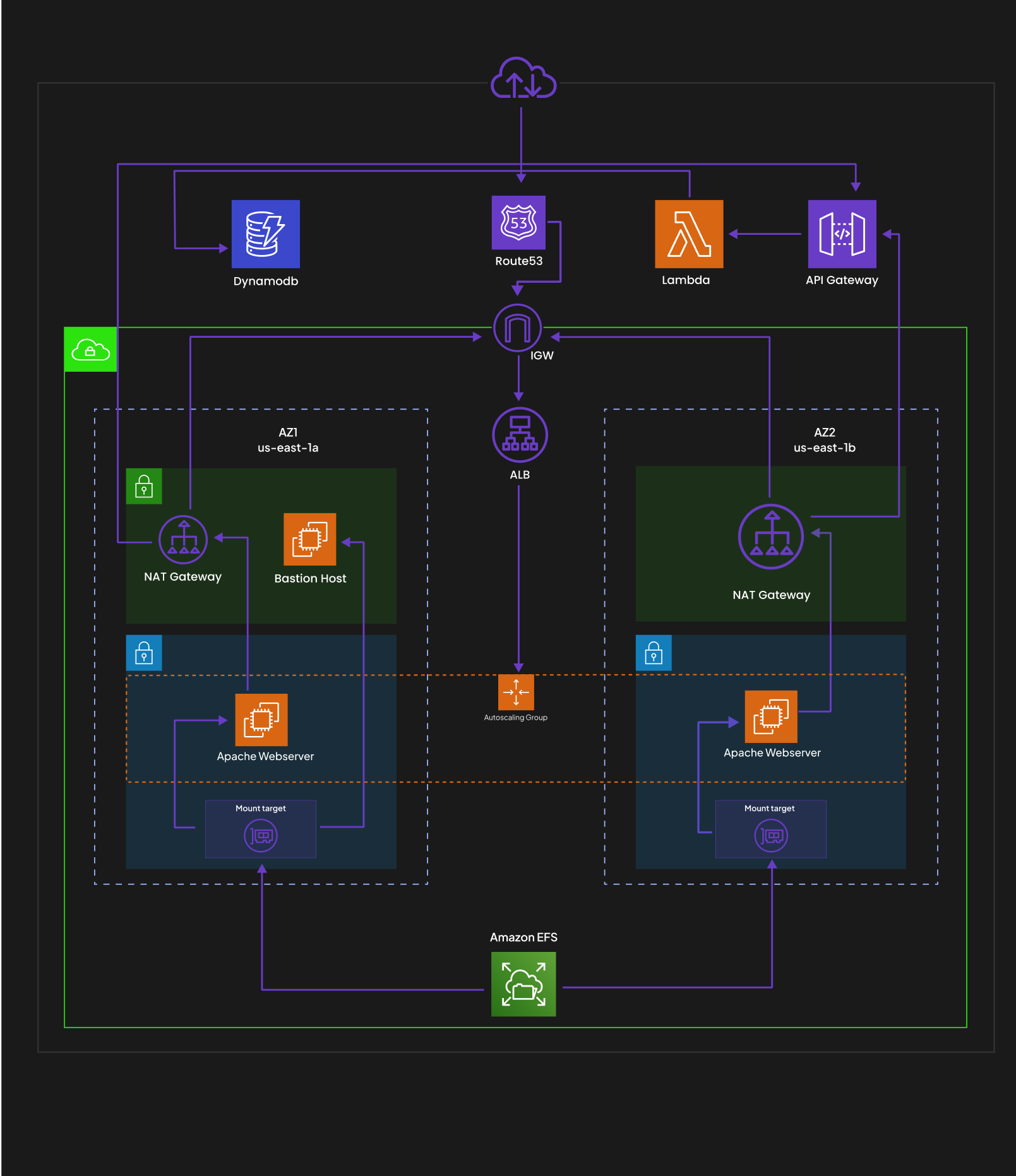

I decided to take the Cloud Resume Challenge to the next level, and I must say, @openupthecloud really delivered on that front. The challenge primarily revolves around leveraging serverless services (such as Lambda, DynamoDB, API Gateway, and S3 buckets). The beauty of these services is that they eliminate the need for server setups and the complexities of networking, making life remarkably simpler.

While the convenience of these serverless services is incredible and has transformed my experience, I've come to realize that there are situations where serverless isn't an option and you have to run and manage servers yourself, and it's crucial to be ready for those too. That is where the classical/traditional setup shines. So I've decided to take a step back and serve my static website (my resume) from a web server running on EC2 instances, replacing the beloved S3 bucket. As I keep refining this setup, I might move the API and database onto EC2 instances as well, but for now only the website is served this way.

Below, I walk through the setup step by step. It is built around the following key components:

1. Bastion Host:

The Bastion Host serves as a secure entry point into the network, allowing authorized access to other resources. It acts as a single access point for SSH connections, enabling secure administration of instances and ensuring a controlled and protected environment.

2. Autoscaling Group:

An Autoscaling Group adjusts the number of instances to match demand, scaling up or down according to predefined scaling policies. This keeps performance and availability steady under varying traffic loads while keeping costs under control.

3. Load Balancer:

A Load Balancer evenly distributes incoming traffic across instances within the Autoscaling Group. It plays a crucial role in enhancing the overall performance, scalability, and reliability of the infrastructure. By distributing traffic among multiple instances, it ensures efficient utilization of resources and mitigates the impact of failures.

Step-by-Step Setup:

Step 1: Creating the VPC and Subnets

To establish a solid foundation for this infrastructure, we start by creating a Virtual Private Cloud (VPC) with appropriate subnets. The VPC provides networking capabilities and enables the deployment of resources. Here's a breakdown of the steps involved:

1. Create a VPC with carefully designed IP address ranges and CIDR blocks.

2. Set up subnets within the VPC, including both public and private subnets.

3. Attach an Internet Gateway to the VPC to allow Internet access for the public subnets.

4. Configure route tables to route traffic between subnets and direct internet-bound traffic through the Internet Gateway.

5. Set up Network Address Translation (NAT) gateways to enable outbound internet connectivity for instances in private subnets.

```hcl
# create vpc
resource "aws_vpc" "vpc" {
  cidr_block           = var.vpc_cidr
  instance_tenancy     = "default"
  enable_dns_support   = true
  enable_dns_hostnames = true

  tags = {
    Name = "${var.project_name}-vpc"
  }
}

# get availability zones
data "aws_availability_zones" "available" {}

# create internet gateway
resource "aws_internet_gateway" "gw" {
  vpc_id = aws_vpc.vpc.id

  tags = {
    Name = "${var.project_name}-igw"
  }
}

# create public subnet 1 (first availability zone)
resource "aws_subnet" "public_subnet1" {
  vpc_id                  = aws_vpc.vpc.id
  cidr_block              = var.public_subnet1_cidr
  availability_zone       = data.aws_availability_zones.available.names[0]
  map_public_ip_on_launch = true

  tags = {
    Name = "${var.project_name}-public-subnet-1"
  }
}

# create public subnet 2 (second availability zone)
resource "aws_subnet" "public_subnet2" {
  vpc_id                  = aws_vpc.vpc.id
  cidr_block              = var.public_subnet2_cidr
  availability_zone       = data.aws_availability_zones.available.names[1]
  map_public_ip_on_launch = true

  tags = {
    Name = "${var.project_name}-public-subnet-2"
  }
}

# create public route table: internet-bound traffic goes to the internet gateway
resource "aws_route_table" "public_rt" {
  vpc_id = aws_vpc.vpc.id

  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.gw.id
  }

  tags = {
    Name = "${var.project_name}-public-rt"
  }
}

# associate subnets with public route table
resource "aws_route_table_association" "pub_sub1" {
  subnet_id      = aws_subnet.public_subnet1.id
  route_table_id = aws_route_table.public_rt.id
}

resource "aws_route_table_association" "pub_sub2" {
  subnet_id      = aws_subnet.public_subnet2.id
  route_table_id = aws_route_table.public_rt.id
}

# create private subnet 1 (no public IPs)
resource "aws_subnet" "private_sub1" {
  vpc_id                  = aws_vpc.vpc.id
  cidr_block              = var.private_subnet1_cidr
  availability_zone       = data.aws_availability_zones.available.names[0]
  map_public_ip_on_launch = false

  tags = {
    Name = "${var.project_name}-private-subnet-1"
  }
}

# create private subnet 2 (no public IPs)
resource "aws_subnet" "private_sub2" {
  vpc_id                  = aws_vpc.vpc.id
  cidr_block              = var.private_subnet2_cidr
  availability_zone       = data.aws_availability_zones.available.names[1]
  map_public_ip_on_launch = false

  tags = {
    Name = "${var.project_name}-private-subnet-2"
  }
}
```
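Item 5 above calls for NAT gateways, but the Terraform above stops at the private subnets, so instances there have no route out to the internet (for installing packages, for example). Here is a minimal sketch, assuming a single NAT gateway in the first public subnet to keep costs down (one per Availability Zone is the more highly available option) and AWS provider v5 or later, where `aws_eip` takes `domain = "vpc"`. The resource names `nat_eip`, `nat`, `private_rt`, `priv_sub1`, and `priv_sub2` are hypothetical.

```hcl
# Hypothetical sketch: one NAT gateway shared by both private subnets.

# Elastic IP that the NAT gateway uses as its public address
resource "aws_eip" "nat_eip" {
  domain = "vpc"

  tags = {
    Name = "${var.project_name}-nat-eip"
  }
}

# The NAT gateway sits in a PUBLIC subnet so it can reach the internet gateway
resource "aws_nat_gateway" "nat" {
  allocation_id = aws_eip.nat_eip.id
  subnet_id     = aws_subnet.public_subnet1.id

  tags = {
    Name = "${var.project_name}-nat"
  }

  # make sure the internet gateway exists before the NAT gateway
  depends_on = [aws_internet_gateway.gw]
}

# Private route table: internet-bound traffic leaves through the NAT gateway
resource "aws_route_table" "private_rt" {
  vpc_id = aws_vpc.vpc.id

  route {
    cidr_block     = "0.0.0.0/0"
    nat_gateway_id = aws_nat_gateway.nat.id
  }

  tags = {
    Name = "${var.project_name}-private-rt"
  }
}

resource "aws_route_table_association" "priv_sub1" {
  subnet_id      = aws_subnet.private_sub1.id
  route_table_id = aws_route_table.private_rt.id
}

resource "aws_route_table_association" "priv_sub2" {
  subnet_id      = aws_subnet.private_sub2.id
  route_table_id = aws_route_table.private_rt.id
}
```

Outbound connections from the private instances now work, while nothing on the internet can open a connection to them; inbound web traffic still has to come through the load balancer.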

Step 2: Configuring Security Groups

Security is a vital aspect of any infrastructure. To ensure secure communication and access control, we need to configure security groups. Here are my steps:

1. Create a security group for the Bastion Host:

- Allow inbound SSH traffic from trusted IP addresses.

- Allow inbound HTTP and HTTPS traffic from the internet.

2. Create a security group for the Load Balancer:

- Allow inbound traffic from the internet (HTTP and HTTPS).

- Enable outbound traffic to the security group of the private instances.

3. Create a security group for the Private Instances:

- Allow inbound traffic from the Load Balancer security group.

- Allow inbound SSH traffic from the Bastion Host.
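The SSH rule in item 3 has no counterpart in the Terraform that follows, where `private_instances` only opens port 80 to the load balancer. A minimal sketch of the missing rule, written as an extra inline `ingress` block to place inside `aws_security_group.private_instances` (inline, so that group's rules stay in one style):

```hcl
  # Hypothetical addition inside resource "aws_security_group" "private_instances":
  # accept SSH only from resources that carry the bastion host's security group
  ingress {
    description     = "ssh from bastion host"
    from_port       = 22
    to_port         = 22
    protocol        = "tcp"
    security_groups = [aws_security_group.bastion_host_sg.id]
  }
```

Referencing the bastion's security group instead of a CIDR range means the rule keeps working if the bastion is replaced and gets a new IP address.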

```hcl
# create security group for bastion host
resource "aws_security_group" "bastion_host_sg" {
  name        = "bastion_host_sg"
  description = "Allow http/s inbound traffic"
  vpc_id      = var.vpc_id

  ingress {
    description = "http internet traffic"
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  ingress {
    description = "ssh traffic"
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    # 0.0.0.0/0 opens SSH to the whole internet; narrow this to your
    # trusted IP range (for example, your own public IP as a /32)
    cidr_blocks = ["0.0.0.0/0"]
  }

  ingress {
    description = "https internet traffic"
    from_port   = 443
    to_port     = 443
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # allow all outbound traffic
  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = {
    Name = "bastion-host-SG"
  }
}

# create security group for load balancer
resource "aws_security_group" "lb_sg" {
  name        = "load_balancer_sg"
  description = "Allow http/s inbound traffic"
  vpc_id      = var.vpc_id

  ingress {
    description = "http internet traffic"
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  ingress {
    description = "https internet traffic"
    from_port   = 443
    to_port     = 443
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = {
    Name = "load-balancer-SG"
  }
}

# create security group for private instances
resource "aws_security_group" "private_instances" {
  name        = "private_instances_sg"
  description = "Allow http inbound traffic from load balancer"
  vpc_id      = var.vpc_id

  # HTTP only from resources in the load balancer's security group
  ingress {
    description     = "load_balancer_traffic"
    from_port       = 80
    to_port         = 80
    protocol        = "tcp"
    security_groups = [aws_security_group.lb_sg.id]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  depends_on = [aws_security_group.lb_sg]

  tags = {
    Name = "private-instances-SG"
  }
}

# create security group for efs
resource "aws_security_group" "efs_sg" {
  name        = "efs_sg"
  description = "Allow nfs inbound traffic"
  vpc_id      = var.vpc_id

  # NFS (port 2049) from the bastion host and the private instances
  ingress {
    description     = "nfs from bastion host and private instance"
    from_port       = 2049
    to_port         = 2049
    protocol        = "tcp"
    security_groups = [aws_security_group.bastion_host_sg.id, aws_security_group.private_instances.id]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = {
    Name = "efs-SG"
  }
}

# NOTE: lb_sg, private_instances, and bastion_host_sg already allow all
# outbound traffic through inline egress blocks. The AWS provider docs warn
# against combining inline ingress/egress blocks with standalone
# aws_security_group_rule resources on the same group (they overwrite each
# other), so pick one style per group.

# add outbound rule from load balancer to private instance
resource "aws_security_group_rule" "lb2pi" {
  type                     = "egress"
  from_port                = 80
  to_port                  = 80
  protocol                 = "tcp"
  source_security_group_id = aws_security_group.private_instances.id
  security_group_id        = aws_security_group.lb_sg.id

  depends_on = [aws_security_group.private_instances]
}

# add outbound rule from private instance to efs
resource "aws_security_group_rule" "pi2efs" {
  type                     = "egress"
  from_port                = 2049
  to_port                  = 2049
  protocol                 = "tcp"
  source_security_group_id = aws_security_group.efs_sg.id
  security_group_id        = aws_security_group.private_instances.id

  depends_on = [aws_security_group.efs_sg]
}

# add outbound rule from bastion host to efs
resource "aws_security_group_rule" "bh2efs" {
  type                     = "egress"
  from_port                = 2049
  to_port                  = 2049
  protocol                 = "tcp"
  source_security_group_id = aws_security_group.efs_sg.id
  security_group_id        = aws_security_group.bastion_host_sg.id

  depends_on = [aws_security_group.efs_sg]
}
```
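The security groups describe who may reach the Bastion Host, but the bastion instance itself isn't shown. A minimal sketch, assuming it lives in the same module as the security groups and that `var.ami_id`, `var.public_subnet1_id`, and `var.key_name` (an existing EC2 key pair) are hypothetical input variables; the `t3.micro` size is only an illustrative choice.

```hcl
# Hypothetical bastion host in a public subnet
resource "aws_instance" "bastion" {
  ami                    = var.ami_id            # assumption: an Amazon Linux AMI
  instance_type          = "t3.micro"            # assumption: small is enough for SSH hops
  subnet_id              = var.public_subnet1_id # public subnet, so it gets a public IP
  vpc_security_group_ids = [aws_security_group.bastion_host_sg.id]
  key_name               = var.key_name          # hypothetical EC2 key pair name

  tags = {
    Name = "${var.project_name}-bastion-host"
  }
}
```

With OpenSSH, `ssh -J ec2-user@<bastion-public-ip> ec2-user@<private-instance-ip>` hops through the bastion to a private instance while the private key stays on your own machine (`ec2-user` assumes Amazon Linux).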

Step 3: Implementing Elastic File System (EFS)

Consistent file storage and access across instances is crucial for keeping the website's files in sync and for handling file-based workloads. Here's how I set up AWS Elastic File System (EFS):

1. Create an EFS file system with appropriate settings, such as encryption and throughput mode. (EFS storage grows and shrinks automatically, so there is no capacity to provision.)

2. Configure security groups for EFS to control inbound and outbound traffic.

3. Set up mount targets in different availability zones for redundancy and high availability.

4. Mount the EFS file system on instances to provide shared storage for web files, ensuring consistency and synchronization across instances.

```hcl
# create efs (encrypted at rest)
resource "aws_efs_file_system" "server_files" {
  creation_token = "Server files"
  encrypted      = true

  tags = {
    Name = "Server files"
  }
}

# efs backup (turns on EFS automatic backups, handled by AWS Backup)
resource "aws_efs_backup_policy" "policy" {
  file_system_id = aws_efs_file_system.server_files.id

  backup_policy {
    status = "ENABLED"
  }
}

# create mount target 1 (one mount target per private subnet / AZ)
resource "aws_efs_mount_target" "target_subnet1" {
  file_system_id  = aws_efs_file_system.server_files.id
  subnet_id       = var.private_subnet1_id
  security_groups = [var.efs_sg_id]
}

# create mount target 2
resource "aws_efs_mount_target" "target_subnet2" {
  file_system_id  = aws_efs_file_system.server_files.id
  subnet_id       = var.private_subnet2_id
  security_groups = [var.efs_sg_id]
}
```
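Item 4 (mounting EFS on the instances) has no Terraform above. One common approach is to mount the file system at boot from a launch template's user data. A minimal sketch, assuming an Amazon Linux 2 AMI, Apache serving the site from `/var/www/html`, and hypothetical variables `var.ami_id`, `var.instance_type`, `var.private_instances_sg_id`, and `var.efs_id` (the ID of `aws_efs_file_system.server_files`, passed in from the EFS module).

```hcl
# Hypothetical launch template for the web servers in the private subnets
resource "aws_launch_template" "web" {
  name_prefix            = "${var.project_name}-web-"
  image_id               = var.ami_id # assumption: an Amazon Linux 2 AMI
  instance_type          = var.instance_type
  vpc_security_group_ids = [var.private_instances_sg_id]

  # Runs at first boot: install Apache and the EFS mount helper,
  # mount the shared file system over the web root, then start Apache.
  user_data = base64encode(<<-EOF
    #!/bin/bash
    yum install -y httpd amazon-efs-utils
    mount -t efs -o tls ${var.efs_id}:/ /var/www/html
    systemctl enable --now httpd
  EOF
  )

  tag_specifications {
    resource_type = "instance"
    tags = {
      Name = "${var.project_name}-web"
    }
  }
}
```

Because every instance mounts the same file system, updating the site means copying files to EFS once (the bastion host can mount it too, since `efs_sg` accepts NFS from it). The `yum install` step needs outbound internet access from the private subnets, and the mount is not written to `/etc/fstab`, so it would not survive a reboot as written.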

Step 4: Deploying the Load Balancer and Autoscaling Group

Now that we have set up the foundational components of our infrastructure, it's time to deploy the Load Balancer and Autoscaling Group to ensure scalability and efficient traffic distribution. Follow these steps:

1. Create a Load Balancer:

- Configure the Load Balancer, listeners, and availability zones.

- Set up health checks to monitor the health of the instances.

2. Create a target group:

- Configure the target group to route traffic to the instances within the Autoscaling Group.

- Define the health check settings for the target group to ensure that only healthy instances receive traffic.

3. Configure the listener:

- Set up the listener rules to define how traffic should be distributed to the target group.

- Specify the appropriate port and protocol configurations.

4. Create an Autoscaling Group:

- Configure the Autoscaling Group with the minimum and maximum instance counts, scaling policies, and health checks.

- Associate the target group created earlier with the Autoscaling Group to direct traffic to its instances.

```hcl
# create load balancer
resource "aws_lb" "server_lb" {
  name               = "server-lb"
  internal           = false
  load_balancer_type = "application"
  security_groups    = [var.lb_sg]
  subnets            = [var.public_subnet1_id, var.public_subnet2_id]

  # deletion protection has to be switched off before
  # `terraform destroy` can remove the load balancer
  enable_deletion_protection = true

  tags = {
    Name = "${var.project_name}-lb"
  }
}

# create target group for load balancer
resource "aws_lb_target_group" "target" {
  name     = "lb-target-group"
  port     = 80
  protocol = "HTTP"
  vpc_id   = var.vpc_id

  health_check {
    enabled             = true
    path                = "/"
    interval            = 10
    matcher             = "200" # HTTP status code(s) that count as healthy
    timeout             = 3
    healthy_threshold   = 3
    unhealthy_threshold = 2
    protocol            = "HTTP"
    port                = "traffic-port"
  }
}

# certificate (only needed for an HTTPS listener on port 443)
data "aws_acm_certificate" "amazon_issued" {
  domain      = "" # the domain name on your ACM certificate
  types       = ["AMAZON_ISSUED"]
  most_recent = true
}

# create load balancer listener (plain HTTP on port 80)
resource "aws_lb_listener" "listener" {
  load_balancer_arn = aws_lb.server_lb.arn
  port              = "80"
  protocol          = "HTTP"

  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.target.arn
  }
}
```
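To serve HTTPS, the certificate looked up above would be attached to a second listener on port 443. A minimal sketch, assuming the ACM certificate is in the same region as the load balancer and that the AWS-predefined policy `ELBSecurityPolicy-TLS13-1-2-2021-06` suits your clients; the resource name `https_listener` is hypothetical.

```hcl
# Hypothetical HTTPS listener that forwards to the same target group
resource "aws_lb_listener" "https_listener" {
  load_balancer_arn = aws_lb.server_lb.arn
  port              = "443"
  protocol          = "HTTPS"
  ssl_policy        = "ELBSecurityPolicy-TLS13-1-2-2021-06"
  certificate_arn   = data.aws_acm_certificate.amazon_issued.arn

  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.target.arn
  }
}
```

TLS terminates at the load balancer, so the instances keep serving plain HTTP on port 80, and the load balancer's security group already accepts port 443.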

Note: only use `data.aws_acm_certificate` for your load balancer if you are serving HTTPS on port 443. With just the HTTP listener, the certificate lookup isn't needed and can be removed.
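The Autoscaling Group from item 4 is not part of the Terraform above. A minimal sketch, assuming it sits in the same module as the target group and that `var.launch_template_id` (hypothetical) points at a launch template for the web servers; the instance counts and the 50% CPU target are illustrative values, not the ones used for this site.

```hcl
# Hypothetical Autoscaling Group for the web servers in the private subnets
resource "aws_autoscaling_group" "web" {
  name_prefix         = "${var.project_name}-asg-"
  min_size            = 2
  max_size            = 4
  desired_capacity    = 2
  vpc_zone_identifier = [var.private_subnet1_id, var.private_subnet2_id]

  # register every instance with the load balancer's target group
  target_group_arns = [aws_lb_target_group.target.arn]

  # replace instances that fail the target group health check,
  # not only those that fail EC2 status checks
  health_check_type         = "ELB"
  health_check_grace_period = 300

  launch_template {
    id      = var.launch_template_id
    version = "$Latest"
  }
}

# Target tracking: add or remove instances to keep average CPU near 50%
resource "aws_autoscaling_policy" "cpu_target" {
  name                   = "${var.project_name}-cpu-target"
  autoscaling_group_name = aws_autoscaling_group.web.name
  policy_type            = "TargetTrackingScaling"

  target_tracking_configuration {
    predefined_metric_specification {
      predefined_metric_type = "ASGAverageCPUUtilization"
    }
    target_value = 50
  }
}
```

Spreading `vpc_zone_identifier` across both private subnets keeps instances in two Availability Zones, and `health_check_type = "ELB"` ties the group's replacement decisions to the same health check the load balancer uses.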

Step 5: DNS Configuration

To make my website accessible via a domain name, I configured DNS. My domain is hosted on Amazon Route 53, so I created an A record there that points to the load balancer's `dns_name`. Here are my steps:

1. Create an A record for the Load Balancer:

- Create an alias A record for your domain that points to the Application Load Balancer's DNS name.

- This ensures that requests to the domain are routed to the Load Balancer, which then distributes the traffic to the instances in the Autoscaling Group.

```hcl
# get hosted zone (the public zone, so the record resolves on the internet)
data "aws_route53_zone" "my_zone" {
  name         = "ojowilliamsdaniel.online"
  private_zone = false
}

# create alias record for load balancer
resource "aws_route53_record" "lb" {
  zone_id = data.aws_route53_zone.my_zone.zone_id
  name    = "lb.${data.aws_route53_zone.my_zone.name}"
  type    = "A"

  alias {
    name                   = var.lb_dns_name
    zone_id                = var.lb_zone_id
    evaluate_target_health = true
  }
}
```
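The record takes `var.lb_dns_name` and `var.lb_zone_id` as inputs, which suggests the load balancer lives in a separate module. A minimal sketch of how those values could be exposed and wired up, assuming module names `lb` and `dns` and a `./modules/dns` path (all hypothetical):

```hcl
# In the load balancer module: expose what the alias record needs
output "lb_dns_name" {
  value = aws_lb.server_lb.dns_name
}

output "lb_zone_id" {
  value = aws_lb.server_lb.zone_id # the ALB's own hosted zone ID, not your domain's
}

# In the root module: pass the load balancer outputs into the DNS module
module "dns" {
  source      = "./modules/dns" # hypothetical path
  lb_dns_name = module.lb.lb_dns_name
  lb_zone_id  = module.lb.lb_zone_id
}
```

An alias record needs the load balancer's hosted zone ID (`aws_lb.zone_id`) rather than the ID of the domain's zone, which is why both values are passed through.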

The combination of a Bastion Host, an Autoscaling Group, a Load Balancer, and the other key components ensures that the website can handle varying traffic loads, stays highly available, and delivers an efficient user experience. As a future improvement, I might remove the serverless API Gateway, Lambda, and DynamoDB and go fully traditional. WATCH OUT!!!

NOTE: If you want to try this out, be aware that this setup can get expensive quickly; the load balancer, NAT gateways, and EC2 instances are all billed by the hour. I was able to build it thanks to free credits from Amazon Web Services (AWS).

All the Terraform code is available HERE, and the site is at lb.ojowilliamsdaniel.online.
