Introduction to Containers and Orchestration

Hemanth Narayanaswamy

Introduction

A container is a unit of software that packages code with its required dependencies so that it runs in an isolated, controlled environment. This lets you run an application predictably and repeatably, without the inconsistent behavior that comes from differences between development and production environments. A container starts up and runs code the same way regardless of which machine it runs on.

Containers provide benefits on multiple levels. In terms of physical resources, containers distribute computing power and memory efficiently. Beyond that, containers enable new design paradigms by strengthening the separation between application code and infrastructure code. This separation also lets teams specialize, so developers can focus on their strengths.

Considering the breadth of what containers bring to the table, this article will bring you up to speed with the common, related terminology. After a general introduction to the benefits of containers, you will learn the distinctions between terms such as “container runtimes”, “container orchestration”, and “container engines”. You will then use this to understand the goals of containers, the specific problems they are trying to solve, and the current solutions available.

Benefits of Containers

Containers use resources such as CPU and memory more efficiently than alternatives such as virtual machines. They also offer design advantages, both in how they isolate applications and in how they abstract the application layer away from the infrastructure layer.

At a project level, this abstraction lets teams and team members work on their piece of the project with less risk of interfering with other parts of it. Application developers can focus on application development, while infrastructure engineers focus on infrastructure. Separating a team's responsibilities this way also separates the code, so changes to application code are less likely to break infrastructure code, and vice versa.

Containers also smooth the transition between development and production. For example, if you need to run a Node.js application on a server, one option is to install Node.js directly. This is straightforward when you are a single developer working on a single server. However, once you collaborate with multiple developers and deploy to multiple environments, each person's Node.js installation can differ in version or configuration, even between slightly different development environments, and those differences can carry through to production. Packaging the application together with a specific Node.js version in a container image removes that variation, because every environment runs the same image.

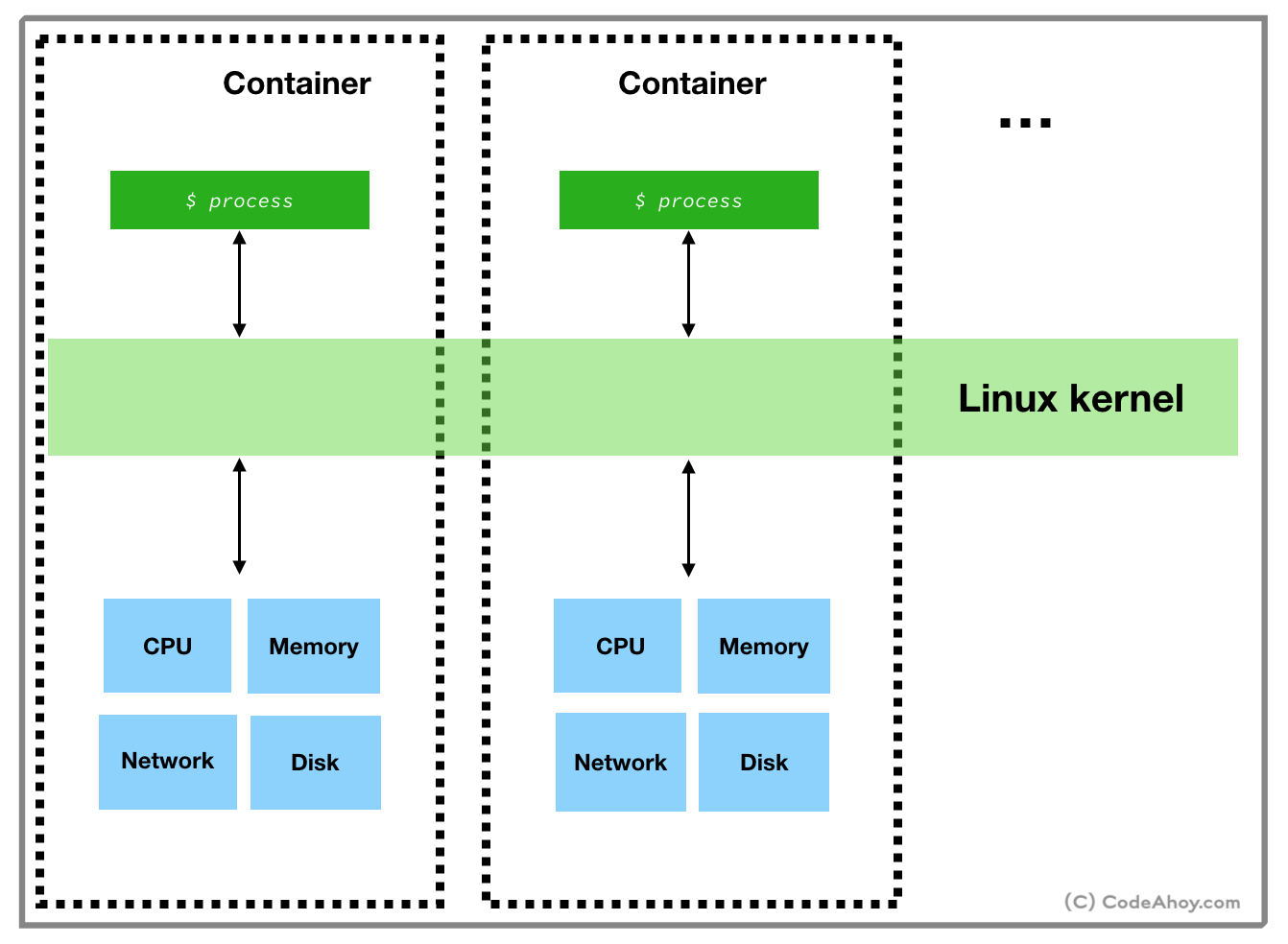

These features may make containers appear similar to virtual machines, but they differ in their underlying design and resulting efficiency. Modern container technologies are designed specifically to avoid the heavy resource requirements of virtual machines. While containers share the same principles of portability and repeatability, they work at a different level of abstraction. Containers skip virtualizing the hardware and the kernel, the most resource-intensive parts of a virtual machine, and instead rely on the hardware and kernel of the host machine.

As a result, containers are comparatively lightweight, with lower resource requirements. Container technology has in turn fostered a rich ecosystem to support container-centered development, including:

- Building container images.

- Storing images in registries and repositories.

- Persisting data through data volumes.

Defining Container Terminology

Due to their broad set of use cases, “containers” can refer to multiple things. To help you understand the nuance between the interconnected concepts, here are some key terms that are often confused or used interchangeably in error:

Operating System: This is the software that manages all other software on your computer, along with the hardware. Often abbreviated as “OS”, an example of this is Linux.

Kernel: This is the component of the OS that specializes in the most basic, low-level interfacing with a machine’s hardware. It translates the requests for physical resources between software processes and hardware. Resources include computing power from the CPU, memory allocation from RAM, and I/O from the hard disk.

Containers: A container is a unit of software that packages code with its required dependencies so that it runs in an isolated, controlled environment. Containers virtualize at the OS level but do not virtualize a kernel; all containers on a host share the host's kernel.

Container runtimes: A container runtime manages the startup and lifetime of a container, along with how the container actually executes code. Whatever container solution you choose, you are essentially choosing a particular container runtime as its foundation. Complete container solutions also often use multiple runtimes in conjunction. Container runtimes fall into two groups:

Open Container Initiative (OCI) runtimes: These are low-level runtimes that create and run a container, with little else. The most common one, used by Docker, is written in Golang. An alternative is crun from Red Hat, written in C and meant to be faster and more lightweight.

Container Runtime Interface (CRI) runtimes: These higher-level runtimes are more focused on container orchestration. Examples include the early dockershim, which has since been dropped in favor of the more fully featured containerd (originally from Docker), and the alternative CRI-O from Red Hat.

Container Image: These are packages containing everything a container needs: the code, runtime, system libraries, and dependencies. Images usually start from a base image of an OS such as Ubuntu. They can be built locally or pulled from an image registry.

Image Registry: This is a solution for container image storage and sharing. The most prominent example is Docker Hub, but alternative public image registries exist and private image registries can be implemented.

Image Repository: This is a specific location for a container image within an image registry. It holds the latest version of the image along with its earlier versions, which are usually distinguished by tags.

Container engines: These are complete solutions for container technologies, Docker being one example. When people discuss container technology, they are often referring to container engines. An engine includes the container, the underlying container runtime, the container image, and the tools to build them, and it can also include container image registries and container orchestration. An alternative to Docker is a stack of Podman, Buildah, and Skopeo from Red Hat.

Container Orchestration: Automation of container deployment. Orchestration involves provisioning, configuration, scheduling, scaling, monitoring, deployment, and more. Kubernetes is an example of a popular container orchestration solution.
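To make the registry, repository, and image terms above concrete, here is a minimal Python sketch that splits an image reference such as docker.io/library/ubuntu:22.04 into its registry, repository, and tag. It follows Docker's usual conventions in simplified form (an unqualified name defaults to the Docker Hub registry docker.io and the library/ namespace, and a missing tag defaults to latest). The function parse_image_ref is hypothetical, and digest references (@sha256:...) are deliberately not handled.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str    # where the image is stored, e.g. "docker.io"
    repository: str  # the image's location inside the registry
    tag: str         # which version of the image in that repository


def parse_image_ref(ref: str) -> ImageRef:
    """Simplified parser for 'registry/repo:tag' references (no digest support)."""
    # The tag is whatever follows the last ':' that comes after the last '/'.
    # This avoids mistaking a registry port (e.g. 'localhost:5000/app') for a tag.
    last_slash = ref.rfind("/")
    last_colon = ref.rfind(":")
    if last_colon > last_slash:
        name, tag = ref[:last_colon], ref[last_colon + 1:]
    else:
        name, tag = ref, "latest"

    # The first path component is a registry host only if it looks like one.
    first, _, rest = name.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = "docker.io", name
        # Official Docker Hub images live under the 'library' namespace.
        if "/" not in repository:
            repository = "library/" + repository
    return ImageRef(registry, repository, tag)


for ref in ["ubuntu", "ubuntu:22.04", "localhost:5000/team/api:v2", "quay.io/podman/stable"]:
    print(ref, "->", parse_image_ref(ref))
```

Separating the three parts this way mirrors the definitions: the registry is the storage service, the repository is the image's location within it, and the tag picks one version from that repository's history.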

Goals of Containers

Container solutions must address some common problems in order to be successful:

Portability: Bundling applications with their dependencies makes containers predictable. Code runs the same way on any machine because each container brings its own repeatable environment.

Efficiency: Containers leverage the existing kernel of their host machine instead of running their own. They are also often designed to exist only as long as the process or application they run is needed, stopping once the task is complete.

Statelessness: Statelessness is an ideal in container design in which code always runs the same way, without requiring knowledge of past or future executions. In practice, real-world applications need to keep data, so most container engines provide a way to persist it, often in the form of volume storage that can be shared between containers.

Networking: Although containers are isolated from one another by default, there are many cases where containers must communicate with each other, with the host machine, or with a different cluster of containers. Docker provides bridge networking so that containers on the same Docker host can communicate, and overlay networking for containers running across different Docker hosts.

Logging: By nature, containers are difficult to inspect. A solution for logging errors and outputs makes large-scale container deployments more manageable.

Application and infrastructure decoupling: Application logic should start and stop within the container responsible for it. The management and deployment of the containers themselves in different environments can be the focus of infrastructure teams.

Specialization and microservices: Splitting responsibilities across multiple containers enables microservice architectures instead of monolithic apps.
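The tension between statelessness and persistent volumes can be illustrated with a small simulation. In this sketch each call to the hypothetical run_container function stands in for one short-lived container: it gets a fresh scratch directory that is deleted when it exits, while volume_dir plays the role of a volume that outlives individual containers. The file name visits.txt is also an assumption; real engines mount volumes into the container's filesystem rather than passing a path to a function.

```python
import tempfile
from pathlib import Path


def bump_counter(directory: Path) -> int:
    """Read a visit counter from directory/visits.txt, increment it, and write it back."""
    counter_file = directory / "visits.txt"
    count = int(counter_file.read_text()) if counter_file.exists() else 0
    count += 1
    counter_file.write_text(str(count))
    return count


def run_container(volume_dir: Path) -> tuple[int, int]:
    """Simulate one container run; returns (scratch count, volume count)."""
    # Stand-in for the container's own writable layer: fresh, and discarded on exit.
    with tempfile.TemporaryDirectory() as scratch:
        scratch_count = bump_counter(Path(scratch))
        # Stand-in for a mounted volume: it survives after this "container" is gone.
        volume_count = bump_counter(volume_dir)
    return scratch_count, volume_count


with tempfile.TemporaryDirectory() as volume:
    for _ in range(3):
        print(run_container(Path(volume)))
```

The scratch counter always restarts at 1, which is the stateless ideal, while the counter on the shared volume keeps growing across runs (1, 2, 3).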

What is orchestration?

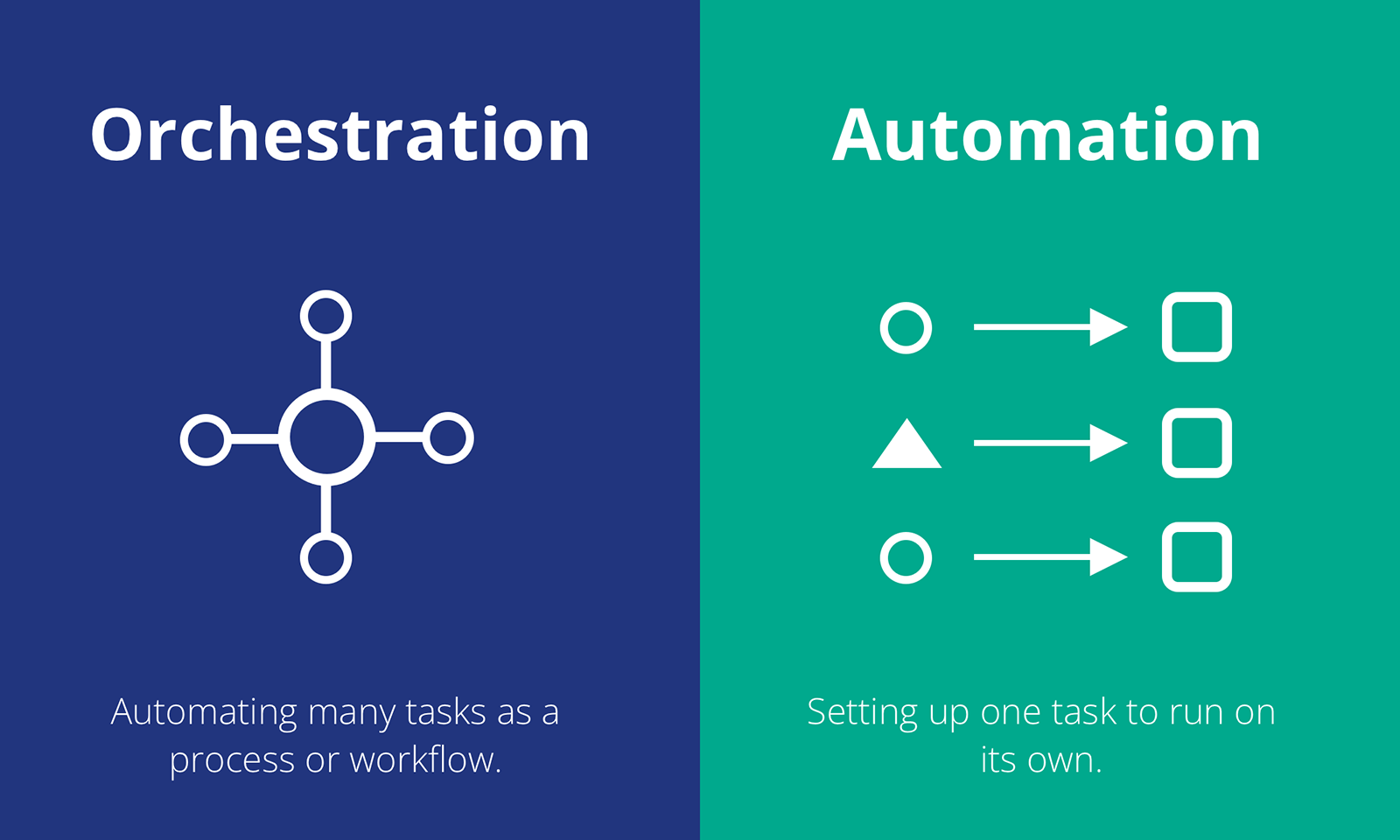

Orchestration is the automated configuration, management, and coordination of computer systems, applications, and services. Orchestration helps IT to more easily manage complex tasks and workflows.

Automation and orchestration are different, but related concepts. Automation helps make your business more efficient by reducing or replacing human interaction with IT systems and instead using software to perform tasks to reduce cost, complexity, and errors.

In general, automation refers to automating a single task. Orchestration, by contrast, automates a process or workflow that involves many steps across multiple disparate systems. Once you have built automation into the individual steps of your processes, you can orchestrate those steps to run together automatically.
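The difference between automating a single task and orchestrating a workflow can be sketched in a few lines of Python. Here each function is one automated task, and the hypothetical orchestrate function chains them into a multi-step workflow, stopping and undoing completed steps in reverse order if one fails. The step names are made up; real orchestration tools add scheduling, retries, and integrations with many systems on top of this basic idea.

```python
from typing import Callable

# Each step pairs an automated task with an "undo" action used for rollback.
Step = tuple[str, Callable[[], None], Callable[[], None]]


def orchestrate(steps: list[Step], log: list[str]) -> bool:
    """Run steps in order; on failure, undo completed steps in reverse order."""
    done: list[Step] = []
    for step in steps:
        name, run, _undo = step
        try:
            run()
            log.append(f"ok: {name}")
            done.append(step)
        except Exception as exc:
            log.append(f"failed: {name} ({exc})")
            for prev_name, _run, undo in reversed(done):
                undo()
                log.append(f"rolled back: {prev_name}")
            return False
    return True


def noop() -> None:
    """Placeholder task or undo action that always succeeds."""


def fail() -> None:
    """Placeholder task that always fails."""
    raise RuntimeError("disk full")


log: list[str] = []
workflow = [
    ("provision server", noop, noop),
    ("configure database", noop, noop),
    ("deploy application", fail, noop),
]
print(orchestrate(workflow, log))
print("\n".join(log))
```

Each individual function is automation; deciding their order and what happens when one of them fails is the orchestration layer.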

IT orchestration also helps you to streamline and optimize frequently occurring processes and workflows, which can support a DevOps approach and help your team deploy applications more quickly. You can use orchestration to improve the efficiency of IT processes such as server provisioning, incident management, cloud orchestration, database management, application orchestration, and many other tasks and workflows.

Why do we need container orchestration?

Container orchestration is a process and set of tools for the automated deployment, configuration, and management of containers.

It helps reduce manual effort, streamline workflows, and improve consistency in deployments. Container orchestration handles tasks such as:

- Automatically starting the application when a new container is created

- Rolling new releases out incrementally to production

- Automatically updating monitoring, logging, and other metadata as the containers change

These automated features make container orchestration a natural complement to automated continuous integration (CI) processes.
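Rolling a new release out incrementally, one of the tasks listed above, can be sketched as follows. This is a simplified model, not any specific tool's algorithm: replicas are plain strings naming a version, the hypothetical health_check(index, version) callback decides whether an updated replica is healthy, and the rollout replaces batch_size replicas at a time. If any replica in a batch fails its check, that batch is reverted and the rollout stops, leaving the remaining old replicas serving traffic.

```python
from typing import Callable


def rolling_update(
    replicas: list[str],
    new_version: str,
    health_check: Callable[[int, str], bool],
    batch_size: int = 1,
) -> tuple[list[str], bool]:
    """Replace replicas batch by batch; halt (and revert the batch) on a failed check."""
    current = list(replicas)  # don't mutate the caller's list
    for start in range(0, len(current), batch_size):
        batch = range(start, min(start + batch_size, len(current)))
        previous = [current[i] for i in batch]
        for i in batch:
            current[i] = new_version
        # Only continue if every replica in this batch passes its health check.
        if not all(health_check(i, current[i]) for i in batch):
            for i, old in zip(batch, previous):
                current[i] = old  # revert this batch; earlier batches stay updated
            return current, False
    return current, True


# Every replica healthy: the whole fleet moves to v2, two replicas at a time.
print(rolling_update(["v1"] * 4, "v2", lambda i, v: True, batch_size=2))
# Replicas 2 and 3 fail their check on v3: the rollout stops after the first batch.
print(rolling_update(["v1"] * 4, "v3", lambda i, v: i < 2, batch_size=2))
```

Updating in batches limits how many replicas a bad release can affect at once, which is the point of rolling releases out incrementally.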

How container orchestration works

When you use a container orchestration tool such as Kubernetes, you describe the application's configuration in a configuration file, typically written in YAML.

This file tells the tool how to configure the application during deployment, for example which container images to run and how many copies of each. During deployment, the tool creates the containers on the host systems.

Once the container is running on the host, the orchestration tool manages its lifecycle according to the specifications you laid out in the container’s configuration file.
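Managing containers "according to the specifications" is often described as a reconciliation loop: compare the desired state from the configuration file with the actual state, and act on the difference. The Python sketch below models that idea with the desired state written as a dictionary loosely shaped like a Kubernetes Deployment manifest, flattened so that only replicas and image are used. The reconcile function and the container naming scheme are hypothetical simplifications, not Kubernetes' actual controller code; in particular, replacing every container with an outdated image at once skips the gradual rollout a real tool would perform.

```python
import itertools

# Desired state, loosely modeled on (and flattened from) a Kubernetes Deployment manifest.
desired = {"metadata": {"name": "web"}, "spec": {"replicas": 3, "image": "nginx:1.25"}}

_ids = itertools.count(1)  # source of unique container name suffixes


def reconcile(manifest: dict, running: dict[str, str]) -> dict[str, str]:
    """Return the new set of running containers (name -> image) after one pass."""
    name = manifest["metadata"]["name"]
    spec = manifest["spec"]
    # Drop containers running the wrong image (e.g. after the manifest changes).
    running = {c: img for c, img in running.items() if img == spec["image"]}
    # Start containers until the desired count is met...
    while len(running) < spec["replicas"]:
        running[f"{name}-{next(_ids)}"] = spec["image"]
    # ...or stop surplus containers if there are too many.
    while len(running) > spec["replicas"]:
        running.pop(next(iter(running)))
    return running


state: dict[str, str] = {}
state = reconcile(desired, state)  # creates three containers
del state[next(iter(state))]       # simulate one container crashing
state = reconcile(desired, state)  # replaces the crashed container
print(state)
```

Because the loop only compares "what should exist" with "what does exist", the same code handles first deployment, crash recovery, scaling, and image changes.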

Container orchestration features

Orchestration is a powerful concept that simplifies managing containers. Orchestration tools have many features that benefit both developers and operations teams.

The most notable features include:

Provisioning and deploying containers

Container orchestration automatically provisions and deploys containers. The orchestration tool manages these steps and places each container on a host that can supply the resources it requires, such as CPU and memory.
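Placing each container on a host with enough CPU and memory is a scheduling problem. The following Python sketch uses a simple first-fit strategy: each container goes to the first host whose remaining capacity covers its requests, and a container that fits nowhere stays unscheduled. The host names and resource numbers are made up, and production schedulers (Kubernetes' kube-scheduler, for example) filter and score hosts on many more criteria.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Host:
    name: str
    free_cpu: float   # CPU cores still available
    free_mem_mb: int  # memory still available, in MiB


def schedule(containers: dict[str, tuple[float, int]], hosts: list[Host]) -> dict[str, Optional[str]]:
    """Assign each container (cpu, mem_mb) to the first host that fits, or None."""
    placement: dict[str, Optional[str]] = {}
    for name, (cpu, mem) in containers.items():
        placement[name] = None  # stays None (pending) if no host has room
        for host in hosts:
            if host.free_cpu >= cpu and host.free_mem_mb >= mem:
                host.free_cpu -= cpu  # reserve the resources on that host
                host.free_mem_mb -= mem
                placement[name] = host.name
                break
    return placement


hosts = [Host("node-a", 2.0, 4096), Host("node-b", 4.0, 8192)]
requests = {"api": (1.5, 2048), "db": (2.0, 6144), "cache": (0.5, 1024), "batch": (4.0, 1024)}
print(schedule(requests, hosts))
```

Here "api" and "cache" share node-a, "db" needs node-b's larger memory, and "batch" stays pending because no host has 4 free cores left.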

Scaling

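Container orchestration tools scale an application out by running more container replicas when demand rises, and scale it in by removing replicas when demand falls. When the existing hosts run out of capacity, the cluster itself can also be scaled by adding hosts, and shrunk by removing hosts that are no longer needed.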
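Deciding how many replicas to run is usually driven by a metric. The Kubernetes Horizontal Pod Autoscaler, for instance, documents the rule desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The sketch below applies that rule to CPU utilization and clamps the result to min_replicas and max_replicas bounds; the function name and default bounds are assumptions, and it omits details such as the autoscaler's tolerance band and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_cpu_pct: float, target_cpu_pct: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Scale replicas in proportion to how far the metric is from its target."""
    raw = math.ceil(current_replicas * current_cpu_pct / target_cpu_pct)
    # Keep the answer inside the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


print(desired_replicas(4, 90, 60))   # 6: load above target, scale out
print(desired_replicas(4, 30, 60))   # 2: load below target, scale in
print(desired_replicas(8, 150, 50))  # 10: would need 24, capped at max_replicas
```

Rounding up with ceil errs on the side of slightly more capacity, and the upper bound is where adding hosts to the cluster becomes the next lever.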

Load balancing

Because containers are created and removed all the time, clients need a stable way to reach them. Orchestration tools keep track of where an application's containers are running and spread incoming traffic across them.

Traffic usually reaches those containers in one of a couple of ways.

First, you set up a load balancer that exposes a single endpoint for your containers, and use a DNS entry that points to the load balancer's IP address.

Second, you set up a reverse proxy that receives incoming requests and distributes them across the containers.
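Spreading requests across containers can be as simple as round-robin. This Python sketch keeps a list of container endpoints, skips any marked unhealthy, and hands out the rest in turn. The class name and the addresses are hypothetical; real load balancers and reverse proxies add active health probes, connection draining, and other strategies such as least-connections.

```python
import itertools


class RoundRobinBalancer:
    """Hand out healthy backend addresses in rotation."""

    def __init__(self, backends: list[str]) -> None:
        self.backends = backends
        self.unhealthy: set[str] = set()
        self._cycle = itertools.cycle(backends)

    def mark_unhealthy(self, backend: str) -> None:
        self.unhealthy.add(backend)

    def next_backend(self) -> str:
        # Try each backend at most once per call so we never loop forever.
        for _ in range(len(self.backends)):
            candidate = next(self._cycle)
            if candidate not in self.unhealthy:
                return candidate
        raise RuntimeError("no healthy backends")


lb = RoundRobinBalancer(["10.0.0.11:8080", "10.0.0.12:8080", "10.0.0.13:8080"])
print([lb.next_backend() for _ in range(4)])
lb.mark_unhealthy("10.0.0.12:8080")
print([lb.next_backend() for _ in range(4)])  # .12 is skipped from now on
```

Clients only ever talk to the balancer, so containers behind it can be added, removed, or marked unhealthy without clients noticing.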

Monitoring

Container orchestration tools are useful for performance monitoring. The tool monitors container performance metrics such as CPU utilization, memory utilization, network traffic, etc.

The data collected by the orchestration tool helps you troubleshoot once a performance problem is identified.

For example, if a container shows high CPU utilization, the collected data can show whether that container is consuming too much CPU or whether the host as a whole is overloaded.
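The troubleshooting question in this example, whether one container or the whole host is the problem, can be expressed as a simple rule over the collected metrics. The diagnose_cpu function and its thresholds (85% host CPU, and a single container accounting for at least half of the host's usage) are illustrative assumptions, not values any particular monitoring tool uses.

```python
def diagnose_cpu(host_cpu_pct: float, container_cpu_pct: dict[str, float],
                 host_limit: float = 85.0, share_limit: float = 0.5) -> str:
    """Decide whether high CPU comes from one container or from overall host load.

    container_cpu_pct holds each container's CPU usage as a percentage of the host.
    """
    if host_cpu_pct < host_limit:
        return "ok"
    # Find the heaviest container and compare it with the host's total usage.
    name, usage = max(container_cpu_pct.items(), key=lambda item: item[1], default=("", 0.0))
    if usage / host_cpu_pct >= share_limit:
        return f"container {name} is consuming too much CPU"
    return "host is overloaded"


print(diagnose_cpu(92.0, {"api": 70.0, "worker": 15.0, "cache": 7.0}))  # one container dominates
print(diagnose_cpu(92.0, {"api": 30.0, "worker": 32.0, "cache": 30.0}))  # load is spread out
print(diagnose_cpu(40.0, {"api": 20.0, "worker": 20.0}))  # host is fine
```

The two diagnoses lead to different fixes: limiting or debugging one container versus scaling out or adding hosts.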

Resource allocation

Container orchestration tools allocate resources such as CPU, memory, and network bandwidth to containers as needed.

Re-balancing, which moves workloads between hosts as demand changes, lets the orchestration tool make fuller use of each server's resources.

Security

In a cluster of hosts, there may be multiple security domains with different levels of trust. For example, a cluster may have some hosts that have been verified as being in a secure data center and others that have not yet been verified.

The verification process may require collecting a host's MAC address and IP address so that the host can be traced back to a physical location. Orchestration tools can then use that trust information to keep sensitive workloads on verified hosts.
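One way orchestration tools act on trust levels like these is by labeling hosts and restricting where sensitive workloads may run; Kubernetes, for example, offers node labels together with nodeSelector for this. The Python sketch below models the idea with plain dictionaries. The verified and zone labels and the eligible_hosts function are hypothetical.

```python
def eligible_hosts(hosts: dict[str, dict[str, str]], required: dict[str, str]) -> list[str]:
    """Return hosts whose labels include every required key/value pair."""
    return [
        name
        for name, labels in hosts.items()
        if all(labels.get(key) == value for key, value in required.items())
    ]


hosts = {
    "node-a": {"verified": "true", "zone": "dc-1"},
    "node-b": {"verified": "false", "zone": "dc-1"},
    "node-c": {"zone": "dc-2"},  # not yet verified, so it carries no verified label
}
print(eligible_hosts(hosts, {"verified": "true"}))  # only node-a may run sensitive workloads
print(eligible_hosts(hosts, {}))                    # no constraints: every host is eligible
```

Treating a missing label the same as a non-matching one means a newly added, unverified host is excluded from sensitive workloads by default.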

Logging

Container orchestration tools log events such as container start, stop, and restart. Additional details, such as the application name, host, IP address, and port, are logged along with these events.

These logs are typically sent to a centralized log management and analysis tool, which collects, filters, aggregates, and stores them.
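Centralized logging works best when events are structured. The sketch below writes container lifecycle events as JSON lines, the kind of record a log pipeline can collect, and then filters and aggregates them to count restarts per application. The field names and the log_event helper are assumptions for illustration, not the log format of any specific orchestration tool.

```python
import io
import json
from collections import Counter


def log_event(stream: io.TextIOBase, event: str, app: str, host: str, ip: str, port: int) -> None:
    """Append one container lifecycle event as a JSON line."""
    record = {"event": event, "app": app, "host": host, "ip": ip, "port": port}
    stream.write(json.dumps(record) + "\n")


buffer = io.StringIO()  # stands in for a log file or a log shipper
log_event(buffer, "start", "web", "node-a", "10.0.0.11", 8080)
log_event(buffer, "restart", "web", "node-a", "10.0.0.11", 8080)
log_event(buffer, "start", "db", "node-b", "10.0.0.21", 5432)
log_event(buffer, "restart", "web", "node-a", "10.0.0.11", 8080)

# A log management tool would collect, filter, and aggregate lines like these.
records = [json.loads(line) for line in buffer.getvalue().splitlines()]
restarts = Counter(r["app"] for r in records if r["event"] == "restart")
print(restarts)
```

Because every event carries the same fields, questions such as "which application restarts most often?" become a simple filter and count.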

Benefits of container orchestration

- Automation: Automate container deployment across environments and applications.

- Consistency: Ensure all containers get deployed consistently across environments.

- Scalability: Scale applications up or down without a negative impact on the environment or the application itself.

- Security: Manage security policies for each container based on its role in the infrastructure (e.g., web server vs. database server). This makes it easy for developers and operations staff alike to understand which security policies apply to each container, and it helps teams comply with those policies while scaling the application without weakening security.

- Portability: Deploy containers across environments and applications.

Conclusion

This article outlined what containers are and what benefits they bring to the table. Container technology is evolving rapidly, and knowing the terminology will be crucial to your learning journey. Likewise, container orchestration is an important part of the container ecosystem, as it helps you automate, manage, and scale your applications.

Thank you for reading!

Happy Reading!

Written by

Hemanth Narayanaswamy

I'm a DevOps Engineer with a passion for automating and streamlining operational processes. I'm constantly learning and adapting to new technologies, and always looking for ways to improve and expand upon existing solutions.