LRU Cache (LeetCode)

Alex Kang

Alex Kang

Problem Description

https://leetcode.com/problems/lru-cache/

Design a data structure that follows the constraints of a Least Recently Used (LRU) cache.

Implement the LRUCache class:

LRUCache(int capacity) Initialize the LRU cache with positive size capacity.

int get(int key) Return the value of the key if the key exists, otherwise return -1.

void put(int key, int value) Update the value of the key if the key exists. Otherwise, add the key-value pair to the cache. If the number of keys exceeds the capacity from this operation, evict the least recently used key. The functions get and put must each run in O(1) average time complexity.

Intuition

If we maintain a list ordered by recency, then whenever a new element is accessed, we can add it to the front of the list and remove the last element from the list. If an element is updated, we also need to move the element to the front of the list.

The operations needed:

Adding an element to the front of the list

Removing an element from the end of the list

Moving an element

- from the middle to the front of the list.

If we use a doubly linked list, all of these operations can be done in constant time.

Time Complexity

Hashmap Lookup: O(1) time <- - -> Array Search: O(n) time

Doubly Linked List Ordering: O(1) time <- - -> Array Sort: O(n) time

Space Complexity

Hashmap: O(n) <- - -> Array: O(n)

Doubly Linked List: O(n) <- - -> Array: O(n)

For efficiency, we can use a hashmap + doubly linked list, since it provides much better time complexity with similar space complexity.

Test Cases

Some cases that need to be tested are:

Evicting the least recently used key

Cache with size 1

Updating the value of an existing key

Calling

get()on a non-existent key

Python Implementation

Things to Note

Node

- The Node class should have a

keyvariable to help delete entries from thehash_map.

How Sentinel Works

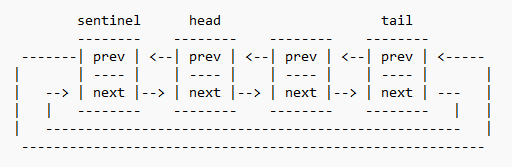

A sentinel node is a dummy node that points to the first and last elements of the list.

sentinel.nextisheadof the doubly linked listsentinel.previstailof the doubly linked list

Hash Map

- When a

nodeis added/removed from the linked list, the correspondingnodehas to be added/removed from thehash_map.

Updating Key

- When a

keyis updated, thenodeshould move to the front of the list

Code

class Node:

def __init__(self, key, val):

self.key = key

self.val = val

self.prev = None

self.next = None

class LRUCache:

def __init__(self, capacity):

self.capacity = capacity

# HashMap: key -> key, value -> Node

self.hash_map = {}

# use sentinel node to simplify operations

# sentinel.next -> head

# sentinel.prev -> tail

self.sentinel = Node(None, None)

self.sentinel.next = self.sentinel

self.sentinel.prev = self.sentinel

def put(self, key, val):

# if key exists, update value and move it to the front

if key in self.hash_map:

node = self.hash_map[key]

self.remove_node(node)

self.insert_into_head(node)

node.val = val

else:

node = Node(key, val)

# if cache is not full

if len(self.hash_map) < self.capacity:

self.hash_map[key] = node

self.insert_into_head(node)

# if cache is full, update linked list

else:

self.hash_map[key] = node

self.insert_into_head(node)

self.remove_from_tail()

def get(self, key):

# if element is in cache, move it to the front

if key in self.hash_map:

node = self.hash_map[key]

self.remove_node(node)

self.insert_into_head(node)

return node.val

else:

return -1

def insert_into_head(self, node):

# currently, sentinel <-> prev_head

# we want it to be updated to sentinel <-> node <-> prev_head

# sentinel <- node - prev_head

node.prev = self.sentinel

# sentinel - node -> prev_head

node.next = self.sentinel.next

# sentinel - node <- prev_head

self.sentinel.next.prev = node

# sentinel -> node - prev_head

self.sentinel.next = node

def remove_from_tail(self):

# remove from hash map since it shouldn't be in the cache

del self.hash_map[self.sentinel.prev.key]

node = self.sentinel.prev

self.remove_node(node)

def remove_node(self, node):

# currently, prev <-> node <-> next

# we want it to be updated to prev <-> next

# prev <- next

node.next.prev = node.prev

# prev -> next

node.prev.next = node.next

# function for debugging

def print_hash(self):

print('---- Hash Map ----')

for key, node in self.hash_map.items():

print("key: ", key, "val: ", node.val)

# function for debugging

def print_linked_list(self):

print('---- Linked List----')

head = self.sentinel.next

lst = []

while head != self.sentinel:

lst.append((head.key, head.val))

head = head.next

print(lst)

Testing

The two functions print_hash and print_linked_list can be used to print the current hash and the linked list.

As mentioned above, the cases that need to be tested are:

Evicting the least recently used key

Cache with size 1

Updating the value of an existing key

Calling

get()on a non-existent key

Test Case 1 (Evicting the least recently used key)

Input:

res = []

lru = LRUCache(2)

res.append(lru.put(1, 1))

res.append(lru.put(2, 2))

res.append(lru.get(1))

res.append(lru.put(3, 3))

res.append(lru.get(2))

res.append(lru.put(4, 4))

res.append(lru.get(1))

res.append(lru.get(3))

res.append(lru.get(4))

Output:

- [None, None, 1, None, -1, None, -1, 3, 4]

States for put()

1.

---- Hash Map ----

key: 1 val: 1

---- Linked List----

[(1, 1)]

2.

---- Hash Map ----

key: 1 val: 1

key: 2 val: 2

---- Linked List----

[(2, 2), (1, 1)]

3.

---- Hash Map ----

key: 1 val: 1

key: 3 val: 3

---- Linked List----

[(3, 3), (1, 1)]

4.

---- Hash Map ----

key: 3 val: 3

key: 4 val: 4

---- Linked List----

[(4, 4), (3, 3)]

As shown, the evicting the least recently used element is properly implemented.

Subscribe to my newsletter

Read articles from Alex Kang directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by