Kubernetes Architecture — Explained

Techiez Hub

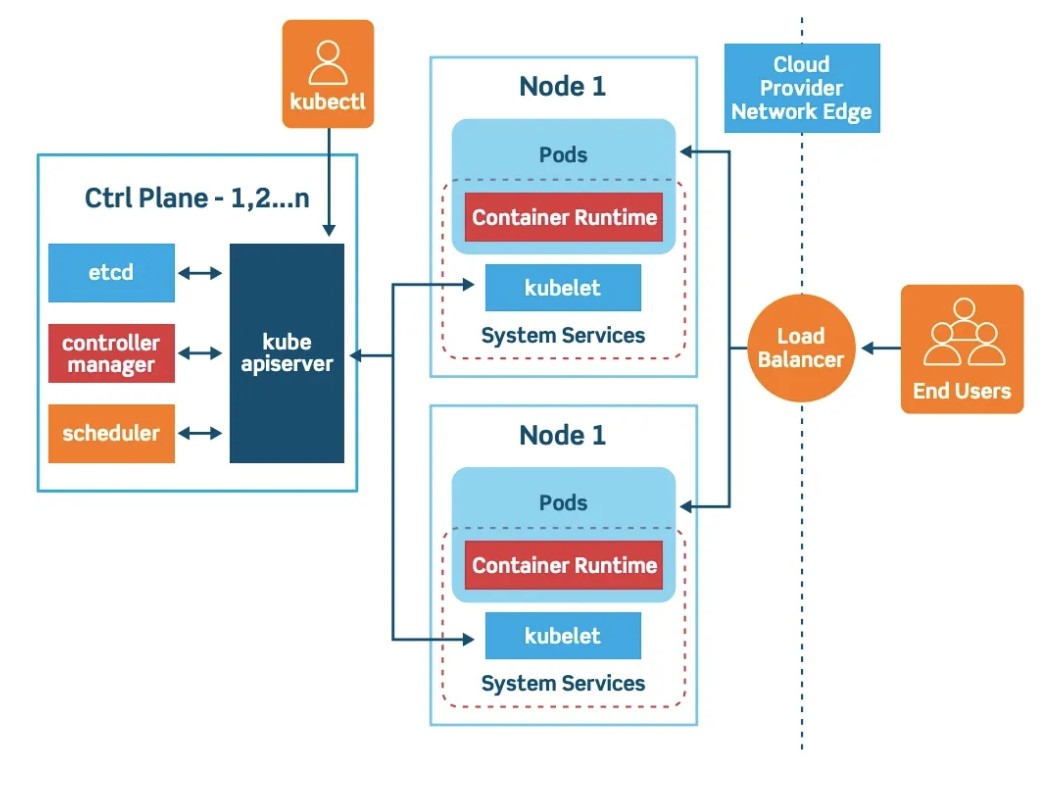

Kubernetes is a powerful container orchestration platform that allows you to manage, deploy and scale containerized applications in a highly efficient and automated way. Its architecture is designed to be highly scalable and fault-tolerant, making it suitable for large-scale distributed systems.

Here is a brief overview of the main Kubernetes components, each paired with a real-life analogy:

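1. Master Node (Control Plane):

The master node, called the control plane in current Kubernetes documentation, manages the overall state of the cluster. It records the desired state you declare, decides which worker node each pod should run on, and runs control loops that continuously move the actual state toward the desired state, for example by replacing failed pods or adjusting the number of replicas. Its core components are the Kubernetes API server (kube-apiserver), the front end that every other component and client talks to; etcd, a consistent key-value store that holds all cluster data; kube-scheduler, which assigns new pods to nodes; and kube-controller-manager, which runs the built-in controllers.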

Real-life example: The master node can be compared to a conductor of an orchestra. Just as a conductor directs individual musicians to play in harmony, the Kubernetes master node directs individual containers to work together in a coordinated manner.
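
To make the scheduler's job more concrete, here is a toy Python model of it. The real kube-scheduler runs a chain of filter and score plugins covering resources, affinity, taints and more; this sketch assumes only CPU and memory requests matter and uses a made-up scoring rule (prefer the node with the most CPU left over). The names `Node`, `PodRequest` and `schedule` are hypothetical and not part of any Kubernetes library.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millis: int   # unreserved CPU in millicores (1000 = one core)
    free_memory_mib: int   # unreserved memory in MiB


@dataclass
class PodRequest:
    name: str
    cpu_millis: int
    memory_mib: int


def schedule(pod: PodRequest, nodes: List[Node]) -> Optional[str]:
    """Pick a node for `pod` and reserve its resources; None means the pod stays Pending."""
    # Filter phase: keep only nodes with enough free CPU and memory.
    feasible = [
        n for n in nodes
        if n.free_cpu_millis >= pod.cpu_millis and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None
    # Score phase (toy rule): prefer the node with the most CPU left after placement.
    best = max(feasible, key=lambda n: n.free_cpu_millis - pod.cpu_millis)
    # Reserve the resources so the next decision sees the reduced capacity.
    best.free_cpu_millis -= pod.cpu_millis
    best.free_memory_mib -= pod.memory_mib
    return best.name


if __name__ == "__main__":
    nodes = [Node("node-a", 500, 1024), Node("node-b", 2000, 4096)]
    print(schedule(PodRequest("web", 250, 256), nodes))   # node-b
    print(schedule(PodRequest("big", 4000, 512), nodes))  # None
```

A pod that fits nowhere gets `None` here, which corresponds to a real pod staying in the Pending state until capacity frees up.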

2. Worker Node:

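The worker node is where containers actually run. Each worker node runs a kubelet, the agent that starts and monitors the pods assigned to that node; a container runtime, such as containerd, that runs the containers themselves; and usually kube-proxy, which implements service networking on the node. A Container Network Interface (CNI) plugin provides connectivity between pods, including pods on different nodes. Each worker node can run many pods, and the cluster scales horizontally by adding more worker nodes to handle increased workload.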

Real-life example: The worker node can be compared to individual musicians in an orchestra. Just as each musician plays a different instrument to create a unified sound, each container on a worker node performs a different function to create a unified application.
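
The kubelet's core job can be pictured as a loop that compares the pods bound to its node with what is actually running, then starts or stops containers to close the gap. The sketch below is a simplified model under that assumption: `FakeRuntime` is a hypothetical stand-in for a real runtime such as containerd, pods are reduced to plain names, and `sync_node` is an illustrative function, not kubelet code.

```python
from typing import Dict, Set


class FakeRuntime:
    """Hypothetical stand-in for a container runtime such as containerd."""

    def __init__(self) -> None:
        self.running: Set[str] = set()

    def start(self, pod: str) -> None:
        print(f"starting {pod}")
        self.running.add(pod)

    def stop(self, pod: str) -> None:
        print(f"stopping {pod}")
        self.running.discard(pod)


def sync_node(node_name: str, bindings: Dict[str, str], runtime: FakeRuntime) -> None:
    """One pass of a toy kubelet-style sync loop.

    `bindings` maps pod name -> node name, standing in for the pod-to-node
    assignments the real kubelet learns about through the API server.
    """
    desired = {pod for pod, node in bindings.items() if node == node_name}
    # Pods bound to this node that are not running yet: start them.
    for pod in sorted(desired - runtime.running):
        runtime.start(pod)
    # Pods running here that are no longer bound to this node: stop them.
    for pod in sorted(runtime.running - desired):
        runtime.stop(pod)


if __name__ == "__main__":
    runtime = FakeRuntime()
    runtime.running = {"old-job"}
    bindings = {"web-1": "node-a", "cache-1": "node-a", "web-2": "node-b"}
    sync_node("node-a", bindings, runtime)
    print(sorted(runtime.running))  # ['cache-1', 'web-1']
```

Running the same pass twice changes nothing the second time, which is the property that lets a real node agent repeat its sync safely.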

3. Pods:

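A pod is the smallest deployable unit in Kubernetes. It groups one or more containers that share a network namespace (and therefore an IP address) and can share storage volumes; many pods run a single main container. Pods are stored as objects through the Kubernetes API server, are usually created by higher-level controllers such as deployments, are assigned to a worker node by kube-scheduler, and are started by that node's kubelet. A pod can run on any worker node that satisfies its resource requests and placement constraints.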

Real-life example: A pod can be compared to a single instrument in an orchestra. Just as each instrument produces a distinct sound, each pod performs a distinct function within an application.
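
Because a pod can hold more than one container, a common pattern is an application container plus a helper ("sidecar") container. The sketch below builds such a Pod manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f -` accepts as well as YAML. The pod name, labels, image tags and paths are illustrative assumptions; the point is that both containers mount the same `emptyDir` volume, so log files written by the web server are visible to the sidecar.

```python
import json

# Hypothetical two-container Pod: a web server plus a log-reading sidecar.
# Both containers mount the shared "logs" emptyDir volume and share the Pod's network namespace.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-sidecar", "labels": {"app": "web"}},
    "spec": {
        "volumes": [{"name": "logs", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",
                "ports": [{"containerPort": 80}],
                "volumeMounts": [{"name": "logs", "mountPath": "/var/log/nginx"}],
            },
            {
                "name": "log-shipper",
                "image": "busybox:1.36",
                "command": ["sh", "-c", "tail -F /logs/access.log"],
                "volumeMounts": [{"name": "logs", "mountPath": "/logs"}],
            },
        ],
    },
}

if __name__ == "__main__":
    # e.g. `python pod.py | kubectl apply -f -` (pod.py is a hypothetical file name)
    print(json.dumps(pod_manifest, indent=2))
```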

4. Services:

A service is an abstraction layer that provides a stable way to reach a set of pods. It selects pods by their labels and exposes them behind a single virtual IP address and DNS name, load-balancing traffic across the matching pods so that clients keep working as individual pods are replaced. Depending on its type (ClusterIP, NodePort or LoadBalancer), a service can be reached only by other pods inside the cluster or by external clients as well.

Real-life example: A service can be compared to a section of instruments in an orchestra. Just as a section of instruments produces a unified sound, a service provides a unified endpoint for accessing a set of pods.
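
A service finds its pods with a label selector: a pod matches when its labels include every key/value pair in the selector. The toy model below shows that matching plus a simple round-robin over the matches. It ignores pod readiness, and round-robin is only a stand-in: how kube-proxy actually picks a backend depends on its proxy mode. `select_endpoints` and the pod names are hypothetical.

```python
import itertools
from typing import Dict, List


def select_endpoints(selector: Dict[str, str], pods: Dict[str, Dict[str, str]]) -> List[str]:
    """Return names of pods whose labels contain every key/value pair in `selector`."""
    return sorted(
        name
        for name, labels in pods.items()
        if all(labels.get(key) == value for key, value in selector.items())
    )


if __name__ == "__main__":
    # Hypothetical pods and their labels.
    pods = {
        "web-1": {"app": "web", "tier": "frontend"},
        "web-2": {"app": "web", "tier": "frontend"},
        "db-1": {"app": "db"},
    }
    endpoints = select_endpoints({"app": "web"}, pods)
    print(endpoints)  # ['web-1', 'web-2']
    # Toy round-robin: each new request goes to the next matching pod.
    backends = itertools.cycle(endpoints)
    print([next(backends) for _ in range(3)])  # ['web-1', 'web-2', 'web-1']
```

Because matching is by label rather than by pod name, a replacement pod carrying the same labels joins the service automatically.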

5. Deployments:

A deployment is a higher-level abstraction that manages the desired state of a set of pods through ReplicaSets. It lets you specify how many replicas you want to run and the strategy for updating them, such as a rolling update that replaces old pods with new ones gradually. Deployments are used to keep an application highly available, to roll out new versions without downtime, and to scale the application up or down.

Real-life example: A deployment can be compared to the sheet music used by an orchestra. Just as the sheet music specifies the desired state of the performance, a deployment specifies the desired state of the application.
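
A rolling update is paced by two deployment settings: `maxSurge`, how many pods may exist above the desired count, and `maxUnavailable`, how many may be missing below it. The sketch below simulates how those two limits govern the swap from old to new pods. It assumes absolute counts (Kubernetes also accepts percentages, defaulting to 25% for both) and that new pods become ready instantly; it models the idea, not the deployment controller's actual code, and `rolling_update` is a hypothetical name.

```python
from typing import List, Tuple


def rolling_update(replicas: int, max_surge: int, max_unavailable: int) -> List[Tuple[int, int]]:
    """Simulate a toy rolling update; return (old_pods, new_pods) after each step.

    Simplifying assumption: every new pod becomes ready immediately.
    """
    if max_surge == 0 and max_unavailable == 0:
        # No room to add or remove pods; Kubernetes validation rejects this combination too.
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = []
    while old > 0 or new < replicas:
        # Scale up the new version without exceeding replicas + max_surge pods in total.
        scale_up = min(replicas + max_surge - (old + new), replicas - new)
        new += max(scale_up, 0)
        # Scale down the old version while keeping at least replicas - max_unavailable pods.
        scale_down = min(old, (old + new) - (replicas - max_unavailable))
        old -= max(scale_down, 0)
        steps.append((old, new))
    return steps


if __name__ == "__main__":
    print(rolling_update(3, max_surge=1, max_unavailable=0))  # [(2, 1), (1, 2), (0, 3)]
```

With `max_surge=1` and `max_unavailable=0`, one new pod is added before each old pod is removed, so three pods stay available throughout; raising `max_unavailable` lets the update move faster at the cost of temporarily reduced capacity.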

Written by

Techiez Hub

As a highly experienced DevOps Consultant with over 12 years of industry experience, I have honed my skills in building and maintaining scalable infrastructure for cloud-based applications. I have a strong background in AWS and Azure, and I'm well-versed in using tools such as Terraform, Kubernetes, and Docker to create automated, efficient systems. Throughout my career, I have worked on a variety of projects, from small startups to large-scale enterprises. I pride myself on my ability to communicate effectively with clients and colleagues, and to work collaboratively to find the best solutions to complex problems. Some of my key skills and areas of expertise include:

1. Cloud infrastructure design and implementation
2. Automation and scripting using tools such as Terraform and Ansible
3. Containerization with Docker and Kubernetes
4. Monitoring and logging with tools such as Prometheus, Grafana, and ELK stack
5. CI/CD pipelines with Jenkins and GitLab