Probability for Machine Learning: Bayes Theorem

Rhythm Rawat

Hi techie, hope you're doing great. Previously, we covered the Machine Learning algorithms series (supervised and unsupervised). With this blog, we are starting the "Probability for Machine Learning" series, where we'll cover the probability concepts required for machine learning. In this blog, we will cover Bayes Theorem.

Bayes theorem is a powerful tool for reasoning under uncertainty. It allows us to update our beliefs about a hypothesis based on new evidence. In machine learning, Bayes theorem can be used for various tasks, such as classification, regression, clustering, and anomaly detection.

What is Bayes Theorem?

Bayes theorem is a mathematical formula that relates the conditional probabilities of two events. The theorem states that:

$$P(A|B) = \frac{P(B|A)P(A)}{P(B)}$$

where A and B are any two events with P(B) > 0,

P(A|B) is the posterior probability of A given B,

P(B|A) is the likelihood, i.e., the probability of B given A,

P(A) is the prior probability of A, and

P(B) is the marginal probability of B (also called the evidence).

The theorem can be interpreted as follows: the posterior probability of A given B is proportional to the product of the likelihood of B given A and the prior probability of A. The denominator P(B) is a normalizing constant that ensures that the posterior probability sums to one over all possible values of A.
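To make the normalizing role of P(B) concrete, here is a minimal Python sketch that computes the posterior for an event A and for its complement. The scenario and the numbers (a 1% prior for A, a 95% chance of B when A holds, a 5% chance of B when it does not) are hypothetical and chosen only for illustration. P(B) is obtained by summing the two numerators, which is exactly what makes the two posteriors add up to one.

```python
def posterior(prior_a, lik_b_given_a, lik_b_given_not_a):
    """Return (P(A|B), P(not A|B)) using Bayes theorem.

    P(B) is computed as the sum of the two numerators, so it acts as the
    normalizing constant that makes the posteriors sum to one.
    """
    joint_a = lik_b_given_a * prior_a                # P(B|A) P(A)
    joint_not_a = lik_b_given_not_a * (1 - prior_a)  # P(B|not A) P(not A)
    p_b = joint_a + joint_not_a                      # P(B), the normalizer
    return joint_a / p_b, joint_not_a / p_b


# Hypothetical example: A = "has a condition", B = "test is positive".
post_a, post_not_a = posterior(prior_a=0.01, lik_b_given_a=0.95, lik_b_given_not_a=0.05)
print(round(post_a, 3))  # 0.161
```

Even with a fairly accurate test, the small prior keeps the posterior low, which is the kind of belief update the formula captures.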

How to Derive Bayes Theorem?

Bayes theorem follows directly from the definition of conditional probability; the law of total probability then gives a useful expanded form of its denominator.

The definition of conditional probability states that:

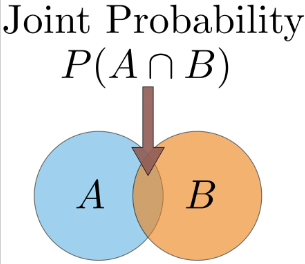

$$P(A|B) = \frac{P(A \cap B)}{P(B)}$$

where P(A∩B) is the joint probability of A and B, i.e., the probability that both events occur.
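A quick way to check the definition is to enumerate a small sample space. The sketch below assumes a fair six-sided die, with A = "the roll is even" and B = "the roll is greater than 3"; the helper names `prob` and `cond_prob` are hypothetical, and `Fraction` keeps the arithmetic exact.

```python
from fractions import Fraction

# Illustrative sample space: one roll of a fair six-sided die.
OMEGA = {1, 2, 3, 4, 5, 6}


def prob(event):
    """P(event) under a uniform distribution on OMEGA."""
    return Fraction(len(event & OMEGA), len(OMEGA))


def cond_prob(a, b):
    """P(A|B) = P(A ∩ B) / P(B), defined only when P(B) > 0."""
    p_b = prob(b)
    if p_b == 0:
        raise ValueError("P(B) must be positive")
    return prob(a & b) / p_b


A = {2, 4, 6}  # the roll is even
B = {4, 5, 6}  # the roll is greater than 3
print(cond_prob(A, B))  # 2/3
```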

The law of total probability states that:

$$P(B) = \sum_{i} P(B|A_i)P(A_i)$$

where {A_i} is a partition of the sample space, i.e., a set of mutually exclusive and exhaustive events.
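Using an illustrative fair-die setup (an assumption, not part of the original text), the sketch below splits the sample space into the hypothetical partition {1, 2}, {3, 4}, {5, 6} and checks that the weighted sum of conditional probabilities matches P(B) computed directly.

```python
from fractions import Fraction

OMEGA = {1, 2, 3, 4, 5, 6}  # fair die, uniform probabilities (illustrative)


def prob(event):
    """P(event) under a uniform distribution on OMEGA."""
    return Fraction(len(event & OMEGA), len(OMEGA))


def cond_prob(a, b):
    """P(A|B) = P(A ∩ B) / P(B)."""
    return prob(a & b) / prob(b)


# Hypothetical partition: mutually exclusive and exhaustive events A_i.
PARTITION = [{1, 2}, {3, 4}, {5, 6}]
assert set().union(*PARTITION) == OMEGA                  # exhaustive
assert sum(len(a) for a in PARTITION) == len(OMEGA)      # no overlaps

B = {4, 5, 6}  # the roll is greater than 3

# Law of total probability: P(B) = sum_i P(B|A_i) P(A_i)
p_b_total = sum(cond_prob(B, a_i) * prob(a_i) for a_i in PARTITION)
print(p_b_total, prob(B))  # 1/2 1/2
```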

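Writing the joint probability in both orders with the definition of conditional probability gives:

$$P(A \cap B) = P(A|B)P(B) = P(B|A)P(A)$$

Dividing both sides by P(B) yields Bayes theorem. If A is one of the events A_i in the partition, we can then expand the denominator with the law of total probability:

$$P(A|B) = \frac{P(B|A)P(A)}{\sum_{i} P(B|A_i)P(A_i)}$$

This is the original formula with the marginal probability P(B) written out explicitly.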
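As a sanity check on the expanded form, this sketch (again assuming a fair die and the hypothetical partition {1, 2}, {3, 4}, {5, 6}) computes P(A_j | B) for every part using the law-of-total-probability denominator and compares it with the direct definition P(A_j ∩ B) / P(B). Because the A_i form a partition, the resulting posteriors also sum to one.

```python
from fractions import Fraction

OMEGA = {1, 2, 3, 4, 5, 6}            # fair die, uniform probabilities (illustrative)
PARTITION = [{1, 2}, {3, 4}, {5, 6}]  # hypothetical partition A_1, A_2, A_3
B = {4, 5, 6}                         # the roll is greater than 3


def prob(event):
    """P(event) under a uniform distribution on OMEGA."""
    return Fraction(len(event & OMEGA), len(OMEGA))


def cond_prob(a, b):
    """P(A|B) = P(A ∩ B) / P(B)."""
    return prob(a & b) / prob(b)


def bayes(j):
    """P(A_j | B) with the denominator expanded by the law of total probability."""
    numerator = cond_prob(B, PARTITION[j]) * prob(PARTITION[j])
    denominator = sum(cond_prob(B, a_i) * prob(a_i) for a_i in PARTITION)
    return numerator / denominator


for j, a_j in enumerate(PARTITION):
    # Bayes result next to the direct definition; the two columns should match.
    print(sorted(a_j), bayes(j), cond_prob(a_j, B))
```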

How to Apply Bayes Theorem to Machine Learning?

Bayes theorem can be applied to machine learning problems by treating the hypothesis as an event A and the data as an event B. The goal is to find the most probable hypothesis given the data, i.e., the hypothesis that maximizes the posterior probability P(A|B). This is known as maximum a posteriori (MAP) estimation.
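Here is a minimal sketch of picking the most probable hypothesis. The setup is hypothetical: three candidate coin biases with a uniform prior, observed data of 7 heads in 10 flips, and a binomial likelihood. The hypothesis with the largest posterior is the maximum a posteriori (MAP) estimate.

```python
from math import comb

# Hypothetical setup, invented for illustration.
HYPOTHESES = [0.3, 0.5, 0.8]                              # candidate P(heads) values
PRIOR = {h: 1 / len(HYPOTHESES) for h in HYPOTHESES}      # uniform prior P(A)
HEADS, FLIPS = 7, 10                                      # observed data B


def likelihood(p):
    """P(data | bias p): binomial probability of HEADS heads in FLIPS flips."""
    return comb(FLIPS, HEADS) * p**HEADS * (1 - p) ** (FLIPS - HEADS)


unnormalized = {h: likelihood(h) * PRIOR[h] for h in HYPOTHESES}  # P(B|A) P(A)
evidence = sum(unnormalized.values())                             # P(B)
posterior = {h: v / evidence for h, v in unnormalized.items()}    # P(A|B)

map_hypothesis = max(posterior, key=posterior.get)  # argmax of the posterior
print(map_hypothesis)  # 0.8
```

With a uniform prior the MAP choice coincides with the hypothesis of highest likelihood; a non-uniform prior could shift it.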

For example, suppose we want to classify an email as spam or not spam based on its content. We can use Bayes theorem to calculate the posterior probabilities of each class given the email:

$$P(\text{spam}|\text{email}) = \frac{P(\text{email}|\text{spam})P(\text{spam})}{P(\text{email})}$$

$$P(\text{not spam}|\text{email}) = \frac{P(\text{email}|\text{not spam})P(\text{not spam})}{P(\text{email})}$$

Here, P(spam) and P(not spam) are the prior probabilities of each class, which can be estimated from the fraction of spam and not-spam emails in a training dataset. P(email|spam) and P(email|not spam) are the likelihoods of the email under each class. They are typically modelled by representing the email as word features (for example, bag-of-words counts or TF-IDF weights) and assuming that the words are conditionally independent given the class, which gives the naive Bayes classifier. P(email) is the marginal probability of the email; because it is the same for both classes, it can be ignored when comparing them.

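To classify the email, we compare the two posterior probabilities and choose the class with the higher value. Alternatively, we can classify the email as spam only when P(spam|email) exceeds a chosen threshold, which lets us trade off false positives against false negatives. In that case the posteriors must be properly normalized, so P(email) is computed as the sum of the two numerators rather than ignored.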
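The sketch below puts the spam example together as a tiny multinomial naive Bayes classifier. Everything in it is an assumption for illustration: the six training emails are invented, the likelihood uses bag-of-words counts with the naive (conditional independence) assumption and add-one (Laplace) smoothing, and the 0.9 threshold is arbitrary. Working with log probabilities avoids numerical underflow on longer emails.

```python
import math
from collections import Counter

# Hypothetical toy training data, invented for illustration only.
TRAIN = [
    ("win money now", "spam"),
    ("claim your free prize now", "spam"),
    ("free money offer", "spam"),
    ("meeting schedule for monday", "not spam"),
    ("lunch with the team", "not spam"),
    ("project meeting notes", "not spam"),
]


def train(docs):
    """Estimate class priors and per-class bag-of-words counts."""
    class_counts = Counter(label for _, label in docs)
    word_counts = {label: Counter() for label in class_counts}
    for text, label in docs:
        word_counts[label].update(text.split())
    vocab = {w for counts in word_counts.values() for w in counts}
    priors = {c: n / len(docs) for c, n in class_counts.items()}  # P(class)
    return priors, word_counts, vocab


def posteriors(text, priors, word_counts, vocab):
    """Return P(class | email) under the naive Bayes assumption."""
    log_scores = {}
    for c, prior in priors.items():
        total = sum(word_counts[c].values())
        score = math.log(prior)  # log P(class)
        for w in text.split():
            if w not in vocab:
                continue  # skip words never seen in training
            # Add-one smoothing so unseen (word, class) pairs are not zero.
            score += math.log((word_counts[c][w] + 1) / (total + len(vocab)))
        log_scores[c] = score  # log of P(email|class) P(class)
    # Divide by P(email) = sum over classes, computed stably in log space.
    m = max(log_scores.values())
    unnorm = {c: math.exp(s - m) for c, s in log_scores.items()}
    z = sum(unnorm.values())
    return {c: v / z for c, v in unnorm.items()}


priors, word_counts, vocab = train(TRAIN)
post = posteriors("free money now", priors, word_counts, vocab)
label = max(post, key=post.get)  # rule 1: pick the class with the higher posterior
is_spam = post["spam"] > 0.9     # rule 2: hypothetical stricter threshold
print(label, round(post["spam"], 3), is_spam)  # spam 0.964 True
```

For the argmax rule, the normalization step could be skipped; it is kept here because the threshold rule needs real probabilities. For real projects, a library implementation such as scikit-learn's `MultinomialNB` is usually a better starting point than a hand-rolled version.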

Bayes theorem can also be used for other machine learning tasks, such as regression, clustering, and anomaly detection. The main idea is to use prior knowledge and data to update our beliefs about a hypothesis or a parameter. This approach is known as Bayesian inference or Bayesian learning.
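One standard illustration of Bayesian inference for a parameter (not covered in the post itself) is the Beta-Binomial model: with a Beta(α, β) prior on a coin's probability of heads θ and a binomial likelihood, the posterior is Beta(α + heads, β + tails). The sketch below assumes a Beta(2, 2) prior and hypothetical flip data, and shows that updating one flip at a time reaches the same posterior as a single batch update.

```python
def update_beta(alpha, beta, heads, tails):
    """Conjugate update: a Beta(alpha, beta) prior plus binomial data gives a Beta posterior."""
    return alpha + heads, beta + tails


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


prior = (2, 2)  # hypothetical prior: a mild belief that theta is near 0.5
posterior = update_beta(*prior, heads=7, tails=3)
print(posterior, round(beta_mean(*posterior), 3))  # (9, 5) 0.643

# Sequential updating: each posterior becomes the prior for the next flip.
params = prior
for flip in "HHTHHHTHTH":  # hypothetical sequence with 7 heads and 3 tails
    params = update_beta(*params, heads=int(flip == "H"), tails=int(flip == "T"))
print(params)  # (9, 5)
```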

Conclusion

Bayes theorem is a fundamental concept in probability theory and statistics that allows us to update our beliefs about a hypothesis based on new evidence. It has many applications in machine learning, such as classification, regression, clustering, and anomaly detection. By using Bayes theorem, we can incorporate prior knowledge and data to perform Bayesian inference or Bayesian learning. This approach can help us deal with uncertainty, complexity, and noise in real-world problems. Bayes theorem is also a useful tool for reasoning and decision-making under uncertainty.

Hope you like the blog. Please leave your suggestions for improving the blogs in the comments; I would really appreciate that. Subscribe to the newsletter to get more such updates.

Thanks :)

