Keeping it DRY with reusable Terraform modules in the AWS cloud

Mahesh Upreti


Did you know you can spin up all the resources you need and destroy them again within minutes? I was fascinated when I first heard this, and I wanted to understand what makes that kind of automation possible. After some exploration, I came across the concept of Infrastructure as Code. So you might be asking: hey, what is that?

Well, Infrastructure as Code (IaC) is the practice of managing and provisioning computing infrastructure through code. This allows infrastructure elements such as compute resources, networks, and storage to be automated, version controlled, tested, and shared. There are several tools in this space; here we will look at Terraform, one of the most widely used IaC tools for building, changing, and versioning infrastructure safely and efficiently. Terraform lets you define the building blocks of your infrastructure in .tf configuration files. When you run Terraform commands, it reads your configuration and provisions and manages those resources.

Enough talking! Now, let's move towards the practical :)

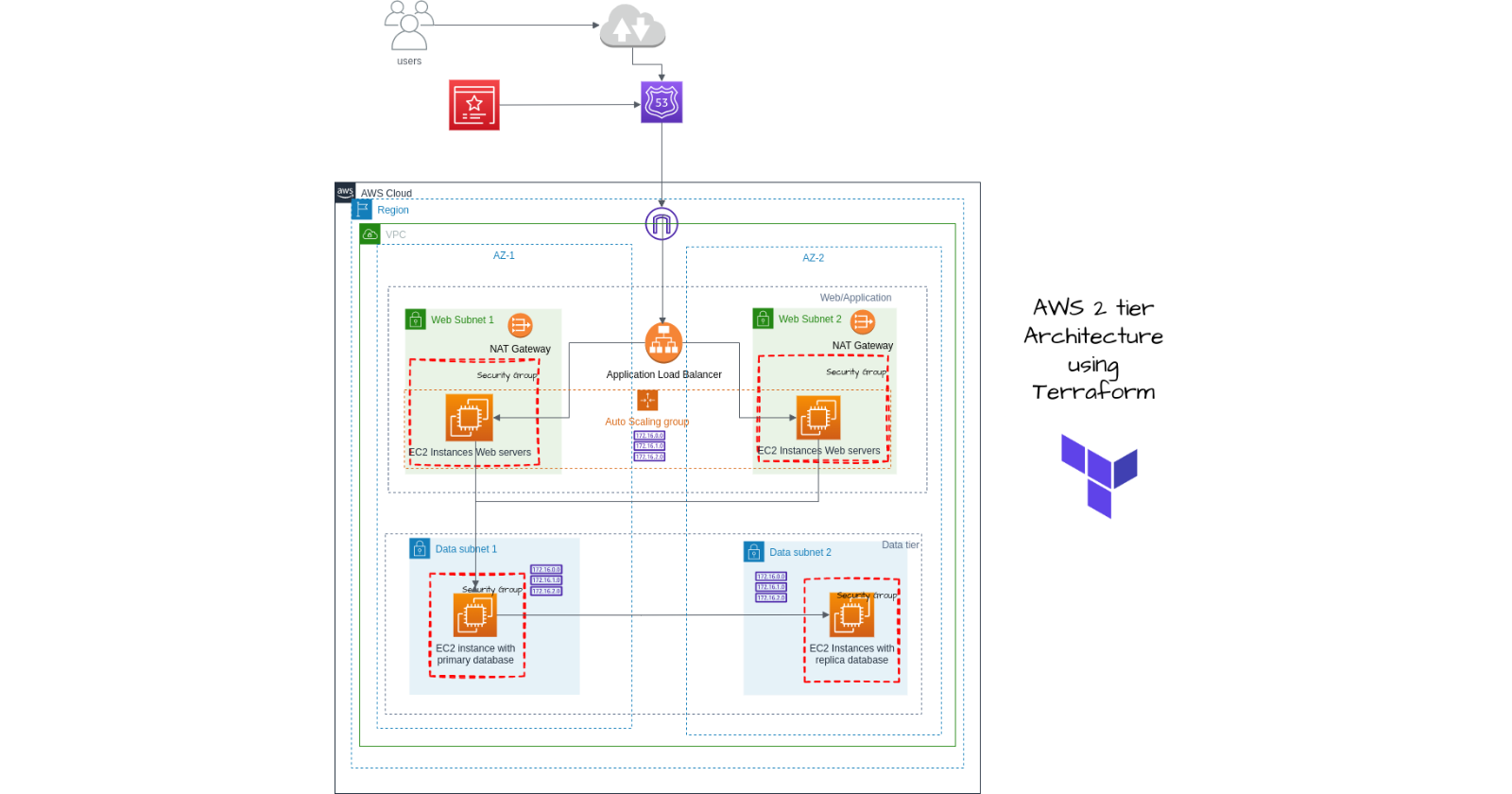

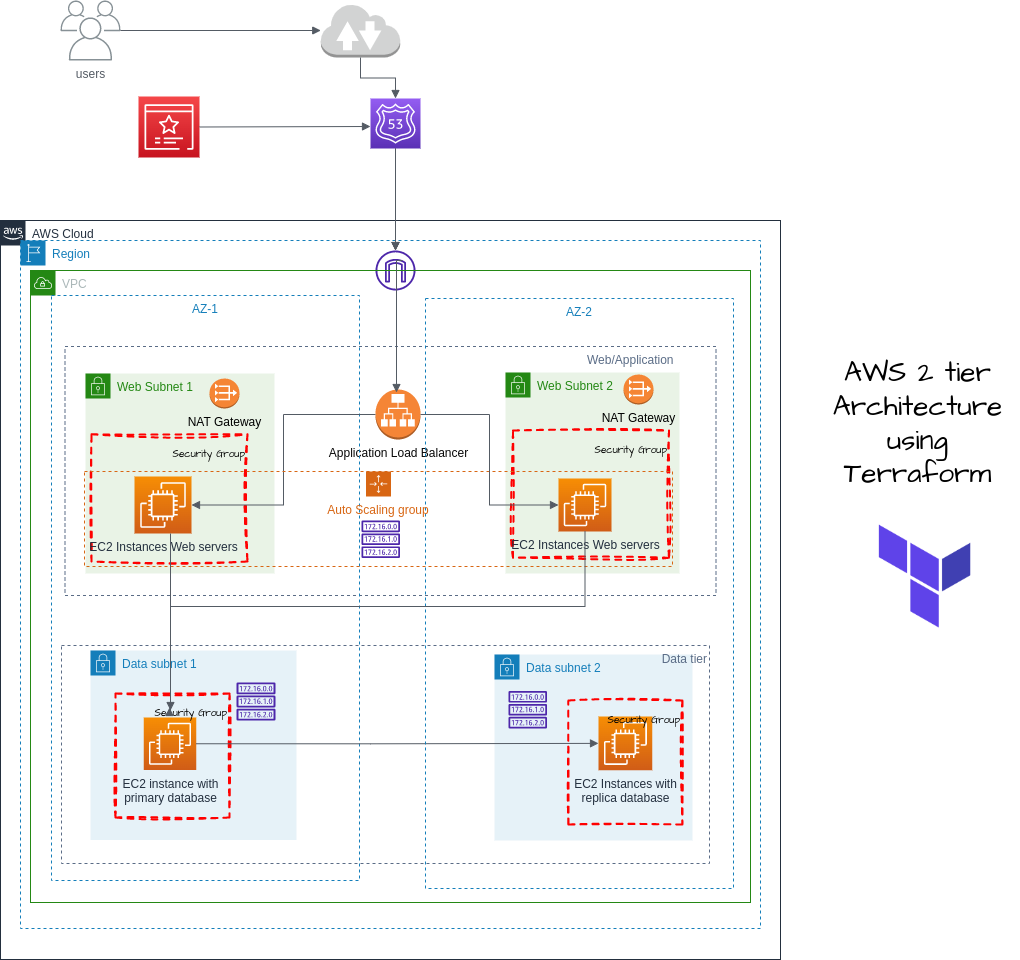

Provisioning a two-tier application architecture in the AWS cloud with an IaC approach using Terraform.

Prerequisites

A code editor installed on your machine (VS Code in our case)

Terraform installed on your machine (Ubuntu is used in this tutorial)

A basic working knowledge of AWS services such as EC2, VPC, security groups, Route 53, load balancers, Auto Scaling groups, and AWS Certificate Manager.

Architecture

Here, we will use a SQL database installed on an EC2 instance in a private subnet rather than the RDS database service.

STEP 1: Configuring Keys

To connect to AWS and use its services, Terraform needs an IAM access key ID and secret access key. For this tutorial, you can create a new IAM user or use a role (we will use an IAM user) and generate the keys. Download the keys.

After the keys have been downloaded, verify the profile they are stored under. In most cases, they are saved in the following file under the [default] profile.

cat ~/.aws/credentials

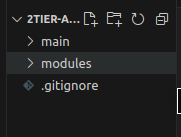

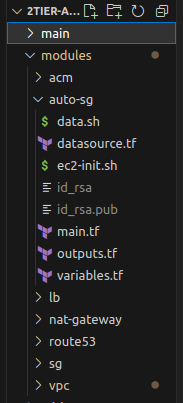

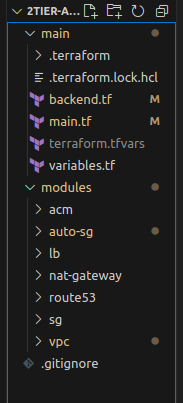

STEP 2: Folder Structure

In the working directory, create two subdirectories named main and modules. The main directory will contain our root configuration, whereas modules will contain a separate module for each service.

Now, inside the main folder create three files: main.tf for configuring the modules, terraform.tfvars for assigning values to the variables, and variables.tf for declaring the variables.

Each module will also contain main.tf for that module's configuration, variables.tf to declare the variables the module requires, and outputs.tf to export values that other modules can take as inputs.

Inside main directory:

main.tf

provider "aws" {

region = var.region

profile = "default"

}

terraform.tfvars

region = "us-east-1"

variables.tf

variable "region" {}
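The empty variable block above works, but a declaration can also carry a type, a description, and a default. Here is a minimal sketch, assuming you want us-east-1 as the fallback region (the description text and the default are my own illustration, not part of the original setup):

```hcl
# variables.tf (sketch): a typed declaration with a default value
variable "region" {
  type        = string
  description = "AWS region in which all resources are created"
  default     = "us-east-1" # assumed default; a value in terraform.tfvars still overrides it
}
```

With a type in place, Terraform rejects a value of the wrong kind at plan time rather than failing later inside a resource.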

STEP 3: Setup VPC

Create a directory inside modules named vpc. Inside vpc, we will be creating three files namely:

main.tf

#create VPC in one region

resource "aws_vpc" "main" {

cidr_block = var.vpc_cidr

instance_tenancy = "default"

enable_dns_hostnames = true

tags = {

Name = "${var.project_name}-VPC"

}

}

In the above code, the comments are self-explanatory. We have created a VPC in one region. The CIDR block comes from a variable whose value is assigned in terraform.tfvars in the main folder.
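Because the CIDR block arrives as a plain string, a typo only surfaces when AWS rejects it. One way to catch it earlier is a validation block on the module variable. This is a sketch under the assumption that you are on a Terraform version that supports variable validation (0.13 or later); the messages are illustrative:

```hcl
# modules/vpc/variables.tf (sketch): reject malformed CIDR strings at plan time
variable "vpc_cidr" {
  type        = string
  description = "CIDR block for the VPC, for example 10.0.0.0/16"

  validation {
    # cidrhost() errors on a malformed CIDR string; can() turns that error into false
    condition     = can(cidrhost(var.vpc_cidr, 0))
    error_message = "The vpc_cidr value must be a valid IPv4 CIDR block such as 10.0.0.0/16."
  }
}
```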

#create internet gateway and attach it to the VPC

resource "aws_internet_gateway" "igw" {

vpc_id = aws_vpc.main.id

tags = {

Name = "${var.project_name}-IG"

}

}

We have now created the internet gateway and attached it to our VPC. Notice that vpc_id comes from the VPC we created earlier. This is how resources reference one another in Terraform: one resource reads an attribute exported by another. You can follow Terraform's official documentation for a more detailed view.

https://registry.terraform.io/

#use data source to get all the availability zones in region

data "aws_availability_zones" "available_zones" {}

#create public subnet az1

resource "aws_subnet" "public_subnet_az1" {

vpc_id = aws_vpc.main.id

cidr_block = var.public_subnet_az1_cidr

availability_zone = data.aws_availability_zones.available_zones.names[0]

map_public_ip_on_launch = true

tags = {

Name = "public subnet az1"

}

}

#create public subnet az2

resource "aws_subnet" "public_subnet_az2" {

vpc_id = aws_vpc.main.id

cidr_block = var.public_subnet_az2_cidr

availability_zone = data.aws_availability_zones.available_zones.names[1]

map_public_ip_on_launch = true

tags = {

Name = "public subnet az2"

}

}

The data source fetches the availability zones dynamically, so the configuration keeps working if we change the region. Two public subnets are created, one in the first availability zone (az1) and one in the second (az2).
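The two subnet blocks above differ only in their index. In the spirit of keeping it DRY, the same idea could be written once with for_each and cidrsubnet(). This is only a sketch of an alternative, not what the rest of this tutorial uses: the resource name public and the local azs are hypothetical, and the CIDRs are derived from vpc_cidr instead of the per-subnet variables.

```hcl
# Alternative sketch: one subnet block for the first two availability zones
data "aws_availability_zones" "available" {
  state = "available"
}

locals {
  # first two zone names returned for the region
  azs = slice(data.aws_availability_zones.available.names, 0, 2)
}

resource "aws_subnet" "public" {
  # map of zone name => index, so each subnet gets a stable key
  for_each = { for idx, az in local.azs : az => idx }

  vpc_id                  = aws_vpc.main.id
  cidr_block              = cidrsubnet(var.vpc_cidr, 8, each.value) # /16 + 8 bits = /24
  availability_zone       = each.key
  map_public_ip_on_launch = true

  tags = {
    Name = "public subnet ${each.key}"
  }
}
```

With vpc_cidr set to 10.0.0.0/16, index 0 yields 10.0.0.0/24 and index 1 yields 10.0.1.0/24, which matches the values used later in terraform.tfvars.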

#Public Route Table to add public subnets

resource "aws_route_table" "public_route_table" {

vpc_id = aws_vpc.main.id

route {

cidr_block = "0.0.0.0/0"

gateway_id = aws_internet_gateway.igw.id

}

tags = {

Name = "public route table"

}

}

#associate public subnet az1 to the public route table

resource "aws_route_table_association" "public_subnet_az1_route_table_association" {

subnet_id = aws_subnet.public_subnet_az1.id

route_table_id = aws_route_table.public_route_table.id

}

#associate public subnet az2 to the public route table

resource "aws_route_table_association" "public_subnet_az2_route_table_association" {

subnet_id = aws_subnet.public_subnet_az2.id

route_table_id = aws_route_table.public_route_table.id

}

A public route table is created with a default route to the internet gateway, and each public subnet is associated with it.

#create private data subnet az1

resource "aws_subnet" "private_data_subnet_az1" {

vpc_id = aws_vpc.main.id

cidr_block = var.private_data_subnet_az1_cidr

availability_zone = data.aws_availability_zones.available_zones.names[0]

map_public_ip_on_launch = false

tags = {

Name = "private data subnet az1"

}

}

#create private data subnet az2

resource "aws_subnet" "private_data_subnet_az2" {

vpc_id = aws_vpc.main.id

cidr_block = var.private_data_subnet_az2_cidr

availability_zone = data.aws_availability_zones.available_zones.names[1]

map_public_ip_on_launch = false

tags = {

Name = "private data subnet az2"

}

}

Finally, two private data subnets are created.

By now you might be wondering how to know which arguments each resource accepts and which values to assign. The Terraform Registry documents every resource in detail, so following this tutorial alongside that documentation should make each argument clear.

variables.tf

variable "region" {}

variable "project_name" {}

variable "vpc_cidr"{}

variable "public_subnet_az1_cidr" {}

variable "public_subnet_az2_cidr" {}

variable "private_data_subnet_az1_cidr" {}

variable "private_data_subnet_az2_cidr" {}

The variables declared here are the ones used in the module's main.tf file. They must also be declared in the main directory's variables.tf, and their values assigned in terraform.tfvars.

outputs.tf

output "region" {

value = var.region

}

output "project_name" {

value = var.project_name

}

output "vpc_id" {

value = aws_vpc.main.id

}

output "public_subnet_az1_id" {

value = aws_subnet.public_subnet_az1.id

}

output "public_subnet_az2_id" {

value = aws_subnet.public_subnet_az2.id

}

output "private_data_subnet_az1_id" {

value = aws_subnet.private_data_subnet_az1.id

}

output "private_data_subnet_az2_id" {

value = aws_subnet.private_data_subnet_az2.id

}

output "internet_gateway"{

value = aws_internet_gateway.igw

}

We export these outputs so that other modules can take them as input variables.
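Module outputs are read from the calling configuration as module.<name>.<output>. As a small sketch, the root configuration could re-export a few of them so that they are printed after terraform apply. The file main/outputs.tf and the output names below are my own additions, not part of the original layout:

```hcl
# main/outputs.tf (hypothetical file): surface values from the vpc module
output "vpc_id" {
  description = "ID of the VPC created by the vpc module"
  value       = module.vpc.vpc_id
}

output "public_subnet_ids" {
  description = "IDs of the two public subnets"
  value = [
    module.vpc.public_subnet_az1_id,
    module.vpc.public_subnet_az2_id,
  ]
}
```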

In main directory:

variables.tf

variable "project_name" {}

variable "vpc_cidr" {}

variable "public_subnet_az1_cidr" {}

variable "public_subnet_az2_cidr" {}

variable "private_data_subnet_az1_cidr" {}

variable "private_data_subnet_az2_cidr" {}

terraform.tfvars

project_name = "2tier-Architecture"

vpc_cidr = "10.0.0.0/16"

public_subnet_az1_cidr = "10.0.0.0/24"

public_subnet_az2_cidr = "10.0.1.0/24"

private_data_subnet_az1_cidr = "10.0.2.0/24"

private_data_subnet_az2_cidr = "10.0.3.0/24"

main.tf

#create vpc (referencing the module)

module "vpc" {

source = "../modules/vpc"

region = var.region

project_name = var.project_name

vpc_cidr = var.vpc_cidr

public_subnet_az1_cidr = var.public_subnet_az1_cidr

public_subnet_az2_cidr = var.public_subnet_az2_cidr

private_data_subnet_az1_cidr = var.private_data_subnet_az1_cidr

private_data_subnet_az2_cidr = var.private_data_subnet_az2_cidr

}

STEP 4: Security Groups

Create a directory named sg inside the modules directory and files named main.tf, variables.tf and outputs.tf inside the sg directory.

Since we have a public and a private subnet in each availability zone, we will create two security groups: one for the internet-facing application load balancer and public instances, and the other for the private subnet instances.

main.tf

# create security group for the application load balancer

resource "aws_security_group" "public_instance_security_group" {

name = "public instance security group"

description = "enable http/https access on port 80/443"

vpc_id = var.vpc_id

ingress {

description = "ssh access"

from_port = 22

to_port = 22

protocol = "tcp"

cidr_blocks = [var.my_ip]

}

ingress {

description = "http access"

from_port = 80

to_port = 80

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

}

ingress {

description = "https access"

from_port = 443

to_port = 443

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

}

egress {

from_port = 0

to_port = 0

protocol = -1

cidr_blocks = ["0.0.0.0/0"]

}

tags = {

Name = "public instance security groups"

}

}

# create security group for the private subnet instance

resource "aws_security_group" "private_instance_security_group" {

name = "private instance security group"

description = "enable http/https access on port 80/443 via public instance sg"

vpc_id = var.vpc_id

ingress {

description = "ssh access"

from_port = 22

to_port = 22

protocol = "tcp"

security_groups = [aws_security_group.public_instance_security_group.id]

}

ingress {

description = "http access"

from_port = 80

to_port = 80

protocol = "tcp"

security_groups = [aws_security_group.public_instance_security_group.id]

}

ingress {

description = "https access"

from_port = 443

to_port = 443

protocol = "tcp"

security_groups = [aws_security_group.public_instance_security_group.id]

}

egress {

from_port = 0

to_port = 0

protocol = -1

cidr_blocks = ["0.0.0.0/0"]

}

tags = {

Name = "Private instance security groups"

}

}

If you have created the security groups in AWS Management Console, this configuration will be easy to understand for you.
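The http and https ingress blocks above are identical apart from the port number. A dynamic block can generate them from a list. This is a sketch of that technique only; the resource name web_example and the local public_web_ports are hypothetical and are not referenced anywhere else in this tutorial:

```hcl
locals {
  # ports opened to the internet (hypothetical local)
  public_web_ports = [80, 443]
}

resource "aws_security_group" "web_example" {
  name        = "web example security group"
  description = "http/https access generated from a list of ports"
  vpc_id      = var.vpc_id

  # one ingress rule is generated per element of the list
  dynamic "ingress" {
    for_each = local.public_web_ports
    content {
      description = "web access on port ${ingress.value}"
      from_port   = ingress.value
      to_port     = ingress.value
      protocol    = "tcp"
      cidr_blocks = ["0.0.0.0/0"]
    }
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1" # all protocols
    cidr_blocks = ["0.0.0.0/0"]
  }
}
```

Adding a port then means adding one number to the list instead of copying a whole block.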

variables.tf

variable "vpc_id" {}

variable "my_ip" {}

outputs.tf

output "public_instance_security_group_id" {

value = aws_security_group.public_instance_security_group.id

}

output "private_instance_security_group_id" {

value = aws_security_group.private_instance_security_group.id

}

Inside the main directory:

variables.tf

variable "my_ip" {}

terraform.tfvars

my_ip = "27.34.23.46/32"

#include your ip so you can ssh from yours only.

main.tf

#create security group

module "sg" {

source = "../modules/sg"

vpc_id = module.vpc.vpc_id

my_ip = var.my_ip

}

So far, we have created the VPC and security groups. If you want to check that the configuration works, run the following commands from the main directory:

$ terraform init (initializes the working directory: providers, backend, and modules)

$ terraform fmt (rewrites the configuration files in the canonical format)

$ terraform validate (checks that the configuration is syntactically valid and internally consistent)

$ terraform plan (shows the changes Terraform would make, without applying them)

$ terraform apply (applies the changes and sets up the VPC and security groups in AWS)

$ terraform destroy (don't forget to destroy the resources after checking)

STEP 5: NAT Gateway

Create a nat-gateway directory inside the modules directory, with main.tf and variables.tf inside it. We will create a NAT gateway in each availability zone's public subnet and associate each private subnet with a private route table that routes through the NAT gateway of its zone.

main.tf

# allocate elastic ip. this eip will be used for the nat-gateway in the public subnet az1

resource "aws_eip" "eip_for_nat_gateway_az1" {

domain = "vpc" # AWS provider v5 and later; older provider versions use vpc = true

tags = {

Name = "nat gateway az1 eip"

}

}

# allocate elastic ip. this eip will be used for the nat-gateway in the public subnet az2

resource "aws_eip" "eip_for_nat_gateway_az2" {

domain = "vpc" # AWS provider v5 and later; older provider versions use vpc = true

tags = {

Name = "nat gateway az2 eip"

}

}

# create nat gateway in public subnet az1

resource "aws_nat_gateway" "nat_gateway_az1" {

allocation_id = aws_eip.eip_for_nat_gateway_az1.id

subnet_id = var.public_subnet_az1_id

tags = {

Name ="nat gateway az1"

}

# to ensure proper ordering, it is recommended to add an explicit dependency

depends_on = [var.internet_gateway]

}

# create nat gateway in public subnet az2

resource "aws_nat_gateway" "nat_gateway_az2" {

allocation_id = aws_eip.eip_for_nat_gateway_az2.id

subnet_id = var.public_subnet_az2_id

tags = {

Name = "nat gateway az2"

}

# to ensure proper ordering, it is recommended to add an explicit dependency

depends_on = [var.internet_gateway]

}

# create private route table az1 and add route through nat gateway az1

resource "aws_route_table" "private_route_table_az1" {

vpc_id = var.vpc_id

route {

cidr_block = "0.0.0.0/0"

nat_gateway_id = aws_nat_gateway.nat_gateway_az1.id

}

tags = {

Name = "private route table for az1"

}

}

# associate private data subnet az1 with private route table az1

resource "aws_route_table_association" "private_data_subnet_az1_route_table_az1_association" {

subnet_id = var.private_data_subnet_az1_id

route_table_id = aws_route_table.private_route_table_az1.id

}

# create private route table az2 and add route through nat gateway az2

resource "aws_route_table" "private_route_table_az2" {

vpc_id = var.vpc_id

route {

cidr_block = "0.0.0.0/0"

nat_gateway_id = aws_nat_gateway.nat_gateway_az2.id

}

tags = {

Name = "private route table for az2"

}

}

# associate private data subnet az2 with private route table az2

resource "aws_route_table_association" "private_data_subnet_az2_route_table_az2_association" {

subnet_id = var.private_data_subnet_az2_id

route_table_id = aws_route_table.private_route_table_az2.id

}

The comments explain what each block does. Here, we have allocated an Elastic IP for each of the two NAT gateways, one per availability zone. We have also created a private route table in each availability zone and associated the private subnet of that zone with it.
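This module is created with only main.tf and variables.tf. If you later need the public IPs of the NAT gateways (for example, to allow-list them on an external service), an outputs.tf could be added. A small sketch, where the file and the output name are my own choice:

```hcl
# modules/nat-gateway/outputs.tf (hypothetical file)
output "nat_gateway_public_ips" {
  description = "Public IPs of the NAT gateways in az1 and az2"
  value = [
    aws_nat_gateway.nat_gateway_az1.public_ip,
    aws_nat_gateway.nat_gateway_az2.public_ip,
  ]
}
```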

variables.tf

variable "public_subnet_az1_id" {}

variable "public_subnet_az2_id" {}

variable "internet_gateway" {}

variable "vpc_id" {}

variable "private_data_subnet_az1_id" {}

variable "private_data_subnet_az2_id" {}

Inside the main directory:

module "nat_gateway" {

source = "../modules/nat-gateway"

public_subnet_az1_id = module.vpc.public_subnet_az1_id

internet_gateway = module.vpc.internet_gateway

public_subnet_az2_id = module.vpc.public_subnet_az2_id

vpc_id = module.vpc.vpc_id

private_data_subnet_az1_id = module.vpc.private_data_subnet_az1_id

private_data_subnet_az2_id = module.vpc.private_data_subnet_az2_id

}

STEP 6: Launch Template

Inside the modules directory, create a subdirectory named auto-sg. Here we will define our launch templates, configure the auto-scaling group based on a launch template, and attach the group to the load balancer's target group.

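Create the following files inside the auto-sg directory: main.tf, variables.tf, outputs.tf, datasource.tf, data.sh, ec2-init.sh, and the id_rsa.pub public key.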

#This is the data.sh file containing user data for the private instances.

#!/bin/bash

apt-get update -y

apt-get install -y apache2

systemctl start apache2

systemctl enable apache2

echo "This is the test file for standalone database" > /var/www/html/index.html

#This is the ec2-init.sh file containing user data for public instances

#!/bin/bash

apt-get update -y

apt-get install -y apache2

systemctl start apache2

systemctl enable apache2

echo "This is the test file for web" > /var/www/html/index.html

For the EC2 instances, we also need a key pair to connect through SSH. Generate the id_rsa and id_rsa.pub keys by running ssh-keygen, and copy id_rsa.pub into this directory (I am leaving this step to you).
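If you would rather not manage key files by hand, the key pair can also be generated by Terraform itself. This is a sketch of an alternative, not what this tutorial uses: it assumes the hashicorp/tls provider is available, and the resource names and key name are hypothetical. Be aware that the generated private key is then stored in the Terraform state, so this suits test setups more than production.

```hcl
# Alternative sketch: generate the SSH key pair with the hashicorp/tls provider
resource "tls_private_key" "example" {
  algorithm = "RSA"
  rsa_bits  = 4096
}

resource "aws_key_pair" "generated" {
  key_name   = "key-tf-generated" # assumed name
  public_key = tls_private_key.example.public_key_openssh
}
```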

main.tf

resource "aws_key_pair" "key-tf" {

key_name = "key-tf"

public_key = file("${path.module}/id_rsa.pub")

}

#create launch template for ec2 instance in public subnet

resource "aws_launch_template" "public_launch_template" {

name = "${var.project_name}-publiclaunch-template"

image_id = data.aws_ami.ami.id

instance_type = var.instance_type

key_name = aws_key_pair.key-tf.key_name

user_data = filebase64("${path.module}/ec2-init.sh")

block_device_mappings {

device_name = "/dev/sdf"

ebs {

volume_size = 20

delete_on_termination= true

}

}

monitoring {

enabled =true

}

placement {

# no availability_zone here: the auto-scaling group picks the zone from its subnets

tenancy = "default"

}

network_interfaces {

associate_public_ip_address = true

security_groups = [var.public_instance_security_group_id]

}

tag_specifications {

resource_type = "instance"

tags = {

Name = "public launch template"

}

}

}

#create launch template for ec2 instance in private subnets

resource "aws_launch_template" "private_launch_template" {

name = "${var.project_name}-privatelaunch-template"

image_id = data.aws_ami.ami.id

instance_type = var.instance_type

key_name = aws_key_pair.key-tf.key_name

user_data = filebase64("${path.module}/data.sh")

block_device_mappings {

device_name = "/dev/sdf"

ebs {

volume_size = 20

delete_on_termination= false

}

}

monitoring {

enabled =true

}

placement {

# no availability_zone here: the zone is determined by the subnet the instance is launched in

tenancy = "default"

}

network_interfaces {

associate_public_ip_address = false

security_groups = [var.private_instance_security_group_id]

}

tag_specifications {

resource_type = "instance"

tags = {

Name = "private launch template"

}

}

}

Now, create variables.tf and outputs.tf files too.
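The two user data scripts differ only in the message they write to index.html. One way to avoid the duplication is a single template rendered with templatefile(). This is a sketch under assumptions: ec2-init.sh.tpl is a hypothetical template file in the module directory in which the echo line uses a page_message placeholder, and the local name is my own.

```hcl
# Sketch: render one script template with a different message per launch template
locals {
  public_user_data = base64encode(templatefile("${path.module}/ec2-init.sh.tpl", {
    page_message = "This is the test file for web" # value substituted into the template
  }))
}
```

A launch template would then set user_data = local.public_user_data instead of reading a fixed file.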

STEP 7: Auto Scaling Groups

But first, we will complete main.tf with auto-scaling groups.

main.tf

#after configuring launch template in same main.tf file

#create autoscaling group

resource "aws_autoscaling_group" "public_autoscaling_group" {

name = "${var.project_name}-autoscaling-group"

desired_capacity = var.desired_capacity

min_size = var.min_size

max_size = var.max_size

health_check_grace_period = 300

health_check_type = "ELB"

vpc_zone_identifier = [var.public_subnet_az1_id, var.public_subnet_az2_id]

target_group_arns = [var.public_loadbalancer_target_group_arn]

enabled_metrics = [

"GroupMinSize",

"GroupMaxSize",

"GroupDesiredCapacity",

"GroupInServiceInstances",

"GroupTotalInstances"

]

metrics_granularity = "1Minute"

launch_template {

id = aws_launch_template.public_launch_template.id

version = aws_launch_template.public_launch_template.latest_version

}

depends_on = [aws_launch_template.public_launch_template]

tag {

key = "Name"

value = "web"

propagate_at_launch = true

}

}

# scale up policy

resource "aws_autoscaling_policy" "public_scale_up" {

name = "${var.project_name}-asg-scale-up"

autoscaling_group_name = aws_autoscaling_group.public_autoscaling_group.name

adjustment_type = "ChangeInCapacity"

scaling_adjustment = "1" #increasing instance by 1

cooldown = "300"

policy_type = "SimpleScaling"

}

# scale up alarm

# alarm will trigger the ASG policy (scale/down) based on the metric (CPUUtilization), comparison_operator, threshold

resource "aws_cloudwatch_metric_alarm" "public_scale_up_alarm" {

alarm_name = "${var.project_name}-asg-scale-up-alarm"

alarm_description = "asg-scale-up-cpu-alarm"

comparison_operator = "GreaterThanOrEqualToThreshold"

evaluation_periods = "2"

metric_name = "CPUUtilization"

namespace = "AWS/EC2"

period = "120"

statistic = "Average"

threshold = "70"

dimensions = {

"AutoScalingGroupName" = aws_autoscaling_group.public_autoscaling_group.name

}

actions_enabled = true

alarm_actions = [aws_autoscaling_policy.public_scale_up.arn]

}

# scale down policy

resource "aws_autoscaling_policy" "public_scale_down" {

name = "${var.project_name}-asg-scale-down"

autoscaling_group_name = aws_autoscaling_group.public_autoscaling_group.name

adjustment_type = "ChangeInCapacity"

scaling_adjustment = "-1" # decreasing instance by 1

cooldown = "300"

policy_type = "SimpleScaling"

}

# scale down alarm

resource "aws_cloudwatch_metric_alarm" "public_scale_down_alarm" {

alarm_name = "${var.project_name}-asg-scale-down-alarm"

alarm_description = "asg-scale-down-cpu-alarm"

comparison_operator = "LessThanOrEqualToThreshold"

evaluation_periods = "2"

metric_name = "CPUUtilization"

namespace = "AWS/EC2"

period = "120"

statistic = "Average"

threshold = "10" # Instance will scale down when CPU utilization is at or below 10 %

dimensions = {

"AutoScalingGroupName" = aws_autoscaling_group.public_autoscaling_group.name

}

actions_enabled = true

alarm_actions = [aws_autoscaling_policy.public_scale_down.arn]

}

Here, we have attached scaling policies that scale the auto-scaling group up and down based on CPU utilization thresholds. The CloudWatch alarms trigger the scale-up and scale-down policies for the public auto-scaling group shown here. The database runs standalone on an EC2 instance in a private subnet instead of on a managed AWS database service.
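The simple scaling setup needs two policies and two alarms. A target tracking policy is a more compact alternative in which AWS creates and manages the alarms for you. This is only a sketch of that alternative, not the approach used in this tutorial; the policy name and the 50 % target are assumptions.

```hcl
# Alternative sketch: keep the group's average CPU near a target value
resource "aws_autoscaling_policy" "public_cpu_target_tracking" {
  name                   = "${var.project_name}-asg-cpu-target"
  autoscaling_group_name = aws_autoscaling_group.public_autoscaling_group.name
  policy_type            = "TargetTrackingScaling"

  target_tracking_configuration {
    predefined_metric_specification {
      predefined_metric_type = "ASGAverageCPUUtilization"
    }
    target_value = 50.0 # assumed target; tune it for your workload
  }
}
```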

variables.tf

variable "project_name" {}

variable "image_name" {}

variable "instance_type" {}

variable "public_subnet_az1_id" {}

variable "public_subnet_az2_id" {}

variable "desired_capacity"{}

variable "min_size"{}

variable "max_size"{}

variable "vpc_id" {}

variable "public_loadbalancer_target_group_arn"{}

variable "public_instance_security_group_id"{}

variable "public_loadbalancer_arn"{}

variable "aws_lb_listener_alb_public_https_listener_arn" {}

# referenced by the private launch template in main.tf

variable "private_instance_security_group_id" {}

outputs.tf

output "public_launch_arn"{

value = aws_launch_template.public_launch_template.arn

}

output "public_launch_id"{

value = aws_launch_template.public_launch_template.id

}

Inside the main directory:

main.tf

#create autoscaling groups

module "auto-sg" {

source = "../modules/auto-sg"

project_name = module.vpc.project_name

private_instance_security_group_id = module.sg.private_instance_security_group_id

image_name = var.image_name

instance_type = var.instance_type

public_subnet_az1_id = module.vpc.public_subnet_az1_id

public_subnet_az2_id = module.vpc.public_subnet_az2_id

desired_capacity = var.desired_capacity

min_size = var.min_size

max_size = var.max_size

vpc_id = module.vpc.vpc_id

public_loadbalancer_target_group_arn = module.lb.public_loadbalancer_target_group_arn

public_instance_security_group_id = module.sg.public_instance_security_group_id

public_loadbalancer_arn = module.lb.public_loadbalancer_arn

aws_lb_listener_alb_public_https_listener_arn= module.lb.aws_lb_listener_alb_public_https_listener_arn

}

variables.tf

variable "image_name" {}

variable "instance_type" {}

variable "desired_capacity" {}

variable "min_size" {}

variable "max_size" {}

terraform.tfvars

image_name = "ubuntu/images/hvm-ssd/ubuntu"

instance_type = "t2.micro"

desired_capacity = "1"

min_size = "1"

max_size = "3"

You might have noticed the datasource.tf file in the folder structure. So, what is it for? It is a data source that searches the available AWS AMIs and returns the image matching the filters we define.

Inside modules/auto-sg -> create datasource.tf

data "aws_ami" "ami" {

most_recent = true

owners = ["amazon"]

filter {

name = "name"

values = ["${var.image_name}-*"]

}

filter {

name = "virtualization-type"

values = ["hvm"]

}

filter {

name = "root-device-type"

values = ["ebs"]

}

}
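The name filter above matches every Ubuntu release, so most_recent can pick a different release over time. If you want to pin one release, a narrower filter is a common pattern. A sketch, assuming Ubuntu 22.04 (jammy) on amd64 and filtering by Canonical's account ID; the data source name is hypothetical:

```hcl
# Sketch: pin the lookup to Ubuntu 22.04 images published by Canonical
data "aws_ami" "ubuntu_jammy" {
  most_recent = true
  owners      = ["099720109477"] # Canonical's AWS account ID

  filter {
    name   = "name"
    values = ["ubuntu/images/hvm-ssd/ubuntu-jammy-22.04-amd64-server-*"]
  }

  filter {
    name   = "virtualization-type"
    values = ["hvm"]
  }
}
```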

STEP 8: Application Load Balancer

Inside the modules directory, create a directory named lb and three files inside it named main.tf, variables.tf and outputs.tf.

Till now you might have gained some idea about working with modules in Terraform.

main.tf

#create loadbalancer for webtier

resource "aws_lb" "public_alb" {

name = "${var.project_name}-publicalb"

internal = false

load_balancer_type = "application"

security_groups = [var.public_instance_security_group_id]

subnets = [var.public_subnet_az1_id, var.public_subnet_az2_id]

tags = {

name = "${var.project_name}-publicalb"

}

}

#create listener on port 80 with redirect to 443

resource "aws_lb_listener" "alb_public_http_listener" {

load_balancer_arn = aws_lb.public_alb.arn

port = 80

protocol = "HTTP"

default_action {

type = "redirect"

redirect {

port = 443

protocol = "HTTPS"

status_code = "HTTP_301"

}

}

}

# create a listener on port 443 with forward action

resource "aws_lb_listener" "alb_public_https_listener" {

load_balancer_arn = aws_lb.public_alb.arn

port = 443

protocol = "HTTPS"

ssl_policy = "ELBSecurityPolicy-2016-08"

certificate_arn = var.aws_acm_certificate_validation_acm_certificate_validation_arn

default_action {

type = "forward"

target_group_arn = aws_lb_target_group.alb_public_target_group.arn

}

}

#create target group for load balancer

resource "aws_lb_target_group" "alb_public_target_group" {

name = "${var.project_name}-pub-tg"

port = 80

protocol = "HTTP"

target_type = "instance" #"ip" for ALB/NLB, "instance" for autoscaling group,

vpc_id = var.vpc_id

tags = {

name = "${var.project_name}-tg"

}

depends_on = [aws_lb.public_alb]

lifecycle {

create_before_destroy = true

}

health_check {

enabled = true

interval = 300

path = "/" # the user data scripts only create index.html, so check the site root

timeout = 60

matcher = 200

healthy_threshold = 5

unhealthy_threshold = 5

}

}

We have created the application load balancer across both public subnets. The load balancer listens on port 443 and redirects any request that arrives on port 80 to 443. We have then created the target group with target type instance, which is what our auto-scaling group registers with.
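The HTTPS listener hard-codes an older TLS policy. Making the policy a variable keeps the module reusable and lets you move to a newer policy without editing the module. A sketch, where the variable name ssl_policy is my own and the default is one of the predefined ELB security policies that supports TLS 1.3:

```hcl
# modules/lb/variables.tf (sketch): make the TLS policy configurable
variable "ssl_policy" {
  type        = string
  description = "Predefined ELB security policy for the HTTPS listener"
  default     = "ELBSecurityPolicy-TLS13-1-2-2021-06"
}
```

The listener would then use ssl_policy = var.ssl_policy in place of the literal string.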

variables.tf

variable "project_name" {}

variable "vpc_id" {}

variable "public_subnet_az1_id"{}

variable "public_subnet_az2_id"{}

variable "public_instance_security_group_id"{}

variable "public_loadbalancer_target_group_arn"{}

variable "aws_acm_certificate_validation_acm_certificate_validation_arn"{}

outputs.tf

output "public_loadbalancer_id" {

value= aws_lb.public_alb.id

}

output "aws_lb_public_alb_dns_name" {

value = aws_lb.public_alb.dns_name

}

output "aws_lb_public_alb_zone_id"{

value = aws_lb.public_alb.zone_id

}

output "public_loadbalancer_arn" {

value= aws_lb.public_alb.arn

}

output "public_loadbalancer_target_group_id" {

value= aws_lb_target_group.alb_public_target_group.id

}

output "public_loadbalancer_target_group_arn" {

value= aws_lb_target_group.alb_public_target_group.arn

}

output "aws_lb_listener_alb_public_https_listener_arn" {

value = aws_lb_listener.alb_public_https_listener.arn

}

Inside the main directory:

main.tf

#create application load balancer

module "lb" {

source = "../modules/lb"

project_name = module.vpc.project_name

public_instance_security_group_id = module.sg.public_instance_security_group_id

public_subnet_az1_id = module.vpc.public_subnet_az1_id

public_subnet_az2_id = module.vpc.public_subnet_az2_id

vpc_id = module.vpc.vpc_id

public_loadbalancer_target_group_arn = module.lb.public_loadbalancer_target_group_arn

aws_acm_certificate_validation_acm_certificate_validation_arn = module.acm.aws_acm_certificate_validation_acm_certificate_validation_arn

}

STEP 9: AWS Certificate Manager

Now, we are almost ready to launch the EC2 instances and test them. But we want to go further: apply an SSL/TLS certificate and route traffic through our custom domain name to the load balancer and then to the instances.

Create a directory named acm inside modules. I guess by now you know which files to create here :) (leaving it for you)

main.tf

# request public certificates from the amazon certificate manager.

resource "aws_acm_certificate" "acm_certificate" {

domain_name = var.domain_name

subject_alternative_names = [ var.alternative_name]

validation_method = "DNS"

lifecycle {

create_before_destroy = true

}

}

# get details about a route 53 hosted zone

data "aws_route53_zone" "route53_zone" {

name = var.domain_name

private_zone = false

}

# create a record set in route 53 for domain validation

resource "aws_route53_record" "route53_record" {

for_each = {

for dvo in aws_acm_certificate.acm_certificate.domain_validation_options : dvo.domain_name => {

name = dvo.resource_record_name

record = dvo.resource_record_value

type = dvo.resource_record_type

zone_id = data.aws_route53_zone.route53_zone.zone_id

}

}

allow_overwrite = true

name = each.value.name

records = [each.value.record]

ttl = 60

type = each.value.type

zone_id = each.value.zone_id

}

# validate acm certificates

resource "aws_acm_certificate_validation" "acm_certificate_validation" {

certificate_arn = aws_acm_certificate.acm_certificate.arn

validation_record_fqdns = [for record in aws_route53_record.route53_record : record.fqdn]

}

We request a public certificate from Certificate Manager and create record sets in Route 53 to validate it.

variables.tf

variable "domain_name" {}

variable "alternative_name" {}

outputs.tf

output "domain_name" {

value = var.domain_name

}

output "certificate_arn"{

value = aws_acm_certificate.acm_certificate.arn

}

output "aws_acm_certificate_validation_acm_certificate_validation_arn" {

value = aws_acm_certificate_validation.acm_certificate_validation.certificate_arn

}

Now inside the main directory:

main.tf

#create ssl certificate

module "acm" {

source = "../modules/acm"

domain_name = var.domain_name

alternative_name = var.alternative_name

}

terraform.tfvars

domain_name = "mupreti.com.np"

alternative_name = "*.mupreti.com.np"

In this case, I have used my domain name. You should use your domain hosted in Route 53.

variables.tf

variable "domain_name" {}

variable "alternative_name" {}

STEP 10: Route 53

Now we will route traffic through our custom domain. So, create a folder named route53 inside the modules directory.

main.tf

data "aws_route53_zone" "zone" {

name = "mupreti.com.np"

private_zone = false

}

resource "aws_route53_record" "example" {

zone_id = data.aws_route53_zone.zone.zone_id

name = "mupreti.com.np"

type = "A"

alias {

name = var.aws_lb_public_alb_dns_name

zone_id = var.aws_lb_public_alb_zone_id

evaluate_target_health = false

}

}

variables.tf

variable "aws_lb_public_alb_dns_name" {}

variable "aws_lb_public_alb_zone_id" {}

Inside the main directory:

main.tf

module "route-53" {

source = "../modules/route53"

aws_lb_public_alb_dns_name = module.lb.aws_lb_public_alb_dns_name

aws_lb_public_alb_zone_id = module.lb.aws_lb_public_alb_zone_id

}
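The route53 module above hard-codes the domain name in two places. To reuse it for another domain, the name could be passed in as a variable, in the same way the acm module receives it. A sketch, assuming a domain_name variable is added to this module (it is not in the original) and passed from the main directory as domain_name = var.domain_name; the resource names are hypothetical:

```hcl
# modules/route53 (sketch): take the domain as an input instead of hard-coding it
variable "domain_name" {
  type        = string
  description = "Apex domain of the public hosted zone"
}

data "aws_route53_zone" "selected" {
  name         = var.domain_name
  private_zone = false
}

resource "aws_route53_record" "apex" {
  zone_id = data.aws_route53_zone.selected.zone_id
  name    = var.domain_name
  type    = "A"

  # alias record pointing at the application load balancer
  alias {
    name                   = var.aws_lb_public_alb_dns_name
    zone_id                = var.aws_lb_public_alb_zone_id
    evaluate_target_health = false
  }
}
```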

Congratulations, you have configured it successfully. But wait, what will the final output be, and wouldn't you like to confirm it?

Before that, there is the .tfstate file, which stores the state of our Terraform-managed infrastructure. What if this file is suddenly lost or someone changes it locally? To avoid that, we will store it in an S3 bucket in our AWS account. Create a backend.tf file inside the main directory.

backend.tf

terraform {

backend "s3" {

bucket = "terraform-2tier-architecture"

key = "2tier-architecture.tfstate"

region = "us-east-1" # backend blocks cannot reference variables, so the region is written literally

profile = "default"

}

}
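Backend blocks cannot reference input variables such as var.region, and the S3 bucket must already exist before terraform init runs. If you prefer not to hard-code the region, a partial backend configuration is one option. A sketch, reusing the bucket and key from above and adding encryption of the state object (the encrypt setting is my addition):

```hcl
# backend.tf (sketch): partial configuration, region supplied at init time
terraform {
  backend "s3" {
    bucket  = "terraform-2tier-architecture"
    key     = "2tier-architecture.tfstate"
    profile = "default"
    encrypt = true # server-side encryption of the state object
    # region is intentionally omitted here
  }
}
```

The missing setting is then passed on the command line: terraform init -backend-config="region=us-east-1".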

Now, let's confirm our configuration. Your files inside the main directory should look like this.

main.tf

provider "aws" {

region = var.region

profile = "default"

}

#create vpc (referencing the module)

module "vpc" {

source = "../modules/vpc"

region = var.region

project_name = var.project_name

vpc_cidr = var.vpc_cidr

public_subnet_az1_cidr = var.public_subnet_az1_cidr

public_subnet_az2_cidr = var.public_subnet_az2_cidr

private_data_subnet_az1_cidr = var.private_data_subnet_az1_cidr

private_data_subnet_az2_cidr = var.private_data_subnet_az2_cidr

}

#create nat gateway

module "nat_gateway" {

source = "../modules/nat-gateway"

public_subnet_az1_id = module.vpc.public_subnet_az1_id

internet_gateway = module.vpc.internet_gateway

public_subnet_az2_id = module.vpc.public_subnet_az2_id

vpc_id = module.vpc.vpc_id

private_data_subnet_az1_id = module.vpc.private_data_subnet_az1_id

private_data_subnet_az2_id = module.vpc.private_data_subnet_az2_id

}

#create security group

module "sg" {

source = "../modules/sg"

vpc_id = module.vpc.vpc_id

my_ip = var.my_ip

}

#create ssl certificate

module "acm" {

source = "../modules/acm"

domain_name = var.domain_name

alternative_name = var.alternative_name

}

#create application load balancer

module "lb" {

source = "../modules/lb"

project_name = module.vpc.project_name

public_instance_security_group_id = module.sg.public_instance_security_group_id

public_subnet_az1_id = module.vpc.public_subnet_az1_id

public_subnet_az2_id = module.vpc.public_subnet_az2_id

vpc_id = module.vpc.vpc_id

public_loadbalancer_target_group_arn = module.lb.public_loadbalancer_target_group_arn

aws_acm_certificate_validation_acm_certificate_validation_arn = module.acm.aws_acm_certificate_validation_acm_certificate_validation_arn

}

#create autoscaling groups

module "auto-sg" {

source = "../modules/auto-sg"

project_name = module.vpc.project_name

image_name = var.image_name

instance_type = var.instance_type

public_subnet_az1_id = module.vpc.public_subnet_az1_id

public_subnet_az2_id = module.vpc.public_subnet_az2_id

desired_capacity = var.desired_capacity

min_size = var.min_size

max_size = var.max_size

vpc_id = module.vpc.vpc_id

public_loadbalancer_target_group_arn = module.lb.public_loadbalancer_target_group_arn

public_instance_security_group_id = module.sg.public_instance_security_group_id

public_loadbalancer_arn = module.lb.public_loadbalancer_arn

aws_lb_listener_alb_public_https_listener_arn = module.lb.aws_lb_listener_alb_public_https_listener_arn

}

module "route-53" {

source = "../modules/route53"

aws_lb_public_alb_dns_name = module.lb.aws_lb_public_alb_dns_name

aws_lb_public_alb_zone_id = module.lb.aws_lb_public_alb_zone_id

}
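To make the final result easier to confirm, an optional outputs.tf in the main directory could print the values you need after terraform apply. This is a sketch under assumptions: the file and the output names `alb_dns_name` and `site_url` are hypothetical, while the module output and the variable are the ones already referenced above.

```hcl
# outputs.tf (optional, hypothetical file in the main directory)

# DNS name of the public load balancer, as exposed by the lb module
output "alb_dns_name" {
  description = "DNS name of the public application load balancer"
  value       = module.lb.aws_lb_public_alb_dns_name
}

# Convenience URL built from the domain in terraform.tfvars
output "site_url" {
  description = "HTTPS URL of the site served through Route 53"
  value       = "https://${var.domain_name}"
}
```

After a successful apply, Terraform lists these values at the end of its output, and terraform output shows them again later.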

variables.tf

variable "region" {}

variable "project_name" {}

variable "vpc_cidr" {}

variable "public_subnet_az1_cidr" {}

variable "public_subnet_az2_cidr" {}

variable "private_data_subnet_az1_cidr" {}

variable "private_data_subnet_az2_cidr" {}

variable "domain_name" {}

variable "alternative_name" {}

variable "my_ip" {}

variable "image_name" {}

variable "instance_type" {}

variable "desired_capacity" {}

variable "min_size" {}

variable "max_size" {}
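The empty `{}` declarations work, but they accept any value. As a possible refinement (not part of the original repo), a declaration can carry a type, a description, and a validation rule so that mistakes in terraform.tfvars are caught at plan time. The three variables below are just examples and would replace, not sit alongside, the matching untyped declarations.

```hcl
variable "region" {
  description = "AWS region to deploy into"
  type        = string
}

variable "vpc_cidr" {
  description = "CIDR block of the VPC"
  type        = string

  # cidrhost() fails on malformed input, so can() turns that into true/false
  validation {
    condition     = can(cidrhost(var.vpc_cidr, 0))
    error_message = "The vpc_cidr value must be a valid CIDR block, for example 10.0.0.0/16."
  }
}

variable "desired_capacity" {
  description = "Number of instances the auto scaling group should start with"
  type        = number
}
```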

terraform.tfvars

region = "us-east-1"

project_name = "2tier-Architecture"

vpc_cidr = "10.0.0.0/16"

public_subnet_az1_cidr = "10.0.0.0/24"

public_subnet_az2_cidr = "10.0.1.0/24"

private_data_subnet_az1_cidr = "10.0.2.0/24"

private_data_subnet_az2_cidr = "10.0.3.0/24"

domain_name = "mupreti.com.np"

alternative_name = "*.mupreti.com.np"

my_ip = "27.34.23.46/32"

image_name = "ubuntu/images/hvm-ssd/ubuntu"

instance_type = "t2.micro"

desired_capacity = 1

min_size = 1

max_size = 3

Your folder structure should look something like this:

For now, ignore the .terraform directory and the lock file. Ok, are you ready to launch everything in a minute?

Let's go!

Conclusion

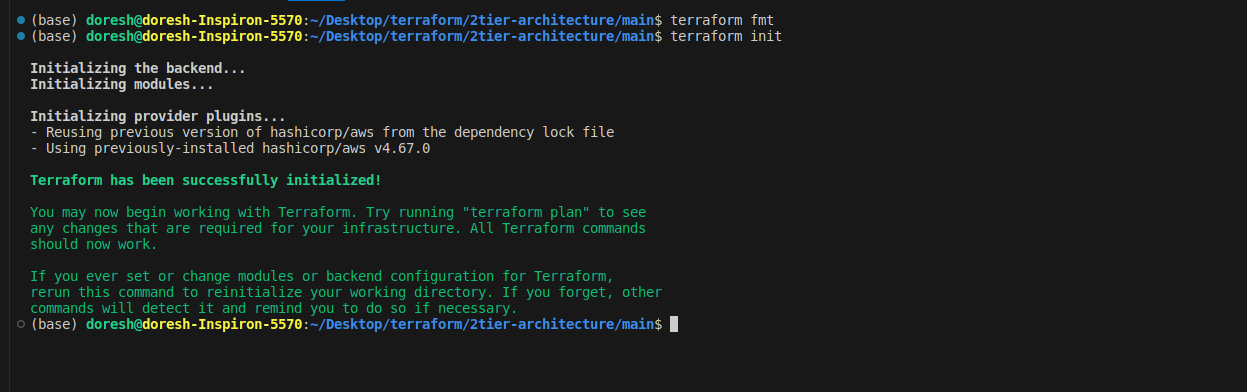

$ terraform fmt

$ terraform validate

The two commands above only format the code and validate the configuration. The actual work begins now.

$ terraform init

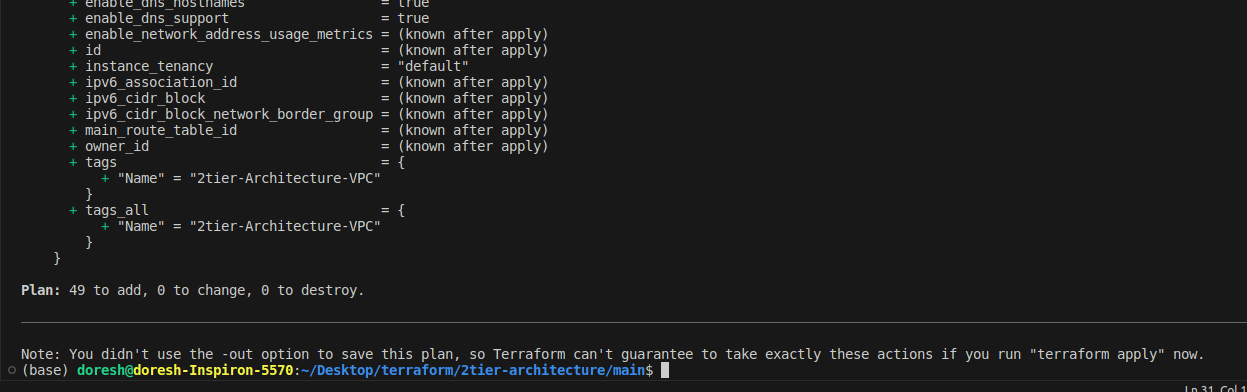

$ terraform plan

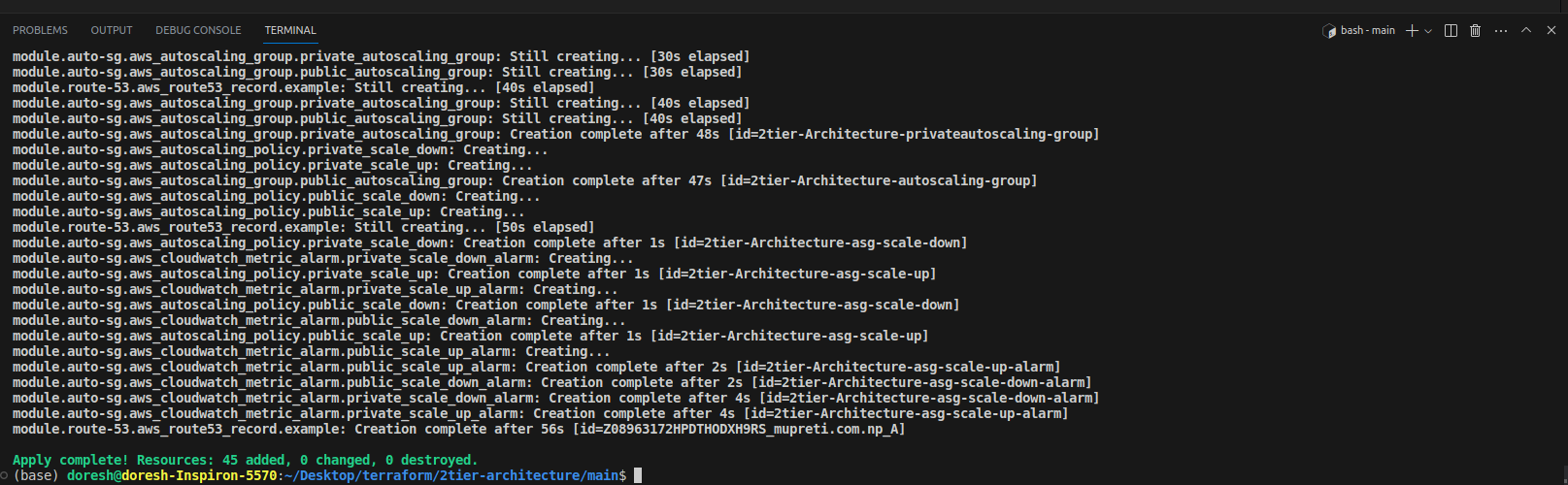

$ terraform apply

terraform init - This initializes Terraform in a directory, downloading any required providers. It should be run once when setting up a new Terraform config.

terraform plan - This generates an execution plan to show what Terraform will do when you call apply. You can use this to preview the changes Terraform will make.

terraform apply - This executes the actions proposed in the plan and actually provisions or changes your infrastructure.
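Because terraform init downloads whatever provider version matches your configuration, it can help to pin the versions so everyone on the team gets the same ones. The block below is a sketch only: the file name versions.tf and the version constraints are assumptions, so adjust them to whatever you actually tested with.

```hcl
# versions.tf (hypothetical file in the main directory)
terraform {
  # Assumed minimum Terraform CLI version
  required_version = ">= 1.0"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      # Assumed constraint: any 5.x release of the AWS provider
      version = "~> 5.0"
    }
  }
}
```

The versions selected by terraform init are recorded in the lock file, which is why that file is usually committed to Git.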

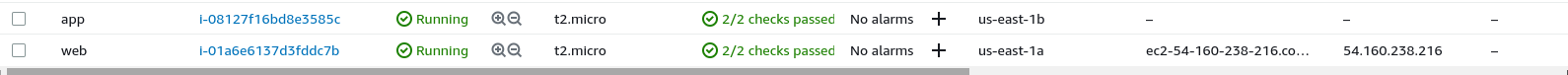

When you visit your AWS management console and look at the resources you have configured in Terraform, you will see all of them working together. You can confirm this by checking each of them individually.

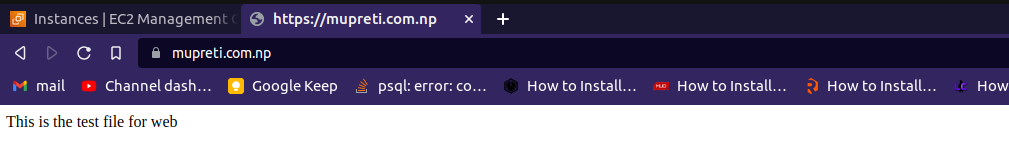

Also, open your domain name in a browser and verify that the content from your user data script is served securely over HTTPS.

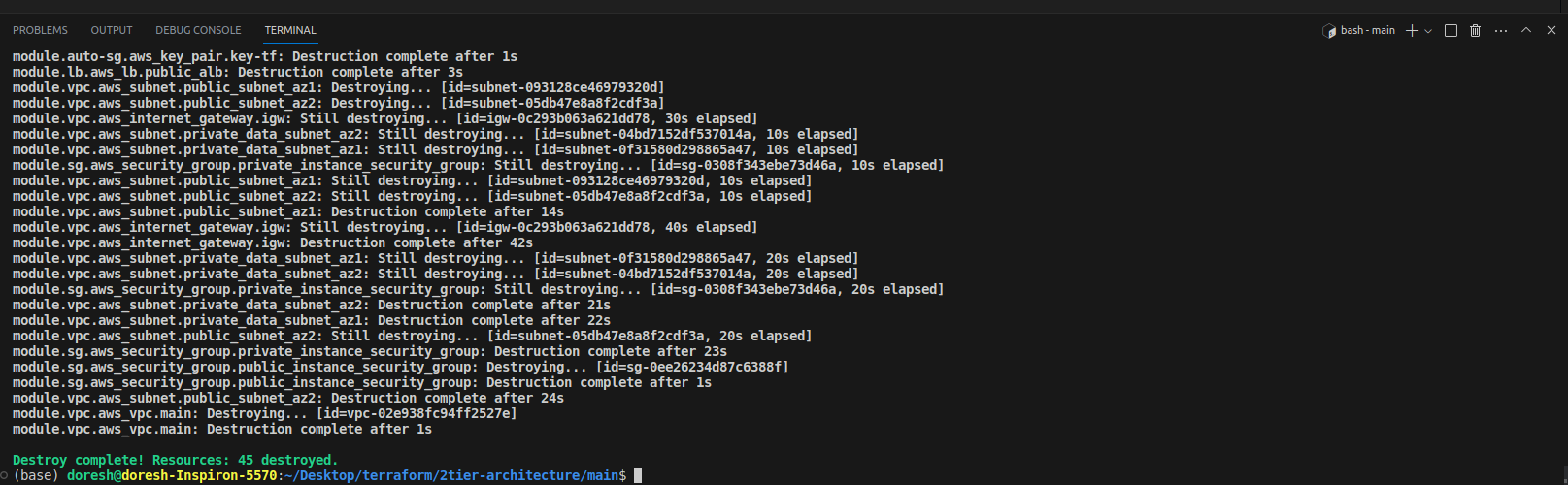

Finally, don't forget to run terraform destroy to destroy all the created resources. Otherwise, resources such as the NAT gateways, the load balancer, and the EC2 instances keep running and AWS keeps billing you for them, and I don't want that :( You might have to delete some resources manually too.

Damn! It was one hell of a task but congratulations, you made it.

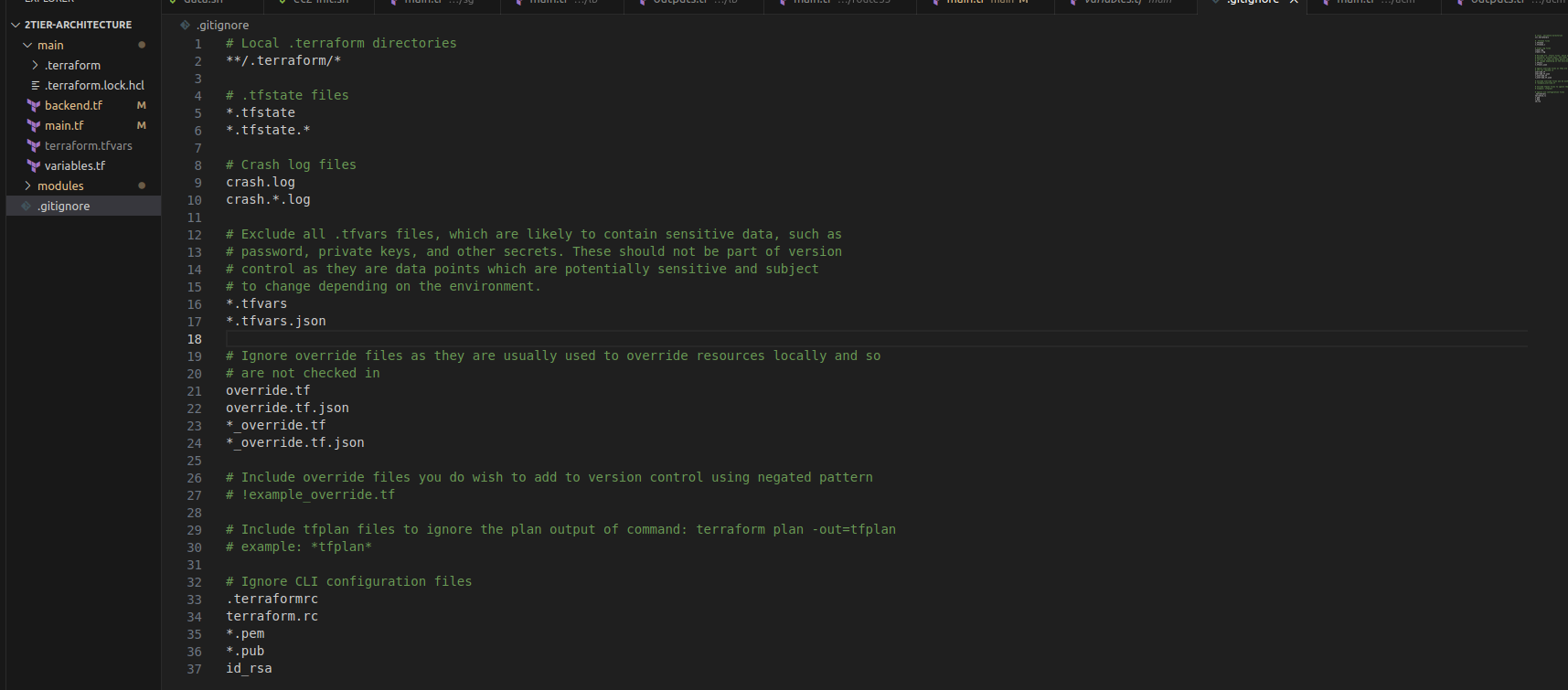

Pro tip: Since you have the public and private keys, you shouldn't push them to GitHub. So list them in the .gitignore file. Also, you can include other files like:

Now, you can initialize git and push to GitHub.

My GitHub repo: https://github.com/mahupreti/Keeping-it-DRY-with-reusable-Terraform-modules-in-the-AWS-cloud.git

"Thank you for bearing with me until the end. See you in the next one."

References

https://youtu.be/XaV9LbmX3d0 (This video helps to understand terraform easily)

https://spacelift.io/blog/terraform-security-group (You can refer to this article to learn about security groups in Terraform. It is a nicely written article)

Written by

Mahesh Upreti

I am a DevOps and Cloud enthusiast currently exploring different AWS services and DevOps tools.