Set Up a Kubernetes Cluster Using Kubeadm

Charan kumar

Kubernetes is an open-source container orchestration tool. The name Kubernetes originates from Greek, meaning helmsman or pilot. The abbreviation K8s comes from counting the eight letters between the “K” and the “s”. Google open-sourced the Kubernetes project in 2014.

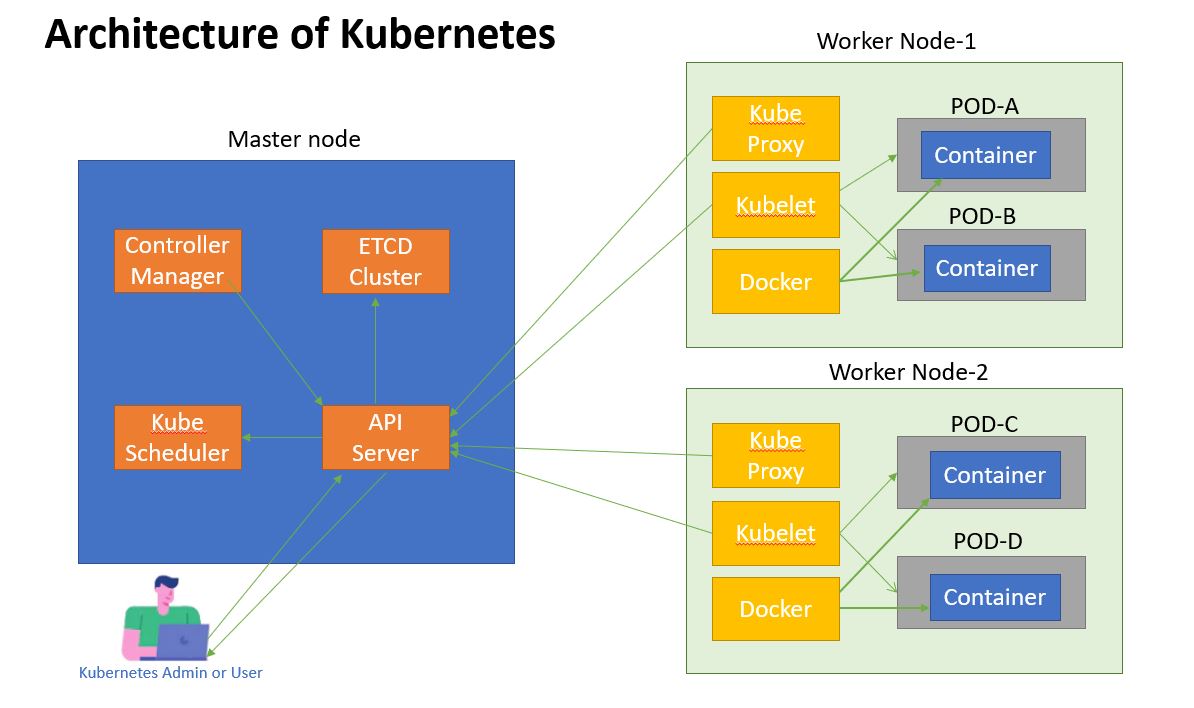

Kubernetes Architecture

The Kubernetes architecture consists of the following components:

Master Components: The master components are responsible for managing the overall Kubernetes cluster. They include:

Kubernetes API Server: The API server is the front end of the Kubernetes control plane. It exposes a RESTful interface through which all other components and clients communicate with the cluster.

Etcd: etcd is a distributed key-value store that is used to store the configuration data and the state of the Kubernetes cluster.

Kube-Controller Manager: The controller manager runs the controllers that continuously reconcile the actual state of the cluster with its desired state.

Kube-Scheduler: The scheduler is responsible for scheduling the containerized workloads to the worker nodes.

Worker Node Components: The node components are responsible for running the containerized workloads. They include:

Kubelet: The kubelet is responsible for managing the containerized workloads on a node. It communicates with the API server to receive instructions on how to manage the containers.

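Container Runtime: The container runtime is responsible for running the containers on a node. It can be containerd, CRI-O, or any other runtime that implements the Kubernetes Container Runtime Interface (CRI). Docker Engine, used in this article, was supported through the built-in dockershim until it was removed in Kubernetes 1.24.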

Kube-proxy: The kube-proxy maintains the network rules on each node that route traffic addressed to Services to the right Pods.
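On a cluster built with kubeadm, the master components typically run as static pods in the kube-system namespace, named after the component and the node, while kube-proxy runs as one pod per node. The sketch below classifies a pod name by that naming convention. The function name `component_role` and the sample pod names are illustrative assumptions, not part of any Kubernetes tool.

```shell
# Classify a kube-system pod name by the naming convention assumed here:
# <component>-<node-name> for control-plane pods, kube-proxy-<suffix> per node.
component_role() {
  case "$1" in
    kube-apiserver-*|etcd-*|kube-controller-manager-*|kube-scheduler-*)
      echo "control-plane" ;;
    kube-proxy-*)
      echo "node" ;;
    *)
      echo "other" ;;
  esac
}

# Hypothetical pod names, as `kubectl get pods -n kube-system` might list them.
for pod in kube-apiserver-k8s-master etcd-k8s-master kube-proxy-x7k2p weave-net-abcde; do
  printf '%s -> %s\n' "$pod" "$(component_role "$pod")"
done
```

The kubelet does not appear in such a listing because it runs as a system service on each node rather than as a pod.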

Kubeadm Installation

Kubernetes is an open-source container orchestration system that automates the deployment, scaling, and management of containerized applications. Kubeadm is a tool that makes it easy to set up a Kubernetes cluster.

Steps to follow:

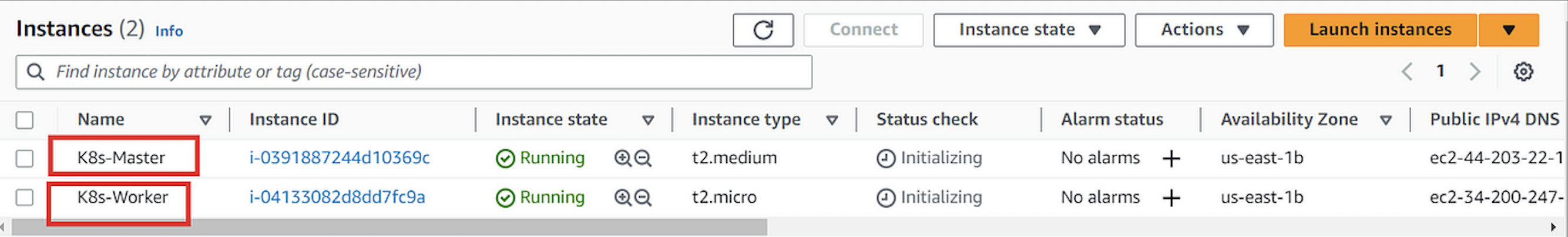

Step 1: Create two EC2 Instances running Ubuntu, K8s-Master (t2.medium) and K8s-Worker (t2.micro)

Step 2: Install Docker Engine (Master and Worker)

Step 3: Add Apt repository and Install Kubernetes components

Step 4: Configure the Master Node

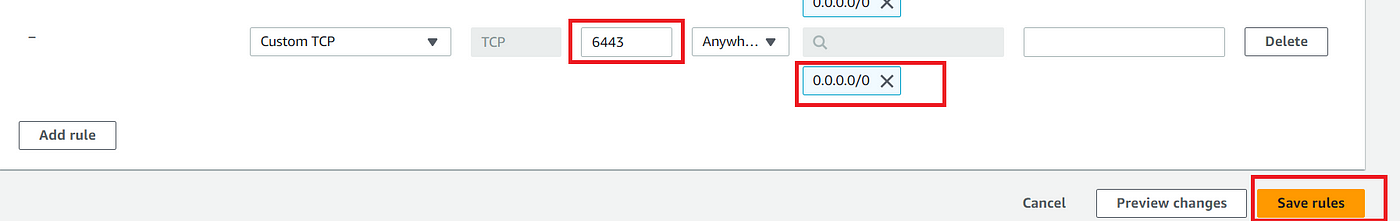

Step 5: Edit the inbound Security Rule. Enable Port 6443 on K8s-Master

Step 6: Configure the Worker Node

Step 7: Verify the cluster

Step 8: Terminate EC2 Instances

Now, let's get started and dig deeper into each of these steps.

Step 1: Create two EC2 Instances, K8s-Master (t2.medium) and K8s-Worker (t2.micro)

You need two machines running Ubuntu 22.04 LTS, one for the master node and the other for the worker node.

The master node runs the control plane, so it needs more vCPUs and memory. An instance type of t2.medium (2 vCPUs, 4 GiB of memory) is recommended.

The worker node does not require as much vCPU and memory, so an instance type of t2.micro (1 vCPU, 1 GiB of memory) is sufficient for this walkthrough.

Sudo privileges are required on both machines.
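Before going further, it can help to confirm that the master instance is large enough. The sketch below compares the CPU count and memory of the current machine against thresholds of 2 CPUs and 1700 MB. Those thresholds are assumptions based on the checks kubeadm init performs and may differ between versions, and the function name `meets_control_plane_minimum` is made up for this example.

```shell
# Return success if the given CPU count and memory (in MB) satisfy the
# control-plane minimums assumed here: 2 CPUs and 1700 MB of RAM.
meets_control_plane_minimum() {
  local cpus="$1" mem_mb="$2"
  [ "$cpus" -ge 2 ] && [ "$mem_mb" -ge 1700 ]
}

# Read the values for the current machine (Linux only; falls back to 1 CPU / 0 MB).
cpus="$(nproc 2>/dev/null || echo 1)"
mem_mb="$(awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo 2>/dev/null || echo 0)"

if meets_control_plane_minimum "$cpus" "$mem_mb"; then
  echo "OK for a master node: ${cpus} CPUs, ${mem_mb} MB RAM"
else
  echo "Below master node minimums: ${cpus} CPUs, ${mem_mb} MB RAM"
fi
```

Run on a t2.medium, this should report that the machine is suitable, while a t2.micro would fall short, which is why the smaller instance is used only for the worker.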

Step 2: Install Docker Engine (Master and Worker)

Connect to both EC2 instances and run these commands on the master and on the worker to install Docker, start it, and enable it at boot:

sudo apt update -y

sudo apt install docker.io -y

sudo systemctl start docker

sudo systemctl enable docker

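Step 3: Add Apt repository and Install Kubernetes components.

Run these commands on both instances. They register the Kubernetes apt repository and install kubeadm, kubectl, and kubelet pinned to version 1.20.0. Note that they use the legacy apt.kubernetes.io repository, which the Kubernetes project has since retired in favor of pkgs.k8s.io, so they may fail on a fresh machine today.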

sudo curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://packages.cloud.google.com/apt/doc/apt-key.gpg

echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt update -y

sudo apt install kubeadm=1.20.0-00 kubectl=1.20.0-00 kubelet=1.20.0-00 -y
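A quick way to confirm that the installation worked is to check that all three binaries are on the PATH. This is a minimal sketch; the function name `check_k8s_tools` is an assumption introduced for illustration.

```shell
# Print whether each Kubernetes tool from this step is on the PATH.
check_k8s_tools() {
  local tool
  for tool in kubeadm kubectl kubelet; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "$tool: installed"
    else
      echo "$tool: missing"
    fi
  done
}

check_k8s_tools
```

Once all three report as installed, `kubeadm version` and `kubectl version --client` show the exact versions, and `sudo apt-mark hold kubeadm kubectl kubelet` keeps apt from upgrading them unexpectedly.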

Step 4: Configure the Master Node.

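The next step is to configure the master node. Run the following commands on the master node only. kubeadm init bootstraps the control plane, the next three commands copy the admin kubeconfig so that kubectl can reach the cluster, kubectl apply installs the Weave Net pod network add-on, and kubeadm token create prints the join command for the worker node.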

sudo su

kubeadm init

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

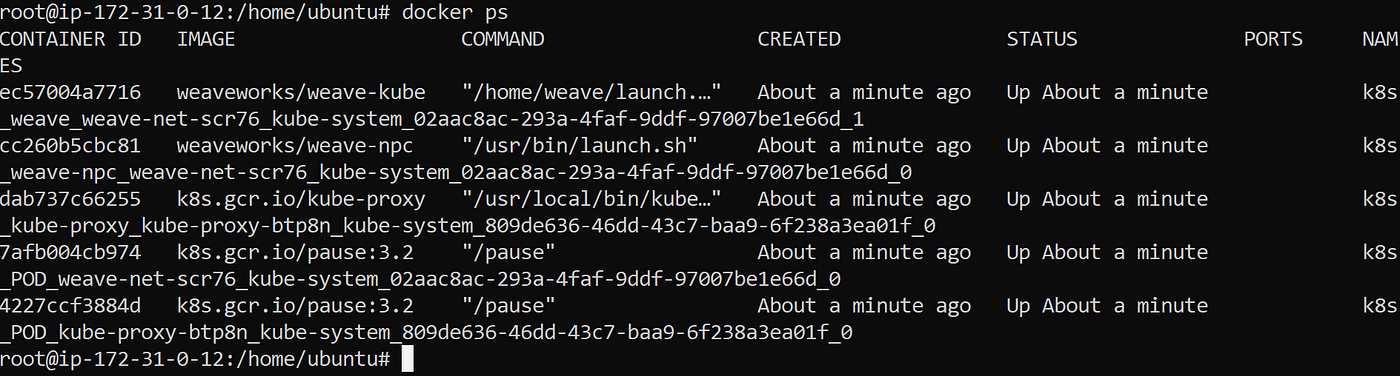

kubectl apply -f https://github.com/weaveworks/weave/releases/download/v2.8.1/weave-daemonset-k8s.yaml

kubeadm token create --print-join-command

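Step 5: Edit the inbound Security Rule. Enable Port 6443 on K8s-Master

Port 6443 is the default port of the Kubernetes API server. The worker node must be able to reach it on the master in order to join the cluster.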
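The article makes this change in the EC2 console. As an alternative, the same rule could be added with the AWS CLI, assuming it is installed and configured with sufficient permissions. The sketch below only prints the command instead of running it; the function name, the security group ID, and the CIDR range are all hypothetical placeholders.

```shell
# Build (but do not run) the AWS CLI command that opens TCP port 6443.
# Both arguments are placeholders that you must supply yourself.
print_open_api_port_cmd() {
  local group_id="$1" source_cidr="$2"
  echo "aws ec2 authorize-security-group-ingress" \
       "--group-id ${group_id}" \
       "--protocol tcp --port 6443" \
       "--cidr ${source_cidr}"
}

# Hypothetical security group ID of K8s-Master and address range of the worker.
print_open_api_port_cmd "sg-0123456789abcdef0" "172.31.0.0/16"
```

Restricting the source range to the worker's network, rather than opening the port to every address, keeps the API server from being exposed more widely than this setup needs.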

Step 6: Configure the Worker Node.

Run the commands below on the worker node to join it to the master. kubeadm reset runs its own pre-flight checks and then reverts any changes left behind by an earlier kubeadm init or kubeadm join, so the node starts from a clean state. Then paste the output of the kubeadm token create --print-join-command command from the master node and append the --v=5 flag, which turns on verbose logging. This joins the worker node to the Kubernetes cluster.

sudo su

kubeadm reset

# Paste the Join command on worker node with `--v=5`

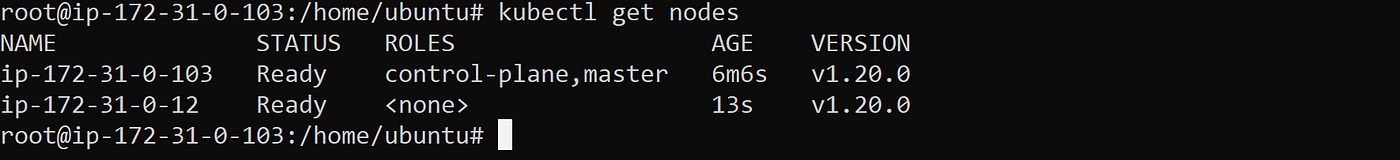
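The join command printed on the master generally has the shape shown below. This sketch appends `--v=5` to such a command unless a verbosity flag is already present. The helper name `with_verbose_join` is hypothetical, and the address, token, and hash are placeholders rather than real values.

```shell
# Append --v=5 to a join command unless a --v flag is already present.
with_verbose_join() {
  local join_cmd="$1"
  case " $join_cmd " in
    *" --v="*) echo "$join_cmd" ;;
    *)         echo "$join_cmd --v=5" ;;
  esac
}

# Placeholder join command; substitute the output printed on your master node.
join_cmd='kubeadm join <master-ip>:6443 --token <token> --discovery-token-ca-cert-hash sha256:<hash>'
with_verbose_join "$join_cmd"
```

The helper only prints the resulting command so it can be reviewed before it is run as root on the worker node.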

Step 7: Verify the cluster

kubectl get nodes

This command displays a list of all nodes in the cluster. If the setup is correct, the output includes both the master and the worker node.

To create a pod, use kubectl run with a pod name and an image. Running kubectl run nginx --image=nginx --restart=Never on the master node creates a pod running the Nginx image, and the resulting container can be seen on the worker node. A screenshot of the master node running the command is attached.

Screenshot of the worker node, where the Nginx container is orchestrated from the master:
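The node check can also be scripted. The sketch below counts the nodes whose STATUS column reads exactly Ready. The function name `count_ready_nodes` and the sample output, including the node names and ages, are hypothetical and stand in for a real `kubectl get nodes` listing.

```shell
# Count nodes whose STATUS column is exactly "Ready".
# Reads `kubectl get nodes` output on stdin and skips the header row.
count_ready_nodes() {
  awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Hypothetical output for the two-node cluster built in this article.
count_ready_nodes <<'EOF'
NAME         STATUS   ROLES                  AGE   VERSION
k8s-master   Ready    control-plane,master   10m   v1.20.0
k8s-worker   Ready    <none>                 2m    v1.20.0
EOF
```

Against a live cluster the same function can be fed directly with `kubectl get nodes | count_ready_nodes`; for this setup a result of 2 would indicate that both nodes have joined and are healthy.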

Step 8: Terminate EC2 Instances

Go to your EC2 console and terminate both EC2 instances so that they stop incurring charges.

Conclusion

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. Its architecture separates the master (control plane) components from the worker nodes, and it manages containerized workloads through objects such as Pods, ReplicaSets, Deployments, Services, ConfigMaps, and Secrets.

I hope you liked this article and are now able to set up a cluster with kubeadm. Please give it your claps and follow me for content on Cloud and DevOps. Thank you! 🙏

Written by

Charan kumar

DevOps engineer at Acro Computing India. Skilled in Git, Ansible, Jenkins, Docker, Kubernetes.