Supercharge Customer Support with Fact-Based Question Answering with ChatGPT and Elasticsearch

Siddharth Kothari


ChatGPT, powered by OpenAI's advanced GPT-3.5 and GPT-4 models, has been making waves in the tech industry. This language model, trained on a vast corpus of data, has demonstrated its utility in answering questions and providing information across a broad spectrum of topics, including technical documentation.

In this article, I explore how ChatGPT can be used for documentation and knowledge base search in user and customer support scenarios, allowing users to find answers to their questions in a conversational way. However, ChatGPT has an important limitation: its knowledge cutoff. Its training data ends in 2021, so any changes or updates to your documentation after that date won't be reflected in its responses.

This limitation means that queries about your documentation may return outdated or incorrect answers, because ChatGPT has no information past its September 2021 cutoff. I'll show you how to overcome this by passing context from a search engine to ChatGPT, so that its responses are grounded in your current content.

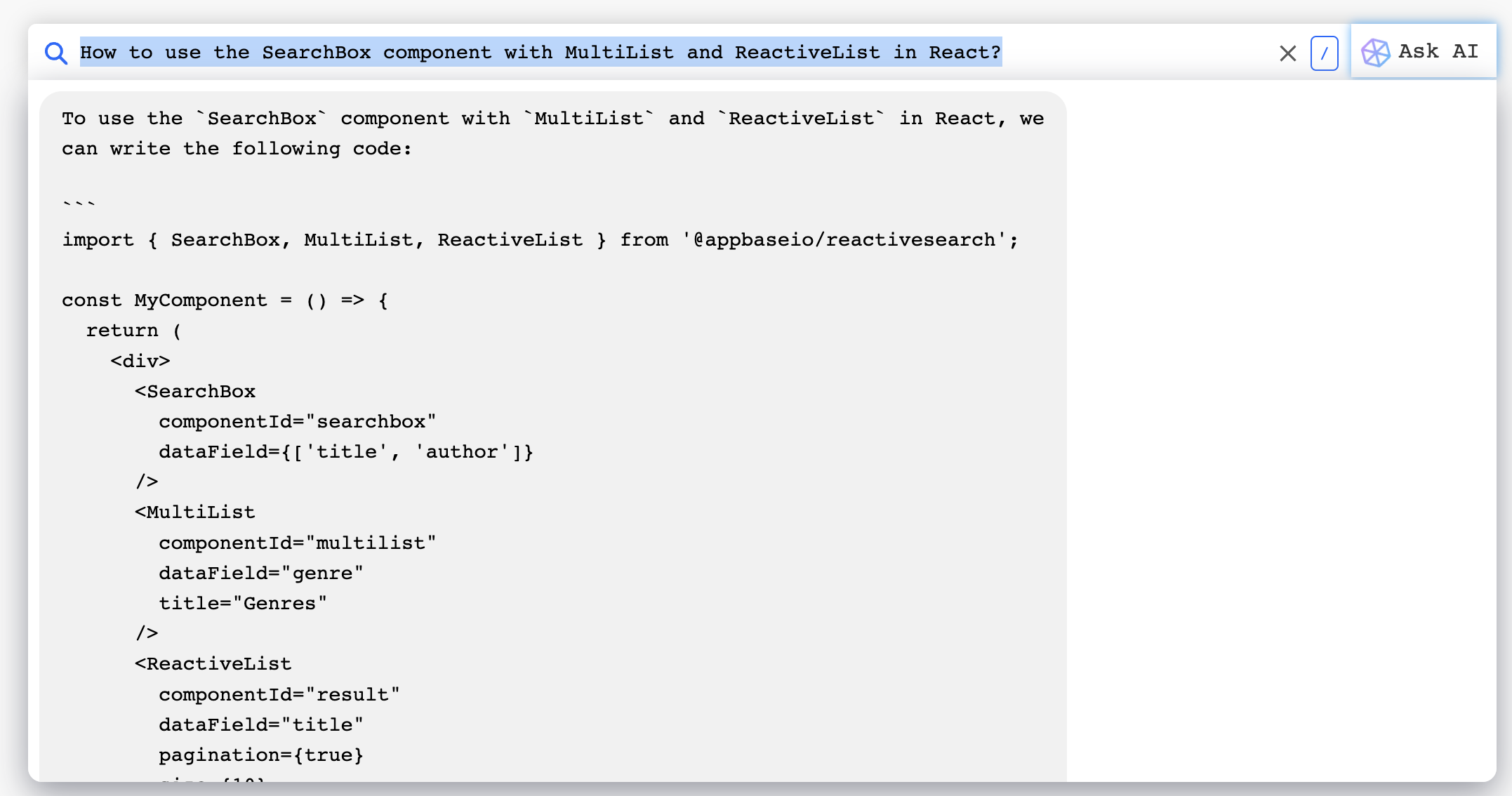

Here's the application I'm going to build:

LTM-QA

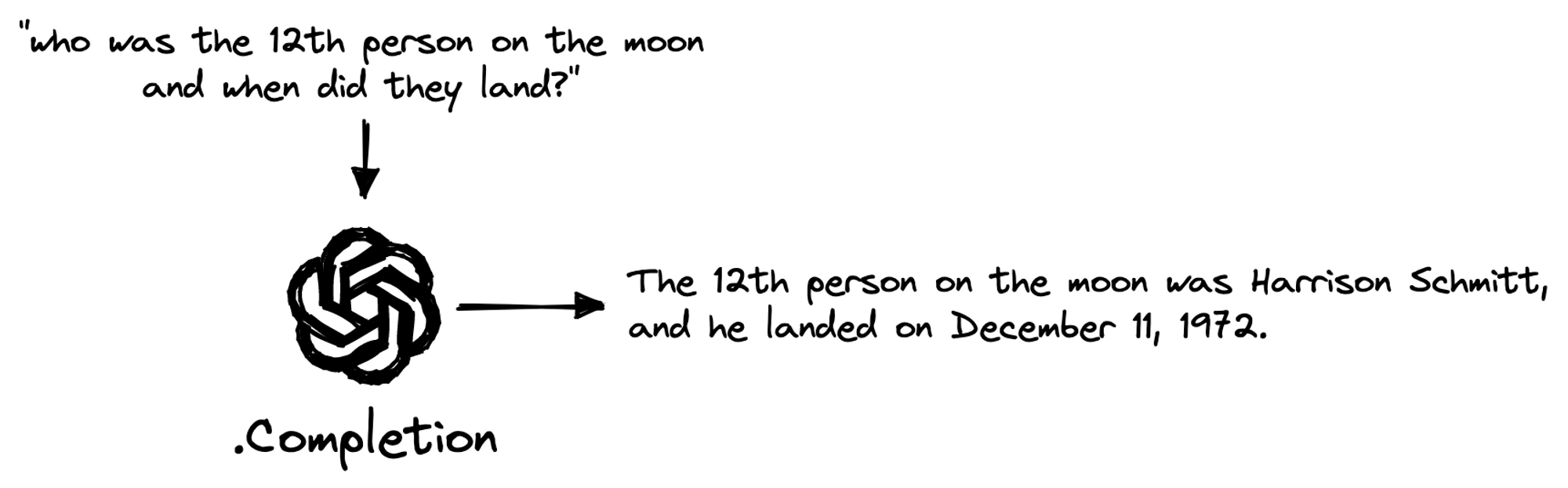

ChatGPT works as a GQA (Generative Question Answering) system. A basic GQA system only requires a user text query and a large language model (LLM).

I enhance GQA with LTM-QA, which stands for Long-Term Memory Question Answering. LTM-QA lets the system store information for a long time (here, in a search index) and recall the relevant parts when a question comes in. This improves both precision and recall, a win-win situation.

The innovative idea here is that I use BM-25 relevance (a TF/IDF-style ranking function) instead of requiring data vectorization. Vector search is also possible, and a post on that is forthcoming. Elasticsearch and OpenSearch (the Apache 2.0 derivative of the former) are the go-to search engines for relevant text search with filtering capabilities. If your search is used by people who are familiar with the data, BM-25-based relevance offers instant results at scale and query remixing capabilities that are still very nascent in vector search today.
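To make the BM-25 idea concrete, here is a minimal sketch of the textbook scoring formula over a tiny in-memory corpus. It is an illustration only: the function names, the sample documents, and the simple tokenizer are my own assumptions, and Elasticsearch's actual implementation differs in its details (analyzers, index statistics, and so on). The `k1` and `b` values are the commonly used defaults.

```javascript
// Textbook BM25 over an in-memory corpus (illustration, not Elasticsearch's code).
// tokenize() is a deliberately naive, hypothetical analyzer.
function tokenize(text) {
  return text.toLowerCase().match(/[a-z0-9]+/g) || [];
}

function bm25Scores(query, docs, k1 = 1.2, b = 0.75) {
  const tokenized = docs.map(tokenize);
  const avgLength =
    tokenized.reduce((sum, tokens) => sum + tokens.length, 0) / docs.length;
  const queryTerms = new Set(tokenize(query));

  return tokenized.map((tokens) => {
    let score = 0;
    for (const term of queryTerms) {
      // Term frequency: how often the term occurs in this document.
      const tf = tokens.filter((t) => t === term).length;
      if (tf === 0) continue;
      // Document frequency: how many documents contain the term.
      const docFreq = tokenized.filter((d) => d.includes(term)).length;
      // Inverse document frequency: rarer terms contribute more.
      const idf = Math.log(1 + (docs.length - docFreq + 0.5) / (docFreq + 0.5));
      // Length normalisation: long documents get less credit per occurrence.
      const norm = tf + k1 * (1 - b + (b * tokens.length) / avgLength);
      score += (idf * tf * (k1 + 1)) / norm;
    }
    return score;
  });
}

const sampleDocs = [
  "ReactiveComponent lets you connect any React UI component with an Elasticsearch query",
  "SearchBox creates a search box UI component with autosuggest",
  "Import your data with the dashboard or the REST API",
];
// Only the first document mentions the query terms, so only it gets a non-zero score.
console.log(bm25Scores("elasticsearch query", sampleDocs));
```

No embeddings are involved: the score depends only on term counts and document lengths, which is why this kind of relevance is cheap to compute at scale.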

The key idea is to combine the power of ChatGPT with state-of-the-art search engines such as Elasticsearch and OpenSearch to create a robust search experience that's relevant, accurate, concise, and adapts to the users' prompts.
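The flow this combination implies can be sketched in a few lines: search first, then hand the top hits to the model as context. This is a rough sketch under my own assumptions. `searchDocs` and `askLLM` are hypothetical stand-ins for a search client and an LLM client, and the prompt wording is illustrative rather than what ReactiveSearch sends.

```javascript
// Build a prompt that asks the model to answer from retrieved documents.
// The hit shape ({ heading, tokens }) mirrors the indexed docs in this article.
function buildPrompt(question, hits, topDocs = 3) {
  const context = hits
    .slice(0, topDocs)
    .map((hit, i) => `[${i + 1}] ${hit.heading}: ${hit.tokens}`)
    .join("\n");
  return (
    "Answer the question using only the context below.\n\n" +
    `Context:\n${context}\n\nQuestion: ${question}`
  );
}

// Retrieve-then-answer: searchDocs and askLLM are hypothetical async functions
// injected by the caller, not real client APIs.
async function answerWithContext(question, searchDocs, askLLM, topDocs = 3) {
  const hits = await searchDocs(question); // long-term memory lookup
  const prompt = buildPrompt(question, hits, topDocs);
  return { prompt, answer: await askLLM(prompt) };
}

// Stubbed usage so the sketch runs without any network access.
const fakeSearch = async () => [
  {
    heading: "Usage",
    tokens: "ReactiveComponent connects any React UI component with an Elasticsearch query.",
  },
];
const fakeLLM = async (prompt) => `(model reply to a ${prompt.length}-character prompt)`;

answerWithContext("What is ReactiveComponent?", fakeSearch, fakeLLM).then((result) =>
  console.log(result.prompt)
);
```

Because the model only sees what the search step returns, updating the index is enough to update the answers.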

🤔 Elasticsearch and OpenSearch are the standards (10x adoption, proven at scale, and good DX) where developers already keep their long-term search data, and they support both BM-25 and vector search-based relevance models.

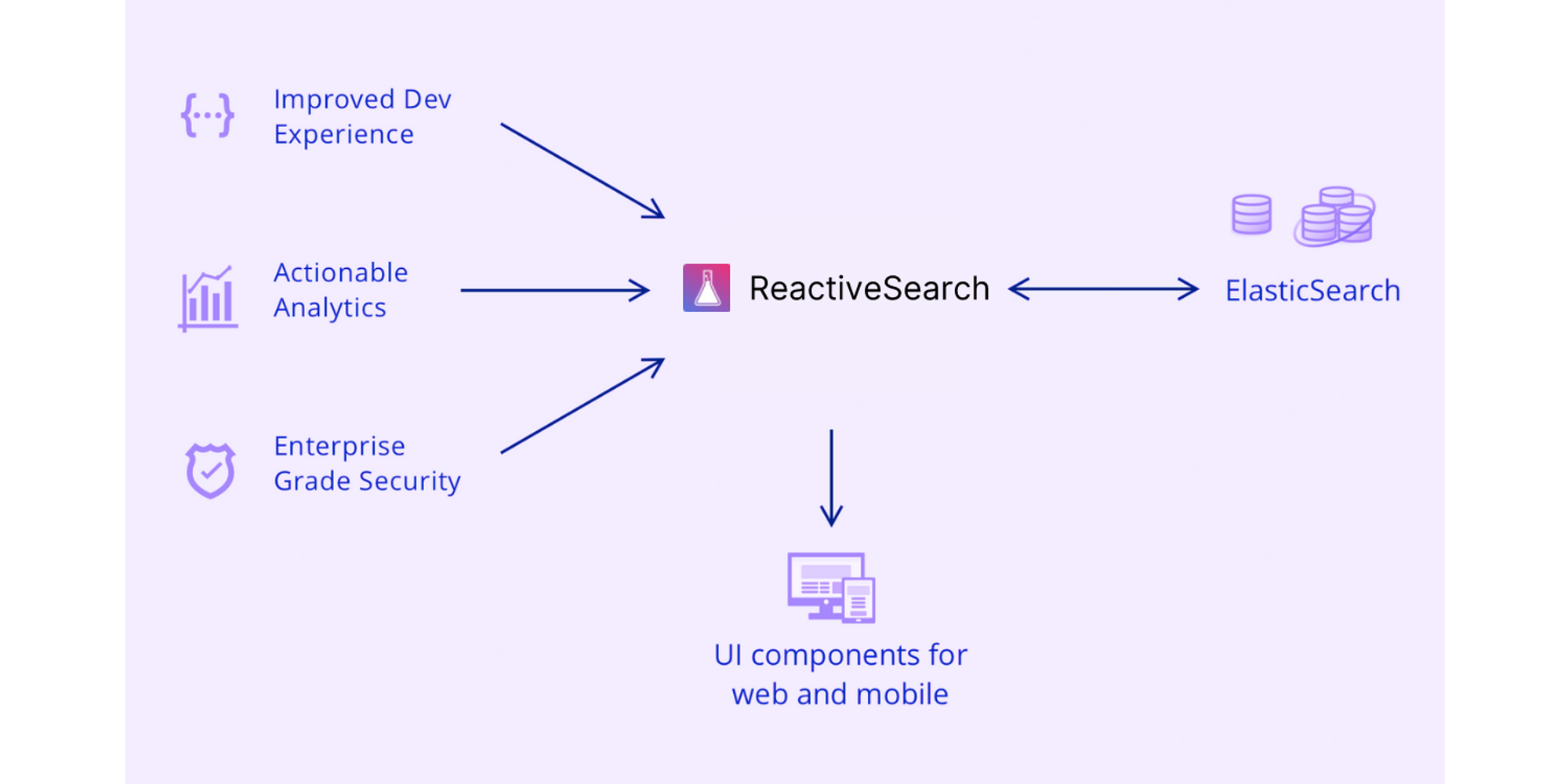

ReactiveSearch is a Cloudflare for Search Engines

ReactiveSearch.io offers a supercharged search experience for building the most demanding app search experiences, with a no-code UI builder and search relevance control plane, AI Answering support (which I will be using here), pipelines to create an extensible search backend, caching, and search insights.

There are additional open-source and hosted tools that simplify the process of building search experiences. You can:

Import your data from various sources via the dashboard or CLI or REST APIs,

Build production-grade search UIs using UI component libraries that are available for React, Vue, React Native, Flutter, and vanilla JavaScript,

Get out-of-the-box actionable analytics on top searches, no-result searches, slow queries, and more,

Get improved search performance and throughput with application layer caching,

Build access-controlled search experiences with built-in Basic Auth or JWT-based authentication, read/write access keys with granular ACLs, field level security, IP-based rate limits, and time to live - read more over here.

With recent upgrades, ReactiveSearch supports all major search engine backends, such as OpenSearch, MongoDB Atlas, Solr, and OpenAI.

Visit the website here.

Data Prep

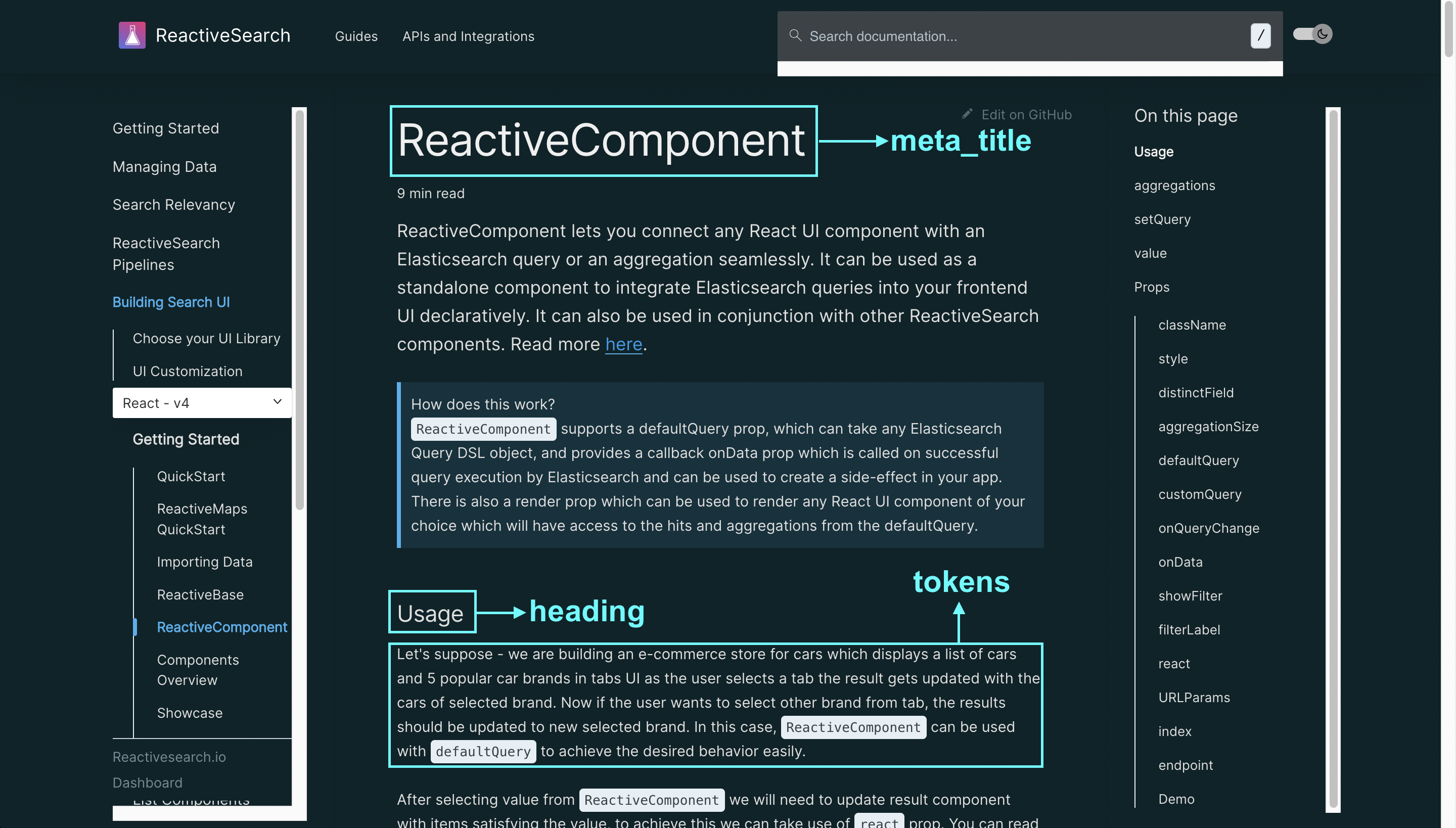

As Elasticsearch works with JSON natively, I will create a search index using the JSON data of ReactiveSearch's documentation pages.

A document looks like this:

{
  meta_title: "ReactiveComponent",
  meta_description: "ReactiveComponent lets you connect any React UI component with an Elasticsearch query or an aggregation seamlessly.",
  keywords: ["react", "web", "reactivesearch", "ui"],
  heading: "Usage",
  tokens: "ReactiveComponent lets you connect any React UI component with an Elasticsearch query or an aggregation seamlessly. It can be used as a standalone component to integrate Elasticsearch queries into your frontend UI declaratively. It can also be used in conjunction with other ReactiveSearch components. Read more here."
}

The indexing structure I've decided to use here treats each section of a page as a separate document. The following image shows the fields mapped to the documentation page.
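Here is a small sketch of the section-per-document idea. The input `page` shape (a title, description, keywords, and a list of sections) is a hypothetical format I'm assuming for illustration; only the output field names come from the document shown above.

```javascript
// Turn one documentation page into one search document per section.
// The `page` input shape is hypothetical; the output fields match the indexed docs.
function pageToSectionDocs(page) {
  return page.sections.map((section) => ({
    meta_title: page.title,
    meta_description: page.description,
    keywords: page.keywords,
    heading: section.heading,
    tokens: section.text,
  }));
}

const samplePage = {
  title: "ReactiveComponent",
  description: "ReactiveComponent lets you connect any React UI component with an Elasticsearch query.",
  keywords: ["react", "web", "reactivesearch", "ui"],
  sections: [
    { heading: "Usage", text: "It can be used as a standalone component." },
    { heading: "Props", text: "componentId is a unique identifier of the component." },
  ],
};

// Two sections in, two documents out, each carrying the page-level metadata.
console.log(pageToSectionDocs(samplePage));
```

Indexing at section granularity keeps each hit short and focused, which matters later when the top hits are passed to the model as context.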

For your dataset, you can index using the ReactiveSearch dashboard's importer interface (which supports JSON, JSONL, and CSV formats) or with the REST API.
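If you index against Elasticsearch directly, its `_bulk` endpoint expects newline-delimited JSON: one action line followed by one document line, with a trailing newline at the end. The sketch below builds such a body. The `clusterUrl` and index name are placeholders I've made up, and the ReactiveSearch importer or its REST API may expect a different request shape, so treat this as an Elasticsearch-level illustration only.

```javascript
// Build an NDJSON body for Elasticsearch's _bulk endpoint:
// an action line, then the document itself, for every document.
function toBulkBody(indexName, docs) {
  const lines = [];
  for (const doc of docs) {
    lines.push(JSON.stringify({ index: { _index: indexName } }));
    lines.push(JSON.stringify(doc));
  }
  // The bulk API requires the body to end with a newline.
  return lines.join("\n") + "\n";
}

// Hypothetical helper: clusterUrl is a placeholder for your own cluster address.
// Uses the global fetch available in Node 18+ and in browsers.
async function bulkIndex(clusterUrl, indexName, docs) {
  const response = await fetch(`${clusterUrl}/_bulk`, {
    method: "POST",
    headers: { "Content-Type": "application/x-ndjson" },
    body: toBulkBody(indexName, docs),
  });
  return response.json();
}

console.log(
  toBulkBody("docs_demo", [
    { heading: "Usage", tokens: "ReactiveComponent lets you connect any React UI component." },
  ])
);
```

Only `toBulkBody` runs here; `bulkIndex` is defined but not called, since it needs a reachable cluster and credentials.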

Building the Search UI

All the code I'm going to share is located inside the ask-reactivesearch repo. If you're new to it, I recommend going through the quick start guide of the ReactiveSearch UI library. I'll also introduce a new component called AIAnswer which creates an experience similar to ChatGPT.

Configuring ReactiveBase

// src/App.jsx
import { ReactiveBase } from "@appbaseio/reactivesearch";

// ReactiveBase connects every child component to the search index.
function Main() {
  return (
    <ReactiveBase
      app="reactivesearch_docs_v2"
      url="https://a03a1cb71321:75b6603d-9456-4a5a-af6b-a487b309eb61@appbase-demo-ansible-abxiydt-arc.searchbase.io"
      theme={{
        typography: {
          fontFamily: "monospace",
          fontSize: "16px",
        },
      }}
      reactivesearchAPIConfig={{
        recordAnalytics: true,
      }}
    >
      {/* All components go here */}
    </ReactiveBase>
  );
}

const App = () => <Main />;

export default App;

You can read about the props we're setting in more detail in the documentation reference over here.

Adding SearchBox Component

function Main() {
  return (
    <ReactiveBase {...config}>
      <SearchBox
        componentId="search"
        // Fields to search, with a relative weight for each
        dataField={[
          {
            field: "keywords",
            weight: 4,
          },
          {
            field: "heading",
            weight: 1,
          },
          {
            field: "tokens",
            weight: 1,
          },
          {
            field: "meta_title",
            weight: 2,
          },
        ]}
        distinctField="meta_title.keyword"
        highlight={false}
        size={5}
        autosuggest={true}
        URLParams
        showClear
      />
    </ReactiveBase>
  );
}

The SearchBox component above uses a specific configuration. I've set up the dataField property so that matches in keywords count the most (weight 4), followed by meta_title (weight 2), with heading and tokens at weight 1. I figured out the best weights for these fields through a bit of trial and error.
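For intuition about what field weights mean at the search engine level, here is a rough sketch that maps a weighted field list onto an Elasticsearch `multi_match` query using the `field^boost` syntax. This is an approximation I'm assuming for illustration: the query ReactiveSearch actually generates is its own and is likely more elaborate, and `toMultiMatchQuery` is a hypothetical helper name.

```javascript
// Map a weighted field list to an Elasticsearch multi_match query.
// Illustration only: ReactiveSearch builds its own query internally.
function toMultiMatchQuery(value, dataField) {
  return {
    query: {
      multi_match: {
        query: value,
        // "field^boost" is Elasticsearch's syntax for per-field boosts.
        fields: dataField.map(({ field, weight }) => `${field}^${weight}`),
      },
    },
  };
}

const weightedFields = [
  { field: "keywords", weight: 4 },
  { field: "heading", weight: 1 },
  { field: "tokens", weight: 1 },
  { field: "meta_title", weight: 2 },
];

console.log(JSON.stringify(toMultiMatchQuery("searchbox props", weightedFields), null, 2));
```

A match in a field with boost 4 contributes four times as much to the score as the same match in a field with boost 1, which is why tuning these numbers changes the ranking.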

I also want to show some FAQs, so I'll create custom suggestions. You can check out the code for the render function on GitHub and see the result in the CodeSandbox below.

Rendering AI Response

There's one last step I want to share with you. It's about choosing to get an AI response for your search query right inside the SearchBox component. All I have to do is set enableAI to true, and just like that, I have a working AI searchbox. Now, since I'm creating suggestions myself, I also choose to render the AI response. The response and other properties come as parameters of my render function.

Adding an AI config is completely up to you. If you're interested in fine-tuning the precision of your AI response, it can be quite handy. You can find more details in the documentation.

<SearchBox
  enableAI
  AIConfig={{
    // Plain strings, not template literals: JavaScript does not interpolate
    // the ${...} placeholders here, so they are passed on exactly as written.
    docTemplate:
      "title is '${source.title}', page content is '${source.tokens}', URL is https://docs.reactivesearch.io${source.url}",
    queryTemplate:
      "Answer the query: '${value}', cite URL in your answer from the context",
    // Number of top search hits to use as context
    topDocsForContext: 3,
  }}
  AIUIConfig={{
    askButton: true,
    // Custom "Ask AI" button; clickHandler is supplied by SearchBox
    renderAskButton: (clickHandler) => {
      return (
        <button className="ask-ai-button" onClick={clickHandler}>
          <span className="button-emoji">
            <ButtonSvg />
          </span>
          <span className="button-text">{"Ask AI"}</span>
        </button>
      );
    },
  }}
  render={({
    downshiftProps: {
      isOpen,
      getItemProps,
      highlightedIndex,
      selectedItem,
    },
    // AI answer and its loading state, alongside the regular search results
    AIData: { answer: aiAnswer, isAILoading },
    data,
    loading,
  }) => {
    /* Rendering SearchBox with JSX */
  }}
/>;
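To show how the docTemplate and queryTemplate strings in the AIConfig above could turn into prompt text, here is a minimal placeholder-filling sketch. The real substitution happens inside ReactiveSearch, so `fillTemplate` and its behavior for unknown placeholders are my own assumptions, and the sample hit (with `title`, `tokens`, and `url` fields) is hypothetical.

```javascript
// Replace ${path.to.value} placeholders with values looked up in `context`.
// Unknown placeholders are left untouched (an assumption made for this sketch).
function fillTemplate(template, context) {
  return template.replace(/\$\{([\w.]+)\}/g, (match, path) => {
    const value = path
      .split(".")
      .reduce((obj, key) => (obj == null ? undefined : obj[key]), context);
    return value === undefined ? match : String(value);
  });
}

// Note the double quotes: these are plain strings, so JavaScript itself
// leaves the ${...} placeholders alone.
const docTemplate =
  "title is '${source.title}', page content is '${source.tokens}', URL is https://docs.reactivesearch.io${source.url}";
const queryTemplate =
  "Answer the query: '${value}', cite URL in your answer from the context";

// Hypothetical search hit used only for this demonstration.
const sampleHit = {
  title: "SearchBox",
  tokens: "SearchBox creates a search box UI component.",
  url: "/docs/reactivesearch/react/search/searchbox/",
};

console.log(fillTemplate(docTemplate, { source: sampleHit }));
console.log(fillTemplate(queryTemplate, { value: "How do I enable AI answers?" }));
```

With `topDocsForContext: 3`, one would expect the document template to be filled once per top hit and the results combined into the context that accompanies the query.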

The final app appears as follows:

Try searching for your ReactiveSearch questions and share your experience.

Summary

This blog post discusses the integration of ChatGPT and Elasticsearch for fact-based question-answering for documentation. It begins by introducing ChatGPT, a language model developed by OpenAI, and its application in answering questions and providing information on a wide range of topics, including technical documentation. The post then delves into the limitations of ChatGPT, particularly its inability to access data beyond 2021, and proposes a solution to this issue by integrating it with Elasticsearch, a search engine that provides context for more accurate responses.

The post provides a detailed walkthrough of building an application that leverages ChatGPT and Elasticsearch. It introduces LTM-QA (Long-Term Memory Question Answering), a system that enhances GQA (Generative Question Answering) by storing information for extended periods and recalling it as needed. The integration of BM-25 (TF/IDF) relevance with Elasticsearch and OpenSearch is also discussed.

The source code for the application is open-source, and you can adapt it to build your own AI answering search UI with ReactiveSearch.


Written by

Siddharth Kothari


CEO @reactivesearch, search engine dx