MLOps Zoomcamp: week 2 (W&B)

Wonhyeong Seo

Introduction

In the realm of data science and machine learning, experiment tracking plays a vital role in organizing and understanding the various iterations of models, parameters, and results. As projects become more complex, it becomes increasingly important to have a reliable and efficient method for tracking experiments. In this blog post, we will explore the exciting capabilities of Weights & Biases (W&B) for experiment tracking. We will delve into the benefits of using W&B, such as the informative walkthrough videos and code on GitHub, and discuss the potential it holds for future projects.

What is Weights & Biases?

Weights & Biases is an excellent experiment tracking tool that simplifies the process of logging, visualizing, and analyzing machine learning experiments. It provides a centralized platform to manage and compare experiments, making it easy to track models, hyperparameters, and metrics. With W&B, data scientists can better understand the performance of their models, make informed decisions, and collaborate effectively with team members.

Engaging with Weights & Biases

One of the standout features of W&B is its engaging user experience. Upon exploring the platform, I was pleasantly surprised by the extensive collection of walkthrough videos, along with the example code available on GitHub. These resources provide a comprehensive introduction to W&B and offer step-by-step guidance on getting started and using its functionality effectively.

The Walkthrough Videos

The walkthrough videos available on the W&B website are an excellent starting point for beginners. They cover a wide range of topics, including experiment tracking basics, hyperparameter optimization, and integration with popular machine learning frameworks. The videos are concise and easy to follow, so users can quickly grasp the core concepts of W&B. Whether you are new to experiment tracking or a seasoned practitioner, they are a valuable resource for deepening your understanding of W&B. A special webinar was hosted for MLOps Zoomcamp here:

Code on GitHub

Another aspect of W&B that greatly enhances the learning experience is the availability of code examples on GitHub. These examples serve as a practical guide for incorporating W&B into your existing projects. The well-documented code snippets demonstrate how to log experiments, track parameters and metrics, and visualize results. With the code readily available, users can easily adapt it to their specific use cases, which makes onboarding smooth and efficient. This repo in particular accompanies the MLOps Zoomcamp webinar mentioned above:

Benefits of Using Weights & Biases

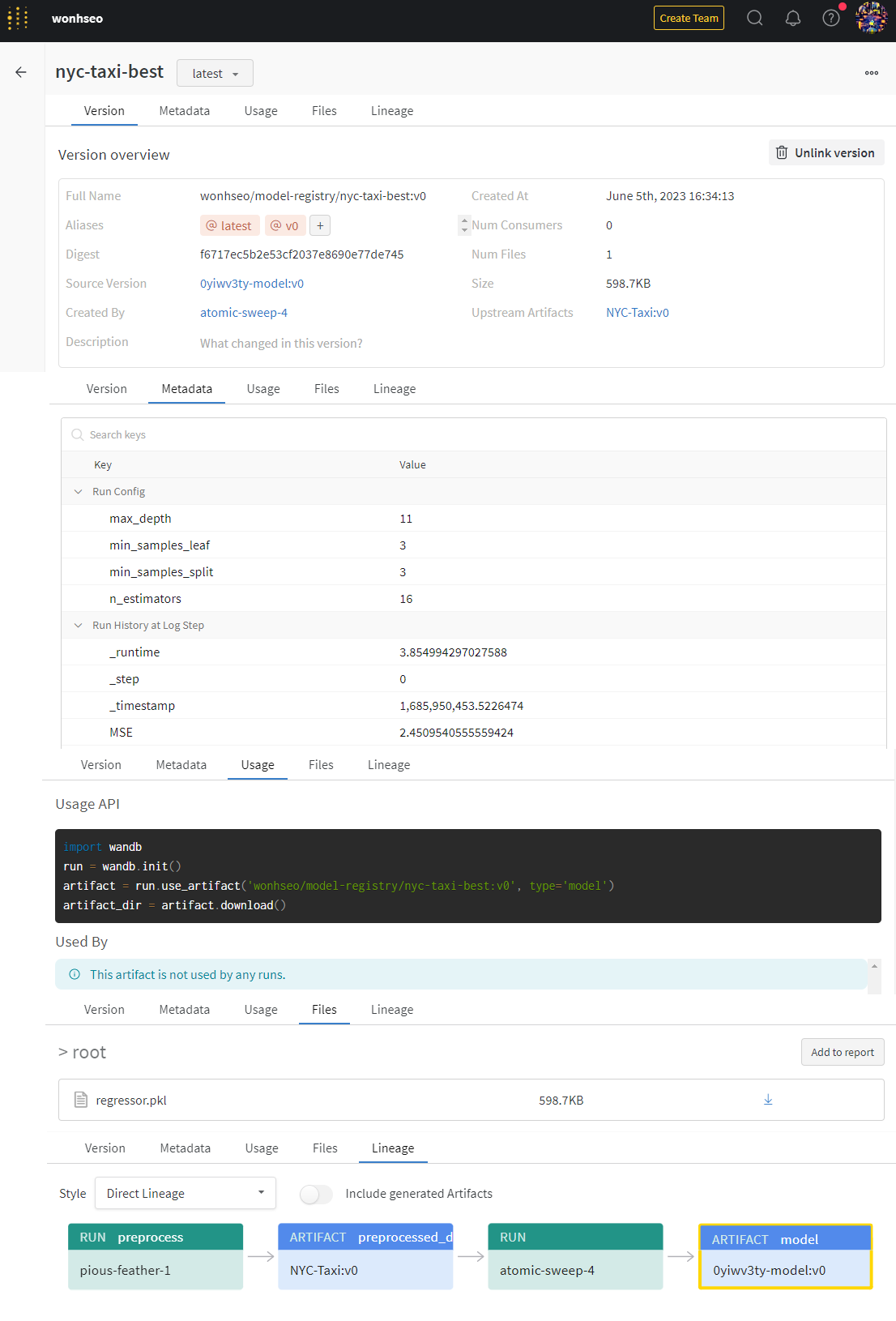

Simplified Experiment Tracking: With W&B, experiment tracking becomes a breeze. The platform provides a simple API that seamlessly integrates with popular machine learning frameworks. By logging relevant parameters, metrics, and artifacts, you can maintain a clear record of your experiments and reproduce them effortlessly.
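To make the logging workflow concrete, here is a minimal sketch, assuming the `wandb` Python package is installed. The project name, the hyperparameters, and the fake loss curve are all made up for illustration; they are not taken from the webinar or its repo. `mode="disabled"` turns the W&B calls into no-ops so the sketch runs without an account; removing it (after `wandb login`) should record a real run.

```python
import math

try:
    import wandb  # pip install wandb
except ImportError:  # lets the sketch run even without the package
    wandb = None

# Hypothetical hyperparameters, for illustration only.
CONFIG = {"learning_rate": 0.1, "epochs": 5}


def fake_loss(epoch, learning_rate):
    """Stand-in for a real training step: a loss that decays over epochs."""
    return math.exp(-learning_rate * epoch)


def train(config, log):
    """Run the fake training loop, passing each epoch's metrics to `log`."""
    history = []
    for epoch in range(config["epochs"]):
        metrics = {"epoch": epoch, "loss": fake_loss(epoch, config["learning_rate"])}
        log(metrics)  # with W&B this is run.log({...})
        history.append(metrics)
    return history


if wandb is not None:
    # config= stores the hyperparameters alongside the run;
    # mode="disabled" means nothing is sent to the W&B servers.
    run = wandb.init(project="mlops-zoomcamp-demo", config=CONFIG, mode="disabled")
    history = train(CONFIG, run.log)
    run.finish()
else:
    # Fallback so the example still does something without wandb installed.
    history = train(CONFIG, print)
```

The training loop only receives a `log` callable, so the same code works with `run.log`, `print`, or any other sink. That is a design choice of this sketch, not something W&B requires.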

Visualizing and Analyzing Results: W&B offers a rich set of visualization tools to explore and understand your experiment results. With interactive charts, plots, and tables, you can gain valuable insights into the performance of your models. Comparing different runs becomes effortless, allowing you to identify trends and make data-driven decisions.

Collaborative Workflows: Collaboration is essential in any data science project, and W&B facilitates effective teamwork. With its centralized platform, team members can easily share experiments, findings, and insights. The ability to leave comments and annotations further promotes communication and knowledge sharing.

Future Possibilities with Weights & Biases

Having experienced the benefits of W&B firsthand, I am excited about the potential it holds for future projects. W&B continually evolves, adding new features and integrations to enhance experiment tracking. Here are a few areas where W&B can further empower data scientists:

- Advanced Experiment Comparison: W&B's current comparison features are already useful, but there is scope for expansion. Enhancing the ability to compare different experiments across multiple dimensions, such as hyperparameters and model architectures, would provide deeper insights into model performance.
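To illustrate what comparing "across multiple dimensions" could mean, here is a toy sketch in plain Python. It does not use any W&B API; the run records, model names, and RMSE values are invented, and `compare_by` is a hypothetical helper.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical finished runs: a config plus one final metric each.
runs = [
    {"config": {"model": "random_forest", "max_depth": 5}, "rmse": 7.5},
    {"config": {"model": "random_forest", "max_depth": 10}, "rmse": 6.5},
    {"config": {"model": "xgboost", "max_depth": 5}, "rmse": 7.0},
    {"config": {"model": "xgboost", "max_depth": 10}, "rmse": 6.0},
]


def compare_by(runs, key, metric="rmse"):
    """Average `metric` over runs grouped by a single config key."""
    groups = defaultdict(list)
    for run in runs:
        groups[run["config"][key]].append(run[metric])
    return {value: mean(scores) for value, scores in groups.items()}


# The same runs, sliced along two different dimensions.
by_model = compare_by(runs, "model")
by_depth = compare_by(runs, "max_depth")
print(by_model)
print(by_depth)
```

Slicing the same set of runs by architecture and then by a hyperparameter is the kind of view the bullet above has in mind; a richer version would group by several keys at once.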

My code and screenshots
