Terraform State Management

Sudipa Das

Table of contents

- Introduction

- 1. Terraform State

- 2. How does Terraform state help track the current state of resources?

- 3. Storing State Files

- 4. Create a simple Terraform configuration file, initialize it to generate a local state file, and look at the terraform state command and its purpose

- 5. Explore remote state management options using AWS S3

Introduction

Did you know that Terraform keeps track of everything you create with it?

When you run the "terraform apply" command to create infrastructure in the cloud, Terraform creates a state file called "terraform.tfstate". This state file records the details of every resource defined in your Terraform code.

When you modify your code and apply it again, Terraform compares your configuration with what is recorded in the state file and works out which changes it has to make to the infrastructure.

Now let's take a deep dive into Terraform state!

1. Terraform State

Terraform records information about the resources it has created in a state file. This lets Terraform know which resources are under its control and when to update or destroy them. By default, the state file is named terraform.tfstate and is kept in the directory where Terraform is run.

The Terraform state file is written in plain, human-readable JSON. It looks like the screenshots below.

2. How does Terraform state help track the current state of resources?

Infrastructure resources can be complex and require multiple steps to create, configure, and manage. Terraform allows you to declare the desired state of your resources in code and Terraform uses the state file to keep track of the state of those resources.

-- Whenever a Terraform configuration is applied, Terraform compares it with the state to check whether anything has actually changed. Only the resources that have changed are updated.

-- Terraform keeps dependency information in the state file, so it can still handle resources correctly (for example, destroy them in the right order) after they have been removed from the current configuration.

-- For large infrastructures, Terraform can be told to skip the refresh (with the -refresh=false option) or to limit an operation to particular resources (with -target) instead of refreshing everything, which improves performance.

-- When the state is stored in a remote, shared location, every team member works from the same, latest version of the state, so collaboration is possible without people overwriting each other's changes.

-- With a remote store, access logging can be enabled so that unauthorized access to the state can be identified.

-- Storing the state remotely keeps sensitive values off individual machines and helps prevent them from being lost or leaked.

3. Storing State Files

Terraform state can be managed locally or remotely. By default, it is stored in the local directory where Terraform is run. Local state management stores the state file on the local file system, while remote state management stores the state file in a remote data store such as Microsoft Azure Blob Storage or AWS S3. Remote state management provides better collaboration and security features than local state management.

So, as you might already have guessed, Terraform state management can be divided into two categories:

-- local state management

-- remote state management

Local state management:

Local state management stores the state on the local file system of the machine that runs Terraform, in a file named terraform.tfstate. It is the default, so it is the easiest way to get up and running with Terraform deployments, and it suits single-person projects or very small teams because there is nothing extra to set up or manage.

However, it has some disadvantages. It is vulnerable to data loss and is challenging for team collaboration. Therefore, it is recommended to use remote state management with production-level Terraform deployments.

Remote state management:

Remote state management allows you to store the state in a remote data store. Terraform supports several remote data stores, including Microsoft Azure Blob Storage, AWS S3, Google Cloud Storage, and HashiCorp Terraform Cloud.

One of the significant advantages of using remote state management in Terraform is improved collaboration. Remote state management allows team members to access and update the state file from a central location. This helps to avoid conflicts that may arise when multiple team members work on the same infrastructure.

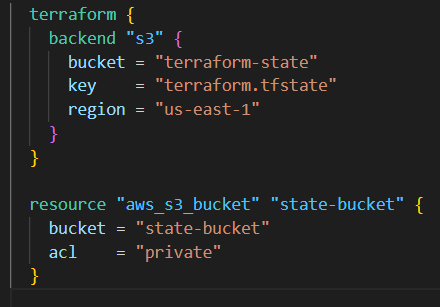

Section 5 walks through an example of how to configure remote state management using S3.

4. Create a simple Terraform configuration file, initialize it to generate a local state file, and look at the terraform state command and its purpose

I have created a file called "resource.tf". Below is a snapshot of it.

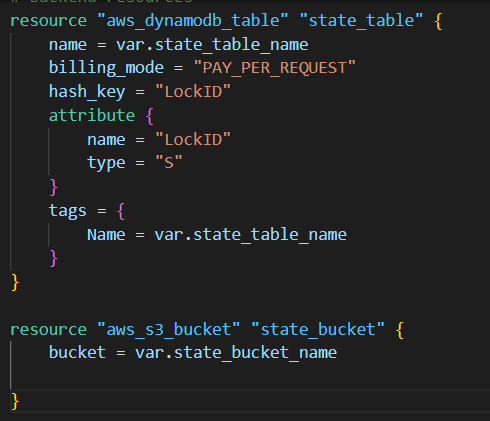
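Since the snapshot is an image, here is a minimal sketch of what such a resource.tf could look like. It is not a copy of the file in the screenshot: the region, the bucket name, the table name, and the resource labels are all placeholders assumed for illustration.

```hcl
# resource.tf: illustrative sketch only; every name below is an assumption

terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
    }
  }
}

provider "aws" {
  region = "us-east-1" # assumed region
}

# S3 bucket (bucket names must be globally unique; this one is a placeholder)
resource "aws_s3_bucket" "state_bucket" {
  bucket = "example-terraweek-state-bucket"
}

# DynamoDB table with a single string hash key
resource "aws_dynamodb_table" "state_lock" {
  name         = "example-terraform-lock" # placeholder table name
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }
}
```

Running terraform init and then terraform apply in the directory containing a file like this should produce a local terraform.tfstate next to it.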

Here we have created an S3 bucket and a DynamoDB table as an example. After executing the "terraform apply" command, the terraform.tfstate file is created automatically.

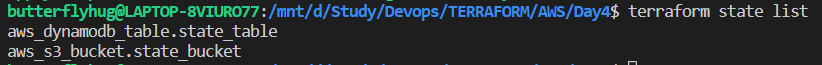

Multiple subcommands can be used with terraform state. Below are some examples.

list: Lists the resources recorded in the Terraform state file.

mv: Moves an item within the Terraform state. This command is used when you want to rename an existing resource without destroying and recreating it.

Syntax: terraform state mv [options] SOURCE DESTINATION

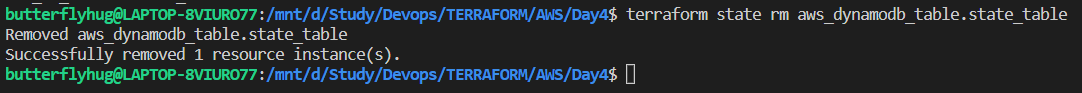
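The same kind of rename can also be declared in the configuration itself with a moved block, which is a different technique from the terraform state mv command shown above and is available in Terraform v1.1 and later. The sketch below assumes two hypothetical resource addresses, old_lock and state_lock; they are not taken from the screenshots.

```hcl
# Hypothetical rename: the resource block labelled "old_lock" has been
# renamed to "state_lock" in the configuration. Both labels are assumptions.
moved {
  from = aws_dynamodb_table.old_lock
  to   = aws_dynamodb_table.state_lock
}

# Roughly equivalent CLI form:
#   terraform state mv aws_dynamodb_table.old_lock aws_dynamodb_table.state_lock
```

With a moved block the rename shows up in terraform plan and is applied for everyone who uses the configuration, whereas terraform state mv edits the state directly and immediately.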

rm: The terraform state rm command is used to remove items from the Terraform state. Note that items removed from the Terraform state are not physically destroyed.

For example, if you remove an AWS instance from the state, the AWS instance will continue running, but terraform plan will no longer see that instance.

I have removed the DynamoDB table from the state, but the table still exists in AWS because I did not destroy it.

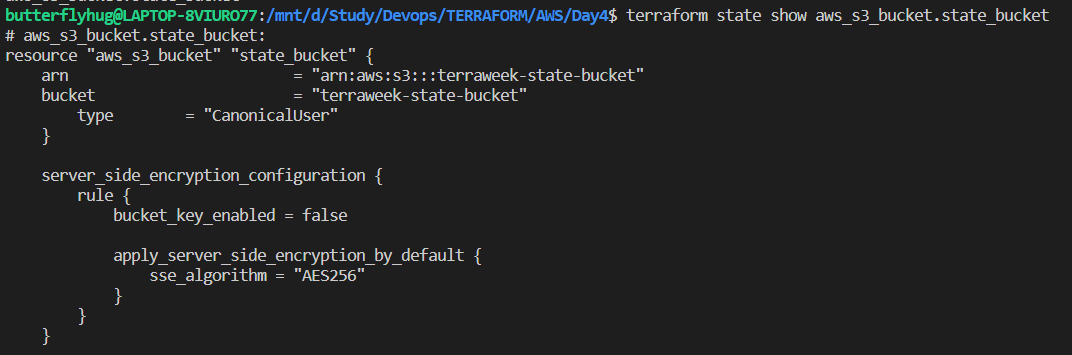
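Terraform v1.7 and later also offer a declarative alternative to terraform state rm: a removed block. This is a different mechanism from the command used above, shown here only as a sketch. The resource address state_lock is a hypothetical name, and the matching resource block has to be deleted from the configuration for the removed block to be accepted.

```hcl
# Stop managing the (hypothetical) DynamoDB table without deleting it in AWS.
removed {
  from = aws_dynamodb_table.state_lock

  lifecycle {
    destroy = false # keep the real resource; only forget it in the state
  }
}

# Roughly equivalent CLI form:
#   terraform state rm aws_dynamodb_table.state_lock
```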

show: The terraform state show command is used to show the attributes of a single resource in the Terraform state.

5. Explore remote state management options using AWS S3

When working with Terraform locally, the state files are created in the project’s root directory. This is known as the local backend and is the default.

These files should not be part of the Git repository. Every team member may run their own version of the Terraform configuration and end up modifying the state files, so there is a high chance of corrupting the state or making it inconsistent. Keeping state files in a remote Git repository is also discouraged because they may contain sensitive information such as credentials.

State files need to be stored in a secure, remote location. Storage services like AWS S3 offer a secure and reliable way to store and access files from inside and outside the internal network.

It is very easy to configure a remote backend using AWS S3 for any Terraform configuration. The steps are summarized below.

Create an S3 bucket.

The screenshot below shows the S3 bucket created to be used as the Terraform state file storage.

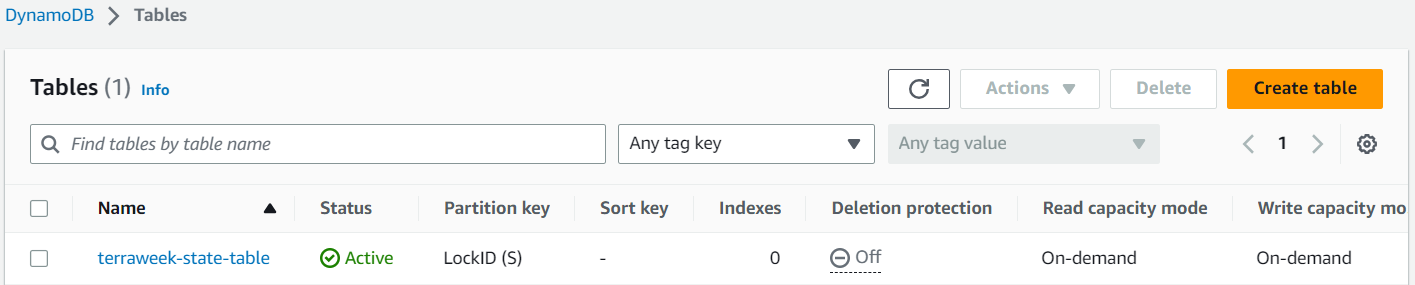

Create a DynamoDB table. The screenshot below shows the DynamoDB table created to hold the LockID for the Terraform operations.

Include the backend block within Terraform block in the Terraform configuration.

Specify the bucket name created in Step 1.

Specify the key attribute, which helps us create the state file with the same name as the provided value.

Specify the DynamoDB table created in Step 2.

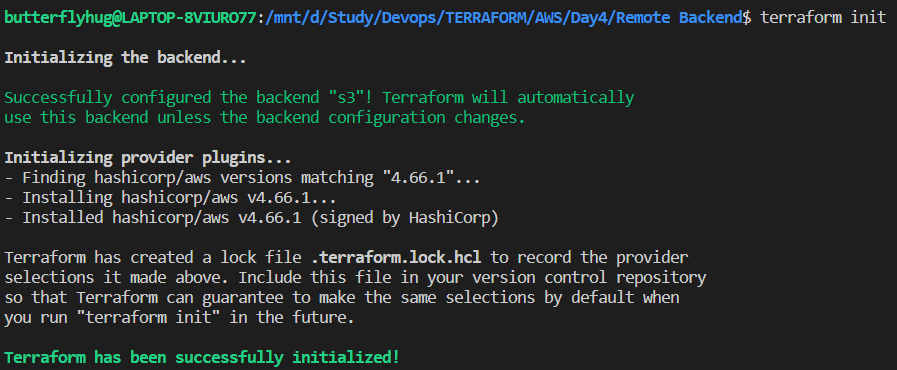
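In text form, a backend block along these lines might look like the sketch below. The bucket name, key, region, and table name are placeholders assumed for illustration; they would need to match the bucket and table you actually created in Steps 1 and 2.

```hcl
terraform {
  backend "s3" {
    bucket         = "example-terraweek-state-bucket" # bucket from Step 1 (placeholder)
    key            = "terraform.tfstate"              # name of the state object in the bucket
    region         = "us-east-1"                      # assumed region of the bucket
    dynamodb_table = "example-terraform-lock"         # table from Step 2 (placeholder)
    encrypt        = true                             # optional: encrypt the state at rest
  }
}
```

Values in a backend block have to be written out literally, since variables cannot be used there, and terraform init must be run again whenever the backend configuration changes.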

After creating the resources, initialize Terraform with the terraform init command. The output below confirms that the S3 backend has been successfully configured.

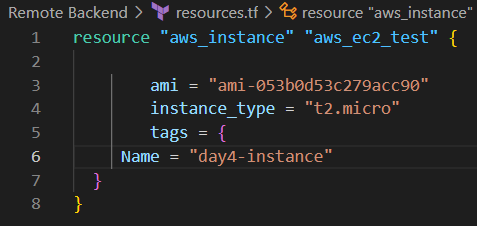

Let’s go ahead and create a simple EC2 instance.

I have used the configuration block below in my resource.tf file to do the same.

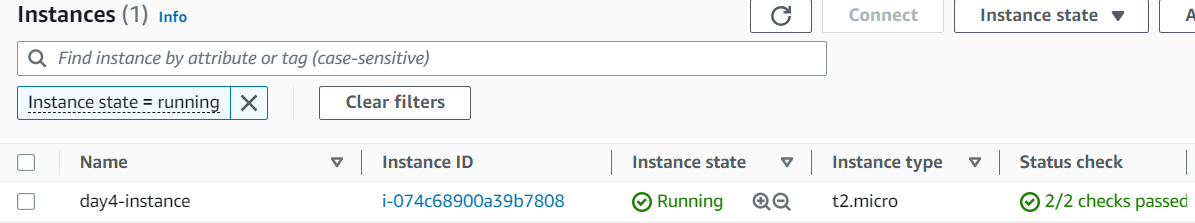
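A minimal sketch of such an EC2 configuration is shown here for readers who cannot see the image. The AMI ID is a made-up placeholder, and the instance type, resource label, and tag are assumptions rather than the values used in the screenshot.

```hcl
# Simple EC2 instance; replace the AMI ID with one valid in your region.
resource "aws_instance" "example" {
  ami           = "ami-0123456789abcdef0" # placeholder, not a real AMI
  instance_type = "t2.micro"              # assumed instance type

  tags = {
    Name = "terraweek-example" # hypothetical tag
  }
}
```

After terraform apply, the state for this instance is written to the S3 bucket configured in the backend instead of to a local terraform.tfstate file.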

Assuming everything went well, the EC2 instance should appear in the AWS console, as shown below.

Going back to the S3 bucket used for the remote backend configuration, we can also confirm that the state file was created and is being managed here.

These changes enable remote state storage using an S3 bucket and DynamoDB table for locking, with the specified names and configurations.

Suppose one developer is creating an EC2 instance while another developer is deleting an EC2 instance using the same configuration. That would create a conflict. With the remote backend, however, the state is locked while one person is running an operation against it, so the other person has to wait until that operation is complete.

GitHub link:

https://github.com/SudipaDas/TerraWeek.git


Thank you for reading!

Sudipa
