Mastering the Mechanics: Manual Calculations of Forward and Backward Propagation in Neural Networks

Naveen Kumar

INTRODUCTION

In this post, we work through the manual calculations of forward and backward propagation for a small neural network. By substituting numbers into the derived equations, we see how information flows through the network and how each weight is updated during training.

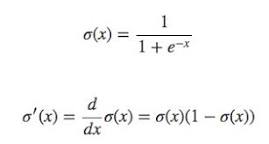

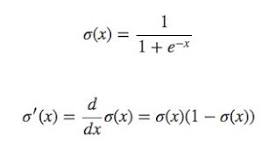

PRE-REQUISITE

Derivation of forward and backward propagation in neural networks

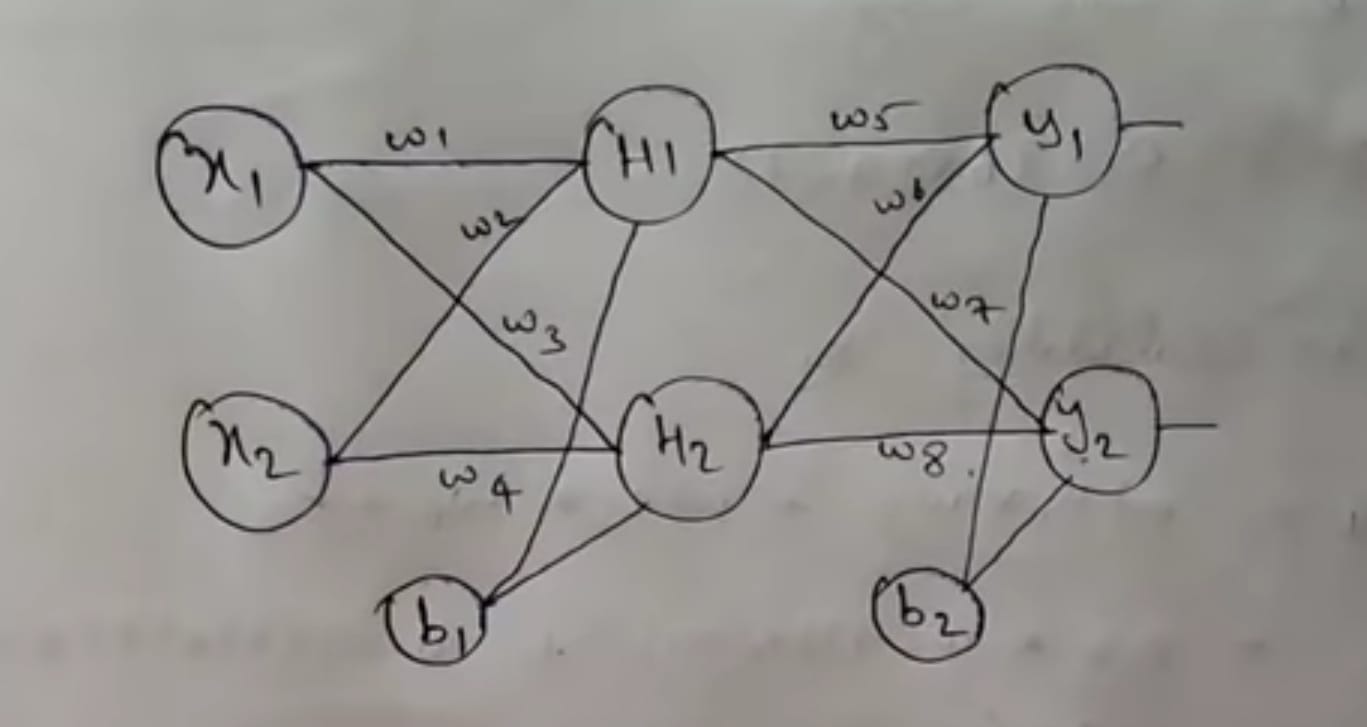

NEURAL NETWORK EXAMPLE

Consider a neural network with two inputs (x1, x2), two hidden neurons (H1, H2), and two outputs (y1, y2), with sigmoid activation and a learning rate of 0.5.

The inputs are x1=0.05 and x2=0.1, and the target values are t1=0.01 and t2=0.99.

The biases are b1=0.35 (hidden layer) and b2=0.60 (output layer).

The randomly initialized weights are w1=0.15, w2=0.20, w3=0.25, w4=0.30, w5=0.40, w6=0.45, w7=0.50, and w8=0.55.

SOLUTION

Step 1: Forward propagation

Step 1.1: Forward propagation for Hidden layers (H1, H2)

EQUATION 1 (H1):

$$H_1 = x_1 w_1 + x_2 w_2 + b_1$$

Substituting x1, w1, x2, w2, and b1:

$$H_1 = (0.05)(0.15) + (0.1)(0.20) + 0.35$$

$$H_1 = 0.3775$$

Applying the sigmoid activation:

$$H_{1out} = \sigma(H_1) = \frac{1}{1 + e^{-H_1}}$$

Substituting the H1 value, we get

$$H_{1out} = 0.593269992$$

EQUATION 2 (H2):

$$H_2 = x_1 w_3 + x_2 w_4 + b_1$$

Similarly, substituting the values:

$$H_2 = (0.05)(0.25) + (0.1)(0.30) + 0.35 = 0.3925$$

H2out:

$$H_{2out} = \sigma(H_2) = 0.596884378$$
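The hidden-layer step above can be checked with a few lines of Python. This is a minimal sketch using only the standard library; the function name `sigmoid` and the variable names are chosen here for illustration.

```python
import math

def sigmoid(z):
    """Logistic sigmoid: 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

# Inputs, hidden-layer weights, and hidden bias from the example
x1, x2 = 0.05, 0.10
w1, w2, w3, w4 = 0.15, 0.20, 0.25, 0.30
b1 = 0.35

# Weighted sums (Equations 1 and 2)
H1 = x1 * w1 + x2 * w2 + b1
H2 = x1 * w3 + x2 * w4 + b1

# Sigmoid activations of the two hidden neurons
H1out = sigmoid(H1)
H2out = sigmoid(H2)

print(f"H1 = {H1:.4f}, H1out = {H1out:.9f}")
print(f"H2 = {H2:.4f}, H2out = {H2out:.9f}")
```

Printing nine decimal places makes it easy to compare against the hand calculation and to see how much is lost when intermediate values are rounded.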

Step 1.2: Forward propagation for Output layer (y1, y2)

EQUATION 3 (y1):

$$y_1 = H_{1out} w_5 + H_{2out} w_6 + b_2$$

Substituting the values into the above equation:

$$y_1 = (0.593269992)(0.40) + (0.596884378)(0.45) + 0.60$$

y1:

$$y_1 = 1.105905967$$

Applying the sigmoid activation:

$$y_{1out} = \sigma(y_1) = \frac{1}{1 + e^{-y_1}}$$

y1out:

$$y_{1out} = 0.75136507$$

EQUATION 4 (y2):

$$y_2 = H_{1out} w_7 + H_{2out} w_8 + b_2$$

Similarly, for y2:

$$y_2 = (0.593269992)(0.50) + (0.596884378)(0.55) + 0.60 = 1.224921404$$

$$y_{2out} = \sigma(y_2) = \frac{1}{1 + e^{-y_2}}$$

y2out:

$$y_{2out} = 0.772928465$$
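The whole forward pass (Equations 1 to 4) can be sketched as one small function. The function name `forward` and the dictionary layout for the weights are assumptions made for this illustration only.

```python
import math

def sigmoid(z):
    """Logistic sigmoid: 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, w, b1, b2):
    """One forward pass of the 2-2-2 network; w maps 'w1'..'w8' to values."""
    x1, x2 = x
    # Hidden layer (Equations 1 and 2)
    H1out = sigmoid(x1 * w["w1"] + x2 * w["w2"] + b1)
    H2out = sigmoid(x1 * w["w3"] + x2 * w["w4"] + b1)
    # Output layer (Equations 3 and 4)
    y1out = sigmoid(H1out * w["w5"] + H2out * w["w6"] + b2)
    y2out = sigmoid(H1out * w["w7"] + H2out * w["w8"] + b2)
    return H1out, H2out, y1out, y2out

weights = {"w1": 0.15, "w2": 0.20, "w3": 0.25, "w4": 0.30,
           "w5": 0.40, "w6": 0.45, "w7": 0.50, "w8": 0.55}

H1out, H2out, y1out, y2out = forward((0.05, 0.10), weights, b1=0.35, b2=0.60)
print(f"y1out = {y1out:.9f}, y2out = {y2out:.9f}")
```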

Step 2: Calculating Error

We know that

$$E_{tot} = \frac{1}{2} \sum_i (\text{target}_i - \text{output}_i)^2$$

Let E1 and E2 be the errors for y1 and y2:

$$E_{tot} = E_1 + E_2 = \frac{1}{2}(t_1 - y_{1out})^2 + \frac{1}{2}(t_2 - y_{2out})^2$$

Substituting the values:

$$E_{tot} = \frac{1}{2}(0.01 - 0.75136507)^2 + \frac{1}{2}(0.99 - 0.772928465)^2$$

Total error:

$$E_{tot} = 0.298371108$$
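The error calculation can be reproduced with the short sketch below. The helper name `total_error` is hypothetical, and the outputs are copied from Step 1.2 rather than recomputed.

```python
def total_error(targets, outputs):
    """Half the sum of squared differences between targets and outputs."""
    return sum(0.5 * (t - o) ** 2 for t, o in zip(targets, outputs))

targets = (0.01, 0.99)               # t1, t2
outputs = (0.75136507, 0.772928465)  # y1out, y2out from Step 1.2

# Per-output errors E1 and E2, then their sum
E1 = 0.5 * (targets[0] - outputs[0]) ** 2
E2 = 0.5 * (targets[1] - outputs[1]) ** 2
E_tot = total_error(targets, outputs)

print(f"E1 = {E1:.6f}, E2 = {E2:.6f}, Etot = {E_tot:.6f}")
```

Most of the total comes from E1, because y1out is much further from its target than y2out is.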

Step 3: Backpropagation

Backpropagation updates the weights to reduce the error.

We know that

Equation (UPDATE):

$$w_i^{new} = w_i^{old} - lr \cdot \frac{\partial E}{\partial w_i^{old}}$$
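As a minimal sketch, the update rule is a single line of code. The function name `update_weight` and the numbers in the usage lines are hypothetical and chosen only to show the direction of the step.

```python
def update_weight(w_old, grad, lr=0.5):
    """Gradient-descent step: w_new = w_old - lr * dE/dw."""
    return w_old - lr * grad

# Hypothetical values, for illustration only
w_down = update_weight(w_old=0.80, grad=0.20)   # positive gradient lowers the weight
w_up = update_weight(w_old=0.80, grad=-0.20)    # negative gradient raises the weight
print(w_down, w_up)
```

The sign of the gradient decides the direction of the change, and the learning rate scales its size.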

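Step 3.1: Backpropagate from the output layer to the hidden layer

The weights in this layer are w5, w6, w7, and w8.

For w5:

By applying the chain rule, Equation (A):

$$\frac{\partial E}{\partial w_5} = \frac{\partial E}{\partial y_{1out}} \cdot \frac{\partial y_{1out}}{\partial y_1} \cdot \frac{\partial y_1}{\partial w_5}$$

For the above, we have to find

1] ∂E/∂y1out (1)

$$\frac{\partial E}{\partial y_{1out}} = \frac{\partial}{\partial y_{1out}} \left[ \frac{1}{2}(t_1 - y_{1out})^2 + \frac{1}{2}(t_2 - y_{2out})^2 \right]$$

The second term does not depend on y1out, so we get

$$\frac{\partial E}{\partial y_{1out}} = -(t_1 - y_{1out})$$

Substituting the values:

$$\frac{\partial E}{\partial y_{1out}} = -(0.01 - 0.75136507) = 0.74136507$$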

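2] ∂y1out/∂y1 (2)

From step 1.2, the formula for y1out is

$$y_{1out} = \sigma(y_1)$$

Applying the derivative of the sigmoid, σ'(z) = σ(z)(1 − σ(z)):

$$\frac{\partial y_{1out}}{\partial y_1} = y_{1out}(1 - y_{1out})$$

Substituting y1out in the above equation, we get

$$\frac{\partial y_{1out}}{\partial y_1} = 0.75136507(1 - 0.75136507) = 0.186815601$$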
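One way to convince yourself of the sigmoid derivative is to compare it with a finite-difference estimate. The sketch below does this at the y1 value from Step 1.2; the step size `h` is an arbitrary small number chosen for this check.

```python
import math

def sigmoid(z):
    """Logistic sigmoid: 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

y1 = 1.105905967        # pre-activation of the first output neuron (Step 1.2)
y1out = sigmoid(y1)

# Analytic derivative: sigma(z) * (1 - sigma(z))
analytic = y1out * (1.0 - y1out)

# Central finite difference as an independent check
h = 1e-6
numeric = (sigmoid(y1 + h) - sigmoid(y1 - h)) / (2.0 * h)

print(f"analytic = {analytic:.9f}, numeric = {numeric:.9f}")
```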

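3] ∂y1/∂w5 (3)

From step 1.2, Equation 3:

$$\frac{\partial y_1}{\partial w_5} = \frac{\partial (H_{1out} w_5 + H_{2out} w_6 + b_2)}{\partial w_5}$$

we get

$$\frac{\partial y_1}{\partial w_5} = H_{1out} = 0.593269992$$

Substituting the values of ∂E/∂y1out (1), ∂y1out/∂y1 (2), and ∂y1/∂w5 (3) in Equation (A), we finally get the gradient for w5:

$$\frac{\partial E}{\partial w_5} = (0.74136507)(0.186815601)(0.593269992) = 0.082167041$$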
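The three chain-rule factors for w5 can be multiplied out in code. This is a sketch with the forward-pass values copied in at full precision; the variable names are illustrative.

```python
t1 = 0.01
H1out = 0.593269992     # hidden output from Step 1.1
y1out = 0.75136507      # network output from Step 1.2

# The three factors of Equation (A)
dE_dy1out = -(t1 - y1out)             # (1) derivative of the squared error
dy1out_dy1 = y1out * (1.0 - y1out)    # (2) derivative of the sigmoid
dy1_dw5 = H1out                       # (3) derivative of the weighted sum

dE_dw5 = dE_dy1out * dy1out_dy1 * dy1_dw5
print(f"dE/dw5 = {dE_dw5:.9f}")
```

Using H1out truncated to 0.59326 instead of the full-precision value shifts the result in the sixth decimal place, which is why intermediate values are best kept unrounded.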

Step 3.1.1: Substitute ∂E/∂w5, lr=0.5, and the old weight w5=0.40 in Equation (UPDATE):

$$w_5^{new} = w_5^{old} - lr \cdot \frac{\partial E}{\partial w_5}$$

The updated w5:

$$w_5 = 0.358916$$

Similarly, for w6, w7, and w8 we get the updated weights:

$$w_6 = 0.408666186$$

$$w_7 = 0.511301270$$

$$w_8 = 0.561370121$$
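The same chain rule gives all four output-layer updates. In the sketch below, the first two chain-rule factors are grouped into one term per output neuron (named `delta1` and `delta2` here, a naming choice made for this illustration), since they are shared by the weights feeding that neuron.

```python
lr = 0.5
t1, t2 = 0.01, 0.99
H1out, H2out = 0.593269992, 0.596884378   # hidden outputs (Step 1.1)
y1out, y2out = 0.75136507, 0.772928465    # network outputs (Step 1.2)
w5, w6, w7, w8 = 0.40, 0.45, 0.50, 0.55

# dE/dy_out * dy_out/dy for each output neuron
delta1 = -(t1 - y1out) * y1out * (1.0 - y1out)
delta2 = -(t2 - y2out) * y2out * (1.0 - y2out)

# Each gradient is the neuron's delta times the hidden output feeding the weight
w5_new = w5 - lr * delta1 * H1out
w6_new = w6 - lr * delta1 * H2out
w7_new = w7 - lr * delta2 * H1out
w8_new = w8 - lr * delta2 * H2out

print(f"w5 = {w5_new:.9f}, w6 = {w6_new:.9f}")
print(f"w7 = {w7_new:.9f}, w8 = {w8_new:.9f}")
```

Note that w5 and w6 decrease while w7 and w8 increase: y1out is above its target, and y2out is below its target.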

Step 3.2 Backpropagate from the hidden layer to the input layer

we have w1,w2,w3,w4

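Let E1 and E2 be the errors for y1 and y2. The total error is the same as in Step 2. The hidden outputs do not appear in it directly, but each hidden output feeds both output neurons:

$$E_{tot} = E_1 + E_2 = \frac{1}{2}(t_1 - y_{1out})^2 + \frac{1}{2}(t_2 - y_{2out})^2$$

For w1:

By applying the chain rule, Equation (B):

$$\frac{\partial E}{\partial w_1} = \frac{\partial E}{\partial H_{1out}} \cdot \frac{\partial H_{1out}}{\partial H_1} \cdot \frac{\partial H_1}{\partial w_1}$$

For the above, we have to find

1] ∂E/∂H1out (1)

Because H1out contributes to both y1 and y2, the derivative is the sum of two paths:

$$\frac{\partial E}{\partial H_{1out}} = \frac{\partial E_1}{\partial H_{1out}} + \frac{\partial E_2}{\partial H_{1out}}$$

Each path is expanded with the chain rule, using the original (not yet updated) weights w5=0.40 and w7=0.50:

$$\frac{\partial E_1}{\partial H_{1out}} = \frac{\partial E_1}{\partial y_{1out}} \cdot \frac{\partial y_{1out}}{\partial y_1} \cdot w_5 = (0.74136507)(0.186815601)(0.40) = 0.0553994$$

$$\frac{\partial E_2}{\partial H_{1out}} = \frac{\partial E_2}{\partial y_{2out}} \cdot \frac{\partial y_{2out}}{\partial y_2} \cdot w_7 = (-0.217071535)(0.175510053)(0.50) = -0.0190491$$

Adding the two paths, we get

$$\frac{\partial E}{\partial H_{1out}} = 0.0553994 - 0.0190491 = 0.0363503$$

2] ∂H1out/∂H1 (2)

From step 1.1, we know that

$$H_{1out} = \sigma(H_1)$$

Applying the derivative of the sigmoid, we get

$$\frac{\partial H_{1out}}{\partial H_1} = H_{1out}(1 - H_{1out})$$

By substituting the values for the above equation

$$\frac{\partial H_{1out}}{\partial H_1} = 0.593269992(1 - 0.593269992)$$

we get

$$\frac{\partial H_{1out}}{\partial H_1} = 0.241300709$$

3] ∂H1/∂w1 (3)

From step 1.1, Equation 1 (H1):

$$\frac{\partial H_1}{\partial w_1} = \frac{\partial (x_1 w_1 + x_2 w_2 + b_1)}{\partial w_1} = x_1$$

Substituting the value, we get

$$\frac{\partial H_1}{\partial w_1} = 0.05$$

Substituting the values of ∂E/∂H1out (1), ∂H1out/∂H1 (2), and ∂H1/∂w1 (3) in Equation (B), we finally get the gradient for w1:

$$\frac{\partial E}{\partial w_1} = (0.0363503)(0.241300709)(0.05) = 0.000438568$$

Step 3.2.1: Substitute ∂E/∂w1, lr=0.5, and the old weight w1=0.15 in Equation (UPDATE):

$$w_1^{new} = 0.15 - (0.5)(0.000438568)$$

Updated w1:

$$w_1 = 0.14978$$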
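The w1 update can be sketched in code as follows, assuming the gradient with respect to H1out is accumulated over both output neurons through the original (not yet updated) weights w5 and w7. The variable names are illustrative, and the forward-pass values are copied in rather than recomputed.

```python
lr = 0.5
x1 = 0.05
t1, t2 = 0.01, 0.99
H1out = 0.593269992
y1out, y2out = 0.75136507, 0.772928465
w1, w5, w7 = 0.15, 0.40, 0.50   # original weights, before any update

# Output-layer terms: dE/dy_out * dy_out/dy for each output neuron
delta1 = -(t1 - y1out) * y1out * (1.0 - y1out)
delta2 = -(t2 - y2out) * y2out * (1.0 - y2out)

# (1) H1out reaches the error through y1 (via w5) and y2 (via w7)
dE_dH1out = delta1 * w5 + delta2 * w7
# (2) derivative of the sigmoid at the hidden neuron
dH1out_dH1 = H1out * (1.0 - H1out)
# (3) derivative of the weighted sum with respect to w1
dH1_dw1 = x1

dE_dw1 = dE_dH1out * dH1out_dH1 * dH1_dw1
w1_new = w1 - lr * dE_dw1

print(f"dE/dH1out = {dE_dH1out:.9f}")
print(f"dE/dw1 = {dE_dw1:.9f}, updated w1 = {w1_new:.9f}")
```

The result agrees with the updated w1 of about 0.14978 reported in this step.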

Similarly, calculating for w2, w3, and w4, we finally get

$$w_2 = 0.199561$$

$$w_3 = 0.249751$$

$$w_4 = 0.299502$$
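All four hidden-layer updates follow the same pattern, so they can be computed in a loop. The nested-list layout below (one row per neuron) is an assumption made for this sketch, not notation from the derivation.

```python
lr = 0.5
x = (0.05, 0.10)                      # x1, x2
t = (0.01, 0.99)                      # t1, t2
h_out = (0.593269992, 0.596884378)    # H1out, H2out
y_out = (0.75136507, 0.772928465)     # y1out, y2out

w_hidden = [[0.15, 0.20],   # weights into H1: w1, w2
            [0.25, 0.30]]   # weights into H2: w3, w4
w_output = [[0.40, 0.45],   # weights into y1: w5 (from H1), w6 (from H2)
            [0.50, 0.55]]   # weights into y2: w7 (from H1), w8 (from H2)

# Output-layer terms: dE/dy_out * dy_out/dy for each output neuron
delta_out = [-(t[k] - y_out[k]) * y_out[k] * (1.0 - y_out[k]) for k in range(2)]

new_hidden = []
for j in range(2):
    # Sum the error arriving at hidden neuron j from both outputs (original weights)
    dE_dh = sum(delta_out[k] * w_output[k][j] for k in range(2))
    delta_h = dE_dh * h_out[j] * (1.0 - h_out[j])
    # Gradient for each incoming weight is delta_h times the matching input
    new_hidden.append([w_hidden[j][i] - lr * delta_h * x[i] for i in range(2)])

for row in new_hidden:
    print([round(w, 6) for w in row])
```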

Likewise, the weights are updated iteratively to reduce the error.

Note: The above example is shown for one forward and backward pass.
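To see the effect of repeating the pass, the sketch below runs the same forward and backward steps in a loop. It is an illustration under a few assumptions: the function name `train_step` and the flat weight list are hypothetical, the number of iterations (1000) is arbitrary, and the biases are kept fixed because the worked example updates only the weights.

```python
import math

def sigmoid(z):
    """Logistic sigmoid: 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def train_step(w, x, t, b1, b2, lr):
    """One forward and backward pass. Returns (updated weights, error before the update)."""
    x1, x2 = x
    t1, t2 = t
    # Forward pass; w holds w1..w8 at indices 0..7
    h1 = sigmoid(x1 * w[0] + x2 * w[1] + b1)
    h2 = sigmoid(x1 * w[2] + x2 * w[3] + b1)
    y1 = sigmoid(h1 * w[4] + h2 * w[5] + b2)
    y2 = sigmoid(h1 * w[6] + h2 * w[7] + b2)
    error = 0.5 * (t1 - y1) ** 2 + 0.5 * (t2 - y2) ** 2

    # Backward pass, using the weights from before the update throughout
    d1 = (y1 - t1) * y1 * (1.0 - y1)
    d2 = (y2 - t2) * y2 * (1.0 - y2)
    dh1 = (d1 * w[4] + d2 * w[6]) * h1 * (1.0 - h1)
    dh2 = (d1 * w[5] + d2 * w[7]) * h2 * (1.0 - h2)
    grads = [dh1 * x1, dh1 * x2, dh2 * x1, dh2 * x2,
             d1 * h1, d1 * h2, d2 * h1, d2 * h2]
    return [wi - lr * g for wi, g in zip(w, grads)], error

w = [0.15, 0.20, 0.25, 0.30, 0.40, 0.45, 0.50, 0.55]
errors = []
for _ in range(1000):
    w, err = train_step(w, (0.05, 0.10), (0.01, 0.99), b1=0.35, b2=0.60, lr=0.5)
    errors.append(err)

print(f"error before first update: {errors[0]:.9f}")
print(f"error before last update:  {errors[-1]:.9f}")
```

The first recorded error is the Step 2 value, and the later entries show how far the repeated updates bring it down.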

CONCLUSION

In this blog, we worked through a numerical example of neural network training, covering the mechanics of forward and backward propagation. Manually calculating each step gives a deeper understanding of the inner workings of the network and of how its parameters are optimized. I encourage you to work out the example yourself, since hands-on practice is the best way to solidify your grasp of this topic.

Hope you enjoyed solving the problem !!!

Practice on your own !!!

Stay tuned !!!

Thank you !!!
