Chapter #2: Migrating ML Models From Running Locally To The Cloud

AniBrain Dev


TL;DR: As part of the broader effort to move AniBrain's data pipeline to the cloud, I migrated the machine learning model API from my local machine to a cloud server.

Background

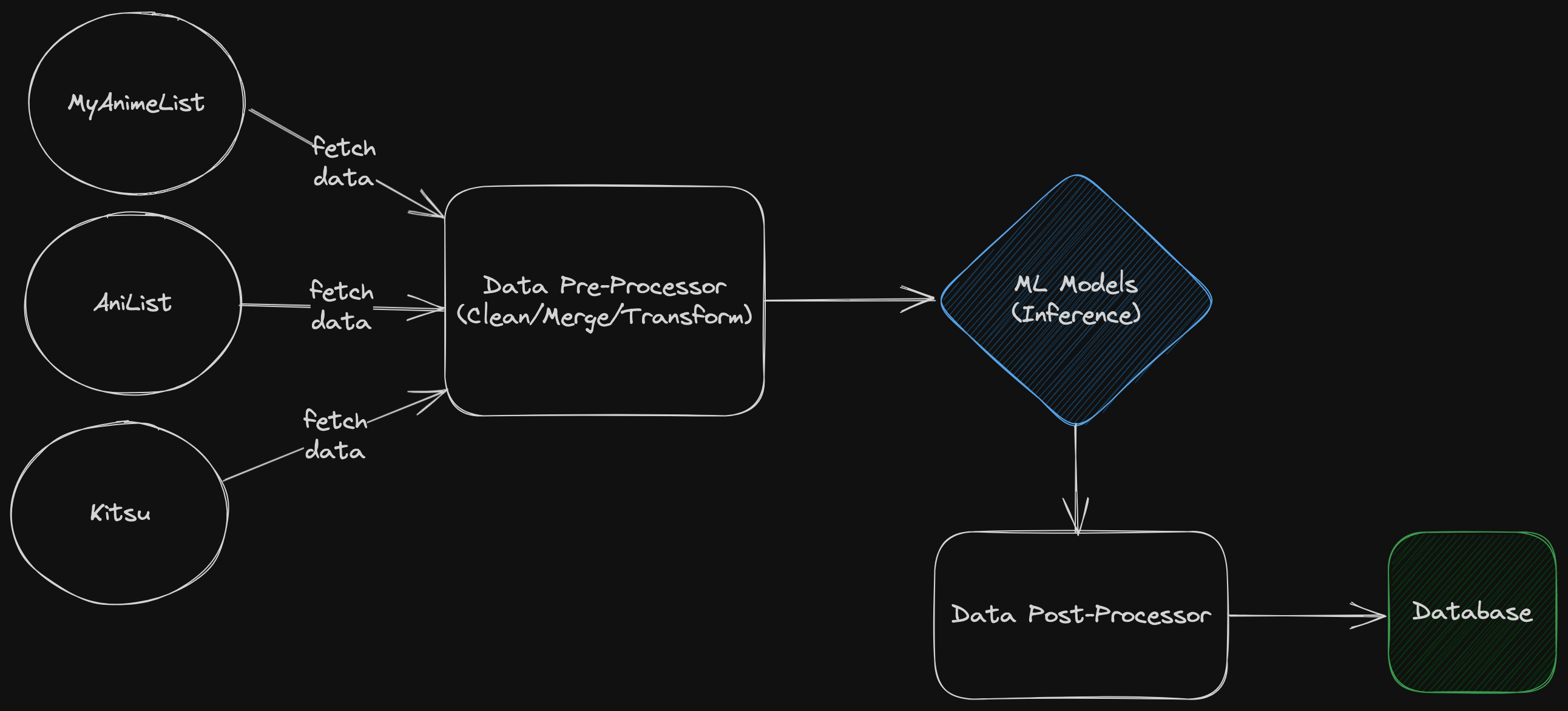

Maintaining AniBrain's database has always been challenging. I currently use pipelines to extract and transform anime/manga data from AniList, Kitsu, and MyAnimeList (MAL). These sources provide the metadata and other properties that the recommendation engine needs. I initially set up an automated process, but changes in the MAL API broke the pipeline, and incorrect data ended up in the database. To address this, I switched to running the pipeline manually against a local dev database and thoroughly testing the data before importing it into production. This approach ensures that everything works as intended and, aside from the extra time it takes, it has been a success. My current focus is reclaiming that time while keeping my confidence in the pipeline, so that I can put more effort into building new features and keeping the database consistently up to date.

Problem

I developed an API to host the ML models required for AniBrain's recommendation engine. Starting this API is simple, but it is an easy step to forget, and forgetting it causes the pipeline to fail: without the API running, the pipeline cannot successfully write to the database.

Here's a high-level view of the current pipeline:

To address this issue, I plan to migrate the ML model inference to the cloud so that the API is available 24/7. The pipeline will then no longer depend on my remembering to start anything locally.
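To make the failure mode concrete, here is a minimal sketch of a preflight check the pipeline could run before touching the database. It is not the actual AniBrain code: the health URL, the function names `model_api_is_up` and `run_pipeline_step`, and the `write_to_db` callable are all hypothetical, and it assumes the API exposes some endpoint that answers with HTTP 200 when it is healthy.

```python
import urllib.request


def model_api_is_up(health_url, timeout=5.0, fetch=urllib.request.urlopen):
    """Return True if the model API answers the (hypothetical) health URL with HTTP 200."""
    try:
        with fetch(health_url, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # URLError, HTTPError, timeouts and refused connections are all OSError subclasses.
        return False


def run_pipeline_step(health_url, write_to_db, fetch=urllib.request.urlopen):
    """Refuse to write to the database unless the model API is reachable."""
    if not model_api_is_up(health_url, fetch=fetch):
        raise RuntimeError(
            f"Model API unreachable at {health_url}; aborting before any writes"
        )
    # write_to_db is a placeholder for whatever the real pipeline does next.
    return write_to_db()
```

Failing fast like this turns a forgotten API into an immediate, obvious error instead of a half-finished run. The `fetch` parameter exists only so the check can be exercised without a network connection.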

Actions Taken

Here's how I resolved the issue:

I created a DigitalOcean Droplet to host the ML models and serve them via an API. I followed the instructions in this guide: https://www.digitalocean.com/community/tutorials/how-to-serve-flask-applications-with-gunicorn-and-nginx-on-ubuntu-20-04

I used Cloudflare for added protection.

I secured the routes behind an API key. This became necessary because the API is now reachable over the internet, whereas before it only ran locally.

I adjusted the Droplet's settings so that it has enough memory for the models.
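The API key step can be sketched roughly as follows. This is an illustration under stated assumptions, not the code running on the Droplet: the header name `X-API-Key`, the environment variable `ANIBRAIN_API_KEY`, and the `recommend` handler are all hypothetical. To keep it runnable without extra dependencies, the handler takes a plain dict of headers; in a Flask app the same check would typically read `request.headers` inside a decorator or a `before_request` hook.

```python
import hmac
import os
from functools import wraps

API_KEY_HEADER = "X-API-Key"  # hypothetical header name


def is_authorized(headers, expected_key):
    """Compare the supplied key to the expected one in constant time."""
    if not expected_key:
        # Never treat an unset or empty server-side key as "allow everyone".
        return False
    supplied = headers.get(API_KEY_HEADER, "")
    return hmac.compare_digest(supplied.encode(), expected_key.encode())


def require_api_key(expected_key):
    """Decorator that rejects calls whose headers lack the correct key."""
    def decorator(handler):
        @wraps(handler)
        def wrapper(headers, *args, **kwargs):
            if not is_authorized(headers, expected_key):
                return {"error": "invalid or missing API key"}, 401
            return handler(headers, *args, **kwargs)
        return wrapper
    return decorator


# The key is read from the environment (hypothetical variable name) so it
# never lives in the source code.
@require_api_key(os.environ.get("ANIBRAIN_API_KEY", ""))
def recommend(headers, title):
    # Placeholder body; the real route would call the ML models.
    return {"title": title, "recommendations": []}, 200
```

Using `hmac.compare_digest` instead of `==` avoids leaking information about the key through response timing, which matters once the route is exposed to the internet.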


Written by

AniBrain Dev

Creator of www.anibrain.ai