ACID Transactions: What’s the Meaning of Isolation Levels for Your Application

Memgraph

ACID transactions are the foundation of reliable databases. Among other things, they preserve data integrity in multi-user scenarios by applying an appropriate isolation level. In this article, we explore the impact isolation levels can have on your application.

Databases and data consistency

It is well known that a database is used to store and retrieve data efficiently. How data is stored and retrieved depends on the type of database and its purpose. To get a better sense of the variety of databases available, we recommend visiting the DB-Engines ranking site, where you will find plenty of options to explore.

Regardless of the data format, data should be stored and retrieved consistently. That means data consistency and integrity should be an absolute priority when designing and building a database engine. Data consistency ensures that data stays correct, up to date, and valid across the many transactions executed in the database system. In general, multiple aspects contribute to data consistency, including data validation, error handling, and concurrency control.

Ensuring data consistency in a database can be challenging, though. To keep data consistent, databases follow the principles of ACID transactions.

ACID transactions

An ACID-compliant database, as the name suggests, operates based on transactions that adhere to specific rules. ACID stands for Atomicity, Consistency, Isolation, and Durability, and each of these properties contributes to creating a more robust and reliable database system.

Atomicity ensures that a transaction is treated as a single, indivisible unit of work: either all the changes made by the transaction are committed, or none of them are. No partial changes can ever occur. Imagine abruptly interrupting a transaction by pulling the plug. With atomicity, you can rest assured that you won’t end up with a transaction whose changes were only partially applied.
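A minimal sketch of the all-or-nothing idea, assuming a toy in-memory store in Python: each transaction works on a private copy, and only a run that finishes publishes its result. The crash is simulated with an exception, and the class and function names are hypothetical. Real databases achieve atomicity with logging and recovery rather than by copying the whole state.

```python
class AtomicStore:
    """Toy in-memory store where each transaction is applied all-or-nothing."""

    def __init__(self, data):
        self.data = dict(data)

    def run(self, operations):
        # Work on a private copy; the committed state is not touched yet.
        pending = dict(self.data)
        for operation in operations:
            operation(pending)  # an exception here abandons `pending`
        # Commit: publish every change at once by swapping in the new state.
        self.data = pending


def debit_alice(state):
    state["alice"] -= 30


def simulated_crash(state):
    raise RuntimeError("power loss")  # stands in for pulling the plug


def credit_bob(state):
    state["bob"] += 30


store = AtomicStore({"alice": 100, "bob": 0})
try:
    store.run([debit_alice, simulated_crash, credit_bob])
except RuntimeError:
    pass
print(store.data)  # {'alice': 100, 'bob': 0}: the debit was never applied

store.run([debit_alice, credit_bob])
print(store.data)  # {'alice': 70, 'bob': 30}
```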

Consistency ensures that the database is in a valid state both before and after a transaction executes, according to specific predefined rules and constraints. For instance, keeping values within predetermined ranges and enforcing unique values are examples of maintaining a consistent, valid data state.
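Consistency rules are typically checked before a transaction's changes become visible, and a violation rejects the transaction as a whole. The sketch below assumes two hypothetical rules that mirror the examples above: a balance range and unique account IDs. `check_constraints` and `try_commit` are illustrative names, not any database's API.

```python
class ConstraintViolation(Exception):
    pass


def check_constraints(accounts):
    # Hypothetical rules: IDs must be unique, balances must stay in [0, 1_000_000].
    ids = [account["id"] for account in accounts]
    if len(ids) != len(set(ids)):
        raise ConstraintViolation("duplicate account id")
    for account in accounts:
        if not 0 <= account["balance"] <= 1_000_000:
            raise ConstraintViolation(f"balance out of range for {account['id']}")


def try_commit(current, proposed):
    """Return (new_state, committed); an invalid state is rejected as a whole."""
    try:
        check_constraints(proposed)
    except ConstraintViolation as err:
        print("rejected:", err)
        return current, False  # keep the previous valid state
    return proposed, True


state = [{"id": "a1", "balance": 50}]
state, ok = try_commit(state, [{"id": "a1", "balance": -20}])
# prints: rejected: balance out of range for a1
state, ok = try_commit(state, state + [{"id": "a1", "balance": 10}])
# prints: rejected: duplicate account id
state, ok = try_commit(state, [{"id": "a1", "balance": 20}])
print(state, ok)  # [{'id': 'a1', 'balance': 20}] True
```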

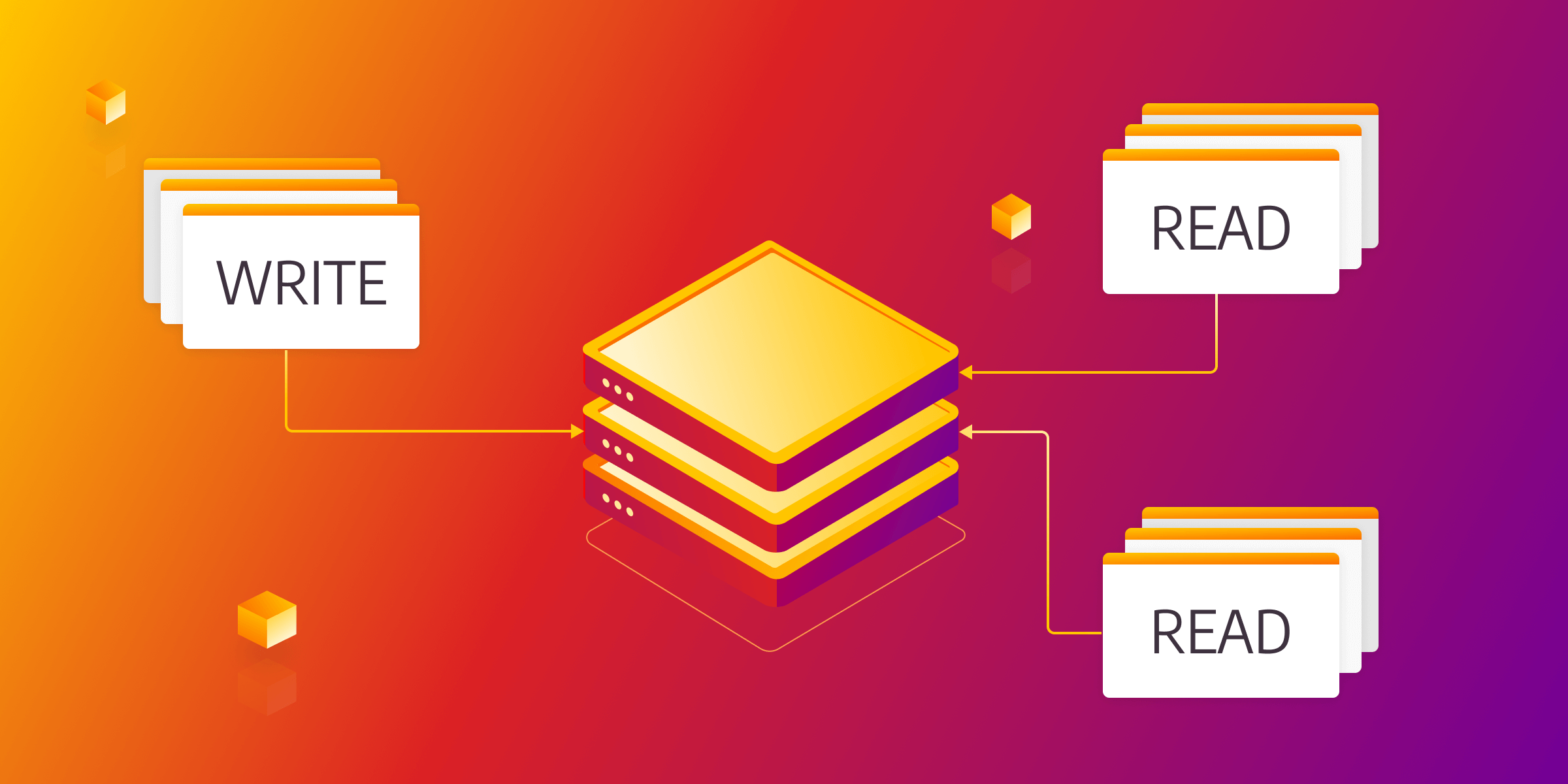

Isolation aims to give concurrent transactions a sense of independence, so that each transaction executes in isolation from the others, even when they run simultaneously. This is particularly important for multi-user databases that handle many different concurrent requests.

Finally, durability guarantees that once a transaction is committed, its changes are permanent and survive any subsequent failures. If you pull the server plug, the database should restore its last committed state from its durability files.
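One common way to provide durability is a write-ahead log: a transaction counts as committed only after its changes are flushed to disk, and after a restart the database replays the log. The Python sketch below assumes a JSON-lines log file with hypothetical `commit` and `recover` helpers. It is a generic illustration, not the file format of any particular database, and real systems also take periodic snapshots so they don't have to replay the full log.

```python
import json
import os
import tempfile


def commit(log_path, changes):
    """Append one committed transaction to the log and force it to disk."""
    with open(log_path, "a", encoding="utf-8") as log:
        log.write(json.dumps(changes) + "\n")
        log.flush()
        os.fsync(log.fileno())  # the commit counts as durable only after this


def recover(log_path):
    """Rebuild the in-memory state by replaying every committed transaction."""
    state = {}
    if not os.path.exists(log_path):
        return state
    with open(log_path, encoding="utf-8") as log:
        for line in log:
            try:
                state.update(json.loads(line))
            except json.JSONDecodeError:
                break  # a torn final record was never acknowledged; ignore it
    return state


log_path = os.path.join(tempfile.mkdtemp(), "wal.log")
commit(log_path, {"alice": 70, "bob": 30})
commit(log_path, {"alice": 60, "carol": 10})
# Simulated restart: the in-memory state is gone, the log on disk is not.
print(recover(log_path))  # {'alice': 60, 'bob': 30, 'carol': 10}
```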

Each property is crucial for different reasons, but for the purposes of this article, let’s focus on the isolation property, which defines database isolation levels.

Database isolation levels in your application

Database isolation levels are important because they determine the degree of consistency and correctness in a multi-user database system. In such a system, transactions may overlap and access the same data concurrently, which leads to conflicts and inconsistencies if not managed correctly. Isolation levels provide a way to control the degree of interaction and visibility between transactions, which helps ensure that concurrent transactions do not interfere with each other and instead produce correct and consistent results.

These days, the goal is to make full use of server cores through parallel computing techniques, and databases are generally expected to handle transactions from many users concurrently. Unfortunately, not everything can run in parallel. Supporting a higher isolation level efficiently has therefore become an increasingly interesting challenge: it offers stronger data consistency, but it must address the downsides associated with stricter isolation, such as increased memory usage, contention, and reduced concurrency.

Choosing the appropriate isolation level for a database system depends on several factors, including the desired degree of consistency, performance requirements, and the specific types of transactions and access patterns in the database system.

One of the more popular research papers on isolation levels and their necessity is A Critique of ANSI SQL Isolation Levels. The paper builds on the isolation levels defined by ANSI SQL and discusses many isolation levels, roughly from the weakest to the strongest:

- Read Uncommitted

- Read Committed

- Cursor Stability

- Oracle Read Consistency

- Snapshot Isolation

- Serializable

Each isolation level not only provides a lower or higher degree of consistency and correctness but also affects performance, for example through locking overhead. Keep in mind that isolation levels depend on the vendor's implementation and can be weaker or stronger than their theoretical definitions. When weighing isolation levels from a correctness standpoint, also consider the issues that may arise at lower isolation levels.

The paper defines several phenomena (or issues), including the following:

- Dirty Write

- Dirty Read

- Non-Repeatable or Fuzzy Read

- Phantom

Remember that these are isolation issues from a single paper published in 1995; real-world scenarios involve a much longer list of possible issues. Let's explore one such phenomenon, the Dirty Read. Here is a brief explanation of Dirty Read as described in the paper:

“Transaction T1 modifies a data item. Another transaction T2 then reads that data item before T1 performs a COMMIT or ROLLBACK. If T1 then performs a ROLLBACK, T2 has read a data item that was never committed and so never really existed.”

If your database runs at the Read Uncommitted isolation level, it will not prevent the dirty read phenomenon described above. The Read Committed isolation level, on the other hand, guarantees that a transaction can only read data that other transactions have already committed, so it never exposes changes that are still uncommitted or in progress. A lock-based implementation of Read Committed achieves this by placing a short read lock on the row or node being read, which cannot be granted while another transaction holds an uncommitted write on it. While this may reduce concurrent execution speed, it ensures a more consistent database state.
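The following Python sketch simulates the dirty read scenario from the quote above. It assumes a toy store with a single in-flight writer (T1). Instead of blocking the reader as a lock-based implementation would, the Read Committed branch simply returns the last committed value, as multiversion implementations do. Either way, T2 never sees the rolled-back value. `Store` is a hypothetical name.

```python
class Store:
    """Toy store with one in-flight writer (T1), for illustration only."""

    def __init__(self, data):
        self.committed = dict(data)
        self.uncommitted = {}  # values written by T1 that are not committed yet

    def write(self, key, value):
        self.uncommitted[key] = value

    def rollback(self):
        self.uncommitted.clear()

    def read(self, key, level):
        if level == "READ UNCOMMITTED" and key in self.uncommitted:
            return self.uncommitted[key]  # may expose a value that never commits
        return self.committed[key]  # only committed data is visible


results = {}
for level in ("READ UNCOMMITTED", "READ COMMITTED"):
    db = Store({"x": 10})
    db.write("x", 99)                      # T1 modifies x but does not commit
    results[level] = db.read("x", level)   # T2 reads x
    db.rollback()                          # T1 rolls back, so 99 never existed
print(results)  # {'READ UNCOMMITTED': 99, 'READ COMMITTED': 10}
```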

Let’s take a look at the second type of anomaly, Non-repeatable or Fuzzy Read, from the paper: “Transaction T1 reads a data item. Another transaction T2 then modifies or deletes that data item and commits. If T1 then attempts to reread the data item, it receives a modified value or discovers that the data item has been deleted.”

Neither Read Uncommitted nor Read Committed prevents this anomaly, because the value T1 reads the second time was legitimately committed by T2. A higher isolation level, such as Snapshot Isolation, does prevent it: every read in T1 sees the database as it was when T1 started. This example highlights why the Read Committed isolation level may fall short for highly concurrent workloads.
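A minimal sketch of the fuzzy read, assuming a toy multiversion store where each committed write gets a logical timestamp. Under Read Committed, each read sees the latest committed version. Under Snapshot Isolation, every read uses the transaction's start timestamp. `MVStore` and `t1_reads_twice` are hypothetical names.

```python
class MVStore:
    """Toy multiversion store: each key keeps (commit_ts, value) versions."""

    def __init__(self, key, value):
        self.clock = 0
        self.versions = {key: [(0, value)]}

    def commit_write(self, key, value):
        self.clock += 1
        self.versions[key].append((self.clock, value))

    def read(self, key, as_of):
        # Return the newest version committed at or before `as_of`.
        for ts, value in reversed(self.versions[key]):
            if ts <= as_of:
                return value
        raise KeyError(key)


def t1_reads_twice(level):
    db = MVStore("x", 10)
    start_ts = db.clock                  # T1 begins
    first = db.read("x", start_ts)
    db.commit_write("x", 20)             # T2 modifies x and commits
    # Read Committed: each read sees the latest committed data.
    # Snapshot Isolation: every read uses T1's start timestamp.
    as_of = db.clock if level == "READ COMMITTED" else start_ts
    second = db.read("x", as_of)
    return first, second


print(t1_reads_twice("READ COMMITTED"))      # (10, 20)
print(t1_reads_twice("SNAPSHOT ISOLATION"))  # (10, 10)
```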

Different isolation levels can significantly affect the behavior and performance of the applications that interact with a database system. As mentioned earlier, choosing the right level depends on the desired consistency, the performance requirements, and the specific transactions and access patterns involved.

To put things into a practical perspective, consider a simple banking application that allows users to transfer funds between accounts. Suppose the application uses the Read Uncommitted isolation level. In that case, a user may be able to see uncommitted changes made by other users, which could lead to incorrect account balances being displayed. On the other hand, if the application uses the Serializable isolation level, each transaction may need to wait for other transactions to complete before executing, which could lead to reduced performance and potentially even deadlock if transactions are blocked waiting for each other.

Different isolation levels also affect the performance and scalability of database systems in different ways. For example, Read Committed may allow higher concurrency and throughput than Serializable. Still, depending on the implementation, it may lead to conflicts and transaction rollbacks, for example when optimistic locking is used. Because implementations vary between databases, each isolation level should be examined in the context of the specific database. On top of that, databases provide different means of handling concurrency issues in transactions.
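Optimistic locking is commonly implemented by storing a version number with each row and rejecting a write if the version has changed since the row was read. The following is a minimal, database-agnostic Python sketch of that pattern. The `Row` class and `update` function are hypothetical and do not reflect how any particular database implements it.

```python
class VersionConflict(Exception):
    pass


class Row:
    def __init__(self, value):
        self.value = value
        self.version = 0


def update(row, expected_version, new_value):
    """Optimistic write: succeed only if nobody changed the row since we read it."""
    if row.version != expected_version:
        raise VersionConflict(f"expected v{expected_version}, found v{row.version}")
    row.value = new_value
    row.version += 1


row = Row(100)
# Two transactions read the same version without taking any locks.
v_a, val_a = row.version, row.value
v_b, val_b = row.version, row.value
update(row, v_a, val_a - 30)        # first writer wins
try:
    update(row, v_b, val_b - 50)    # second writer detects the conflict
except VersionConflict as err:
    print("rolled back:", err)      # rolled back: expected v0, found v1
print(row.value, row.version)       # 70 1
```

The rolled-back transaction would typically re-read the row and retry; that retry loop is left to the application.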

Responsibility for handling the concurrency control

When it comes to managing concurrency issues in transactions, one research paper argues that the concurrency control mechanisms in existing databases place excessive responsibility on developers to maintain correctness. The paper proposes a fresh approach that shifts this responsibility toward the database system itself.

The authors of the research paper emphasize the need for more than just traditional concurrency control mechanisms, such as locks, to ensure correctness in modern database systems. Contemporary databases often involve complex transactions with multiple access patterns and dependencies. As a result, developers must write complex application-level logic to ensure correctness, which introduces the likelihood of errors and becomes difficult to maintain over time.

To address these issues, the authors propose a new approach in which the database system takes a more active role in concurrency control. Specifically, they propose a framework that allows the database system to analyze the access patterns and dependencies of transactions and automatically generate a concurrency control mechanism customized to the specific workload. This would relieve developers of writing complex application-level logic to ensure correctness, while still allowing for high performance.

To be more specific, the paper describes a particular class of bugs called Read followed by a relevant Write. Within it, the authors define a sub-class in which a read is followed by an update of the same data item. This sub-class has two cases, both grouped under the name RW1:

```
// RW1 Case 1: Classic lost update.
Read(a); Check a>=1; b=a-1; Update(a)=b;

// RW1 Case 2: No update is lost, but the final value of "a" may become negative.
Read(a); Check a>=1; Update(a)=a-1;
```
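To make the two RW1 cases concrete, the following sketch replays one problematic interleaving of two transactions step by step in plain Python. It assumes a store that offers no protection beyond atomic single writes, roughly what an application gets at a weak isolation level. The function names are hypothetical.

```python
def case1_lost_update():
    a = 2                     # shared value; two "decrement by 1" transactions
    # Both transactions read a before either one writes it.
    read_t1 = a
    read_t2 = a
    if read_t1 >= 1:
        a = read_t1 - 1       # T1 writes b = 1
    if read_t2 >= 1:
        a = read_t2 - 1       # T2 also writes 1, so T1's decrement is lost
    return a


def case2_negative():
    a = 1
    # Both checks run before either update is applied.
    ok_t1 = a >= 1
    ok_t2 = a >= 1
    if ok_t1:
        a = a - 1             # atomic decrement on the current value: 0
    if ok_t2:
        a = a - 1             # atomic decrement again: -1, no update lost
    return a


print(case1_lost_update())  # 1, although two decrements "succeeded"
print(case2_negative())     # -1, although both checks passed
```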

An interesting data point is that Snapshot Isolation could solve all RW1 Case 1 issues, and that RW1 Case 2 issues account for almost half of all the problems studied (42 of 94). Many issues happen because developers are unaware of the implications of lowering the isolation level, or because the database does not support higher isolation levels such as Snapshot Isolation.
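Why does Snapshot Isolation fix Case 1? Snapshot Isolation as defined in A Critique of ANSI SQL Isolation Levels uses a first-committer-wins rule: if two concurrent transactions write the same item, the one that commits second is aborted and has to retry. Below is a minimal single-item sketch of that rule, assuming logical timestamps. `SnapshotStore` and `WriteConflict` are hypothetical names.

```python
class WriteConflict(Exception):
    pass


class SnapshotStore:
    """Single-item store with Snapshot Isolation's first-committer-wins rule."""

    def __init__(self, value):
        self.value = value
        self.clock = 0          # logical time, advanced on every commit
        self.last_commit = 0    # when `value` was last written

    def begin(self):
        # A transaction remembers its start time and sees a snapshot of the value.
        return {"start": self.clock, "snapshot": self.value}

    def commit(self, txn, new_value):
        # Abort if another transaction committed a write after this one started.
        if self.last_commit > txn["start"]:
            raise WriteConflict("concurrent update detected; retry the transaction")
        self.clock += 1
        self.last_commit = self.clock
        self.value = new_value


db = SnapshotStore(2)
t1, t2 = db.begin(), db.begin()           # both see a = 2
if t1["snapshot"] >= 1:
    db.commit(t1, t1["snapshot"] - 1)     # T1 commits a = 1
try:
    db.commit(t2, t2["snapshot"] - 1)     # T2 would overwrite with 1 again
except WriteConflict:
    t2 = db.begin()                       # retry from a fresh snapshot (a = 1)
    if t2["snapshot"] >= 1:
        db.commit(t2, t2["snapshot"] - 1)
print(db.value)  # 0: both decrements are applied
```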

One might think that the highest possible isolation level (called Serializable) would solve all the issues. But that's not the case. To quote the authors: "There are problems that have to be handled correctly at the application level. For example, exceptions caused by duplicate inserts or deletes must be handled properly (Section 3.2). Developers should not assume that a strong isolation level is a panacea that addresses all concurrency-related issues." For example, inappropriate error and exception handling is the root cause of 10 of the issues in the paper.
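As an illustration of handling such an exception at the application level, the following sketch uses Python's built-in `sqlite3` module to make a duplicate insert harmless. SQLite is a stand-in chosen only because it ships with Python; this says nothing about how any other database reports the error. The `users` table and `register_user` function are hypothetical.

```python
import sqlite3


def register_user(conn, email):
    """Insert a user; treat a duplicate as 'already registered' instead of crashing."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.execute("INSERT INTO users (email) VALUES (?)", (email,))
        return "created"
    except sqlite3.IntegrityError:
        # A concurrent request (or a client retry) already inserted this row.
        return "already exists"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (email TEXT PRIMARY KEY)")
print(register_user(conn, "ada@example.com"))  # created
print(register_user(conn, "ada@example.com"))  # already exists
```

Whether a duplicate should count as success or as an error is an application decision; the point is that it has to be handled explicitly at any isolation level.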

Proposal of what ACID systems should do: Snapshot Isolation

When using a low isolation level, developers often find themselves investing time in catching and resolving non-critical bugs, which may not directly impact the business. On the other hand, even the most robust isolation level cannot completely eliminate all potential issues while still maintaining optimal performance.

All things considered, there is an argument that using Snapshot Isolation as a default in database systems can provide developers with certain guarantees out of the box and reduce the overhead of implementing custom concurrency control mechanisms. Specifically, Snapshot Isolation guarantees that each transaction sees a consistent snapshot of the database as it existed at the start of the transaction, regardless of other concurrent transactions that may be modifying the same data. As explained in the previous section, the majority of the application issues could be avoided by just using the Snapshot Isolation level.

Again, it's important to emphasize that Snapshot Isolation is not always sufficient to ensure correctness, especially with complex transactional dependencies and access patterns. For example, Snapshot Isolation still permits write-skew anomalies, where two concurrent transactions read overlapping data and then update different items based on what they read. Furthermore, using Snapshot Isolation as the default isolation level may not be appropriate for all database workloads and may result in reduced performance or scalability for certain types of transactions.
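Write skew is easy to miss because each transaction is correct on its own. A commonly used example: two doctors are on call, the rule is that at least one must stay on call, and each doctor's transaction checks the rule and then takes only themselves off call. Because the two transactions write different rows, first-committer-wins finds no conflict. The sketch below assumes a toy multi-key Snapshot Isolation store; all names are hypothetical.

```python
class WriteConflict(Exception):
    pass


class Store:
    """Toy SI store: snapshot reads, first-committer-wins on written keys only."""

    def __init__(self, data):
        self.data = dict(data)
        self.clock = 0
        self.last_write = {key: 0 for key in data}  # key -> commit time of last write

    def begin(self):
        return {"start": self.clock, "snapshot": dict(self.data), "writes": {}}

    def commit(self, txn):
        # Only keys this transaction wrote are checked for conflicts.
        for key in txn["writes"]:
            if self.last_write[key] > txn["start"]:
                raise WriteConflict(f"write-write conflict on {key}")
        self.clock += 1
        for key, value in txn["writes"].items():
            self.data[key] = value
            self.last_write[key] = self.clock


def go_off_call(txn, doctor):
    on_call = sum(txn["snapshot"].values())  # reads BOTH rows from the snapshot
    if on_call >= 2:
        txn["writes"][doctor] = False         # writes only this doctor's row


# Rule the application wants to keep: at least one doctor stays on call.
db = Store({"alice": True, "bob": True})
t1, t2 = db.begin(), db.begin()
go_off_call(t1, "alice")
go_off_call(t2, "bob")
db.commit(t1)
db.commit(t2)   # no conflict: the write sets {alice} and {bob} are disjoint
print(db.data)  # {'alice': False, 'bob': False}: the rule is broken
```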

Here are some common trade-offs involved in choosing an isolation level:

- Execution time (latency vs. throughput)

- Scalability

- Memory usage

- Development and SRE time spent on hunting and fixing application bugs caused by a weak isolation level

When choosing an isolation level for a specific application, it is crucial to evaluate its requirements carefully. Still, an essential question remains: what should the sensible default be for the majority of developers? A good default isolation level matters because it simplifies the development process and provides a solid foundation that works well for most developers.

So what does Memgraph support?

Memgraph's default out-of-the-box isolation level is Snapshot Isolation, which provides a high degree of data consistency and integrity. Memgraph also supports the lower Read Committed and Read Uncommitted isolation levels, but they offer weaker consistency guarantees. You should not drop to the lowest possible level just for some performance benefit; that decision should be carefully considered. Hence, we opted for Snapshot Isolation as the default.

On top of this, Memgraph has multiversion concurrency control (MVCC) built in, which is why its Snapshot Isolation performance is best-in-class, but more on that in future blog posts. Beyond isolation levels, for analytical workloads where ACID transactions are less important, Memgraph offers an analytical mode. It does not guarantee data consistency, but it provides lower memory usage and even faster performance.

