Generative AI Models:

Sahil Kaushik


Introduction:

Generative Artificial Intelligence (AI) models have revolutionized the field of machine learning by enabling computers to generate new content, ranging from text and images to music and video. These models have opened up exciting possibilities in various domains, including creative arts, content generation, and problem-solving. In this article, we will delve into the world of generative AI models, exploring their types and their applications.

Types of Generative AI Models:

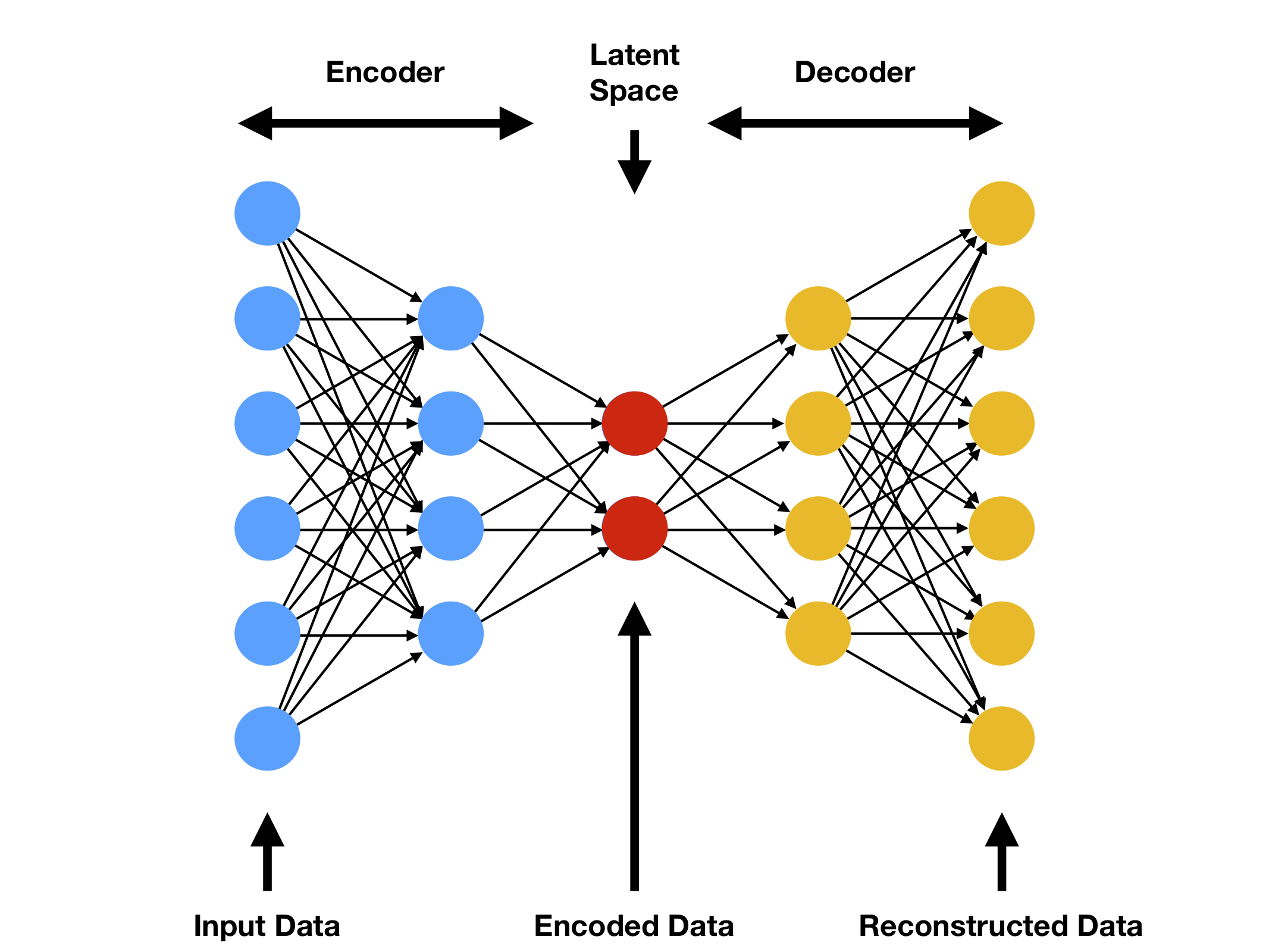

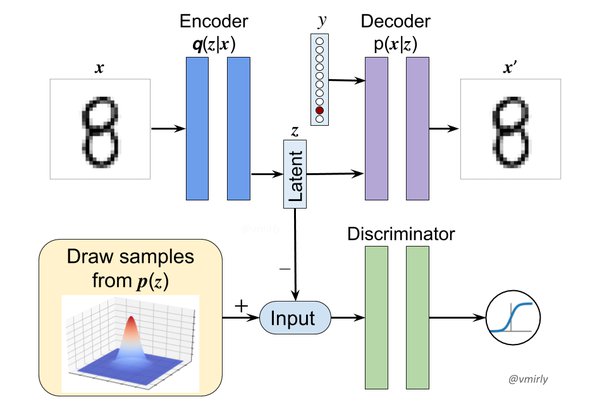

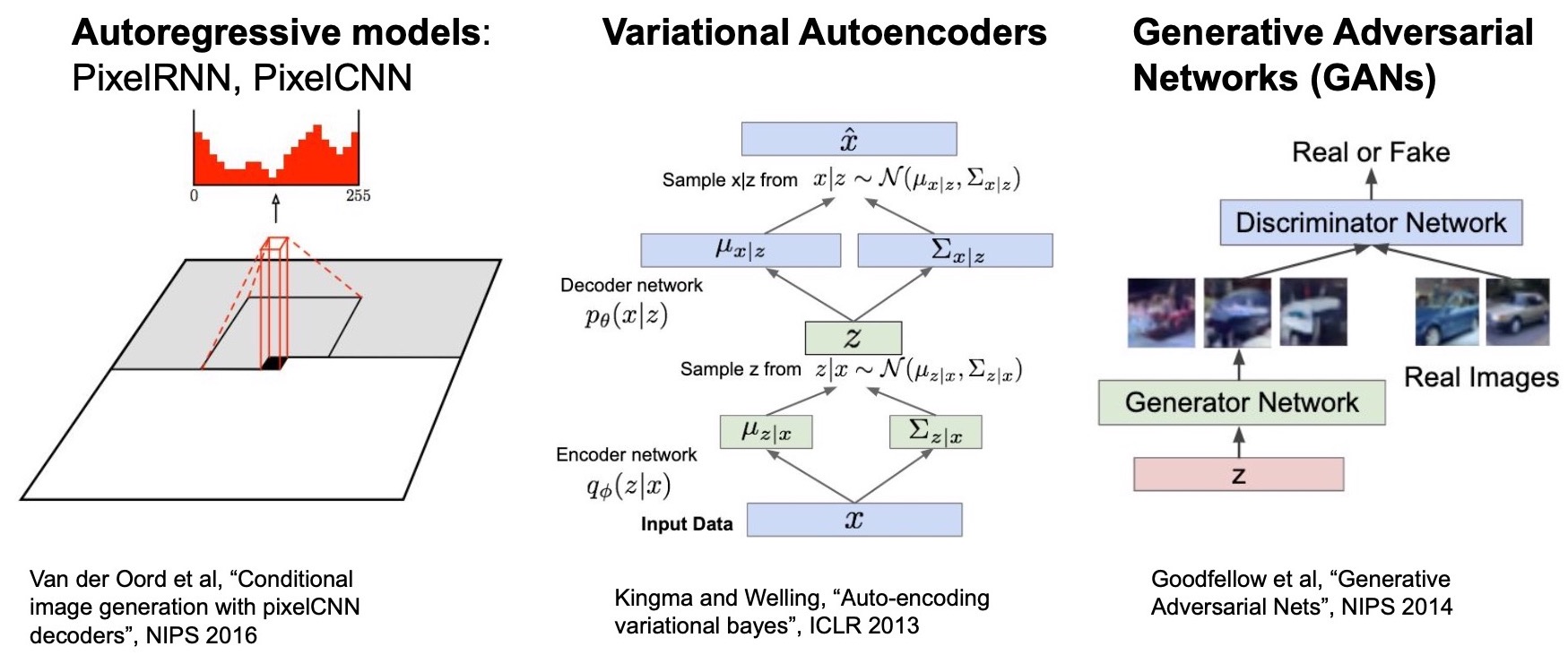

- Variational Autoencoders (VAEs): Variational Autoencoders are generative models that use unsupervised learning to approximate the underlying distribution of the training data. A VAE has two components: an encoder and a decoder. The encoder maps each input to a distribution over a lower-dimensional latent space, and the decoder reconstructs data from points in that space. To generate new data, a VAE samples a point from the latent space and passes it through the decoder, which allows for diverse outputs.

Applications: VAEs find applications in image synthesis, anomaly detection, and data generation tasks. They have been used to create realistic images, generate new variations of existing images, and even create interactive artwork.
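The encode, sample, and decode steps described for VAEs can be sketched in a few lines. This is a minimal illustration rather than a trained model: the `encode` and `decode` functions are hypothetical stand-ins with hand-picked arithmetic, and the Gaussian latent distribution with a standard normal prior is an assumption that the article does not state, although it is the common choice.

```python
import math
import random

def encode(x):
    """Hypothetical encoder: returns the mean and log-variance of q(z | x)."""
    mean = [0.5 * (x[0] + x[1]), 0.5 * (x[2] + x[3])]
    log_var = [-1.0, -1.0]  # fixed here; a trained encoder would predict this
    return mean, log_var

def sample_latent(mean, log_var, rng):
    """Draw z = mean + sigma * eps with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mean, log_var)]

def decode(z):
    """Hypothetical decoder: maps a 2-D latent vector back to 4-D data space."""
    return [z[0], z[0], z[1], z[1]]

rng = random.Random(0)
x = [1.0, 3.0, -2.0, 0.0]

# Reconstruction path: encode, sample, decode.
mean, log_var = encode(x)
reconstruction = decode(sample_latent(mean, log_var, rng))

# Generation path: skip the encoder and sample the assumed prior N(0, I).
new_sample = decode(sample_latent([0.0, 0.0], [0.0, 0.0], rng))
```

Because generation only needs the decoder and a random latent vector, every new draw from the prior yields a different output, which is where the diversity mentioned above comes from.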

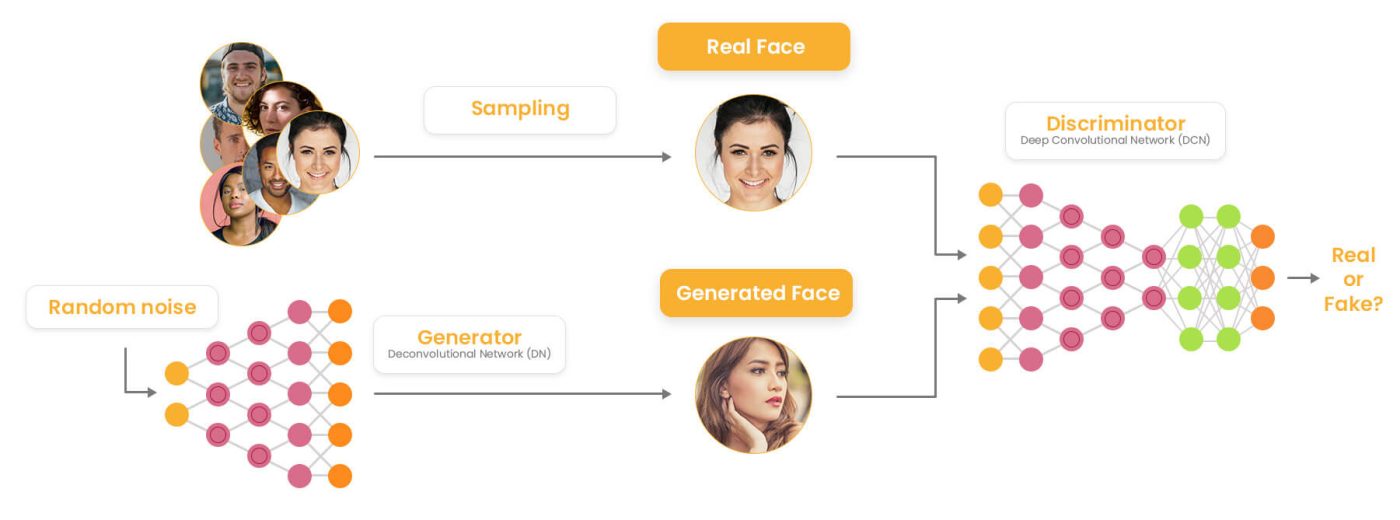

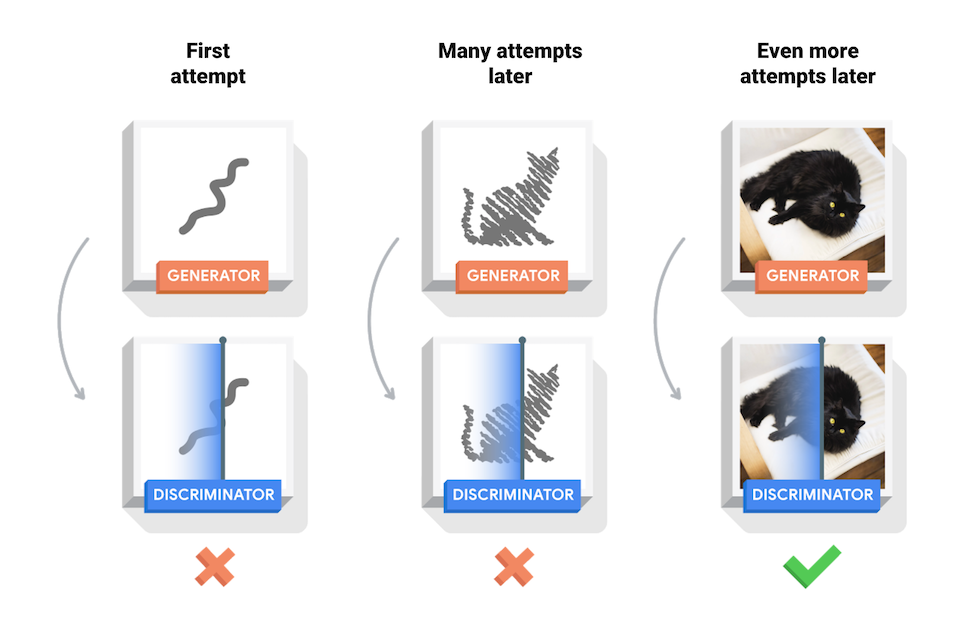

- Generative Adversarial Networks (GANs): Generative Adversarial Networks are a powerful class of generative models built on a game-theoretic framework. A GAN has two main components: a generator and a discriminator. The generator produces new samples from random noise, while the discriminator tries to distinguish real samples from generated ones. Because the two networks are trained against each other, the generator learns to produce increasingly realistic data.

Applications: GANs have gained popularity in image synthesis, video generation, and style transfer. They have been used to create photorealistic images, generate deepfake videos, and enhance low-resolution images.
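The adversarial game between generator and discriminator can be made concrete by writing out the two losses. The sketch below assumes the discriminator outputs a probability that a sample is real, and it uses the non-saturating generator loss, which is one common formulation and not necessarily the one the article has in mind. The score values are made up for illustration.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: real samples should score near 1, fakes near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator is fooled."""
    return -math.log(d_fake)

# Hypothetical discriminator outputs (probability that a sample is real).
early = {"d_real": 0.9, "d_fake": 0.1}  # fakes are easy to spot
later = {"d_real": 0.6, "d_fake": 0.4}  # fakes are becoming convincing

for name, scores in (("early", early), ("later", later)):
    print(name,
          round(discriminator_loss(**scores), 3),
          round(generator_loss(scores["d_fake"]), 3))
```

As the generator improves, its loss falls while the discriminator's loss rises, which is the tension that drives training.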

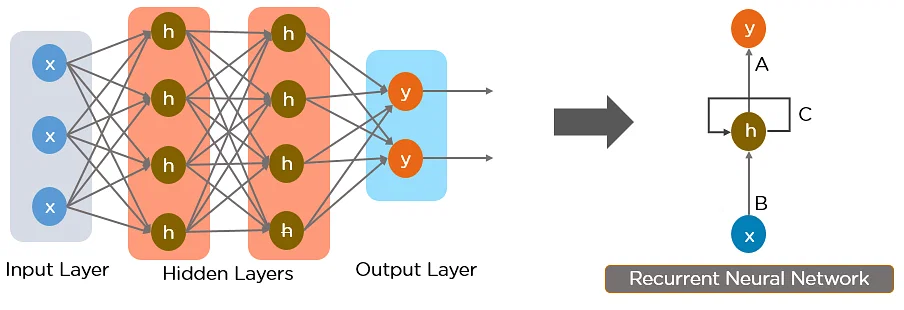

- Recurrent Neural Networks (RNNs): Recurrent Neural Networks are a neural network architecture for sequential data that can be used generatively, producing a sequence one element at a time. RNNs use feedback connections that carry a hidden state from one time step to the next, allowing them to capture dependencies across time steps. They can process input sequences of varying lengths and generate new sequences based on learned patterns.

Applications: RNNs are widely used in natural language processing tasks such as text generation, machine translation, and speech synthesis. They have been employed to generate coherent text, compose music, and even create chatbots.
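The feedback connection in an RNN amounts to reusing the previous hidden state at every time step. The following sketch shows a basic (Elman-style) recurrent update in plain Python. The weights `W_X`, `W_H`, and `B` are arbitrary illustrative values rather than learned parameters, and the tanh activation is an assumption.

```python
import math

def rnn_step(x, h, w_x, w_h, b):
    """One time step: h_new = tanh(W_x x + W_h h + b)."""
    return [
        math.tanh(
            sum(w_x[i][j] * x[j] for j in range(len(x)))
            + sum(w_h[i][k] * h[k] for k in range(len(h)))
            + b[i]
        )
        for i in range(len(b))
    ]

def run_rnn(sequence, w_x, w_h, b):
    """Fold a sequence of any length into a final hidden state."""
    h = [0.0] * len(b)
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
    return h

# Hypothetical parameters: 1 input feature, 2 hidden units.
W_X = [[0.5], [-0.3]]
W_H = [[0.1, 0.2], [0.0, 0.4]]
B = [0.0, 0.1]

h_a = run_rnn([[1.0], [0.0]], W_X, W_H, B)
h_b = run_rnn([[0.0], [1.0]], W_X, W_H, B)         # same items, different order
h_long = run_rnn([[1.0], [0.0], [1.0]], W_X, W_H, B)  # a longer sequence also works
```

The same inputs in a different order produce a different final state, which is what lets the network model order-dependent patterns.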

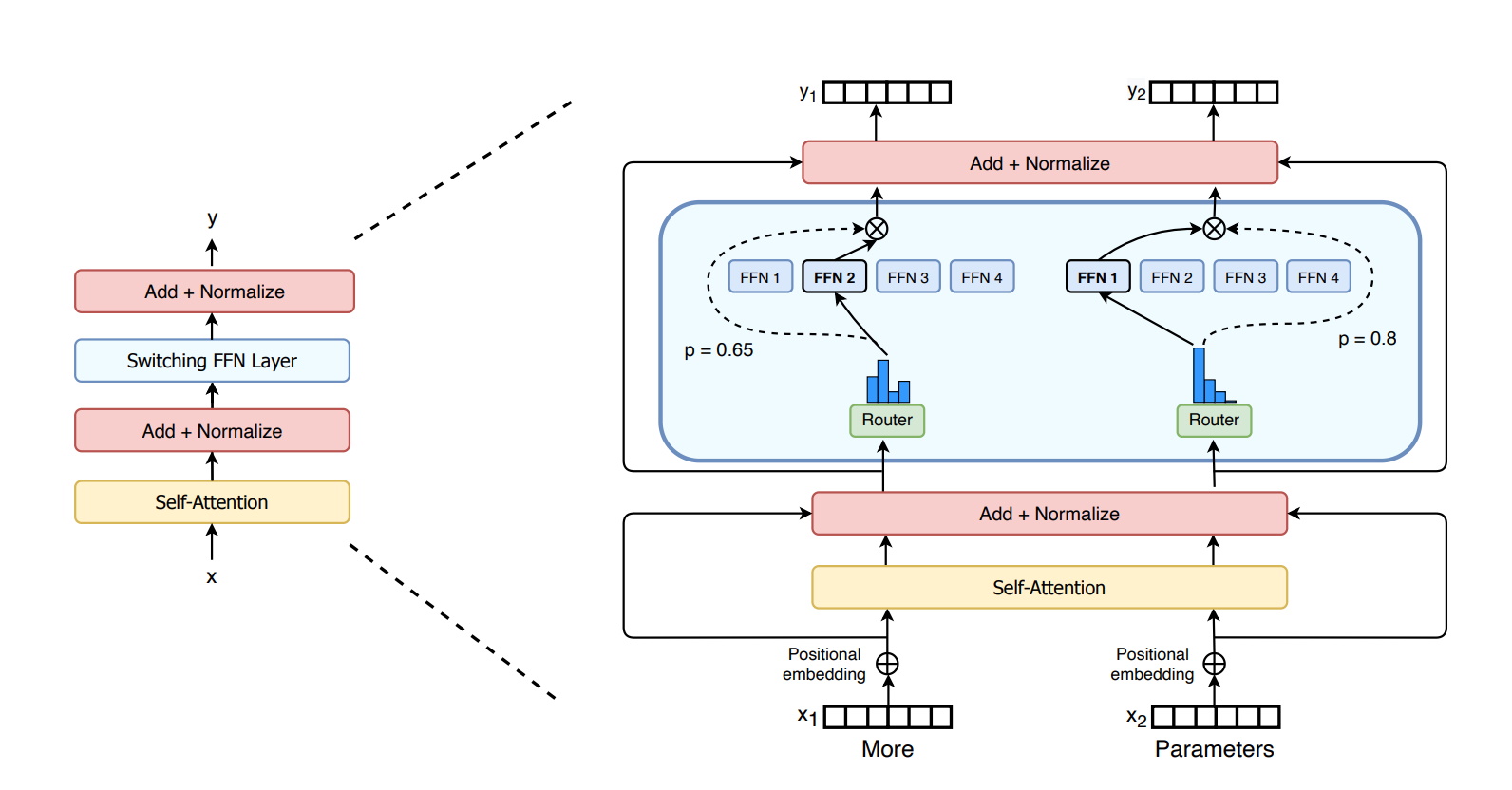

- Transformers: Transformers have gained significant attention in recent years for their ability to model sequential and non-sequential data efficiently. They utilize self-attention mechanisms to capture relationships between different parts of the input. Transformers have been successful in various generative tasks, particularly in natural language processing.

Applications: Transformers have been used for machine translation, text summarization, question answering, and dialogue generation. They have demonstrated impressive capabilities in generating high-quality text and understanding context.
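Self-attention, the mechanism Transformers use to relate different parts of the input, can be sketched as scaled dot-product attention. To keep the example short, the token vectors serve directly as queries, keys, and values. A real Transformer would first apply learned projection matrices, which are omitted here as a simplifying assumption.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention; returns (outputs, attention weights)."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Each output is a weighted average of all value vectors.
        outputs.append([sum(w_j * v[c] for w_j, v in zip(w, values))
                        for c in range(len(values[0]))])
    return outputs, weights

# Three toy token embeddings (illustrative values only).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to 1 and shows how strongly one token attends to every other token, regardless of how far apart they are in the sequence.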

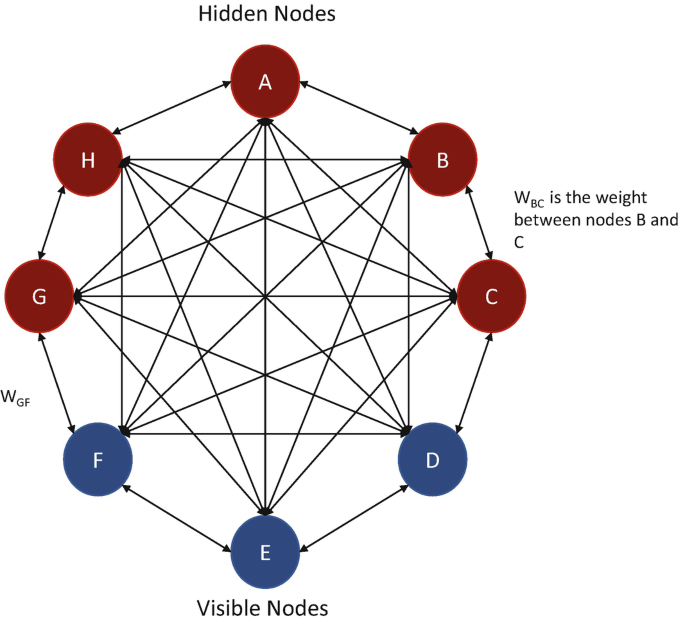

- Boltzmann Machines: Boltzmann Machines are stochastic generative models inspired by statistical physics. They consist of interconnected binary units whose weighted connections model the interactions between variables. Training typically relies on Markov Chain Monte Carlo methods such as Gibbs sampling, both to estimate the statistics needed for weight updates and to draw samples from the learned distribution.

Applications: Boltzmann Machines have been used in recommender systems, feature learning, and collaborative filtering. They have been applied to generate personalized recommendations and discover patterns in large datasets.
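Sampling from a Boltzmann Machine with Gibbs sampling, one Markov Chain Monte Carlo method, can be sketched as follows. Each binary unit is switched on with a probability given by the sigmoid of its bias plus the weighted states of the other units. The weights and biases below are hypothetical values chosen for illustration, not parameters learned from data.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def gibbs_sweep(state, weights, biases, rng):
    """Resample every unit once, conditioned on the current state of the others."""
    for i in range(len(state)):
        activation = biases[i] + sum(
            weights[i][j] * state[j] for j in range(len(state)) if j != i
        )
        state[i] = 1 if rng.random() < sigmoid(activation) else 0
    return state

# Hypothetical 3-unit machine with symmetric weights.
# Units 0 and 1 tend to agree; unit 2 tends to disagree with unit 0.
weights = [[0.0, 2.0, -2.0],
           [2.0, 0.0, 0.0],
           [-2.0, 0.0, 0.0]]
biases = [0.0, 0.0, 0.0]

rng = random.Random(0)
state = [0, 0, 0]
samples = []
for _ in range(200):
    state = gibbs_sweep(state, weights, biases, rng)
    samples.append(tuple(state))
```

After enough sweeps, the collected `samples` approximate draws from the distribution defined by the weights and biases.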

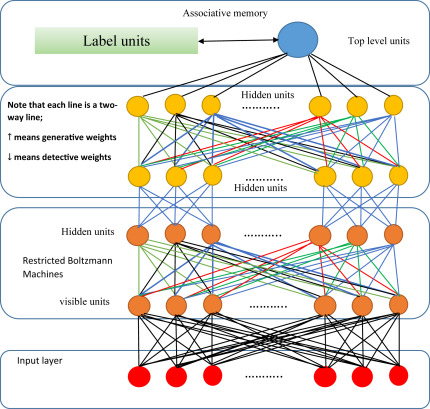

- Deep Belief Networks (DBNs): Deep Belief Networks are hierarchical generative models that combine the power of Boltzmann Machines and deep neural networks. They consist of multiple layers of restricted Boltzmann machines (RBMs), where each layer learns to represent increasingly complex features. DBNs can be trained layer by layer and then fine-tuned using backpropagation.

Applications: DBNs have been applied to image recognition, speech recognition, and natural language processing tasks. They have been used to generate realistic images, classify objects in images, and perform automatic speech recognition.

- Adversarial Autoencoders (AAEs): Adversarial Autoencoders combine the concepts of autoencoders and GANs to generate high-quality data. AAEs use an encoder-decoder architecture similar to VAEs but introduce an adversarial training process. They aim to match the distribution of the latent space to a predefined prior distribution, encouraging diverse and realistic outputs.

Applications: AAEs have been used in image generation, data augmentation, and unsupervised representation learning. They have been employed to generate new images from existing ones, learn disentangled representations, and enhance data diversity for training machine learning models.

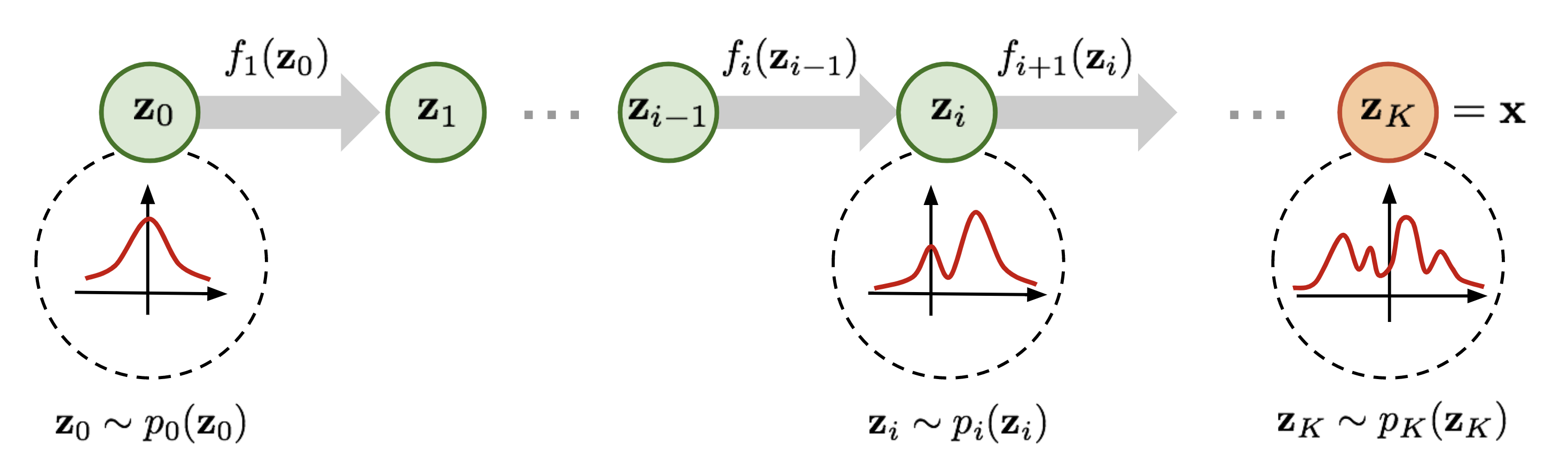

- Flow-based Models: Flow-based models are generative models that directly model the probability density function of the data. They transform a simple base distribution (e.g., Gaussian) into a complex distribution that matches the data distribution. Because every transformation is invertible, the density of a data point can be computed exactly with the change-of-variables formula, which allows both efficient sampling and exact likelihood evaluation.

Applications: Flow-based models have been applied to image synthesis, density estimation, and anomaly detection. They have been used to generate high-resolution images, estimate the probability distribution of data, and identify anomalies in complex datasets.
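The link between an invertible transformation and an exact likelihood can be seen in the simplest possible flow, a one-dimensional affine map applied to a standard Gaussian. The `scale` and `shift` parameters are illustrative assumptions. Practical flow-based models stack many learned invertible layers, but each layer follows the same pattern.

```python
import math

def forward(z, scale, shift):
    """Sampling direction: base variable z to data variable x."""
    return scale * z + shift

def inverse(x, scale, shift):
    """Likelihood direction: data variable x back to base variable z."""
    return (x - shift) / scale

def log_prob(x, scale, shift):
    """Change of variables: log p(x) = log N(z; 0, 1) - log|scale|."""
    z = inverse(x, scale, shift)
    base_log_prob = -0.5 * (z * z + math.log(2.0 * math.pi))
    return base_log_prob - math.log(abs(scale))

scale, shift = 2.0, 1.0
x = forward(0.3, scale, shift)          # sample: push a base draw through the flow
log_density = log_prob(x, scale, shift)  # exact log-likelihood of that sample
```

The `- log|scale|` term is the log-determinant of the Jacobian. It corrects for how much the transformation stretches or compresses space.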

- PixelRNN and PixelCNN: PixelRNN and PixelCNN are generative models that focus on generating realistic images pixel by pixel. These models treat image generation as a sequential process, where each pixel is generated conditioned on previously generated pixels. PixelRNN uses recurrent neural networks to model the pixel dependencies, while PixelCNN employs convolutional neural networks.

Applications: PixelRNN and PixelCNN have been used for image generation, image completion, and image inpainting. They have been applied to generate high-resolution images, fill in missing parts of images, and restore damaged images.
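Pixel-by-pixel generation can be sketched as a loop over the image in raster order, where each pixel is sampled from a distribution that depends only on pixels generated so far. In the sketch below, `toy_conditional` is a hypothetical hand-written rule standing in for the trained recurrent or convolutional network, and the image is binary to keep the example small.

```python
import random

def toy_conditional(image, row, col):
    """Hypothetical stand-in for a trained network: P(pixel = 1 | earlier pixels)."""
    left = image[row][col - 1] if col > 0 else 0
    up = image[row - 1][col] if row > 0 else 0
    return 0.1 + 0.4 * left + 0.4 * up

def sample_image(height, width, rng):
    """Generate a binary image one pixel at a time, top-left to bottom-right."""
    image = [[0] * width for _ in range(height)]
    for row in range(height):
        for col in range(width):
            p_on = toy_conditional(image, row, col)
            image[row][col] = 1 if rng.random() < p_on else 0
    return image

image = sample_image(4, 4, random.Random(0))
for row in image:
    print("".join("#" if pixel else "." for pixel in row))
```

The same loop supports image completion: pixels that are already known are kept fixed, and only the missing ones are sampled.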

- Deep Convolutional Generative Adversarial Networks (DCGANs): DCGANs are an extension of the GAN architecture specifically designed for image generation. DCGANs utilize deep convolutional neural networks in both the generator and discriminator networks. The generator network learns to transform random noise into realistic images, while the discriminator network learns to distinguish between real and fake images.

Applications: DCGANs have been extensively used for image synthesis and data augmentation, and their convolutional design has been adapted by later models for image-to-image translation. They have been employed to generate photorealistic images, generate new variations of existing images, and, in those adapted forms, translate images from one domain to another (e.g., day to night).
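One way to see how a DCGAN generator turns a small feature map into a full image is to track the spatial size through its upsampling layers. The sketch below uses the standard output-size formula for a transposed convolution. The kernel size of 4, stride of 2, padding of 1, and the 4x4 starting map are a commonly used configuration and are assumptions here, not details given in the article.

```python
def transposed_conv_output_size(size, kernel=4, stride=2, padding=1):
    """Output size of a transposed convolution (no dilation or output padding)."""
    return (size - 1) * stride - 2 * padding + kernel

# Start from a 4x4 feature map and apply four upsampling layers.
sizes = [4]
for _ in range(4):
    sizes.append(transposed_conv_output_size(sizes[-1]))

print(sizes)  # [4, 8, 16, 32, 64]
```

With these settings each layer doubles the resolution, so four layers take a 4x4 map to a 64x64 image.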

Conclusion:

Generative AI models have emerged as powerful tools for content creation and data generation. From VAEs and GANs to RNNs and Transformers, each type of model brings its unique strengths to the table. These models have found applications in diverse fields, including art, entertainment, and problem-solving. As generative AI continues to advance, we can expect further innovation and exciting possibilities in content generation, automation, and creative expression. With responsible and ethical use, generative AI models have the potential to shape the future of human-computer interaction and foster
