An Introduction to Deep Learning

Nitin Agarwal

Introduction:

Deep learning, a subfield of artificial intelligence (AI), has transformed applications ranging from computer vision to natural language processing. Because deep models can extract intricate patterns from large amounts of data, they have become a powerful tool for solving complex problems. In this article, we will explore the fundamentals of deep learning, its main architectures, some popular models in each category, and common applications.

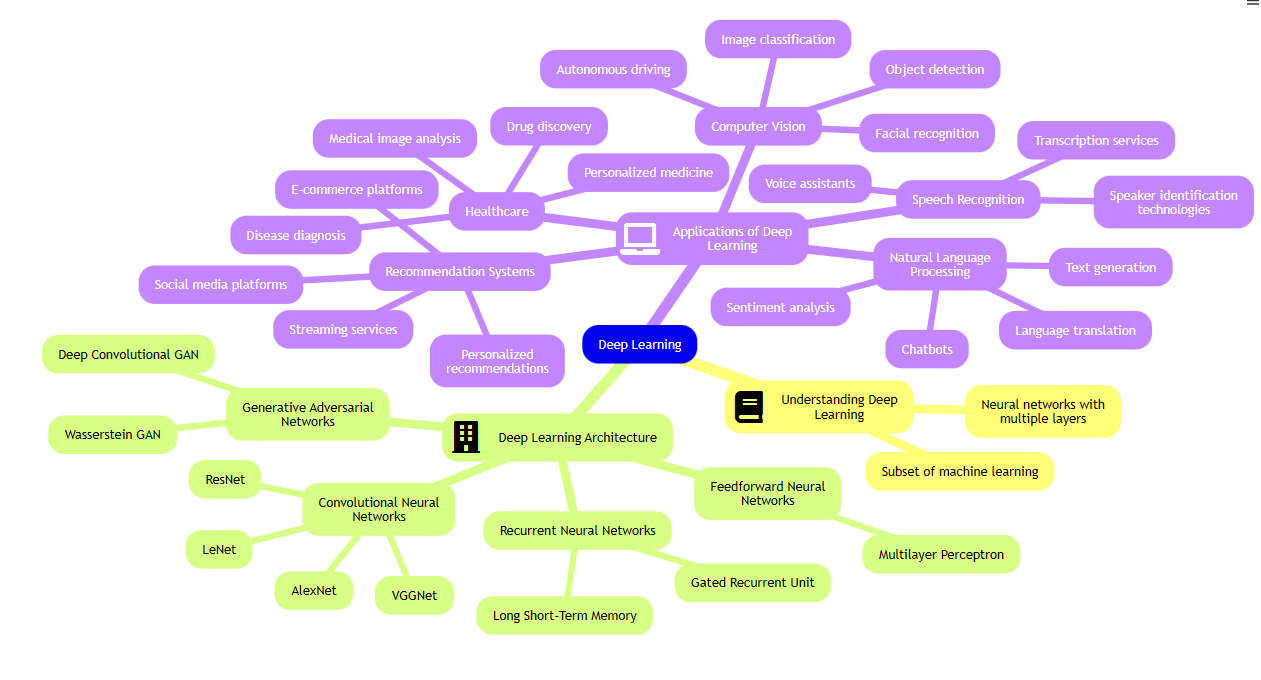

I. Understanding Deep Learning:

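Deep learning is a subset of machine learning that focuses on neural networks with multiple layers, which lets a model learn hierarchical representations of data: early layers capture simple features and later layers combine them into more abstract ones. Models are typically trained on large datasets, often labeled ones, although unsupervised and self-supervised approaches also exist, and are then used to make predictions or decisions.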

II. Deep Learning Architecture:

Feedforward Neural Networks (FNN):

Feedforward Neural Networks are the foundation of deep learning, and the Multilayer Perceptron (MLP) is their standard fully connected form. They consist of an input layer, one or more hidden layers, and an output layer. In an MLP, each neuron in a layer is connected to all neurons in the next layer. Information flows in one direction only, from the input layer to the output layer, hence the name "feedforward."

Multilayer Perceptron (MLP): MLPs stack fully connected layers of neurons and are widely used for classification and regression tasks. Each layer applies an activation function such as ReLU, sigmoid, or tanh to introduce non-linearity; without it, the stacked layers would collapse into a single linear transformation.
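As a rough illustration of the forward pass described above, the following pure-Python sketch pushes one input vector through a tiny MLP. The function names (`dense`, `relu`, `mlp_forward`) and all weight values are invented for this example; a real model would learn its weights during training and would use a numerical library rather than Python lists.

```python
def relu(values):
    """Element-wise ReLU: negative values become zero."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """Fully connected layer: weights[j][i] links input i to output neuron j."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def mlp_forward(inputs, layers):
    """Run inputs through each (weights, biases) pair in order.

    ReLU is applied after every layer except the last one.
    """
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:
            activations = relu(activations)
    return activations


# Hypothetical, hand-picked weights: 2 inputs -> 2 hidden neurons -> 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0]),
    ([[1.0, 2.0]], [0.5]),
]
output = mlp_forward([2.0, 1.0], layers)
print(output)
```

With these weights the hidden layer produces [1.0, 0.5] and the network outputs [2.5]. Data only ever moves from one layer to the next, which is the "feedforward" property.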

Recurrent Neural Networks (RNN):

Recurrent Neural Networks are designed to process sequential data by introducing loops within the network: the hidden state computed at one time step is fed back in at the next, allowing information to persist. Unlike feedforward neural networks, RNNs therefore maintain an internal memory, making them suitable for tasks that involve sequential or time-dependent data.
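The loop can be sketched in a few lines. This is a minimal example of a plain (Elman-style) recurrent step, assuming a tanh activation; the names `rnn_step` and `rnn_forward` and the weight values are hypothetical and chosen only to make the behavior easy to follow.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """One time step: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    return [
        math.tanh(
            sum(wx * xi for wx, xi in zip(w_x[j], x))
            + sum(wh * hi for wh, hi in zip(w_h[j], h_prev))
            + b[j]
        )
        for j in range(len(b))
    ]


def rnn_forward(sequence, w_x, w_h, b):
    """Apply the same weights at every step, carrying the hidden state along."""
    h = [0.0] * len(b)  # initial hidden state
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


# Hypothetical 1-input, 1-hidden-unit network.
states = rnn_forward([[1.0], [0.0]], w_x=[[1.0]], w_h=[[1.0]], b=[0.0])
print(states)
```

The second input is zero, yet the second hidden state is non-zero because it still carries information from the first step.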

Popular Algorithms:

Long Short-Term Memory (LSTM): LSTMs mitigate the vanishing gradient problem of traditional RNNs, enabling the network to learn long-term dependencies in sequences. They use a memory cell together with input, forget, and output gates to selectively store, discard, and expose information.
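To make the role of the gates concrete, here is a scalar sketch of a single LSTM step using the standard gate equations. Real LSTMs operate on vectors with weight matrices; the `params` layout and the numbers below are assumptions made for this illustration only.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One scalar LSTM step.

    params maps a gate name to a (w_x, w_h, bias) tuple (hypothetical layout).
    """
    def pre_activation(name):
        w_x, w_h, bias = params[name]
        return w_x * x + w_h * h_prev + bias

    forget = sigmoid(pre_activation("forget"))        # how much old memory to keep
    write = sigmoid(pre_activation("input"))          # how much new content to store
    expose = sigmoid(pre_activation("output"))        # how much memory to reveal
    candidate = math.tanh(pre_activation("candidate"))

    c = forget * c_prev + write * candidate           # updated memory cell
    h = expose * math.tanh(c)                         # updated hidden state
    return h, c


# Extreme biases chosen so the forget gate is ~1 and the input gate is ~0.
params = {
    "forget": (0.0, 0.0, 100.0),
    "input": (0.0, 0.0, -100.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (1.0, 0.0, 0.0),
}
h, c = lstm_step(x=5.0, h_prev=0.0, c_prev=0.7, params=params)
print(h, c)
```

With the forget gate open and the input gate closed, the cell value 0.7 passes through essentially unchanged despite the large input, which is how an LSTM can carry information across many steps.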

Gated Recurrent Unit (GRU): Like LSTMs, GRUs mitigate the vanishing gradient problem, but they use a simpler architecture with two gates (update and reset) and no separate memory cell. This gives them fewer parameters and makes them cheaper to compute.
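For comparison, a scalar GRU step might look like the sketch below. Note the assumption about convention: here an update gate near 1 keeps the previous state, while some texts define the gate the other way around. The `params` layout and values are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gru_step(x, h_prev, params):
    """One scalar GRU step with an update gate and a reset gate.

    params maps a name to a (w_x, w_h, bias) tuple (hypothetical layout).
    """
    w_x, w_h, bias = params["update"]
    update = sigmoid(w_x * x + w_h * h_prev + bias)

    w_x, w_h, bias = params["reset"]
    reset = sigmoid(w_x * x + w_h * h_prev + bias)

    # The reset gate scales how much of the old state feeds the candidate.
    w_x, w_h, bias = params["candidate"]
    candidate = math.tanh(w_x * x + w_h * (reset * h_prev) + bias)

    # Assumed convention: update near 1 keeps h_prev, near 0 takes the candidate.
    return (1.0 - update) * candidate + update * h_prev


keep = {"update": (0.0, 0.0, 100.0), "reset": (0.0, 0.0, 0.0), "candidate": (1.0, 1.0, 0.0)}
overwrite = {"update": (0.0, 0.0, -100.0), "reset": (0.0, 0.0, 0.0), "candidate": (1.0, 1.0, 0.0)}

h_keep = gru_step(2.0, 0.3, keep)
h_overwrite = gru_step(2.0, 0.3, overwrite)
print(h_keep, h_overwrite)
```

There is only one state value and no separate memory cell, so a single update gate decides between keeping the old state and replacing it.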

Convolutional Neural Networks (CNN):

Convolutional Neural Networks are specifically designed to process grid-like data, such as images or videos. They employ convolutional layers for feature extraction, enabling them to capture spatial hierarchies of features.
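The core operation of a convolutional layer can be sketched as follows. This is a minimal example assuming a single channel, stride 1, and no padding; the helper name `conv2d_valid` and the toy image are made up for illustration. As in most deep learning libraries, the kernel is not flipped, so strictly speaking this is cross-correlation.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image (stride 1, no padding)."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    return [
        [
            sum(
                image[row + i][col + j] * kernel[i][j]
                for i in range(kernel_h)
                for j in range(kernel_w)
            )
            for col in range(out_w)
        ]
        for row in range(out_h)
    ]


# Toy 3x4 image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-written kernel that responds to a dark-to-bright vertical edge.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d_valid(image, vertical_edge)
print(feature_map)
```

The resulting feature map is [[0, 2, 0], [0, 2, 0]]: the response is non-zero only where the edge sits. In a CNN the kernel values are learned rather than written by hand, and the same kernel is reused at every position.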

Popular Algorithms:

LeNet: Developed by Yann LeCun and colleagues in the 1990s, LeNet was one of the first successful CNN architectures and was used for handwritten digit recognition.

AlexNet: AlexNet won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) in 2012 by a wide margin, which contributed to the popularity and widespread adoption of CNNs.

VGGNet: VGGNet is known for its simplicity and uniform architecture, consisting of multiple stacked 3x3 convolutional layers.
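A short calculation shows why stacking small 3x3 layers is attractive. The sketch below assumes stride 1, no dilation, the same number of input and output channels in every layer, and no bias terms; the function names are hypothetical.

```python
def stacked_receptive_field(kernel_size, num_layers):
    """Receptive field of stacked stride-1 convolutions.

    Each extra layer widens the field by kernel_size - 1 pixels.
    """
    return 1 + num_layers * (kernel_size - 1)


def conv_weights(kernel_size, channels, num_layers=1):
    """Weight count with `channels` inputs and outputs per layer, biases ignored."""
    return num_layers * kernel_size * kernel_size * channels * channels


channels = 64
print(stacked_receptive_field(3, 3))               # three 3x3 layers see a 7x7 window
print(conv_weights(3, channels, num_layers=3))     # 27 * 64^2 weights
print(conv_weights(7, channels))                   # 49 * 64^2 weights
```

Under these assumptions, three stacked 3x3 layers cover the same 7x7 window as one 7x7 layer while using 110,592 weights instead of 200,704, and they apply a non-linearity three times instead of once.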

ResNet: ResNet introduced residual learning, in which skip connections add a block's input to its output. This makes much deeper networks practical to train.
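The idea of a skip connection fits in one line of arithmetic: the block outputs its transformation plus its unchanged input. The sketch below is a simplified illustration, not the actual ResNet block; `residual_block` and `scaled_relu` are hypothetical helpers, and the toy transformation stands in for the convolutional layers a real block would contain.

```python
def residual_block(x, transform):
    """Return transform(x) + x; the '+ x' term is the skip connection."""
    fx = transform(x)
    if len(fx) != len(x):
        raise ValueError("transform must preserve the vector length")
    return [fi + xi for fi, xi in zip(fx, x)]


def scaled_relu(scale):
    """Toy stand-in for the learned layers inside a block."""
    return lambda x: [max(0.0, scale * xi) for xi in x]


x = [1.0, -2.0, 3.0]
identity_like = residual_block(x, scaled_relu(0.0))  # transform outputs zeros
adjusted = residual_block(x, scaled_relu(0.5))       # transform adds a correction
print(identity_like, adjusted)
```

When the transformation outputs zeros, the block simply passes its input through, so adding more blocks does not have to make the network worse. The layers only need to learn the correction (the residual) on top of the input.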

Generative Adversarial Networks (GAN):

Generative Adversarial Networks consist of two networks: a generator and a discriminator. The generator aims to produce realistic outputs, while the discriminator tries to distinguish between the generated and real data. Through competition and iterative training, GANs can generate high-quality synthetic data.
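The competition between the two networks can be expressed through their loss functions. The sketch below assumes the discriminator outputs a probability that a sample is real and uses the commonly used non-saturating generator loss; the function names and probability values are illustrative only, and no actual networks are trained here.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: real samples should score 1, generated samples 0.

    d_real and d_fake are discriminator outputs in the open interval (0, 1).
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating loss: the generator wants fakes to be scored as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Hypothetical discriminator outputs on a batch of real and generated samples.
confident = discriminator_loss(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])
fooled = discriminator_loss(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
print(confident, fooled)
print(generator_loss([0.1, 0.2]), generator_loss([0.5, 0.5]))
```

The two losses pull in opposite directions: as the generated samples become harder to tell apart from real ones, the generator's loss falls while the discriminator's loss rises. Training alternates between updating each network on its own loss.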

Popular Algorithms:

Deep Convolutional GAN (DCGAN): DCGAN extends the original GAN architecture by using convolutional layers in both the generator and the discriminator, resulting in more stable training and more realistic generated images.

Wasserstein GAN (WGAN): WGAN replaces the original GAN loss with one based on the Wasserstein (earth mover's) distance, which improves training stability and the quality of generated samples.
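A minimal sketch of the WGAN losses, assuming the original formulation in which the discriminator (called a critic) outputs unbounded scores rather than probabilities and its weights are clipped to a small range. The function names and score values are hypothetical, and the clipping limit of 0.01 is only a commonly quoted default.

```python
def mean(values):
    return sum(values) / len(values)


def critic_loss(real_scores, fake_scores):
    """The critic tries to score real samples higher than generated ones.

    Minimizing this value maximizes mean(real) - mean(fake).
    """
    return mean(fake_scores) - mean(real_scores)


def wgan_generator_loss(fake_scores):
    """The generator tries to raise the critic's score on its samples."""
    return -mean(fake_scores)


def clip_weights(weights, limit=0.01):
    """Weight clipping, assumed here as the way to constrain the critic."""
    return [max(-limit, min(limit, w)) for w in weights]


# Hypothetical critic scores for a batch of real and generated samples.
loss = critic_loss(real_scores=[2.0, 4.0], fake_scores=[1.0, 1.0])
print(loss)
print(wgan_generator_loss([1.0, 1.0]))
print(clip_weights([0.5, -0.5, 0.001]))
```

Because the scores are not squashed through a sigmoid and no logarithm is taken, the loss changes smoothly as generated samples improve, which is one intuition for the more stable training.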

III. Applications of Deep Learning:

Computer Vision:

Deep learning has made significant advancements in computer vision, enabling machines to understand and interpret visual data. Some applications include object detection, image classification, facial recognition, and autonomous driving.

Natural Language Processing (NLP):

Deep learning has revolutionized NLP tasks by enabling machines to understand and generate human language. Applications include language translation, sentiment analysis, chatbots, and text generation.

Speech Recognition:

Deep learning algorithms have greatly improved speech recognition systems, leading to the development of voice assistants, transcription services, and speaker identification technologies.

Recommendation Systems:

Deep learning-based recommendation systems analyze user behavior and preferences to provide personalized recommendations for products, content, and services. This technology is widely used on e-commerce sites, streaming services, and social media platforms.

Healthcare:

Deep learning has found applications in healthcare, aiding in disease diagnosis, medical image analysis, drug discovery, and personalized medicine. It has the potential to assist medical professionals in making accurate diagnoses and improving patient outcomes.

Conclusion:

Deep learning has brought significant advancements in AI, enabling machines to learn complex patterns and make accurate predictions across various domains. With its diverse architectures and algorithms, deep learning continues to push the boundaries of what machines can achieve, revolutionizing industries and opening up new possibilities for the future. As the field continues to evolve, we can expect further breakthroughs and applications that will shape the way we interact with technology.

Written by

Nitin Agarwal

Data Scientist with 12 years of industry experience.