Introduction to Few Shot Learning (FSL)

Jay Gala

Popular deep learning models like ResNet-18, ResNet-50, and VGG-16 have achieved outstanding results on image recognition benchmarks. These networks are trained on mammoth datasets containing millions of labeled examples. In many real-life scenarios, however, acquiring such vast amounts of labeled data is impractical or even impossible.

Consider a scenario where we want to train a deep learning model to classify diseases based on medical images. Data availability is often an issue in the medical field, and gathering large labeled datasets can be daunting and expensive. Moreover, labeling medical images requires expertise from healthcare professionals, making the labeling process even more challenging and time-consuming.

The question arises: How can we effectively train deep learning models for disease classification and other related tasks with only a few labeled examples? This is where few-shot learning comes in.

Few-shot learning, as the name suggests, addresses the problem of learning from only a few labeled examples. After seeing a single image of an orange and a single image of an apple, we can almost always tell apples from oranges. This is precisely what FSL tries to mimic: it aims to bring machine learning closer to the sample efficiency of human learning.

Before moving on, let's take a look at the lingo used in FSL.

FSL Lingo

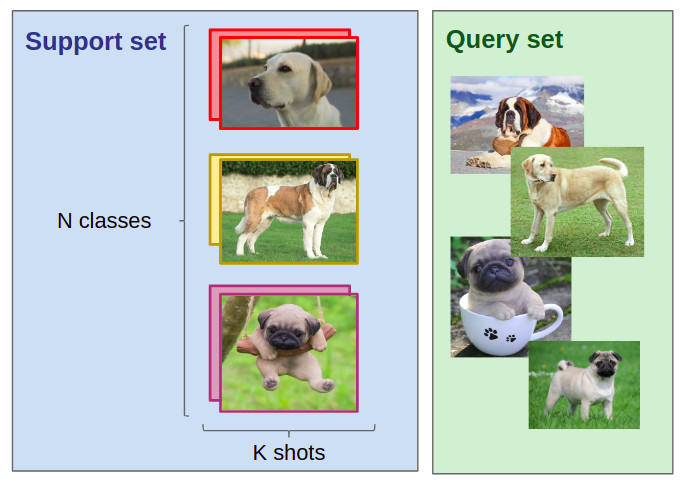

N-way - The number of distinct classes in each episode (task), i.e. how many classes the model has to tell apart at a time.

K-shot - The number of labeled examples per class in the support set. K is usually kept small (commonly 1 or 5, and typically under 10) for a setup to be considered FSL.

Support set - A collection of N × K labeled samples (K examples from each of the N classes) selected randomly from the training set. The model uses the support set to learn about the classes in the current episode.

Query set - Often denoted by Q (the number of query images per selected class), the query set is a collection of N × Q samples selected randomly from the same N classes of the training set, with no overlap with the support set examples. The query set is used to evaluate how well the model generalizes from the support set to new examples of those classes.

(Image from the internet; credit to its authors: img_link)

Episode - (a.k.a. task) A single training or testing instance in an FSL setup. Each episode has its own support set and query set: the model is expected to learn representations from the support set and then perform well on the query images. An episode plays roughly the role of a mini-batch, so the number of episodes per epoch is analogous to the "number of batches" per epoch.
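To make the terms concrete, here is a minimal sketch of how one N-way K-shot episode could be sampled. It assumes the training data is a plain list of `(example, label)` pairs; `sample_episode` and its parameters are hypothetical names for illustration, not part of any library.

```python
import random
from collections import defaultdict

def sample_episode(dataset, n_way, k_shot, q_queries, rng=random):
    """Sample one N-way K-shot episode from a list of (example, label) pairs.

    Returns (support, query): support holds n_way * k_shot pairs and query
    holds n_way * q_queries pairs, with no example appearing in both.
    """
    # Group examples by class label.
    by_class = defaultdict(list)
    for example, label in dataset:
        by_class[label].append(example)

    # Only classes with enough examples for both sets can be used.
    eligible = [c for c, xs in by_class.items() if len(xs) >= k_shot + q_queries]
    if len(eligible) < n_way:
        raise ValueError("not enough classes with k_shot + q_queries examples")

    classes = rng.sample(eligible, n_way)  # the N classes of this episode
    support, query = [], []
    for label in classes:
        # Draw K + Q distinct examples, then split them so the sets don't overlap.
        picked = rng.sample(by_class[label], k_shot + q_queries)
        support += [(x, label) for x in picked[:k_shot]]
        query += [(x, label) for x in picked[k_shot:]]
    return support, query

# Toy dataset: 10 classes with 20 examples each (examples are just ids here).
data = [(f"img_{c}_{i}", c) for c in range(10) for i in range(20)]
support, query = sample_episode(data, n_way=5, k_shot=1, q_queries=15, rng=random.Random(0))
print(len(support), len(query))  # 5 75
```

A 5-way 1-shot episode with 15 queries per class yields 5 support and 75 query samples. During training, a fresh episode like this would be drawn at every step, which is why an episode behaves much like a mini-batch.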

Before we get to the main approaches to FSL, note that there are also a few variations that we will be exploring later in the series:

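Zero-Shot Learning: K = 0. The model must classify examples from classes for which it has seen no labeled examples at all, typically by relying on auxiliary information such as class attributes or text descriptions.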

One-Shot Learning: K = 1. The model is trained with only one labeled example per class.

It is as cool as it sounds!

Approaches

There are two main approaches to FSL:

Metric-based FSL:

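Here, the model learns an embedding space in which a distance or similarity metric places examples of the same class close together and examples of different classes far apart. A new example is then classified by comparing it with the labeled support examples.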

The main process: feed some samples to the model --> the model computes feature embeddings --> it calculates a distance / similarity / other metric between them --> we tell it (via the labels) whether the samples are actually similar or not --> the model updates its parameters --> it tries to do well on the query samples.

Loss functions such as Triplet Loss and Contrastive Loss are commonly used to encourage this: they pull embeddings of similar examples together and push embeddings of dissimilar examples apart.
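A minimal sketch of both losses on precomputed embeddings, assuming the embeddings come from some encoder network (not shown) and are plain tuples of floats. Formulations vary across papers and libraries (some use squared distances in the triplet loss or add a 1/2 factor to the contrastive loss); this version uses plain Euclidean distance and a hypothetical default `margin=1.0`.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two equal-length embedding vectors."""
    return math.dist(a, b)

def triplet_loss(anchor, positive, negative, margin=1.0):
    """Zero once the anchor is closer to the positive (same class) than to
    the negative (different class) by at least `margin`."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)

def contrastive_loss(x1, x2, same_class, margin=1.0):
    """Loss for a single pair: similar pairs are pulled together via their
    squared distance; dissimilar pairs are penalized only while they are
    closer than `margin`."""
    d = euclidean(x1, x2)
    if same_class:
        return d ** 2
    return max(0.0, margin - d) ** 2

a, p, n = (0.0, 0.0), (0.0, 1.0), (3.0, 0.0)
print(triplet_loss(a, p, n))  # 0.0: the negative is already far enough away
print(contrastive_loss(a, (0.5, 0.0), same_class=False))  # 0.25: dissimilar pair is too close
```

In practice these losses are averaged over many pairs or triplets in a batch and minimized with respect to the encoder's parameters.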

Popular models include Prototypical Networks, Relation Networks, Siamese Networks, etc.
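As one example of the metric-based idea, Prototypical Networks represent each class by the mean embedding (the "prototype") of its support examples and classify a query by its nearest prototype under squared Euclidean distance, with a softmax over negative distances giving class probabilities. The sketch below covers only that classification step; it assumes the embeddings were already produced by a trained encoder (not shown), and `prototypes` / `classify` are hypothetical helper names.

```python
import math

def prototypes(support):
    """Average the support embeddings of each class into one prototype."""
    sums, counts = {}, {}
    for emb, label in support:
        if label not in sums:
            sums[label] = [0.0] * len(emb)
            counts[label] = 0
        sums[label] = [s + e for s, e in zip(sums[label], emb)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}

def classify(query_emb, protos):
    """Return the nearest-prototype label and softmax probabilities
    computed over negative squared Euclidean distances."""
    neg_sq = {c: -math.dist(query_emb, p) ** 2 for c, p in protos.items()}
    m = max(neg_sq.values())  # subtract the max for numerical stability
    exp = {c: math.exp(v - m) for c, v in neg_sq.items()}
    z = sum(exp.values())
    probs = {c: e / z for c, e in exp.items()}
    return max(probs, key=probs.get), probs

# A 2-way 2-shot episode with toy 2-D embeddings.
support = [((0.0, 0.0), "apple"), ((0.0, 2.0), "apple"),
           ((5.0, 5.0), "orange"), ((7.0, 5.0), "orange")]
protos = prototypes(support)
label, probs = classify((1.0, 1.0), protos)
print(protos)  # {'apple': [0.0, 1.0], 'orange': [6.0, 5.0]}
print(label)   # apple
```

During training, the negative log of the probability assigned to each query's true class would serve as the loss for the encoder.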

Meta Learning:

Meta learning is even cooler: it is basically "learning to learn."

It involves a meta learner that captures what is common across related tasks and a task-specific learner that fine-tunes parameters on each new task.

In principle, meta learning lets a model adapt quickly to new tasks it was not explicitly trained on, which makes it versatile and adaptable.

One of the most popular algorithms is MAML (Model-Agnostic Meta-Learning) by Finn et al. (2017).
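MAML learns an initialization that performs well after a few gradient steps on a new task's support set; the meta-update differentiates the query loss through those inner steps. Here is a toy sketch under heavy assumptions: each task is a hypothetical 1-D regression y = a·x with a random slope a, the model has a single weight w, and there is one inner step. With one parameter, the second-order term MAML backpropagates through the inner step can be written by hand; real implementations use automatic differentiation over neural network parameters. All names, the task distribution, and the learning rates are illustrative.

```python
import random

def loss(w, data):
    """Mean squared error of the 1-D linear model y_hat = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

def grad(w, data):
    """dL/dw of the MSE loss above."""
    return sum(2 * x * (w * x - y) for x, y in data) / len(data)

def hess(data):
    """d^2L/dw^2, which is constant in w for this linear model."""
    return sum(2 * x * x for x, _ in data) / len(data)

def sample_task(rng, k_shot=5, q_queries=10):
    """A hypothetical task y = a * x with random slope a; returns (support, query)."""
    a = rng.uniform(-2.0, 2.0)
    def points(n):
        xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        return [(x, a * x) for x in xs]
    return points(k_shot), points(q_queries)

def maml(steps=500, tasks_per_step=8, alpha=0.1, beta=0.05, seed=0):
    """Meta-train the initial weight w with one inner gradient step per task."""
    rng = random.Random(seed)
    w = 5.0  # deliberately poor starting point
    for _ in range(steps):
        meta_grad = 0.0
        for _ in range(tasks_per_step):
            support, query = sample_task(rng)
            # Inner loop: adapt to the task with one step on its support set.
            w_task = w - alpha * grad(w, support)
            # Outer gradient via the chain rule through the inner step:
            # dL_q(w_task)/dw = L_q'(w_task) * (1 - alpha * L_s''(w)).
            meta_grad += grad(w_task, query) * (1 - alpha * hess(support))
        w -= beta * meta_grad / tasks_per_step
    return w

w_meta = maml()
support, query = sample_task(random.Random(1))  # a new, unseen task
w_adapted = w_meta - 0.1 * grad(w_meta, support)  # one inner step, alpha = 0.1
print(loss(w_meta, query), loss(w_adapted, query))
```

Because the slopes are symmetric around zero, the meta-learned initialization ends up near w = 0, the point from which one gradient step moves closest, on average, to any task's slope. Dropping the `(1 - alpha * hess(support))` factor would give the cheaper first-order approximation of MAML.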

Conclusion and Future Scope

While Few-Shot Learning has shown great promise, it is far from a solved problem, which leaves many challenges as well as opportunities for research. In computer vision, FSL is used primarily for image classification, but there have also been efforts to extend it to object detection and image segmentation.

FSL is also used in natural language processing, for tasks such as text classification and sentiment analysis. It will be exciting to see how FSL evolves in the coming years!

The next blog will probably be on Siamese Networks for one-shot learning. Stay tuned!

Written by

Jay Gala

Currently working as an AI Software Engineer at Intel