Feature Engineering

Om

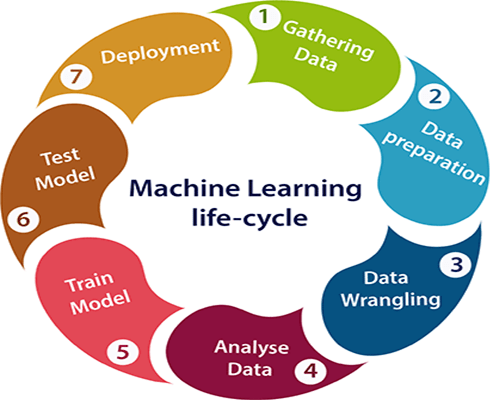

Before beginning this tutorial, I would like to show you a diagram of the complete machine learning life cycle.

As you can see, there is a step called Data Preparation. This step is really important in the life cycle and consists of three sub-steps: 1) Data Processing and Wrangling, 2) Feature Extraction and Engineering, and 3) Feature Scaling and Selection.

Feature engineering is a vast topic and cannot be covered in one go, so today we will cover the basics of feature engineering and learn about feature scaling. This article is also suitable for complete beginners.

So let's begin.

Importance of Feature Engineering

Enhances the performance of the model by extracting relevant features and information from the raw data

Can improve predictive accuracy and help reduce overfitting

Enables the model to capture complex patterns and relationships among the different features, leading to more accurate and robust predictions

Introduction to Feature Engineering

In feature engineering, there are four main steps to cover:

1) Feature Transformation: changing the features so that the machine learning algorithm performs better. It includes:

a. Missing Value Imputation

b. Handling Categorical Features

c. Outlier Detection

d. Feature Scaling

2) Feature Construction: sometimes we have to create new features manually to get better results.

3) Feature Selection: keeping the important features and removing the unimportant ones.

4) Feature Extraction: creating completely new features programmatically. By performing feature extraction, we can reduce the complexity of the data and make it easier for the machine learning algorithm to learn and make accurate predictions.
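To make the first two feature transformation sub-steps listed above (missing value imputation and handling categorical features) a little more concrete, here is a minimal sketch in plain Python. The helper names `impute_mean` and `one_hot` and the sample columns are hypothetical, and mean imputation and one-hot encoding are just one common choice for each sub-step, not the only one.

```python
from statistics import mean

def impute_mean(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]

def one_hot(categories):
    """Encode each category as a list of 0/1 flags, one flag per distinct label."""
    labels = sorted(set(categories))  # fixed, alphabetical column order
    return [[1 if c == label else 0 for label in labels] for c in categories]

# Hypothetical columns, made up for illustration only
ages = [20, None, 40]                 # numeric column with one missing value
cities = ["Pune", "Delhi", "Pune"]    # categorical column

ages_filled = impute_mean(ages)
cities_encoded = one_hot(cities)

print(ages_filled)      # the missing age is replaced by the mean of 20 and 40
print(cities_encoded)   # columns are ordered as ["Delhi", "Pune"]
```

In real projects these steps are usually done with a library, but the idea is the same: every column ends up as complete, numeric data that a model can consume.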

Now that we have seen a bird's-eye view of the feature engineering world, let's look at what feature scaling is.

Feature Scaling

Feature scaling is a technique to standardize the independent features present in the data to a fixed range.

But why is feature scaling necessary?

It brings features to a similar scale, preventing one feature from dominating others during model training

It improves the performance of many ML models

It ensures a fair and unbiased comparison between different features
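The point about one feature dominating the others is easiest to see with a distance calculation, which is what distance-based models rely on. The sketch below is only an illustration: the `euclidean` helper and the three sample rows (age in years, income in rupees) are made up for this example.

```python
import math

def euclidean(a, b):
    """Straight-line distance between two equal-length points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Hypothetical rows: (age in years, income in rupees)
p = (25, 50_000)
q = (60, 50_500)   # very different age, almost the same income
r = (26, 52_000)   # almost the same age, slightly different income

d_pq = euclidean(p, q)
d_pr = euclidean(p, r)

# Without scaling, income differences swamp age differences,
# so p looks closer to q even though their ages differ by 35 years.
print(d_pq < d_pr)
```

Because income is measured in thousands and age in tens, the unscaled distance is driven almost entirely by income. Scaling both features first gives age a comparable say in the result.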

Feature scaling has two main types:

- Standardization (also called Z-score Normalization):

$$X_{\text{standardized}} = \frac{X - \mu}{\sigma}$$

Where:

$X_{\text{standardized}}$ represents the standardized value of the feature.

X is the original value of the feature.

μ is the mean of the feature's values.

σ is the standard deviation of the feature's values.
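Here is a minimal sketch of the standardization formula above, using only Python's standard library. The `standardize` helper and the sample `heights` values are hypothetical. Using the population standard deviation (`statistics.pstdev`) is an assumption, since the formula does not say whether σ is the population or the sample standard deviation.

```python
from statistics import mean, pstdev

def standardize(values):
    """Apply (x - mean) / std to every value of one feature."""
    mu = mean(values)
    sigma = pstdev(values)  # assumption: population standard deviation
    if sigma == 0:
        raise ValueError("cannot standardize a constant feature")
    return [(x - mu) / sigma for x in values]

heights = [150, 160, 170, 180, 190]  # hypothetical feature values in cm
z = standardize(heights)

print(z)
print(mean(z), pstdev(z))  # mean is about 0, standard deviation is about 1
```

After standardization the feature has a mean of about 0 and a standard deviation of about 1, but the values are not confined to a fixed interval such as 0 to 1.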

- Normalization (also known as min-max scaling)

Normalization, also known as min-max scaling, is a technique used in feature engineering to transform numerical features to a specific range, typically between 0 and 1. This ensures that all features have the same scale and prevents any particular feature from dominating others.

$$X_{\text{normalized}} = \frac{X - X_{\min}}{X_{\max} - X_{\min}}$$

Where:

$X_{\text{normalized}}$ represents the normalized value of the feature.

X is the original value of the feature.

Xmin is the minimum value of the feature.

Xmax is the maximum value of the feature.

By applying this formula to each value of a feature, we can scale the feature's values to a range between 0 and 1. This allows for easier comparison and analysis of the feature across different scales and ensures that no particular feature dominates the others due to differences in magnitude.
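As a rough sketch, the min-max formula can be applied to one feature like this. The `min_max_scale` helper and the sample `salaries` values are hypothetical and only meant to show the arithmetic.

```python
def min_max_scale(values):
    """Apply (x - min) / (max - min) to every value of one feature."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("cannot scale a constant feature")
    return [(x - lo) / (hi - lo) for x in values]

salaries = [20_000, 35_000, 50_000, 80_000]  # hypothetical feature values
scaled = min_max_scale(salaries)

print(scaled)  # the smallest value maps to 0 and the largest to 1
```

Note that the minimum and maximum come from the data itself, so a single extreme value can squeeze all the other values into a narrow part of the 0 to 1 range.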
