Web Scraping with Puppeteer

Tabish Naqvi

Puppeteer is a Node.js library that is widely used for web scraping. It drives a real browser, so almost anything you can do manually on the web, Puppeteer can do too.

It does this by launching a headless browser, which is essentially just a browser without a GUI (Graphical User Interface), and controlling Chrome/Chromium over the DevTools Protocol. Yes, that means it can automate interactions with web pages, such as filling out forms, visiting links on a page, and taking screenshots. This all sounds good until you realize the same capabilities can be abused to perform DDoS attacks and inflate advertisement impressions.

Illegal shenanigans aside, in this article we're going to build a web scraper that fetches the view count of a YouTube video based on the video id provided to it. GitHub repo in case you get stuck :) Let's start then.

Installing Puppeteer

Begin by opening up your terminal of choice; I'll be using the integrated terminal in Visual Studio Code for this tutorial. Type in the following commands one by one:

npm init -y

touch main.js

npm install puppeteer

Nothing much here: you created a package.json, then an empty JavaScript file, and finally installed Puppeteer.

Coding the Scraper

Create a folder named src, and add a file called scraper.js to it.

We will start by importing the puppeteer module:

const puppeteer = require("puppeteer");

Create an asynchronous function, since we need to use await inside it to wait for the browser to launch and the page to load:

const scrape = async(id) => {

const browser = await puppeteer.launch({headless: true});

const page = await browser.newPage();

const pageURL = `https://www.youtube.com/watch?v=${id}`;

try {

await page.goto(pageURL);

}

catch (error) {

console.log('Slow network, restarting...');

await browser.close()

return scrape(id);

}

}

Should be pretty self-explanatory so far, I hope. All we have done is launch a headless browser (no GUI) and navigate to the video referenced by the id passed to the function.

But on a slow network, page.goto can time out and throw an error, so we close the browser and call the function recursively to try again. Note that, as written, this keeps retrying for as long as the navigation keeps failing.
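If you would rather cap the number of retries than recurse indefinitely, one option is a small wrapper like the sketch below. Note that withRetries, maxAttempts, and delayMs are hypothetical names made up for this illustration; they are not part of Puppeteer.

```javascript
// Hypothetical helper (not part of Puppeteer): retry an async task a limited number of times.
const withRetries = async (task, maxAttempts = 3, delayMs = 1000) => {
  let lastError;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      // Pass the attempt number along in case the task wants to log it
      return await task(attempt);
    } catch (error) {
      lastError = error;
      if (attempt < maxAttempts) {
        // Wait a little before trying again
        await new Promise((resolve) => setTimeout(resolve, delayMs));
      }
    }
  }
  // Every attempt failed, so surface the last error to the caller
  throw lastError;
};
```

Inside scrape, the navigation could then be written as await withRetries(() => page.goto(pageURL)), which gives up after three failed attempts instead of restarting forever.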

Let's play with the browser DOM now.

const puppeteer = require("puppeteer");

const scrape = async(id) => {

const browser = await puppeteer.launch({headless: true});

const page = await browser.newPage();

const pageURL = `https://www.youtube.com/watch?v=${id}`;

try {

await page.goto(pageURL);

}

catch (error) {

console.log('Slow network, restarting...');

await browser.close()

return scrape(id);

}

let viewsText = null

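//use the DOM to expand the description of the video

try {

await page.waitForSelector('tp-yt-paper-button#expand-sizer');

//wait for the expand button inside the try block, so a timeout is caught below and the browser still gets closed

await page.click('tp-yt-paper-button#expand-sizer')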

//click to expand

viewsText = await page.evaluate(() => {

//evaluating the DOM

const views = document.querySelector("yt-formatted-string#info.style-scope.ytd-watch-metadata > span").innerHTML

//views = "xyz views", we only need the numeric (xyz)

return views.split(" ")[0];

//use the split function to get only the number

});

}

catch (error) {

console.log({ error: error.message });

await browser.close();

return

}

await browser.close();

//ALWAYS remember to close the browser!

return viewsText;

//hand the view count back to the caller

}

module.exports = scrape;

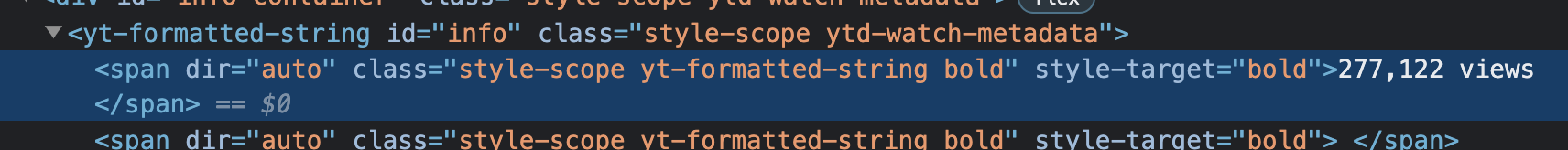

Whew! Hope the comments helped, but if you're still confused about what yt-formatted-string#info.style-scope.ytd-watch-metadata > span exactly is, let me explain. It selects the yt-formatted-string element with id (the HTML one, not the video's) info and the classes style-scope and ytd-watch-metadata, then matches the span elements that are its direct children. Since querySelector returns only the first match, we end up with the first such span, which holds the view count. How did I know this? I inspected the element in the browser's DevTools; see the screenshot above.
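The scraper hands back the count as text (the part before " views"). If you want an actual number, a minimal sketch could look like the following. parseViewCount is a hypothetical helper of my own naming, and it assumes the page shows the count in English formatting with commas as thousands separators.

```javascript
// Hypothetical helper: turn text such as "1,234,567 views" into the number 1234567.
// Assumes English formatting with commas as thousands separators.
const parseViewCount = (viewsText) => {
  if (typeof viewsText !== 'string') return null;
  // Keep only the first whitespace-separated token, as the scraper does
  const firstToken = viewsText.trim().split(/\s+/)[0];
  // Strip the thousands separators
  const digits = firstToken.replace(/,/g, '');
  // Reject anything that is not purely digits (for example "1.2M")
  return /^\d+$/.test(digits) ? Number(digits) : null;
};

console.log(parseViewCount('1,234,567 views')); // 1234567
console.log(parseViewCount('No views'));        // null
```

Returning null for unexpected text keeps the caller from silently treating a layout change as a view count of zero.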

Let's move on to the next part!

main.js

const scrape = require('./src/scraper');

//importing the scrape function we just coded

const main = async() => {

//argument validation

if(process.argv.length < 3){

console.log('no video id was entered :(');

process.exit(1);

}

if(process.argv.length > 3){

console.log(`too many args`);

process.exit(1);

}

const id = process.argv[2];

try{

const views = await scrape(id);

console.log(views);

}

catch(error){

const errorMsg = error.message

console.log(errorMsg);

}

return;

}

main()

Nothing much: we just validated the command-line arguments, then called our scrape function with whatever id was passed in. You could write all the code in one file, but keeping the scraper in its own module makes it easier to reuse and maintain.
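The validation above only counts the arguments. If you also want to reject input that cannot be a video id, a sketch along these lines may help. parseVideoId and VIDEO_ID_PATTERN are hypothetical names, and the pattern rests on an assumption: the ids you see in watch URLs are 11 characters made of letters, digits, hyphens, and underscores. That is an observed convention, not something this article can guarantee.

```javascript
// Hypothetical helper: pull a single video id out of process.argv-style input.
// Assumption: ids are 11 characters drawn from letters, digits, "-" and "_".
const VIDEO_ID_PATTERN = /^[A-Za-z0-9_-]{11}$/;

const parseVideoId = (argv) => {
  // argv[0] is the node binary and argv[1] is the script path
  const args = argv.slice(2);
  if (args.length === 0) return { error: 'no video id was entered :(' };
  if (args.length > 1) return { error: 'too many args' };
  if (!VIDEO_ID_PATTERN.test(args[0])) {
    return { error: 'that does not look like a video id' };
  }
  return { id: args[0] };
};

console.log(parseVideoId(['node', 'main.js', '0fONene3OIA'])); // { id: '0fONene3OIA' }
```

In main.js you could call parseVideoId(process.argv), print the error and exit if there is one, and otherwise pass the id to scrape. Returning an object instead of exiting directly also makes the check easy to test.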

There you go!

Running our Scraper

Head over to your terminal, and type in the following:

node main 0fONene3OIA

And you should get the view count of the video corresponding to the video id you entered. Visit https://www.youtube.com/watch?v=0fONene3OIA to confirm that the number matches and your code is functioning properly.

Homework: Fetch the channel name.

Conclusion

That's a wrap!

Thank you for reading this article to the very end. It means a lot, and I hope I was able to provide some value to you. Follow me on my socials for more content like this!

See y'all in the next one!

Signing off 👾

-Tabish.

Written by

Tabish Naqvi

Hey, I'm a sophomore computer science student and a self-taught full stack developer who's into tech, startups and building projects that really matter. When I'm not coding, I'm either brainstorming my next project, reading or hanging out.