Understanding Architecture, Components, and Docker vs. ContainerD

Ashutosh Mahajan

🗼Introduction

In recent years, Kubernetes has emerged as the de facto standard for container orchestration, revolutionizing the way we deploy, scale, and manage applications. Understanding the architecture and components of Kubernetes is essential for mastering this powerful tool. Additionally, the choice between Docker and ContainerD as container runtimes in Kubernetes has sparked significant discussions in the containerization community. In this blog post, we will delve into the Kubernetes architecture, explore its various components, and compare Docker and ContainerD to shed light on this ongoing debate.

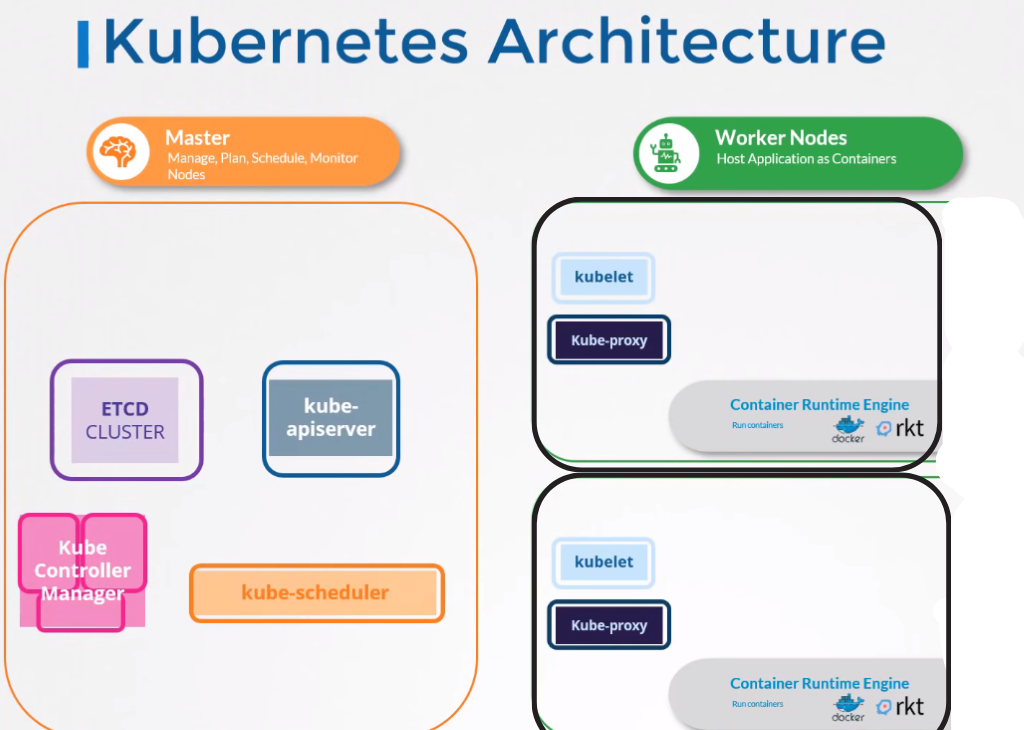

🗼Kubernetes Architecture

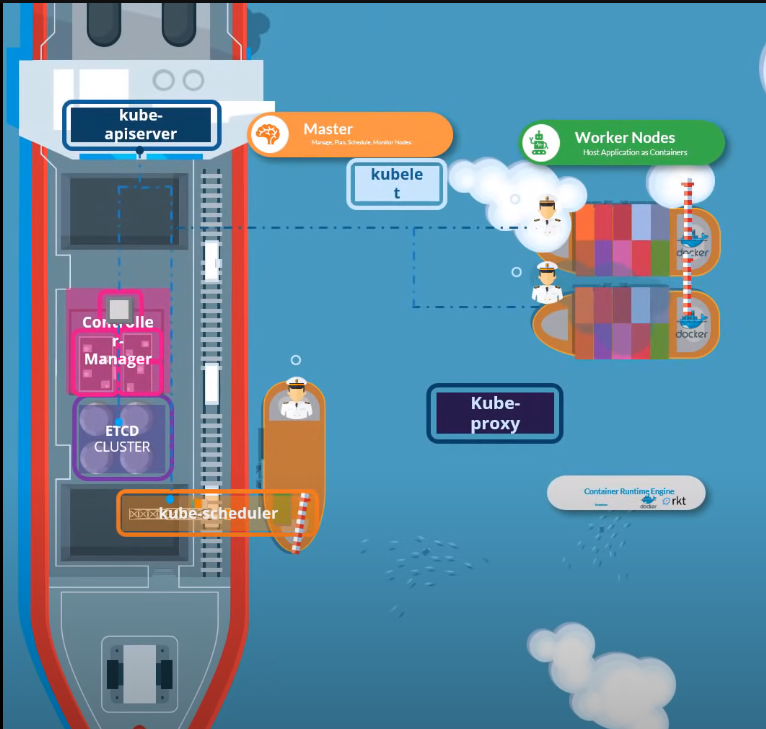

Master Node:

- It is responsible for managing the Kubernetes cluster: storing information about the nodes, planning which container goes where, monitoring the nodes, and scheduling the pods.

Worker Node:

- It hosts your applications as containers.

At its core, Kubernetes follows a master-worker architecture, consisting of several components that work together to orchestrate containers. Here are the key components:

🗼Kubernetes Components

ETCD Cluster:

A distributed key-value store that maintains the cluster's configuration data, acting as the cluster's source of truth. It is like a Database that stores information in a key-value format.
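To make the key-value idea concrete, here is a minimal in-memory sketch. It is not etcd's client API; the class `ToyKeyValueStore`, the key names, and the values are all hypothetical and chosen only for illustration. It shows the two ideas from the paragraph above: every piece of cluster state lives under a key, and related objects are grouped by a shared key prefix.

```python
from typing import Dict, List, Optional


class ToyKeyValueStore:
    """In-memory stand-in for a key-value store (hypothetical, not etcd's API)."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}
        self.revision = 0  # bumped on every write

    def put(self, key: str, value: str) -> int:
        """Store a value and return the new revision number."""
        self._data[key] = value
        self.revision += 1
        return self.revision

    def get(self, key: str) -> Optional[str]:
        """Return the value for a key, or None if the key is unknown."""
        return self._data.get(key)

    def list_prefix(self, prefix: str) -> List[str]:
        """Hierarchy is only a naming convention: a 'directory' is a key prefix."""
        return sorted(k for k in self._data if k.startswith(prefix))


store = ToyKeyValueStore()
# Hypothetical keys and values, for illustration only.
store.put("/nodes/worker-1", "Ready")
store.put("/nodes/worker-2", "Ready")
store.put("/pods/default/web", "node=worker-1")

print(store.list_prefix("/nodes/"))
print(store.get("/pods/default/web"))
```

A real etcd cluster adds replication across several members and a consensus protocol on top of this idea, which is what lets it act as a reliable source of truth.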

Kubernetes Scheduler:

It identifies the right node to place a container on based on the container's resource requirements, the worker nodes' capacity, and any other policies such as Taints and Tolerations and Node Affinity rules.

Controller Manager:

Monitors the cluster's desired state and ensures that the actual state matches it by interacting with the API server. It bundles several controllers. The node controller takes care of nodes: it onboards new nodes to the cluster and handles situations where a node becomes unavailable or gets destroyed. The replication controller ensures that the desired number of containers is running at all times in a replication group.
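The scheduler and the replication controller described above can be sketched together. This is a simplified model under stated assumptions, not the real Kubernetes algorithms: the names `Node`, `PodSpec`, `pick_node`, and `reconcile` are hypothetical, taints and tolerations are reduced to plain strings, and the only resource considered is CPU. The scheduler step filters out nodes that do not fit and picks the one with the most free capacity; the controller step keeps creating replicas until the actual count matches the desired count.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Node:
    name: str
    free_cpu: int  # millicores still unallocated
    taints: List[str] = field(default_factory=list)


@dataclass
class PodSpec:
    cpu: int  # millicores requested
    tolerations: List[str] = field(default_factory=list)


def pick_node(pod: PodSpec, nodes: List[Node]) -> Optional[Node]:
    """Scheduler step: filter unsuitable nodes, then prefer the roomiest one."""
    feasible = [
        n for n in nodes
        if n.free_cpu >= pod.cpu
        and all(t in pod.tolerations for t in n.taints)
    ]
    return max(feasible, key=lambda n: n.free_cpu, default=None)


def reconcile(desired: int, placements: Dict[str, str],
              pod: PodSpec, nodes: List[Node]) -> List[str]:
    """Controller step: create missing replicas (scale-down is omitted here)."""
    created: List[str] = []
    index = 0
    while len(placements) < desired:
        node = pick_node(pod, nodes)
        if node is None:
            break  # nothing fits, so the replica stays unscheduled
        while f"web-{index}" in placements:
            index += 1  # find the first unused replica name
        name = f"web-{index}"
        node.free_cpu -= pod.cpu
        placements[name] = node.name
        created.append(name)
    return created


nodes = [
    Node("worker-1", 1500),
    Node("worker-2", 2000, taints=["gpu"]),  # skipped: the pod does not tolerate "gpu"
    Node("worker-3", 1000),
]
pod = PodSpec(cpu=500)
placements: Dict[str, str] = {}

created = reconcile(3, placements, pod, nodes)
placements.pop("web-1")  # simulate a replica being lost
recreated = reconcile(3, placements, pod, nodes)
print(created, recreated, placements)
```

The important design point is that `reconcile` never needs to know why a replica disappeared. It only compares desired state with actual state and closes the gap, which is the same pattern the real controllers follow.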

Kube-apiserver:

The kube-apiserver is responsible for orchestrating all operations within the cluster. It exposes the Kubernetes API, which external users use to perform management operations on the cluster. The controllers use the same API to monitor the state of the cluster and make changes as required, and the worker nodes use it to communicate with the server.

Kubelet:

The kubelet is an agent that runs on every node in a cluster. It listens for instructions from the kube-apiserver and deploys or destroys containers on its node as required. It also regularly reports status back to the kube-apiserver, which uses these reports to monitor the nodes and the containers on them. The kubelet is like the captain of a ship, managing the containers on board.
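The kubelet's job on a single node can be sketched as a comparison between what the API server has assigned to the node and what is actually running there. This is an illustrative model only: `plan_sync`, `status_report`, and the container names are hypothetical, and the real kubelet works with pods and talks to the container runtime rather than with plain sets of names.

```python
from typing import Dict, List, Set, Tuple


def plan_sync(assigned: Set[str], running: Set[str]) -> Tuple[List[str], List[str]]:
    """Return (containers to start, containers to stop) for one node."""
    to_start = sorted(assigned - running)  # assigned but not yet running
    to_stop = sorted(running - assigned)   # running but no longer assigned
    return to_start, to_stop


def status_report(node: str, running: Set[str]) -> Dict[str, object]:
    """Build the kind of summary a node agent could send back to the API server."""
    return {"node": node, "containers": sorted(running)}


# Hypothetical state for one worker node.
assigned = {"web", "cache"}
running = {"web", "old-job"}

to_start, to_stop = plan_sync(assigned, running)
running = (running | set(to_start)) - set(to_stop)  # apply the plan

print(to_start, to_stop)
print(status_report("worker-1", running))
```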

Kube-proxy:

Kube-proxy enables communication between containers running on different nodes. It runs on each worker node and maintains the network rules that allow the containers to reach each other.
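One way to picture the rules kube-proxy maintains is as a lookup table from a stable service address to the addresses of the containers behind it. The sketch below is a toy model under that assumption: `ToyProxyRules` and all IP addresses are hypothetical, and round-robin selection is used here only to keep the output predictable. The real kube-proxy programs rules in the node's networking layer rather than forwarding traffic in Python.

```python
from itertools import cycle
from typing import Dict, Iterator, List


class ToyProxyRules:
    """Maps a service address to backend addresses (hypothetical model)."""

    def __init__(self) -> None:
        self._backends: Dict[str, Iterator[str]] = {}

    def set_service(self, service_addr: str, pod_addrs: List[str]) -> None:
        """Install or replace the rule for one service."""
        if not pod_addrs:
            raise ValueError("a service needs at least one backend")
        self._backends[service_addr] = cycle(pod_addrs)

    def route(self, service_addr: str) -> str:
        """Pick the next backend for a connection to the service address."""
        return next(self._backends[service_addr])


rules = ToyProxyRules()
# Hypothetical addresses: one service address, two backends on different nodes.
rules.set_service("10.96.0.10:80", ["10.244.1.5:8080", "10.244.2.7:8080"])

picks = [rules.route("10.96.0.10:80") for _ in range(3)]
print(picks)
```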

🎗️ You can understand more about Kubernetes Components from the following image:

🗼Docker vs ContainerD

Docker and ContainerD are two popular container runtimes that are used to manage containers.

Scope: Docker is a complete platform that provides tools for building, packaging, and deploying containers, while Containerd is primarily focused on running containers.

Architecture: Docker is built as a monolithic application that includes a container runtime, a container registry, and other tools, while Containerd is designed to be a lightweight, modular runtime that can be embedded into other systems.

Extensibility: Docker provides a rich set of features and plugins for managing containers, while Containerd provides a simple, extensible platform that can be extended with plugins and modules.

Community: Docker has a large and active community of users and contributors, while Containerd has a smaller but growing community of users and contributors.

For simplicity, some parts are left out here, like the Docker Daemon. But in a nutshell, this is what happens when someone runs an Nginx container from the Docker CLI:

The Docker CLI understands what we want to do, and then sends instructions to containerd.

containerd does its magic and downloads the Nginx image if it's not available.

Next, containerd tells runc to start this container.

And we finally get our result: Nginx running in a little isolated container box.

This means Kubernetes doesn't need Docker with all of its parts, such as the Docker CLI, the Docker Daemon, and some of its other bits and pieces. However, Kubernetes still needs containerd and runc to start a container. So why not tell containerd directly about our intention? If we skip running the Docker CLI and the Docker Daemon, we would at least use less memory on our system, so it would be more efficient. That is one of the reasons why Kubernetes removed Docker and opted to use containerd directly.
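The chain of calls described above, and the shortcut of talking to containerd directly, can be sketched as follows. Everything here is a simulation: the classes `DockerCli`, `Containerd`, and `Runc` are hypothetical stand-ins that only record what each layer would do, and they do not reflect the real interfaces of those tools.

```python
from typing import List, Set


class Runc:
    """Lowest layer: actually starts the container (simulated)."""

    def __init__(self, log: List[str]) -> None:
        self.log = log

    def start(self, image: str) -> None:
        self.log.append(f"runc: start container from {image}")


class Containerd:
    """Middle layer: pulls the image if needed, then hands off to runc."""

    def __init__(self, runtime: Runc, log: List[str]) -> None:
        self.runtime = runtime
        self.log = log
        self.local_images: Set[str] = set()

    def run(self, image: str) -> None:
        if image not in self.local_images:
            self.log.append(f"containerd: pull {image}")
            self.local_images.add(image)
        self.runtime.start(image)


class DockerCli:
    """Top layer: interprets the user's request and forwards it."""

    def __init__(self, backend: Containerd, log: List[str]) -> None:
        self.backend = backend
        self.log = log

    def run(self, image: str) -> None:
        self.log.append(f"docker cli: run {image}")
        self.backend.run(image)


# Path 1: through the Docker CLI.
via_docker: List[str] = []
DockerCli(Containerd(Runc(via_docker), via_docker), via_docker).run("nginx")

# Path 2: asking containerd directly, as Kubernetes does.
direct: List[str] = []
Containerd(Runc(direct), direct).run("nginx")

print(via_docker)
print(direct)
```

Both paths end with the same two steps, a pull by containerd and a start by runc. The direct path simply drops the layer on top, which is the efficiency argument made above.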

🗼Conclusion

Understanding the architecture and components of Kubernetes is vital for effectively deploying and managing containerized applications. The core components, along with the additional components, work together to provide a scalable and resilient platform.

When it comes to choosing a container runtime for Kubernetes, Docker and ContainerD are two popular options. While Docker provides a comprehensive container platform with an extensive ecosystem, ContainerD offers a lightweight runtime focused solely on container execution. The choice between them ultimately depends on specific requirements, performance considerations, and the need for additional tooling.

In the ever-evolving landscape of containerization, Kubernetes continues to be at the forefront, empowering organizations to build and deploy resilient and scalable applications. By understanding the architecture, components, and available container runtimes, you are well-equipped to navigate the vast possibilities offered by Kubernetes.

